I found that outside of RT, my 6800 does 1440p just fine. No problems with, for example, Callisto Protocol.

IMO, go for the 6800 if the target is mostly 1080p.


AMD To Unveil Next Major Products At Gamescom 2023, Radeon RX 7800 XT & 7700 XT Expected

- Thread starter 1_rick


chameleoneel

Supreme [H]ardness

- Joined: Aug 15, 2005

- Messages: 7,673

IMO, the 7800 XT is worth the extra money and you still get Starfield.

I’m going to buy either a 7800 or 6800 card. The only question is which one will deliver the most bang for the buck. Here's hoping the 7800 is actually some sort of upgrade. There are some leaked YouTube testing vids that show a decent improvement, but I have absolutely no way to verify whether they are accurate.

Better quality H.264 encoding. AV1 encoding. Full support for all of the new FSR features. More VRAM.

Price drop for the 4060 ti 16gb.

If you can afford to wait, there is talk of a price war between the 16gb 4060 ti & the 7700xt.

So there's a chance of another "jebait" and the 7700xt launching at $400 (instead of $450?)

https://twitter.com/mooreslawisdead/status/1697504693805564382?s=20

GoldenTiger

Fully [H]

- Joined: Dec 2, 2004

- Messages: 29,788

$431 PNY 16gb rtx 4060ti here: https://www.amazon.com/dp/B0CG2MX5H9

Price drop for the 4060 ti 16gb.

So there's a chance of another "jebait" and the 7700xt launching at $400 (instead of $450?)

https://twitter.com/mooreslawisdead/status/1697504693805564382?s=20


GoodBoy

2[H]4U

- Joined: Nov 29, 2004

- Messages: 2,780

lol

woopty F'ing doo

That is an impressive showing. Need to find that data for 4xxx vs 7xxx.

Not sure I follow you at all here. DLSS or FSR reduce input lag a lot versus native (like most things that increase frame rate). Not sure what you mean by placebo effect; in fast-paced multiplayer shooters, Reflex can reduce latency by 33% and more.


In both compared cases, DLSS2 and DLSS3 are not running the game at true 4K, so it has nothing to do with that. I'm not saying it's lower than native without Reflex on.

I have no idea wtf AMD is doing with these pricing structures.

7900XT is $100 less than 7900XTX

7700XT is $50 less than 7800XT

I think the performance difference will be between 8 and 10%, so a 10% higher price is right on target: 11% more cores, 2% lower clock, maybe a 1% gain from memory bus width/throughput. So on average, a ~9% difference.
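As a rough check on that estimate, here is a back-of-envelope sketch that combines the three factors from the post. It assumes they compound independently and multiplicatively, which real GPU scaling rarely does exactly, so treat it as a sanity check rather than a prediction:

```python
# Back-of-envelope only: assumes each hardware delta scales performance
# independently (real GPUs rarely scale this cleanly).
core_ratio = 1.11    # "11% more cores"
clock_ratio = 0.98   # "2% lower clock"
memory_ratio = 1.01  # "maybe a 1% gain from memory bus width/throughput"

# Multiply the ratios (compounding) vs. simply summing the percentages.
compounded = core_ratio * clock_ratio * memory_ratio
additive = 1 + (core_ratio - 1) + (clock_ratio - 1) + (memory_ratio - 1)

print(f"compounded estimate: {(compounded - 1) * 100:.1f}%")
print(f"additive estimate:   {(additive - 1) * 100:.1f}%")
```

Either way the result lands near 10%, the top end of the 8-10% range.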

Edit: If the memory bandwidth causes a larger perf difference, they should knock $25 off the lower-tier part. It is kind of weird that the more expensive part has better cost per frame, which is the case if it ends up having >10% more performance than the 7700xt.
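The break-even point in that edit can be computed directly. A minimal sketch, using the round $450 and $500 prices discussed in the thread and treating "cost per frame" as price divided by relative performance (7700xt normalized to 1.0):

```python
def cost_per_frame(price_usd: float, relative_perf: float) -> float:
    """Price divided by relative performance; lower is better."""
    return price_usd / relative_perf


PRICE_7700XT = 450.0  # round figure from the thread
PRICE_7800XT = 500.0  # round figure from the thread

# Performance uplift at which both cards cost the same per frame.
break_even = PRICE_7800XT / PRICE_7700XT - 1
print(f"break-even uplift: {break_even:.1%}")

baseline = cost_per_frame(PRICE_7700XT, 1.0)
for uplift in (0.08, 0.10, 0.12):
    bigger = cost_per_frame(PRICE_7800XT, 1.0 + uplift)
    winner = "7800xt" if bigger < baseline else "7700xt"
    print(f"7800xt {uplift:.0%} faster: ${bigger:.0f} vs ${baseline:.0f} -> {winner} is cheaper per frame")
```

At these round prices the crossover sits at about 11%, so the more expensive card only wins on cost per frame if it ends up more than roughly 11% faster.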


Here's the thing with those prices: AMD is using a mature TSMC node with an 80+% yield rate, meaning more than 80% of the Navi 31 or 32 dies are perfectly fine at their intended specs, leaving something like 13-15% in a state where cutting them down in some capacity stabilizes them, and only 2-3% completely unusable.

I have no idea wtf AMD is doing with these pricing structures.

7900XT is $100 less than 7900XTX

7700XT is $50 less than 7800XT

I can't wait to see how these perform. I get the aggressive pricing of the 7800xt, but a $50 difference for a tier, lmao. If they want aggressive pricing, why not make the part $350 or $400?

Cut down or not, AMD's cost per wafer remains the same.

So given this situation, AMD needs to price things so that the 7900xtx or the 7800xt outsell the other flavors of those dies at a ratio of 8:1, if not closer to 9:1. Otherwise they run into a situation where they need to gimp the silicon in firmware to sell it as a lesser product to meet market demand, which only serves to cut margins as a whole, and AMD doesn't want that.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined: Nov 24, 2021

- Messages: 1,214

lol

That is an impressive showing. Need to find that data for 4xxx vs 7xxx.

I think the performance difference will be between 8 and 10%, so a 10% higher price is right on target: 11% more cores, 2% lower clock, maybe a 1% gain from memory bus width/throughput. So on average, a ~9% difference.

Edit: If the memory bandwidth causes a larger perf difference, they should knock $25 off the lower-tier part. It is kind of weird that the more expensive part has better cost per frame, which is the case if it ends up having >10% more performance than the 7700xt.

Price shouldn't scale linearly with performance.

It looks like the 7700xt will be about a 6800 and the 7800xt will be about a 6800xt, at least in rasterization.

https://www.guru3d.com/news-story/l...able-performance-of-rtx-4070-and-rx-6800.html

https://www.guru3d.com/news-story/r...k-points-in-timespy-(matching-rx-6800xt).html

There will likely be a bigger delta between the two when resolution is cranked (bandwidth) or when settings/RT is raised (vram).

Now that Nvidia seems to be dropping the 16GB 4060ti to $450, AMD needs to quickly drop the 7700xt to $400.

- Joined: May 18, 1997

- Messages: 55,665

When Nvidia starts dropping prices you know things are bad.

Or at least correcting.

When Nvidia starts dropping prices you know things are bad.

Last-gen supply is dwindling, and a big new game that many need upgrades for is about to launch. All while the economy is in the shitter.

Nobody’s looked at any of these prices and thought, yeah that’s where it should be.


NightReaver

2[H]4U

- Joined: Apr 20, 2017

- Messages: 3,849

Except some people do rationalize it. Usually by saying inflation and whatnot.

Nobody’s looked at any of these prices and thought, yeah that’s where it should be.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined: Nov 24, 2021

- Messages: 1,214

Except some people do rationalize it. Usually by saying inflation and whatnot.

I mean, that is a factor.

Also, midrange/mainstream prices have improved this gen. RX6600/7600 prices are close to what the trashy 6500xt was. RX6700 prices are about where the RX6600 prices were.

$500 and above has stagnated a bit for AMD, but below that, it's been looking rather good.

A 7700 non-xt in the $400 realm would round out AMD's midrange lineup nicely and replace the 6700 line with improved RT.

- Joined: May 18, 1997

- Messages: 55,665

Do not at all agree with most of that analysis, but hey, whatever.

Or at least correcting.

Last-gen supply is dwindling, and a big new game that many need upgrades for is about to launch. All while the economy is in the shitter.

Nobody’s looked at any of these prices and thought, yeah that’s where it should be.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined: Nov 24, 2021

- Messages: 1,214

2 years ago, around the launch of the RX 6000 series, $335 in today's money was $300 back then. That's NOT insignificant for consumers or for corporations like AMD.
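For scale, those two figures imply the following cumulative and annualized inflation rates. This just does the arithmetic on the post's own numbers, taking the "2 years" at face value; it is not an official CPI calculation:

```python
then_usd = 300.0  # price at the RX 6000 launch, per the post
now_usd = 335.0   # same amount in today's money, per the post
years = 2         # the post's "2 years ago"

# Total price-level change, and the constant yearly rate that produces it.
cumulative = now_usd / then_usd - 1
annualized = (now_usd / then_usd) ** (1 / years) - 1

print(f"cumulative inflation: {cumulative:.1%}")  # 11.7%
print(f"annualized inflation: {annualized:.1%}")  # 5.7%
```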


Krenum

Fully [H]

- Joined: Apr 29, 2005

- Messages: 19,193

Covid was the testbed.

Except some people do rationalize it. Usually by saying inflation and whatnot.

Companies found out real quick they could charge whatever they wanted and people would gladly pay it. Nvidia could sell sauteed raccoon assholes on a stick and people would buy them.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined: Nov 24, 2021

- Messages: 1,214

Covid was the testbed.

Companies found out real quick they could charge whatever they wanted and people would gladly pay it. Nvidia could sell sauteed raccoon assholes on a stick and people would buy them.

Let's keep things in perspective. GPUs are hardly a necessary commodity, and let's stop pretending that a 50% generational improvement in gaming performance at the same price is some sort of God-given right.

GPU prices are of infinitesimally small significance compared to the skyrocketing prices of food at the same wage. The food we buy on the shelf might as well be raccoon assholes. It would provide a lot more nutrition anyhow.

Krenum

Fully [H]

- Joined: Apr 29, 2005

- Messages: 19,193

I don't believe that consumer goods should be treated as commodities the way they were. There was a time when a graphics card was worth more than its weight in gold. That's a lot of money to pay for something that has a finite existence.

Let's keep things in perspective. GPUs are hardly a necessary commodity, and let's stop pretending that a 50% generational improvement in gaming performance at the same price is some sort of God-given right.

GPU prices are of infinitesimally small significance compared to the skyrocketing prices of food at the same wage. The food we buy on the shelf might as well be raccoon assholes. It would provide a lot more nutrition anyhow.

Also, I agree. I ate Taco Bell a couple of weeks ago; in hindsight, I might have opted for the raccoon assholes if I'd been given the chance.

'Round these parts we call 'em roadside corn holes.

I don't believe that consumer goods should be treated as commodities the way they were. There was a time when a graphics card was worth more than its weight in gold. That's a lot of money to pay for something that has a finite existence.

Also, I agree. I ate Taco Bell a couple of weeks ago; in hindsight, I might have opted for the raccoon assholes if I'd been given the chance.

Charcoal grilled with some Dan-O’s Crunchy, good times. Shame about Rick’s fender, but that’ll buff out.

DukenukemX

Supreme [H]ardness

- Joined: Jan 30, 2005

- Messages: 7,995

The reason for this is loans, or in this case, credit cards. People don't look at debt properly and tend to see it as a monthly payment. This is why housing skyrocketed, both before 2008 and today. This is also why car prices skyrocket. If you take on a loan, you don't care about the price of anything. For a lot of these people, it's a business expense, and by business I mean crypto mining. It's just a matter of time before they max out their credit cards.

Covid was the testbed.

Companies found out real quick they could charge whatever they wanted and people would gladly pay it. Nvidia could sell sauteed raccoon assholes on a stick and people would buy them.

I suppose, but I know my supply of 3000 series has dried up, and everyone in my circle who didn’t need to upgrade for BG3 definitely needs to for Starfield. There are still lots of 6000 series cards out there on the lower side, 6700 and down, but above that it’s increasingly sketchy.

Do not at all agree with most of that analysis, but hey, whatever.

GoodBoy

2[H]4U

- Joined: Nov 29, 2004

- Messages: 2,780

The 7700xt price should probably drop by $20 to $30 then, as the higher-priced card at $500 is likely right where they want it. Nvidia got blasted for gen-over-gen performance (gains/not gains) on the low-end parts, so AMD might be in for some choppy water.

Price shouldn't scale linearly with performance.

Yeah, this is how some previous gens played out too. Nvidia 2xxx comes to mind, where rasterization perf was about the same (for the price) and it was all about the new tech.

It looks like the 7700xt will be about a 6800 and the 7800xt will be about a 6800xt, at least in rasterization.

https://www.guru3d.com/news-story/l...able-performance-of-rtx-4070-and-rx-6800.html

https://www.guru3d.com/news-story/r...k-points-in-timespy-(matching-rx-6800xt).html

There will likely be a bigger delta between the two when resolution is cranked (bandwidth) or when settings/RT is raised (vram).

Competition is good.

Now that Nvidia seems to be dropping the 16GB 4060ti to $450, AMD needs to quickly drop the 7700xt to $400.

If the 4060ti hit $400, it would be the obvious choice for price-conscious buyers. Frame gen looks good in my (so far limited) experience with it. DLSS AA looks good too, better by a significant amount. Put it all together and it is faster than the raster-only reviews show. Raster-only reviews are not really all that useful anymore (everything recent is fast enough at raster).

Now that AMD is going to do Frame Gen, everyone will be "oh cool!" and finally get onboard...

But it also means reviews will finally compare and enable all of the performance boosting tech. I suspect that the 4060ti will outperform the same priced competition (image quality, latency, and probably fps too) when they do that. Sucks we have to wait until next year to find out.


funkydmunky

2[H]4U

- Joined: Aug 28, 2008

- Messages: 3,891

Same VRAM. But as stated, the 7800XT will be worth the extra $.

IMO, the 7800 XT is worth the extra money and you still get Starfield.

Better quality H.264 encoding. AV1 encoding. Full support for all of the new FSR features. More VRAM.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined: Nov 24, 2021

- Messages: 1,214

Yeah, this is how some previous gens played out too. Nvidia 2xxx comes to mind, where rasterization perf was about the same and it was all about the new tech.

Except back then, people complained that price/performance didn't improve (as RTX didn't seem valuable then), while now people are complaining that name/performance hasn't improved, i.e., the 7800 line performs about the same as the 6800 line and the RTX 4060 line about the same as the 3060 line.

While it's true that historically the next generation of one tier performed about the same as the previous generation's tier one or two levels up (e.g., the GTX 1070 being about a 980 Ti), who really cares?

It's all really trivial, and people just need something to complain about. Fact is, the 6700xt launched at around $500 while the 6800xt was around $650. The new 7800xt will likely perform like a 6800xt while priced closer to a 6700xt. This is even more pronounced with the 6600xt ($380 launch, and we won't even discuss the street price) vs the 7600 ($270).

Honestly, this is all that matters.
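Put in performance-per-dollar terms, and assuming (as the leaked benchmarks linked earlier suggest, not yet confirmed by reviews) that the 7800xt roughly matches the 6800xt, the comparison looks like this:

```python
def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Relative performance per dollar spent; higher is better."""
    return relative_perf / price_usd


# Assumption: 7800xt raster performance ~= 6800xt, both normalized to 1.0.
rx6800xt = perf_per_dollar(1.0, 650.0)  # ~$650 launch price, per the post
rx7800xt = perf_per_dollar(1.0, 500.0)  # ~$500, per the thread

print(f"7800xt perf per dollar vs 6800xt: {rx7800xt / rx6800xt:.2f}x")  # 1.30x
```

If reviews show the 7800xt landing slightly above or below the 6800xt, only the `1.0` for the newer card needs to change.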

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined: Nov 24, 2021

- Messages: 1,214

And again, that isn't including inflation, which has been real, just in the last 2 years.

The RX570 was seen as the value champ at $170 back in 2017. The $270 7600 would be about $215 in 2017 money, but today you get 3x the performance depending on the game, twice the VRAM, and way more features.

After 6 years I'm fine with that especially considering the 'lost years' of 2020-2022.
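The same arithmetic for the RX570 comparison, using only the post's figures ($170 in 2017; $270 today, or about $215 in 2017 dollars; roughly 3x the performance depending on the game):

```python
rx570_price_2017 = 170.0
new_card_price_now = 270.0
new_card_price_2017_dollars = 215.0  # the post's inflation-adjusted figure
perf_multiplier = 3.0                # game-dependent, per the post

real_price_ratio = new_card_price_2017_dollars / rx570_price_2017
perf_per_real_dollar = perf_multiplier / real_price_ratio
implied_inflation = new_card_price_now / new_card_price_2017_dollars - 1

print(f"real price increase:          {real_price_ratio - 1:.0%}")   # 26%
print(f"performance per real dollar:  {perf_per_real_dollar:.2f}x")  # 2.37x
print(f"implied inflation since 2017: {implied_inflation:.0%}")      # 26%
```

So on the post's numbers, a roughly 26% higher real price buys about 2.4x the performance per inflation-adjusted dollar.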


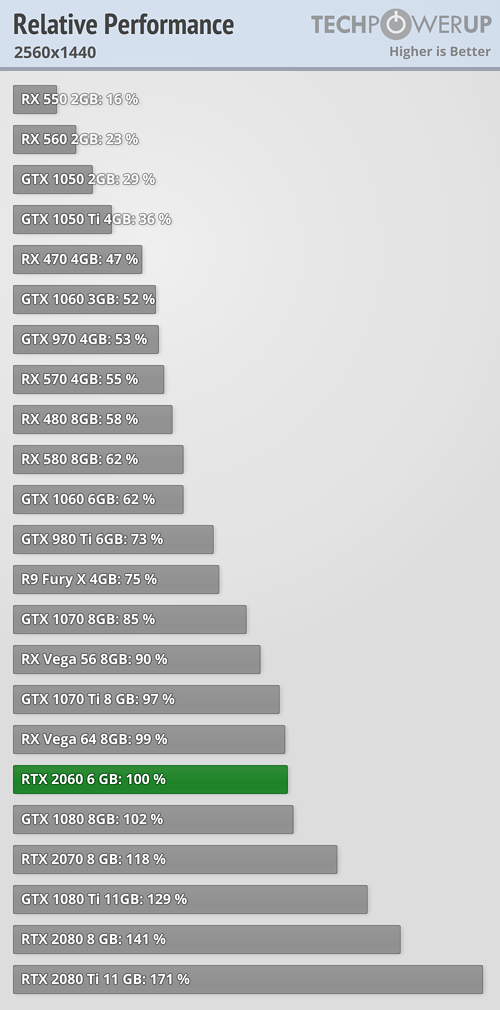

You mean for the price, because the raster increase was still quite good, no?

Yeah, this is how some previous gens played out too. Nvidia 2xxx comes to mind, where rasterization perf was about the same and it was all about the new tech.

At 1440p, the 2060 Founders Edition was 60% faster than a 1060 6GB, the 2070 about 38-39% faster than the 1070 (same for the 2080 over the 1080), and the 2080 Ti 33% faster than the 1080 Ti. Not bad at all across the board, if the price had stayed around the same.

funkydmunky

2[H]4U

- Joined: Aug 28, 2008

- Messages: 3,891

But they have older cards to sell. The XT will be at $399 in minutes, so I don't understand your angle here.

I mean, that is a factor.

Also, midrange/mainstream prices have improved this gen. RX6600/7600 prices are close to what the trashy 6500xt was. RX6700 prices are about where the RX6600 prices were.

$500 and above has stagnated a bit for AMD, but below that, it's been looking rather good.

A 7700 non-xt in the $400 realm would round out AMD's midrange lineup nicely and replace the 6700 line with improved RT.

funkydmunky

2[H]4U

- Joined: Aug 28, 2008

- Messages: 3,891

Always wanted to know how to spell that. So many years wasted.

woopty F'ing doo

I still think the 7800xt should have been named the 7700xt, but it looks like it might actually be a decently priced current-gen mid-range card, which the market has been sorely lacking. Nvidia dropping prices on the 4060ti practically confirms it, but reviews will be the real test.

The 7700xt doesn't look so great in comparison, but perhaps the price will come down like the 7900xt's did.


Some might, but I have no interest in frame gen or upscaling on high-end or even mid-range hardware, regardless of who's behind it.

Now that AMD is going to do Frame Gen, everyone will be "oh cool!" and finally get onboard...

There's no free lunch and nothing I've seen convinces me the tradeoffs are worth it.

The 7700xt doesn't look so great in comparison, but perhaps the price will come down like the 7900xt's did.

If you can afford to wait, then by next year AMD's price/performance might look like this:

- 7900 xtx = $750
- 7900 xt = $600
- 8700 xt = $500
- 8700/7800xt = $400
- 8600xt/7700xt = $330
- 7600 = $200
- 6400xt = $125

Rev. Night

[H]ard|Gawd

- Joined: Mar 30, 2004

- Messages: 1,515

That chart is making my 7900xtx at $825 look really good

I already have a 7900xtx because I got tired of waiting for a good value during the last crypto boom, and I was able to get a decent AIB card for MSRP shortly after launch. I'm not thrilled that it's taken the better part of a year to finally maybe see some decently priced mid-range cards again, but hopefully it's a step in the right direction for card prices going forward.

If you can afford to wait, then by next year AMD's price/performance might look like this:

- 7900 xtx = $750
- 7900 xt = $600
- 8700 xt = $500
- 8700/7800xt = $400
- 8600xt/7700xt = $330
- 7600 = $200
- 6400xt = $125

Flogger23m

[H]F Junkie

- Joined: Jun 19, 2009

- Messages: 14,429

If you can afford to wait, then by next year AMD's price/performance might look like this:

- 7900 xtx = $750
- 7900 xt = $600
- 8700 xt = $500
- 8700/7800xt = $400
- 8600xt/7700xt = $330
- 7600 = $200
- 6400xt = $125

True, I'm sure you can wait another 2 years for even better prices. Unless a noteworthy price drop is coming within 6-8 weeks, waiting doesn't buy you much; we all know GPUs will devalue eventually. Sometimes it is worth paying a bit more to get it earlier.

If you're in the market for a $400-600 GPU right now, I would absolutely wait until AMD's new GPUs come out. I assume Nvidia will see some noteworthy price cuts as well because their 4060ti 16GB is laughable at $500. But I am doubting there will be any price movement on the 7900s, so you may as well get them now and enjoy them.

People who make games for consoles seem to consistently find upscaling to be a better compromise than the tradeoffs needed to run at native resolution. Granted, their target resolutions are fairly high (1080p in the early days, 4K now) and their audience sits a bit farther from the screen, but they were making that choice even when upscaling was far worse than it should be by 2025.

There's no free lunch and nothing I've seen convinces me the tradeoffs are worth it.

I feel that games like Starfield will make upscaling, as a tradeoff versus choosing lower settings, more and more appealing to PC gamers as well.

DukenukemX

Supreme [H]ardness

- Joined: Jan 30, 2005

- Messages: 7,995

DLSS and FSR are just easy ways of lowering graphics settings. You could say that DLSS and FSR aren't running games at true resolutions. Frame generation is still something that I feel creates fake frames, which raises the question of whether 60fps is really 60fps. It's just an illusion. I will continue to ignore these technologies in graphics card benchmarks, as they can be used to give inflated results. If your graphics card can't do 1080p 60fps without FSR or DLSS, regardless of version, then your graphics card is inadequate.

People who make games for consoles seem to consistently find upscaling to be a better compromise than the tradeoffs needed to run at native resolution. Granted, their target resolutions are fairly high (1080p in the early days, 4K now) and their audience sits a bit farther from the screen, but they were making that choice even when upscaling was far worse than it should be by 2025.

I feel that games like Starfield will make upscaling, as a tradeoff versus choosing lower settings, more and more appealing to PC gamers as well.
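To make the "is 60fps really 60fps" question concrete, here is a simplified sketch. It assumes frame generation inserts one generated frame per rendered frame (doubling the displayed rate) and that new input only shows up on rendered frames. It deliberately ignores the extra delay of interpolation-based frame generation, which, as Nvidia describes DLSS 3, builds each generated frame from two rendered frames and so has to hold one back; real latency would be somewhat worse than this model shows:

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds between consecutive frames at a given frame rate."""
    return 1000.0 / fps


# Simplified model: one generated frame per rendered frame, no added buffering.
rendered_fps = 30.0
displayed_fps = rendered_fps * 2

print(f"displayed: {displayed_fps:.0f} fps -> {frame_time_ms(displayed_fps):.1f} ms between frames on screen")
print(f"rendered:  {rendered_fps:.0f} fps -> {frame_time_ms(rendered_fps):.1f} ms between frames that reflect new input")
```

In this model a "60fps" counter with frame generation on still responds to input at a 30fps cadence, which is roughly what the "fake frames" objection is about.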

Much easier way of lowering graphics settings, but it looks way better than potato mode. High settings with upscaling at Balanced look and perform better, in almost all cases, than all-low settings with no upscaling at native resolution or at a lower non-native one.

DLSS and FSR are just easy ways of lowering graphics settings. You could say that DLSS and FSR aren't running games at true resolutions. Frame generation is still something that I feel creates fake frames, which raises the question of whether 60fps is really 60fps. It's just an illusion. I will continue to ignore these technologies in graphics card benchmarks, as they can be used to give inflated results. If your graphics card can't do 1080p 60fps without FSR or DLSS, regardless of version, then your graphics card is inadequate.

So with games getting more complex and GPUs failing to keep pace, I’ll take it as a trade-off before dropping the cash for a 4090, or whatever new hit card comes after it.
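For reference, "upscaling at Balanced" means rendering internally at a lower resolution and reconstructing to the output resolution. The sketch below uses the per-axis scale factors AMD documents for FSR 2's quality modes (DLSS uses its own ratios, which are not covered here). It also shows why 1080p output is the hardest case for upscalers: even Quality mode renders at only 720p there:

```python
# Per-axis scale factors for AMD FSR 2 quality modes (output / render resolution).
FSR2_SCALE = {
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}


def render_resolution(out_w: int, out_h: int, scale: float) -> tuple[int, int]:
    """Internal render resolution for an output resolution and per-axis scale factor."""
    return round(out_w / scale), round(out_h / scale)


for out_w, out_h in ((1920, 1080), (2560, 1440), (3840, 2160)):
    for mode, scale in FSR2_SCALE.items():
        w, h = render_resolution(out_w, out_h, scale)
        print(f"{out_w}x{out_h} {mode:<17} renders at {w}x{h}")
```

At 1440p, Balanced works from roughly 1506x847 pixels, while at 4K the same mode has more than twice as many pixels to reconstruct from.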

That depends on what you mean by easy, and easy for whom. Consider how long some of the biggest companies in the world (Epic with Unreal Engine, AMD, Nvidia, etc.) have worked on these upsampling techniques, and how much giant machine-learning work and how many 16K training datasets were produced over the last 6 years for this.

DLSS and FSR are just easy ways of lowering graphics settings.

It is much faster for the user than changing individual settings, for sure, but I doubt that's why game developers almost always pick that route. They probably test what the game looks like at settings where it can run natively at the target performance, compare that with an upscaled version delivering the same performance, and find that the upscaled one looks better. (Remember back in the day when you played games on a CRT? Lowering the resolution versus lowering the graphics settings was something you would try, and the same option didn't always win. Being forced to always use the same resolution will turn out to have been a short-lived quirk of LCDs; as their pixel density keeps increasing and the tools to fit the image to them get better, we will go back to the '90s/early-2000s days of choosing a resolution for different things.)

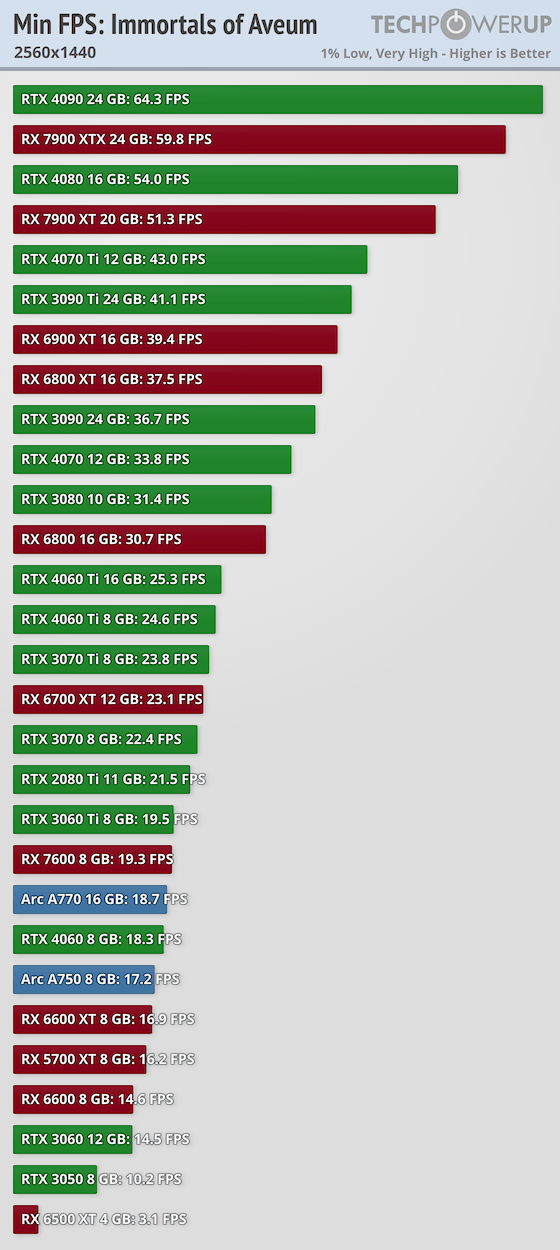

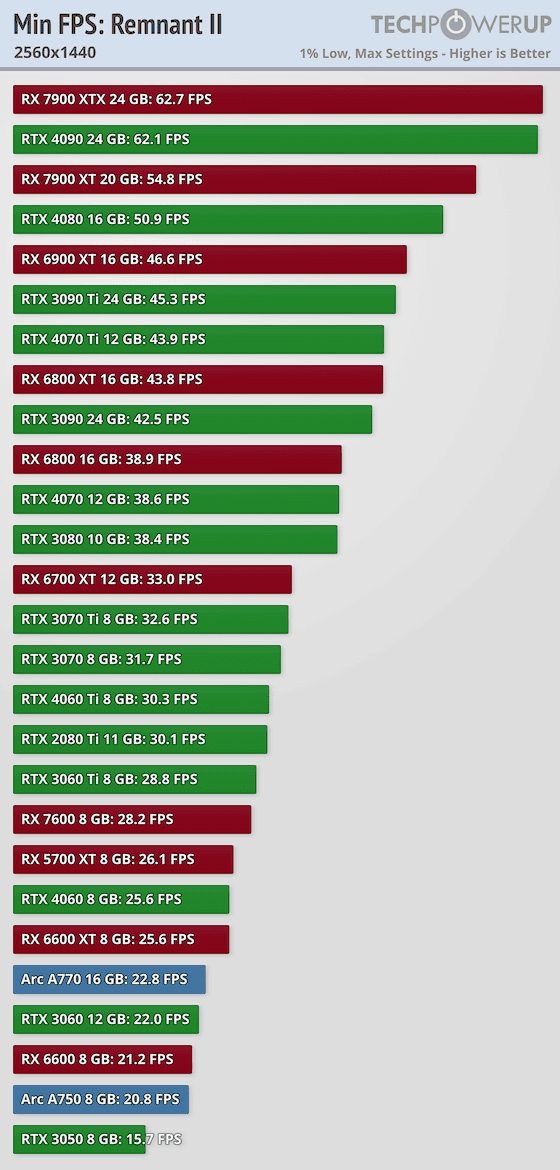

1080p is not where it shines the most (at least not FSR). Almost no graphics card can maintain 60fps at 1440p in Immortals of Aveum; the 4090 barely does.

If your graphics card can't do 1080p 60fps without FSR or DLSS, regardless of version, then your graphics card is inadequate.

Now what? No amount of GPU can guarantee being able to play a game at a given setting at 1080p and maintain 60fps; a game could always be more complex. It is always a compromise.

If the 6800xt cannot hold 60fps at 1440p in Starfield at Ultra, even with a 7800X3D, does that mean the 6800xt is not enough GPU and that its buyers should never have bought a monitor above 1080p? Or might a little bit of FSR2 look better than lowering settings?

Video games are made to barely run at 60fps (the fast-paced ones; others sometimes 30-40fps) at 720p to 1440p on consoles, at around high settings on roughly 6700-6700xt-class hardware. PC gamers who want high fps or high-quality graphics will need to compromise with any GPU.


Rev. Night

[H]ard|Gawd

- Joined: Mar 30, 2004

- Messages: 1,515

When are we getting 27"-32" 4K OLED monitors that don't look like shit if I drop the resolution to 1440p?

Basically a 27-32" LG C1


Blade-Runner

Supreme [H]ardness

- Joined: Feb 25, 2013

- Messages: 4,387

Rev. Night

[H]ard|Gawd

- Joined: Mar 30, 2004

- Messages: 1,515

Cliffs
