NVIDIA rumored to be preparing GeForce RTX 4080/4070 SUPER cards

- Thread starter Blade-Runner

- Start date

very disappointing performance on the 4070 Ti S...I was expecting performance closer to the 4080 but in reality it's closer to the non-Super 4070 Ti...WTH!...I was considering getting the 4070 Ti, now I'm going to wait and see the 4080 Super performance numbers

With the 4080S being a negligible, if any, performance increase on the non-Super there was no way that Nvidia was going to significantly close that gap. The Ti Super and 80 Super are really just the 4070 Ti and 4080 getting set to how they should have been released in the first place (though I think the Ti is still priced a bit too high; the Super really should have come with a $50 price drop).

Liqq

Limp Gawd

- Joined

- Nov 14, 2010

- Messages

- 493

very disappointing performance on the 4070 Ti S...I was expecting performance closer to the 4080 but in reality it's closer to the non-Super 4070 Ti...WTH!...I was considering getting the 4070 Ti, now I'm going to wait and see the 4080 Super performance numbers

yup, disappointing. and if I'm spending a grand it won't be until RTX 5 series. Now I'm kind of wishing I jumped on the $799 XTX deal and might just say to hell with it and do it if it pops up again.

I'm really wanting to grab one of the new 4k oleds and start the 4k gaming venture but it would kill my 3070. I was wanting to space the card and oled monitor purchases a bit to avoid wife aggro. I might just suffer with a nice monitor for a year. Decisions, decisions.

Flogger23m

[H]F Junkie

- Joined

- Jun 19, 2009

- Messages

- 14,379

very disappointing performance on the 4070 Ti S...I was expecting performance closer to the 4080 but in reality it's closer to the non-Super 4070 Ti...WTH!...I was considering getting the 4070 Ti, now I'm going to wait and see the 4080 Super performance numbers

It does come with 16GB, so it is a nice replacement for the 4070ti. Although this is more or less what the 4070ti should have been at launch.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,210

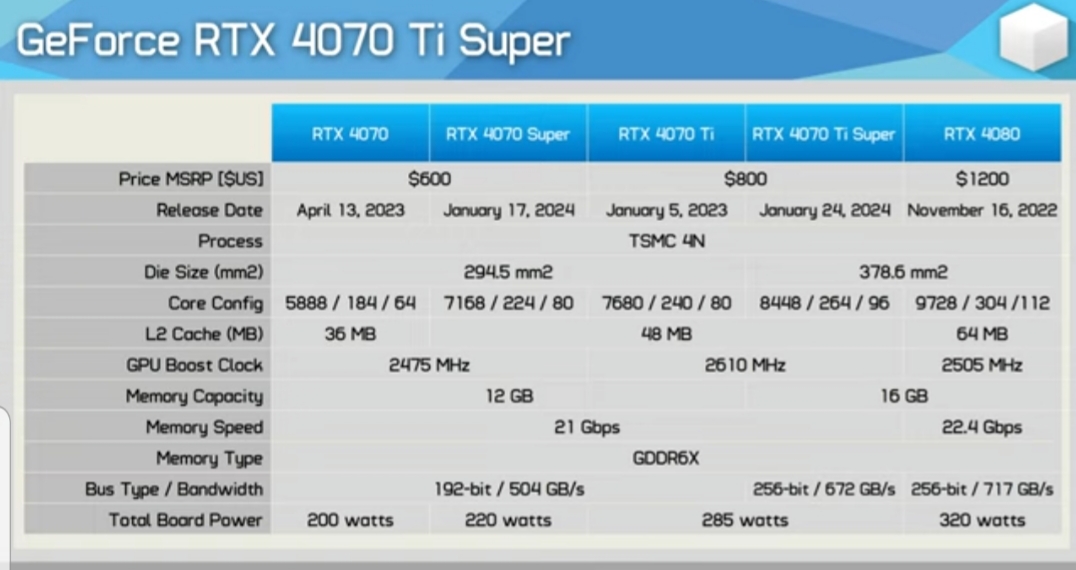

Conflicting info on the L2 cache - does the 4070ti super have the same as the 4080 or 4070ti?

yup, disappointing. and if I'm spending a grand it won't be until RTX 5 series. Now I'm kind of wishing I jumped on the $799 XTX deal and might just say to hell with it and do it if it pops up again.

I'm really wanting to grab one of the new 4k oleds and start the 4k gaming venture but it would kill my 3070. I was wanting to space the card and oled monitor purchases a bit to avoid wife aggro. I might just suffer with a nice monitor for a year. Decisions, decisions.

yeah I'm thinking about waiting until the 50 series as well...I only really need a 40 series for Cyberpunk and Alan Wake 2 with RT maxed out...path tracing as well but no current cards outside of the 4090 do well in path tracing

I'm satisfied with staying at 1440p for the foreseeable future...4K gaming QD-OLED's are the best but they still have issues as far as using them for Desktop use (text rendering)...hopefully MicroLED display production starts up soon

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

Yes but enough to be clear by anyone using it that they know it will not be at a 4080 level and certainly not above it.

Yep. No one claimed that but he's saying it anyway to falsely bolster his argument.

As you also illustrated the 4070ti super should be near what the 4080 is currently.

Here you both go, as a conclusion to our discussion:

View: https://youtu.be/ZrdOWG0V2cQ?feature=shared&t=6

In an informal MLID poll, there were 11% of people that thought that the 4070Ti S was going to soundly beat the 7900XT and also trade blows with 7900XTX with another 3% believing that the 4070TiS was going to beat the 7900XTX. Which is a long convoluted way of saying they also believed that the 4070TiS was going to be faster than the 4080.

Now all of them, as I stated in my initial post, cruised directly into disappointment.

As for GoldenTiger directly, I never was making a statement about people just on these boards. Just merely "people" in general had this thought. There is plenty of other evidence on this topic. A lot of people, especially when Super cards were first getting rumors in November/December last year, thinking that the 4080S was going to get AD102 and the 4070TiS was going to get full die AD103, also directly thought at that time that the 4070TiS was going to be faster than a 4080. I saw all those discussions back then, but it wasn't practical to try and dig up evidence.

I also apparently didn't realize that that was what was in question in the first place. Otherwise, again, I could've dug up evidence instead of having the conversation we had, though I would've been at best loath to do it for reasons already mentioned.

In fairness to both of you, your expectations were that humans are more rational than they are. It seems like no matter how often people get bitten by rumors people just keep believing everything they hear without considering the source or the consistency of the source.

yeah I'm thinking about waiting until the 50 series as well...I only really need a 40 series for Cyberpunk and Alan Wake 2 with RT maxed out...path tracing as well but no current cards outside of the 4090 do well in path tracing

I'm satisfied with staying at 1440p for the foreseeable future...4K gaming QD-OLED's are the best but they still have issues as far as using them for Desktop use (text rendering)...hopefully MicroLED display production starts up soon

I have two 4k screens on my desk to work (one to game occasionally). Then I run a USB hub and HDMI 2.1 to a 55 inch s90c. With the 7900 XTX, 4080, and moreso the 4090 quality 4K HDR gaming is legit now. I ran the 6800XT for a bit and it was enough running on medium settings and FSR Quality. But really we're at a place now where these cards are worth it with a proper OLED display. I'll probably swap to a 5090 or whatever RDNA 4 is if I can justify it. Good news is, the longer you wait the more tech will be available. Bad news is you only live so many years.

Blade-Runner

Supreme [H]ardness

- Joined

- Feb 25, 2013

- Messages

- 4,366

Conflicting info on the L2 cache - does the 4070ti super have the same as the 4080 or 4070ti?

some 4070 Ti reviews are listing the 4070 Ti super as having 64 MB of L2 cache, though Nvidia says it only has 48 MB on their website (same as 4070 Ti non-Super)...I think the correct L2 cache is 48MB on the new 4070 Ti Super

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,210

some 4070 Ti reviews are listing the 4070 Ti super as having 64 MB of L2 cache, though Nvidia says it only has 48 MB on their website (same as 4070 Ti non-Super)...I think the correct L2 cache is 48MB on the new 4070 Ti Super

Wonder if that contributed to the less than expected performance of the 4070tiS. Maybe not having the suitable amount of cache prevents it from taking advantage of all that extra bandwidth.

Wonder if that contributed to the less than expected performance of the 4070tiS. Maybe not having the suitable amount of cache prevents it from taking advantage of all that extra bandwidth.

I think Nvidia didn't want the 4070 Ti Super to get too close to 4080 performance

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,210

some 4070 Ti reviews are listing the 4070 Ti super as having 64 MB of L2 cache, though Nvidia says it only has 48 MB on their website (same as 4070 Ti non-Super)...I think the correct L2 cache is 48MB on the new 4070 Ti Super

Steve from HUB screwed up the specs. Performance actually makes sense now. The 40 series is really dependent on large cache for performance. Even the 700+ GB/s of the 4080 is not that much for a card that performs above a 3090 Ti. While the 4070 Ti S drops that slightly, it's going from 64 MB to 48 MB that really bottlenecks the card to near 4070 Ti levels in certain titles.
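As a rough sanity check on the bandwidth point, peak memory bandwidth is just bus width (in bytes) times the per-pin data rate. The sketch below assumes spec-sheet bus widths and memory speeds that are not stated in this thread (192-bit and 256-bit buses, 21 and 22.4 Gbps GDDR6X) and takes the L2 sizes from the posts above; `memory_bandwidth_gb_s` and `CARDS` are illustrative names only.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


# Assumed values: (bus width in bits, memory data rate in Gbps, L2 cache in MB).
# Bus widths and data rates are spec-sheet assumptions, not figures from the thread.
CARDS = {
    "RTX 4070 Ti": (192, 21.0, 48),
    "RTX 4070 Ti Super": (256, 21.0, 48),
    "RTX 4080": (256, 22.4, 64),
}

for name, (bus_bits, rate_gbps, l2_mb) in CARDS.items():
    bandwidth = memory_bandwidth_gb_s(bus_bits, rate_gbps)
    print(f"{name}: {bandwidth:.1f} GB/s, {l2_mb} MB L2")
```

Under those assumptions the 4070 Ti Super lands within roughly 6% of the 4080 on raw bandwidth while keeping the 4070 Ti's 48 MB of L2, which is consistent with the cache being the more likely limiter.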

Flogger23m

[H]F Junkie

- Joined

- Jun 19, 2009

- Messages

- 14,379

I think Nvidia didn't want the 4070 Ti Super to get too close to 4080 performance

More or less, they're slapping 16GB and a minor performance increase.

I suppose that helps further differentiate it from the 4070 Super, which is a decent jump over the regular 4070.

More or less, they're slapping 16GB and a minor performance increase.

I suppose that helps further differentiate it from the 4070 Super, which is a decent jump over the regular 4070.

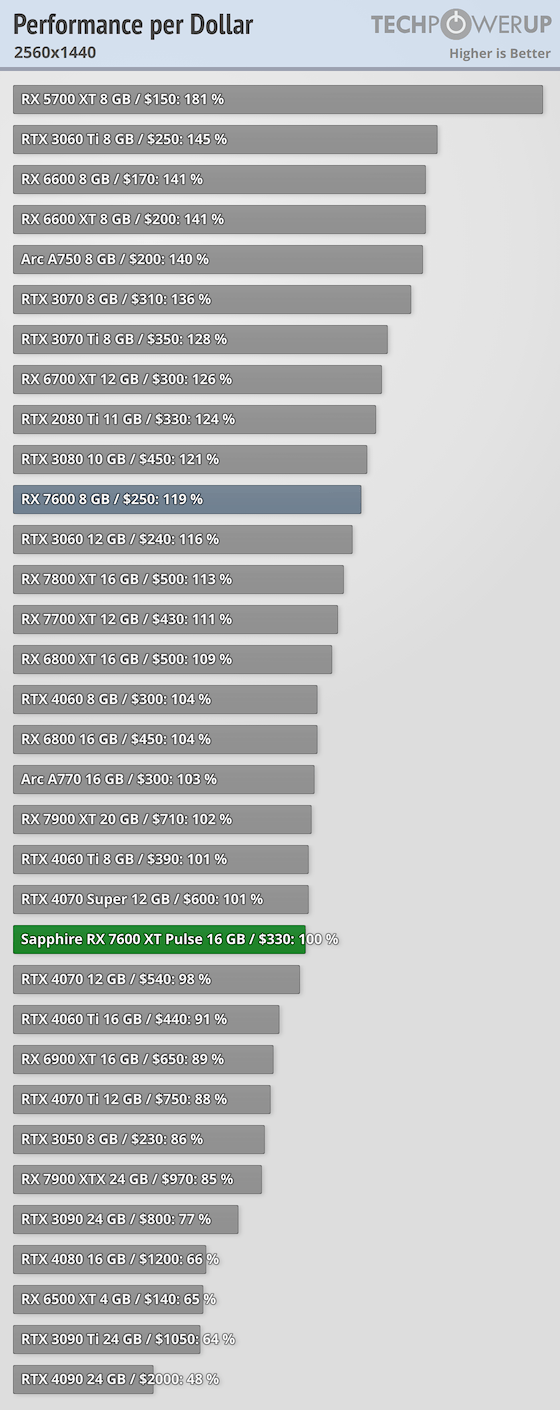

even if the 4080 Super is only a minor 5-7% improvement over the 4080 non-Super it's still a better card because it's $200 cheaper

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

even if the 4080 Super is only a minor 5-7% improvement over the 4080 non-Super it's still a better card because it's $200 cheaper

I agree. Ultimate "value" however, will depend on how AMD reacts and how many Super cards are available at MSRP.

Certainly if you're "never Red" then it will be a card that a lot of people sick of trying to get a 4090 could justify buying at half the cost.

Supers are turning out to be super disappointing. Only card interesting is the 4090 for this generation. BestBuy had several versions for less than $1800 last night. Not something I would want to get this late in the game cycle though at even MSRP.

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

Supers are turning out to be super disappointing. Only card interesting is the 4090 for this generation. BestBuy had several versions for less than $1800 last night. Not something I would want to get this late in the game cycle though at even MSRP.

I think we all were hoping the Super series would be the de facto recommendations in their price points, that they'd simply beat AMD in everything. And it's disappointing because frankly they are not.

I also agree in the sense that Lovelace definitely has delivered less dollar value than Ampere did. However, if you need a card, what do you do? It just kind of is what it is.

Which is also why I generally think there are a lot of reasons to not be loyal to any one company and also to seriously consider AMD.

The 7900XTX just honestly might make a lot more sense for a good chunk of the games people play and also for the productivity tasks dollar for dollar over a card like the 4080S. However again, that's all dependent on ultimate price and use case.

No card is forever either. So it might make sense to compromise on some areas of performance just to save the money at this time. And then 2 gens from now when perhaps there is better ROI on an nVidia card (or a different AMD card for that matter) then simply switch at that time. That's the game. That's always been the game.

I also agree in the sense that Lovelace definitely has delivered less dollar value than Ampere did. However, if you need a card, what do you do? It just kind of is what it is.

Which is also why I generally think there are a lot of reasons to not be loyal to any one company and also to seriously consider AMD

for the people that care about ray-tracing and path tracing you can't seriously consider AMD...there is no option except Nvidia...if you mostly play games with rasterization then AMD is the better option

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

for the people that care about ray-tracing and path tracing you can't seriously consider AMD...there is no option except Nvidia...

The only games where there is an "undeniable" massive performance advantage for nVidia is in heavily ray-traced titles such as CP2077 and AW2.

In every other title with RT the 4080 and 7900XTX trade blows and are otherwise close. This includes titles like Avatar, RE4 Remake, RE Village, Jedi Survivor, Forspoken, etc. So it's definitely not as simple as saying: nVidia wins at all RT games.

if you mostly play games with rasterization then AMD is the better option

There are the UE5 titles, which AMD is also ahead on in 5 out of 6, and which include RT (albeit software RT). Daniel Owen did a breakdown specifically regarding that.

So if AW2 and CP2077 are the only games you intend to play in the future, sure, great. But considering that custom engines are the minority and UE5 is going to be more and more the future, I don't think that "PT RT" games can be the only focus for gamers. And that is also not even considering console optimization, which AMD directly has the advantage on.

For nVidia to really have a pronounced advantage in the coming years by buying an nVidia based GPU today (let's say until the nVidia 6000 series comes out), there would have to be a lot more games that are using incredibly complex RT and more specifically path tracing (which you more or less acknowledge).

If a bunch of new path traced games come out over the next 4 years then yeah, nVidia just flat out wins. The problem is I think that UE5 will be the more common option, that software RT fallback options that have far fewer performance penalties will continue to exist, and that PT, though cool, will continue to be ultra niche until there are enough cards out in the wild for devs to capitalize on them. Well that and also that it must be done in such a way that the console generation can also benefit. This is all borne out by the first crop of UE5 games that already exist today: there are 6 of them and only 2 games, AW2 and CP2077, which have PT.

In other words I see that nVidia's use cases specifically with RT + PT are limited. It's a much better argument to talk about how DLSS is better and that that will continue to make a big performance difference in UE5 titles that gain massive speed improvements by going to lower resolutions. As UE5's "big thing" is that everything is done per pixel in real time, so DLSS 4k in quality mode gives a massive speed benefit over trying to play native while minimizing quality differences from native.

DLSS I think is a much bigger argument to go with nVidia in the "near term" of the next 4 years. However I personally feel like both FSR 2.1 and UE5's TSR have mostly closed this gap and both are more universal than DLSS. That's a whole other debate, but again, those tensor cores with DLSS + Reflex + Frame Gen are a much bigger selling advantage I think to even nVidia users than pure RT performance in an absolutely small amount of games.

The other thing here too is that people can still get nice RT out of a 7900XTX in AW2 and CP2077 by simply not playing either in Ultra settings and by spending just a bit more time optimizing settings for IQ and performance. nVidia will of course still be faster and look better, but it's not as if on AMD cards even with nice looking levels of RT that the 7900XTX is just "unplayable". In other words, again, I don't know if it necessarily makes sense for the majority of people to buy a much more expensive card that performs equal or worse everywhere else just for two or three games. The Super may close the gap, but again, that will still be dependent both on how the Super is priced and where the XTX will be priced, post launch.

In every other title with RT the 4080 and 7900XTX trade blows and are otherwise close. This includes titles like Avatar, RE4 Remake, RE Village, Jedi Survivor, Forspoken, etc. So it's definitely not as simple as saying: nVidia wins at all RT games

every game you listed is an AMD sponsored title

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,673

every game you listed is an AMD sponsored title

Yep, and ones that use minimal raytracing effects.

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

every game you listed is an AMD sponsored title

Your big argument for buying an nVidia based card was bringing up two nVidia sponsored titles, CP2077 and AW2, which are the only 2 path traced games.

So talking about the reverse, which even by your own admission is the majority of games (apparently?), is a pretty relevant topic when talking about future game performance.

If that continues for the next 4 years, will that be the excuse? Because if it is, then people should just buy an AMD GPU then.

Yep, and ones that use minimal raytracing effects.

Or a majority of titles and a majority of upcoming titles.

If there aren't a bunch of heavy RT + PT games in the future then it ceases to be relevant. And already we have 3:1 UE5 to PT games. I don't see that ratio getting any better. Do you?

Your big argument for buying an nVidia based card was bringing up two nVidia sponsored titles, CP2077 and AW2, which are the only 2 path traced games.

So, talking about the reverse, considering that even by your own admission is the majority of games (apparently?) is a pretty relevant discussion topic.

If that continues for the next 4 years, will that be the excuse? Because if it is, then people should just buy an AMD GPU then.

I brought up CP2077 and AW2 as examples of path tracing...even in non-path traced RT titles Nvidia wins out...even the AMD sponsored games only have a lead in the window after release, eventually Nvidia catches up and surpasses a majority of those titles as well- Jedi Survivor being a recent example once it got DLSS support with a post release patch

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

I brought up CP2077 and AW2 as examples of path tracing...even in non-path traced RT titles Nvidia wins out...even the AMD sponsored games only have a lead in the window after release, eventually Nvidia catches up and surpasses a majority of those titles as well-

Feel free to list them all. Because that's not what I'm seeing. At least certainly not by any significant metric. I posted two sources above.

Jedi Survivor being a recent example once it got DLSS support with a post release patch

In my statement I said that all of the other RT titles trade blows. Jedi Survivor is one of those titles. nVidia is winning yes, but not in a significant way.

EDIT: And by "not significant" what I mean is that if someone did a double blind test on either GPU without being able to see a frame time graph they wouldn't be able to tell the difference. All else being equal.

Feel free to list them all. Because that's not what I'm seeing. At least certainly not by any significant metric. I posted two sources above.

In my statement I said that all of the other RT titles trade blows. Jedi Survivor is one of those titles. nVidia is winning yes, but not in a significant way.

look at the recent HUB video where he reviews the 4070 Ti Super and compares performance in 10 RT enabled games...timestamped video below...

View: https://youtu.be/ePbKc6THvCM?t=728

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,673

Jedi Survivor is one of those titles.

Actually, the FSR implementation in it is atrocious. DLSS looks FAR better in that game than FSR does at equivalent settings.

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

look at the recent HUB video where he reviews the 4070 Ti Super and compares performance in 10 RT enabled games...timestamped video below...

View: https://youtu.be/ePbKc6THvCM?t=728

Just rescrubbed through (I've watched this video before) and it confirms everything I'm saying. There are 3 games that the nVidia GPUs are better at: CP2077, AW2, and Ratchet and Clank. Basically the same as it's been for the past year+. And Fortnite I suppose, I'll even give you that one.

On literally every other RT game, nVidia and AMD trade blows - meaning sometimes AMD is ahead and sometimes nVidia is ahead. And either way it's generally speaking a difference of less than 5% in either direction. So if this is supposed to be your gotcha, it's not.

The 10 game averaging at the end is skewed as a result. Obviously AMD performs poorly, very poorly in those 3 titles. If again those titles aren't the basis of your consideration then the balance of performance doesn't look anything like that.
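To illustrate how a few outliers can drag a multi-game average, here is a minimal sketch with made-up numbers (not taken from the HUB video). Each value is a hypothetical AMD-to-Nvidia fps ratio for one RT title: three heavy-RT outliers and seven titles that sit within about 5% of a tie.

```python
from statistics import mean

# Hypothetical AMD/Nvidia fps ratios per RT title (1.0 = tie).
# All values are invented for illustration; they are not benchmark results.
heavy_rt = [0.55, 0.60, 0.70]                         # three outlier titles
others = [0.97, 1.03, 0.98, 1.02, 1.00, 0.96, 1.04]   # seven titles that trade blows

avg_all = mean(heavy_rt + others)
avg_without_outliers = mean(others)

print(f"10-game average ratio: {avg_all:.3f}")
print(f"Average without the 3 outliers: {avg_without_outliers:.3f}")
```

With numbers like these, three titles are enough to pull the overall average well below parity even though the other seven are effectively a wash.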

I've literally covered all this ground already. Meanwhile you're not talking about any of the rest of the field. Including UE5 performance or how nearly the rest of the field is raster - where the 7900XT has a commanding lead regardless of resolution. The 4070TiS only comes ahead in a few titles and generally not across the board, only at lower resolutions. I guess if you only care about 3 (or 4) games, then yeah, you can justify it. But I don't think it's hard to convince "normal people" that want a card that's performant in literally everything else, that also costs less money, "is reasonable".

I feel like you're really having to push back pretty hard to justify the 4080 (or 4070TiS), which we already know is not a GPU that is selling particularly well. Hence a price drop and the refresh.

Actually, the FSR implementation in it is atrocious. DLSS looks FAR better in that game than FSR does at equivalent settings.

DLSS and FSR are not required on either GPU in that title. And this has always been down to implementation, which I've also already said. I guess if you need more than 150fps and want to take the DLSS image quality penalty (no matter how small) to get more fps then you win. With RT on it becomes more significant, but both cards are over 60 fps at 2.5k.

Bankie

2[H]4U

- Joined

- Jul 27, 2004

- Messages

- 2,469

I think the main rub with the "better rasterization" with AMD is that it's usually a single-digit percentage difference where you're already getting 100+ fps whereas with the RT titles Nvidia is noticeably better and it's at framerates that are already borderline unplayable.

I think the main rub with the "better rasterization" with AMD is that it's usually a single-digit percentage difference where you're already getting 100+ fps whereas with the RT titles Nvidia is noticeably better and it's at framerates that are already borderline unplayable.

But you’re also typically paying less for the AMD card.

I think the main rub with the "better rasterization" with AMD is that it's usually a single-digit percentage difference where you're already getting 100+ fps whereas with the RT titles Nvidia is noticeably better and it's at framerates that are already borderline unplayable.

I would say any generalization misses the mark. Go by per game and then if RT even makes a meaningful difference in the IQ. Then the odds one will even care playing it.

AW has software RT when PT is off and looks awesome in itself. PT in that title does add some more accuracy but is it ground breaking for the performance hit? Personal choice if it matters.

As for the Supers, I don’t see anything meaningful brought to the table unless one is in the Nvidia only club for whatever reason, valid or not.

My view:

$200-$300:

AMD 6600 XT, 6700, 7600 XT, Intel Arcs - nothing here with Nvidia

$300-$400:

6700 XT, 6800 - what Nvidia has here is a joke

$400-$500:

lol, 7700 XT, 7800 XT

$500-$600:

Just go with the $400-$500 segment

$700-$800:

7900 XT or 4070 Ti Super (if at MSRP). I would go with the 7900 XT hands down.

$800-$900:

Hands down the 7900 XTX (seen 7900 XTX as low as $800 several times).

Above $900:

4090, 4080 Super? Personally at this time I will just wait until next generation. Which looks like it will be Nvidia due to AMD not having, or assumed not to have, anything in the ultra high end.

I think the main rub with the "better rasterization" with AMD is that it's usually a single-digit percentage difference where you're already getting 100+ fps whereas with the RT titles Nvidia is noticeably better and it's at framerates that are already borderline unplayable.

It is more about better rasterization per dollar (7600 XT aside) than blanket better raster. After all, the strongest raster card around will tend to be the 4090, some 70 class card will beat the new 80 class card from AMD in pure raster, and the old 3080 still ties the 7800 XT in the pure raster 4K average on TPU. In raster performance per dollar, in some price brackets you can see a more than single digit difference, say between the 7800 XT and the 4070 / 4060 Ti 16GB.

Bankie

2[H]4U

- Joined

- Jul 27, 2004

- Messages

- 2,469

I didn't say that AMD wasn't worth it; I wasn't making any statements about pricing at all. I was just stating that as far as rasterization is concerned the cards are usually close enough to be a wash. For instance, a 7900XTX isn't likely to last any longer than a 4080 for rasterization but for Ray-tracing you're probably going to get more mileage out of the 4000 series since they're still a generation ahead there.

If one wants to argue pricing then sure you can save a few bucks with AMD. I'm interested in grabbing a 7900XTX to play with. Hopefully they'll move to the $700-$800 bracket when the 4080S drops. Otherwise I'll just wait until Blackwell/RDNA4 releases and see what they're capable of.

I was just stating that as far as rasterization is concerned the cards are usually close enough to be a wash.

Yes, and the statement was that the talk is not about raster performance in a vacuum but by price.

The 7900 XTX is not that much faster at raster than a 4080, for a perfect example, but at a bit under $1000 versus $1200 the difference is in raster per $ (at least that's usually how I see it being talked about) or available VRAM per $.
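The raster-per-dollar argument can be made concrete with a small sketch. The prices follow the rough figures in the post above ($1000 versus $1200); the relative raster number for the 7900 XTX is a placeholder assumption, not a benchmark result, and `perf_per_dollar` is just an illustrative helper.

```python
def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Relative performance units bought per dollar."""
    return relative_perf / price_usd


# The 4080 is the baseline (1.00); the XTX figure is a hypothetical "slightly faster" value.
rtx_4080 = perf_per_dollar(1.00, 1200)
rx_7900_xtx = perf_per_dollar(1.03, 1000)

# Fractional advantage of the XTX over the 4080 in raster per dollar.
advantage = rx_7900_xtx / rtx_4080 - 1
print(f"7900 XTX raster per dollar advantage: {advantage:.1%}")
```

Even a small assumed raster lead turns into a much larger per-dollar gap once the price difference is factored in, which is the point being made here.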

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,558

For instance, a 7900XTX isn't likely to last any longer than a 4080 for rasterization but for Ray-tracing you're probably going to get more mileage out of the 4000 series since they're still a generation ahead there.

No offense but people were saying that to justify the 3000 series purchase, which the 7900XTX ray tracing is as good if not better and now that is junk according to some people in the forum. People that want the best ray tracing will drop their old card instantly for the new hotness.

Bankie

2[H]4U

- Joined

- Jul 27, 2004

- Messages

- 2,469

No offense but people were saying that to justify the 3000 series purchase, which the 7900XTX ray tracing is as good if not better and now that is junk according to some people in the forum. People that want the best ray tracing will drop their old card instantly for the new hotness.

There's always a subset of consumers that work in that way. There are already users here chomping at the bit to replace their 4090s with something faster.

RTX 4070 Super vs PS5: How Much Faster/Better Are Today's Mid-Range GPUs?

View: https://www.youtube.com/watch?v=5DVUMIol_yM

In my experience, RT is good when it has a significant impact on image representation, adds realism when needed, and does not degrade performance to the point of adversely affecting the gameplay.

Also when choosing what looks better, as in lower resolutions with RT on, maybe with other graphics settings lowered to have sufficient performance, compared to RT off with higher resolutions and settings. With the 3090, it just never panned out in a straight fashion. IQ would be worse due to having other settings lower, more aggressive DLSS etc. to get performance for smooth gameplay. In some titles having RT on made insignificant IQ gains with a big performance hit. Some titles with RT on look ridiculous, shiny mirrors everywhere such as BFV: in a war zone, perfectly cleaned cars, floors and windows, lol, looks stupid to say the least. Games have gotten better.

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

In my experience, RT is good when it has a significant impact on image representation, adds realism when needed, and does not degrade performance to the point of adversely affecting the gameplay.

Also when choosing what looks better, as in lower resolutions with RT on, maybe with other graphics settings lowered to have sufficient performance, compared to RT off with higher resolutions and settings. With the 3090, it just never panned out in a straight fashion. IQ would be worse due to having other settings lower, more aggressive DLSS etc. to get performance for smooth gameplay. In some titles having RT on made insignificant IQ gains with a big performance hit. Some titles with RT on look ridiculous, shiny mirrors everywhere such as BFV: in a war zone, perfectly cleaned cars, floors and windows, lol, looks stupid to say the least. Games have gotten better.

I think most would agree.

Where the big contention lies, I think, between "never Red" and people who will actually consider every card because dollar per frame value matters to them is:

Whether or not the graphics settings can be played with in such a way that the performance is good and the IQ penalty is low.

Like you mentioned above, you could use software RT for AW2, gain back a bunch of performance and have it look nearly identical.

And I actually would say the same thing(s) about CP2077. Which has so many options that it's possible to optimize settings to maintain performance. Does nVidia win there in total IQ? Of course, that is without question, but is the price worth it if I can turn down a few settings, have it look 80-90% as good as the nVidia card and save 20%+? Fortnite is even more pronounced there, because it has software lumen/nanite, which AMD cards excel at. Is nVidia better with hardware RT in that title? Yes of course, but again in motion the difference between the two is minimal, mostly affecting accuracy and not just pure IQ.

And as you I think are alluding to, it's not as if the 7900XTX performs poorly in RT. It does compared to nVidia Lovelace, but it's very comparable to 3090 level performance which was top for the previous gen. And a 3090 owner today (if they could magically swap cards for free) would greatly benefit from the raster on a 7900XTX, which they would be more than happy to have. And similarly said 3090 owner also has to turn down IQ settings in those same RT titles that the 7900XTX does in order to hit acceptable frame rates. The tradeoffs also there I think still being worth it to hang onto a 3090 until Blackwell comes out (unless you have more money than sense - but that's the point, it's more than good enough to hang onto through this generation).

With that framing it's obvious that, for most, AMD has been stacking their pricing well in comparison to the competition. And although I had high hopes for the Super series bringing fiercer competition, so far both of the 4070 Super cards have brought 10-15% performance increases at most, to which all AMD had to do in response was bring a commensurate price drop. I don't think the 4080S will shake up much either, other than selling to people so impatient that they don't want to wait for 4090s to get reasonable in price again AND they also can't be bothered to wait for Blackwell.
