UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

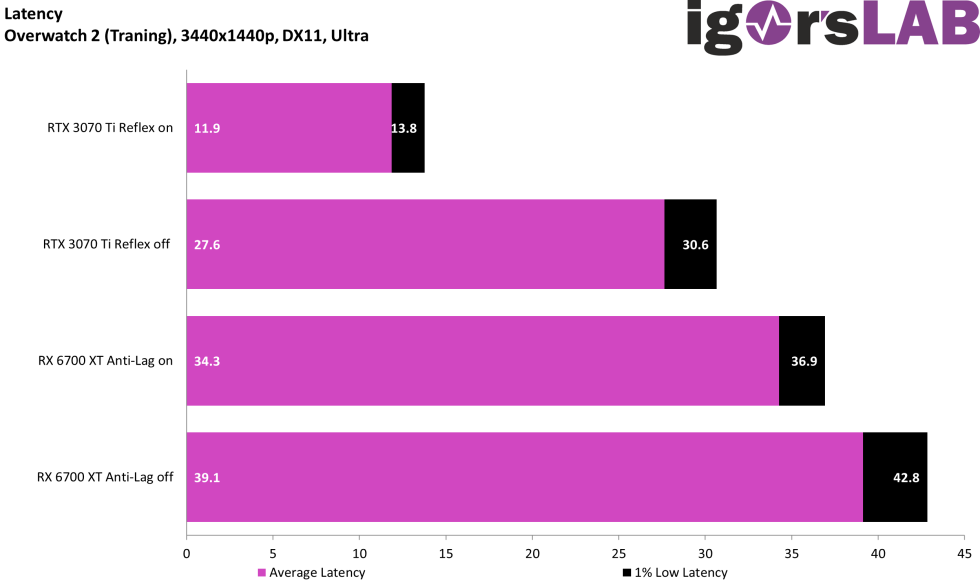

Again, the numbers speak for themselves. These are excuses. They aren’t good enough for anyone to actually buy.

He isn't wrong though. AMD is decent, just not as good (overall). The problem is they are seen as a slightly inferior product with a slightly lower price. Their software/features are generally a generation behind, and their product releases generally lag as well. The 4070/4060 Ti have been out for a few months now. AMD will be what, 5-6 months late to the party? Even if people wanted to give AMD a chance, Nvidia was the only game in town, with the exception of last-generation products. 5-6 months is a long time in the tech world, so even if people were unhappy with Nvidia's $400-600 offerings, a lot of gamers already bought them.

If the products were on time and maybe priced a bit lower than they currently are, we'd likely see some shift.

DLSS Frame Gen in Ratchet & Clank was fairly impressive for me. I didn't notice much of a latency issue, and for slower-paced single-player games it is likely fine. That is an example of something AMD will need to counter.

That’s the bottom line.
