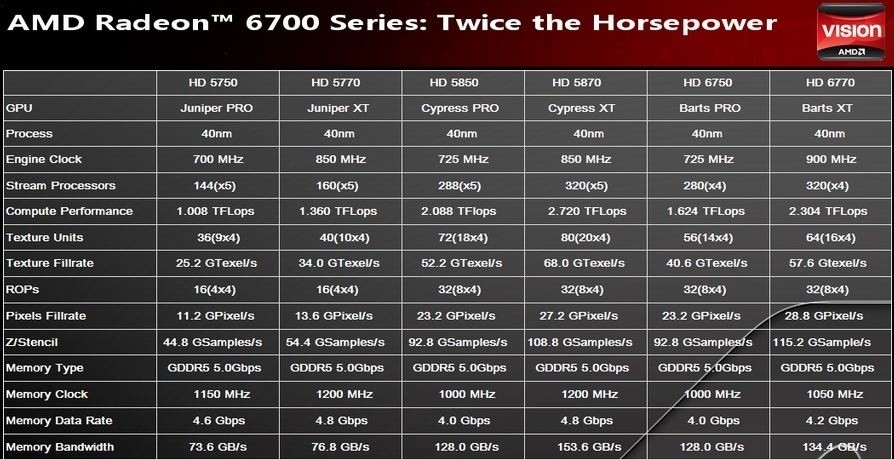

Very little is known about the 6700 cards yet.

Since almost all discussion is about the 6800 & 6900, I created this thread for speculation, rumors, and crumbs of info specific to 6700.

They're based on the Navi 22 GPU and rumored to have 12GB of GDDR6 memory.

wccftech posted speculation that there will be two 6700 cards.

I'd love to find out the TDP, card size, guesses on release date, etc.
