Mad Maxx

Supreme [H]ardness

- Joined: Apr 12, 2016
- Messages: 7,329

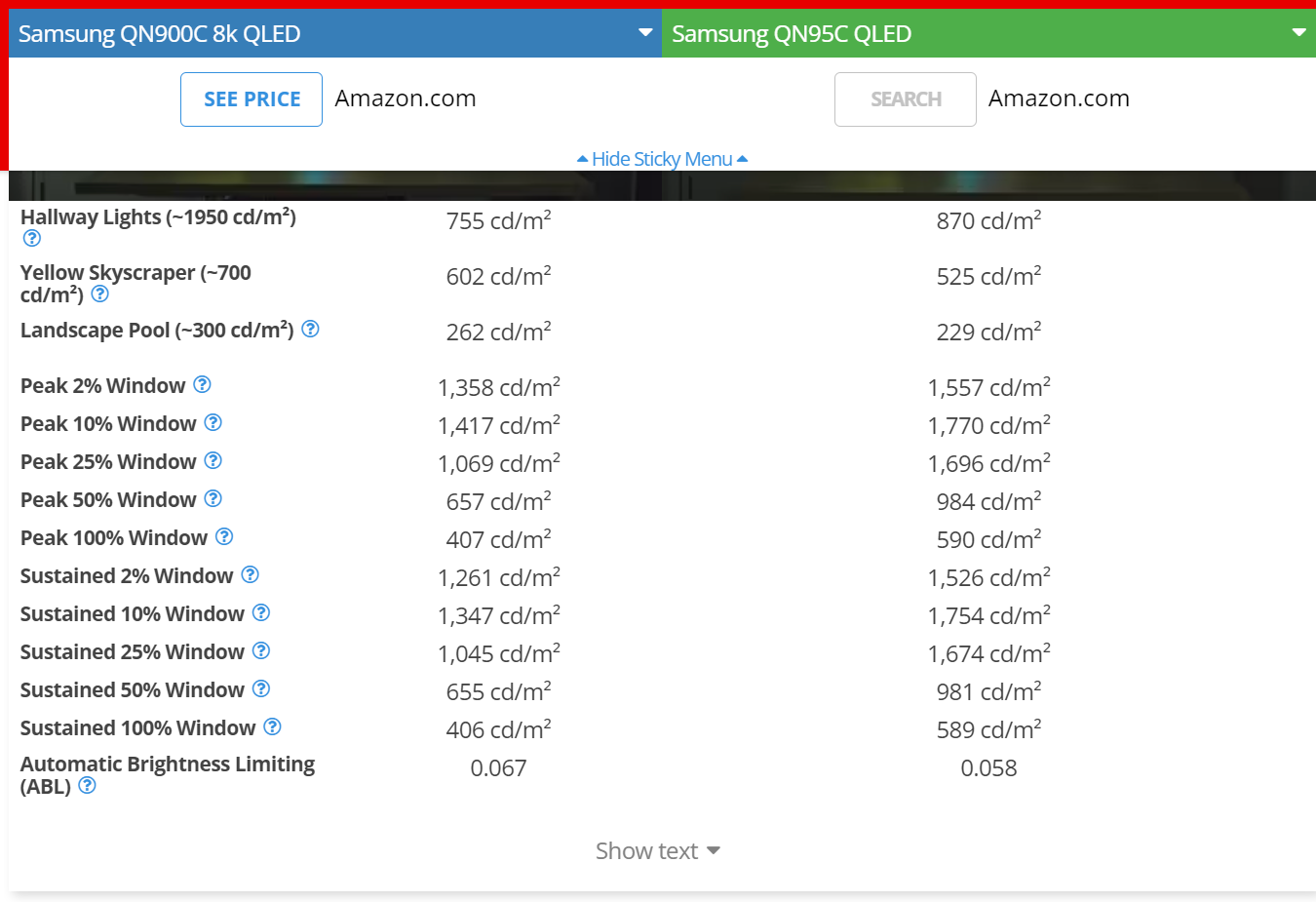

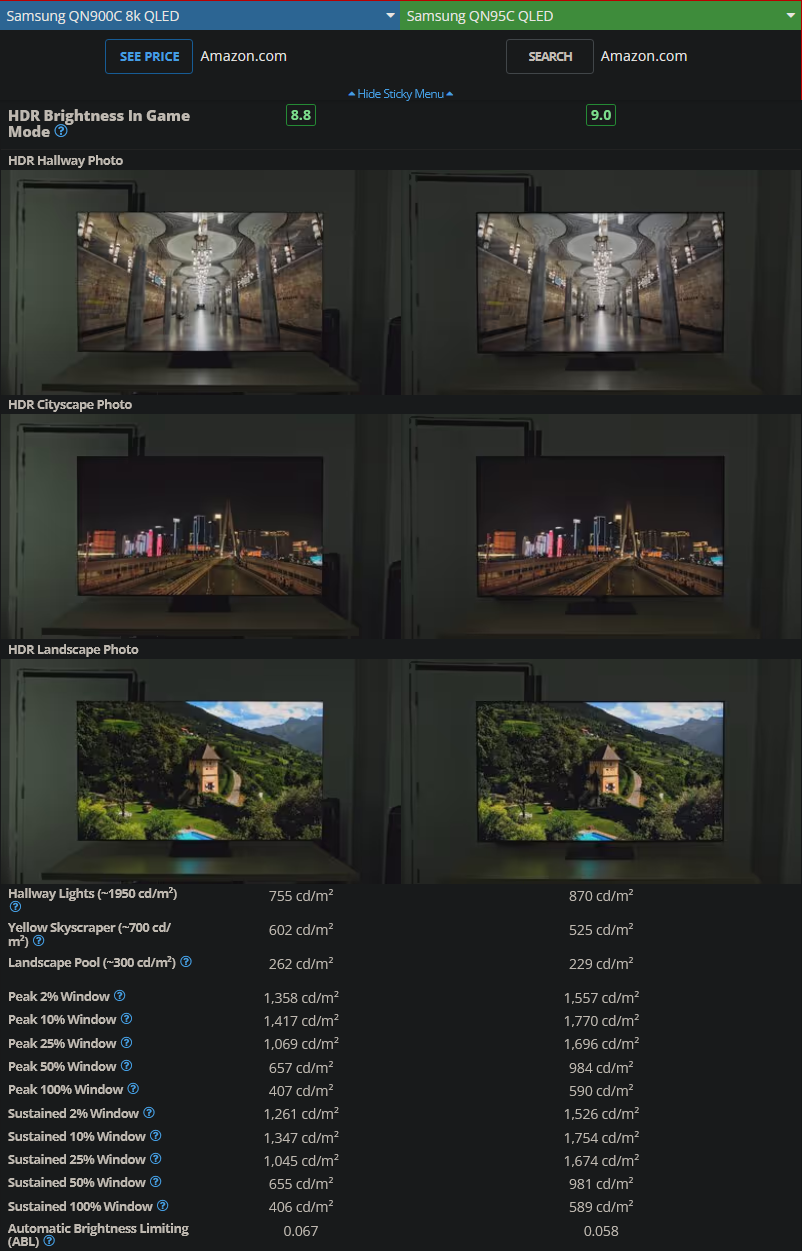

MiniLED is my preference, at least until OLED gets substantially brighter and burn-in is resolved. I'm well aware that lots of people swear by OLED and claim never to have had any burn-in whatsoever. However, unless I'm mistaken, burn-in is still not covered by the manufacturer's warranty. That tells me it's still a problem.
