DooKey ([H]F Junkie, joined Apr 25, 2001, 13,577 messages)

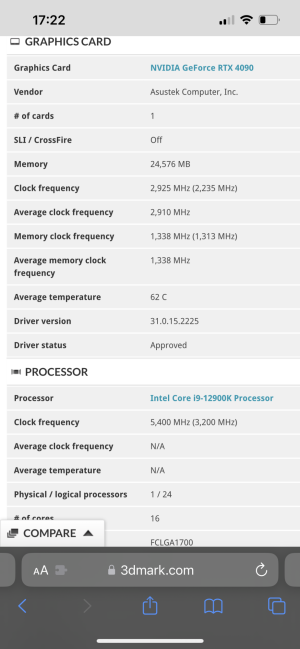

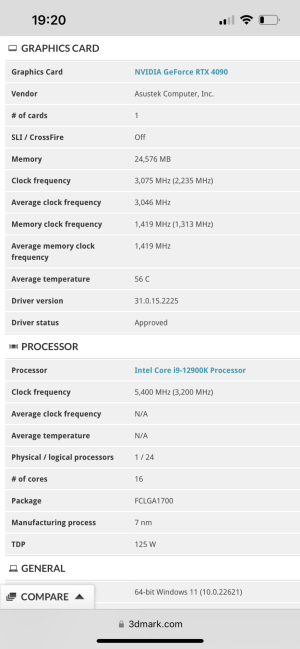

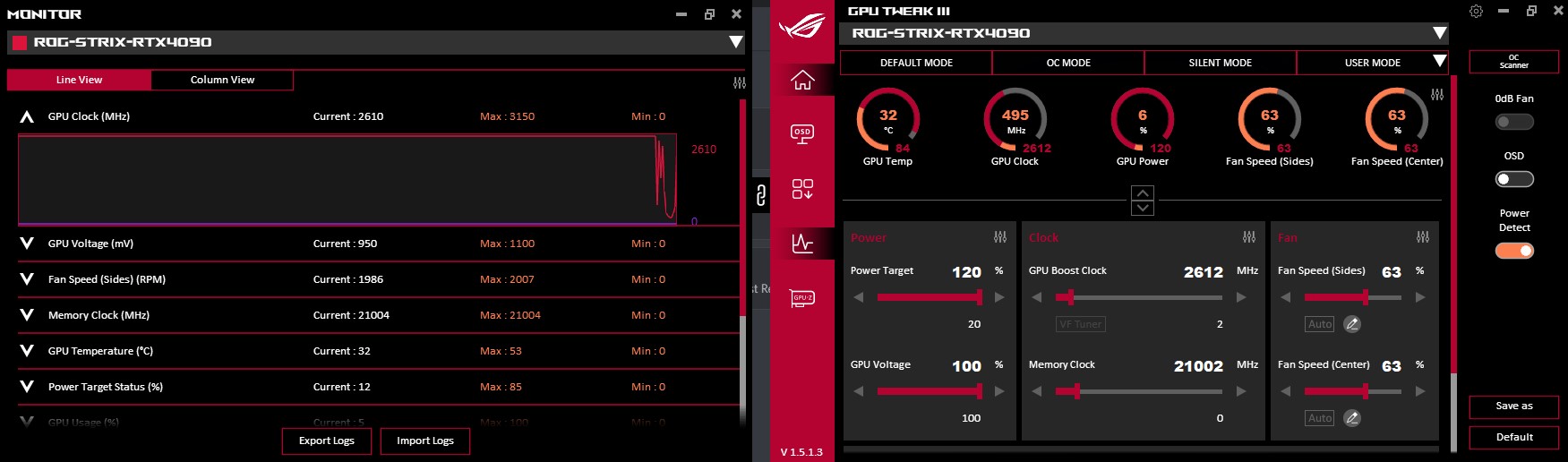

> You guys think my ASUS TUF is a dud?
>
> I can only get it to run at 2880 core and 12,000 memory.
>
> Also, my scores seem to be lower than most people's. I am getting 2709X in Port Royal and about 29K in Time Spy. Superposition is around 33K.
>
> What gives?

Drop a 5800X3D in your board and I bet that helps. Better yet, grab a 7XXX/13900K with DDR5 and a new motherboard. You'll see an increase in score.
