DukenukemX

Supreme [H]ardness

- Joined: Jan 30, 2005
- Messages: 7,952

Not exactly disproving me if that's the only game using PhysX this year. Nearly every game sold in the past decade has had physics in it. PhysX is being phased out, and at this point I'm pretty sure Nvidia isn't helping developers by keeping it around.

> Ghostwire: Tokyo uses PhysX, and that just came out.

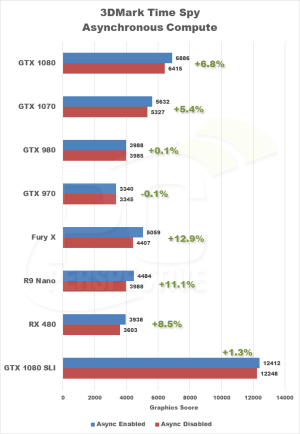

Mantle is the basis of Vulkan, and that's a pretty big innovation. It may not be a big deal for you, since as far as I can remember DX12 and Vulkan didn't bring performance improvements on Nvidia hardware until RTX was released. The fact is that AMD has had working asynchronous compute since GCN was introduced, while it took Nvidia a while to stop losing performance when using it. AMD was also involved in creating HBM, along with Samsung and Hynix. The only innovation Nvidia has delivered is Ray-Tracing, which is arguably garbage unless you play games like Quake 2 or Minecraft. DLSS was created specifically so that games that could not normally handle Ray-Tracing could run it, by literally lowering the resolution of your game and then upscaling the image to mimic a higher resolution. In the process, the RTX 2000 series was largely ignored by Nvidia's customers, because the Ray-Tracing hardware took up so much silicon that games saw little improvement over the GTX 1080s and 1070s. The fact that Ray-Tracing is such a failure, and requires DLSS to even have a chance at working, is why Nvidia created Streamline: to encourage developers to adopt its technology so that its investment in Ray-Tracing wasn't a waste.

> We can thank Mantle for accelerating Microsoft's timeline to release DX12, but that doesn't count as a graphics innovation.
>
> Try again? Show me one innovation AMD has created since 2007 (15 years) that wasn't a copy of something nVidia did first.

I'm not for team Red or Green, and will gladly go team Blue if Intel makes affordable and capable hardware. Considering what's happened over the past 2 years, both AMD and Nvidia can go to hell for all I care. But to say that AMD has been catching up to Nvidia is just fanatical fanboyism. No, G-Sync doesn't count. An advanced version of V-Sync isn't special, and VESA implemented adaptive synchronization, which AMD later rebranded as FreeSync. Nobody deserves credit for a technology that is mostly meant to drive monitor sales.
