

Starfield

- Thread starter Blade-Runner

- Start date

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,820

I've only recently started using HDR, and while I do like it, it's not a deal breaker. Really, almost no graphics option is, as long as the visual style is good enough.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,695

Sounds pretty weaselly worded to me, but I'll reserve judgement until they actually say it outright one way or another officially, not some guy in PR saying he doesn't want to go into contractual obligations and not addressing whether there's a penalty for doing so *shrug*.

"By all accounts," you mean by rumors you heard online? Guess you missed Frank Azor directly addressing this exact rumor?

Edit: "AMD claims there’s nothing stopping Starfield from adding Nvidia DLSS" https://www.theverge.com/2023/8/25/22372077/amd-starfield-dlss-fsr-exclusive-frank-azor

peppergomez

2[H]4U

- Joined

- Sep 15, 2011

- Messages

- 2,156

When you guys have had a chance to play for a sizable amount of time, like twenty or thirty hours plus, I'm interested to hear your thoughts on the quality of the quest lines and the dialogue, as well as the combat, companion interactions, assorted gameplay, and ship combat, since so far we're focusing on technical issues.

Remove 30fps cap on the ship crosshair.

Yes that is a thing.

https://www.nexusmods.com/starfield/mods/270

No wonder the crosshair was stuttering all over the place. A mod to un-stutter your crosshair. Bethesda is the clown world of game devs.

peppergomez

2[H]4U

- Joined

- Sep 15, 2011

- Messages

- 2,156

You win the award for ugliest GIF of all time.

No wonder the crosshair was stuttering all over the place. A mod to un-stutter your crosshair. Bethesda is the clown world of game devs.

View attachment 595439

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,695

Wow lol. They really are.

No wonder the crosshair was stuttering all over the place. A mod to un-stutter your crosshair. Bethesda is the clown world of game devs.

View attachment 595439

Say what you will about Ubisoft, but their HDR implementation has been stellar since Assassin's Creed Origins back in 2017. So anyone can get it right if they put some effort into it, and clearly Bethesda did not; they didn't even prioritize getting SDR looking good.

I wonder if HDR mastering is harder for games. Given how much of a problem it continues to be, it really feels like there are more challenges with games than with movies and TV shows.

Huh, I didn't know that. Maybe I'll have to try out Mirage. I'm playing Halo Infinite's campaign currently, and it blows away any other HDR I've seen so far. I've noticed that Resident Evil games, and Capcom games in general, tend to have great HDR.

Say what you will about Ubisoft, but their HDR implementation has been stellar since Assassin's Creed Origins back in 2017. So anyone can get it right if they put some effort into it, and clearly Bethesda did not; they didn't even prioritize getting SDR looking good.

Hopefully it gets fixed in this game; I'm guessing a modder fixes it first, haha.

M76

[H]F Junkie

- Joined

- Jun 12, 2012

- Messages

- 14,039

Who cares, a DLSS mod is already available: https://www.nexusmods.com/starfield/mods/111?tab=description

Sounds pretty weaselly worded to me, but I'll reserve judgement until they actually say it outright one way or another officially, not some guy in PR saying he doesn't want to go into contractual obligations and not addressing whether there's a penalty for doing so *shrug*.

sharknice

2[H]4U

- Joined

- Nov 12, 2012

- Messages

- 3,759

Sounds pretty weaselly worded to me, but I'll reserve judgement until they actually say it outright one way or another officially, not some guy in PR saying he doesn't want to go into contractual obligations and not addressing whether there's a penalty for doing so *shrug*.

I believe him that there were no contractual obligations or any money specifically about DLSS.

The reason it sounds weaselly is because it's kind of assumed you're not going to do that.

aka "unwritten rule", "don't bite the hand that feeds you", "common sense", etc. etc. etc.

What do you think the point of an AMD-sponsored game is? AMD isn't doing this for charity; they're doing it to sell cards.

Unless AMD straight up told Bethesda to put DLSS in Starfield, they weren't going to do it.

You don't get paid by AMD so they can put "AMD is Starfield's Exclusive PC Partner" on their website, then put DLSS3 in and NVIDIA cards get double the framerate with higher image quality.

That would make you an asshole, idiot, etc.

M76

[H]F Junkie

- Joined

- Jun 12, 2012

- Messages

- 14,039

Same, the shadows look too dark, and the highlights are so bright they hurt my eyes. Maybe it would be better if I was willing to pay double for a monitor / TV, but I'm not. I'd rather not have HDR.

I'm not going to lie. HDR is a complete non-issue for me. I never use it.

I don't really like HDR.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,695

Mr. Burns: "Excellent..."

Who cares, a DLSS mod is already available: https://www.nexusmods.com/starfield/mods/111?tab=description

staknhalo

Supreme [H]ardness

- Joined

- Jun 11, 2007

- Messages

- 6,924

Capcom's HDR looks good too, but it has confusing in-game HDR calibration controls, and there's a bug/conflict: if you leave AutoHDR on in Windows 11, actual HDR won't engage in game when set to 'HDR ON'. Turn AutoHDR off and proper HDR works fine.

If you're speaking about gaming, that's because you haven't seen it implemented properly. If you're talking about TV/theatrical content, well, I don't know what to say. Proper HDR can make a bigger difference than resolution.

Same, the shadows look too dark, and the highlights are so bright they hurt my eyes. Maybe it would be better if I was willing to pay double for a monitor / TV, but I'm not. I'd rather not have HDR.

CAD4466HK

2[H]4U

- Joined

- Jul 24, 2008

- Messages

- 2,784

Mr. Burns: "Excellent..."

staknhalo

Supreme [H]ardness

- Joined

- Jun 11, 2007

- Messages

- 6,924

Same, the shadows look too dark, and the highlights are so bright they hurt my eyes. Maybe it would be better if I was willing to pay double for a monitor / TV, but I'm not. I'd rather not have HDR.

If the monitor is an LED panel without local dimming (as opposed to an OLED, which is emissive and so doesn't need local dimming), then it's not a 'true' HDR image, and I wouldn't really judge HDR off such an image. Stick to SDR on displays like that (usually HDR400 or HDR600 monitors), though if a display has local dimming, even HDR400 can look 'good'.

My old HDR400 monitor without local dimming hurt my eyes quickly: even though the monitor and Windows were doing their best to filter black levels, you still basically have the entire monitor blasting full brightness in your face, even in black areas. With my HDR1200 monitor with local dimming, I don't even squint now.

NattyKathy

[H]ard|Gawd

- Joined

- Jan 20, 2019

- Messages

- 1,483

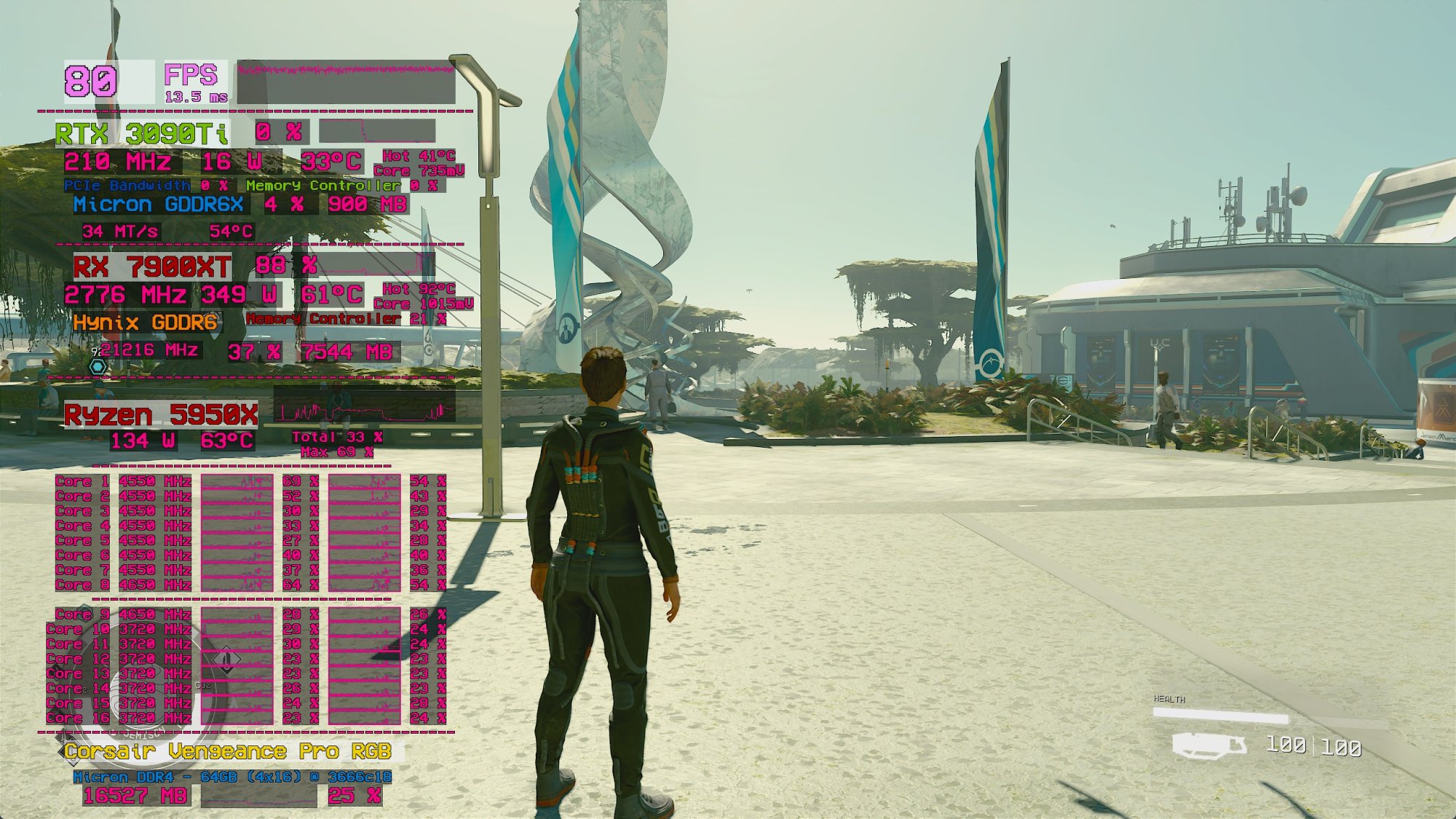

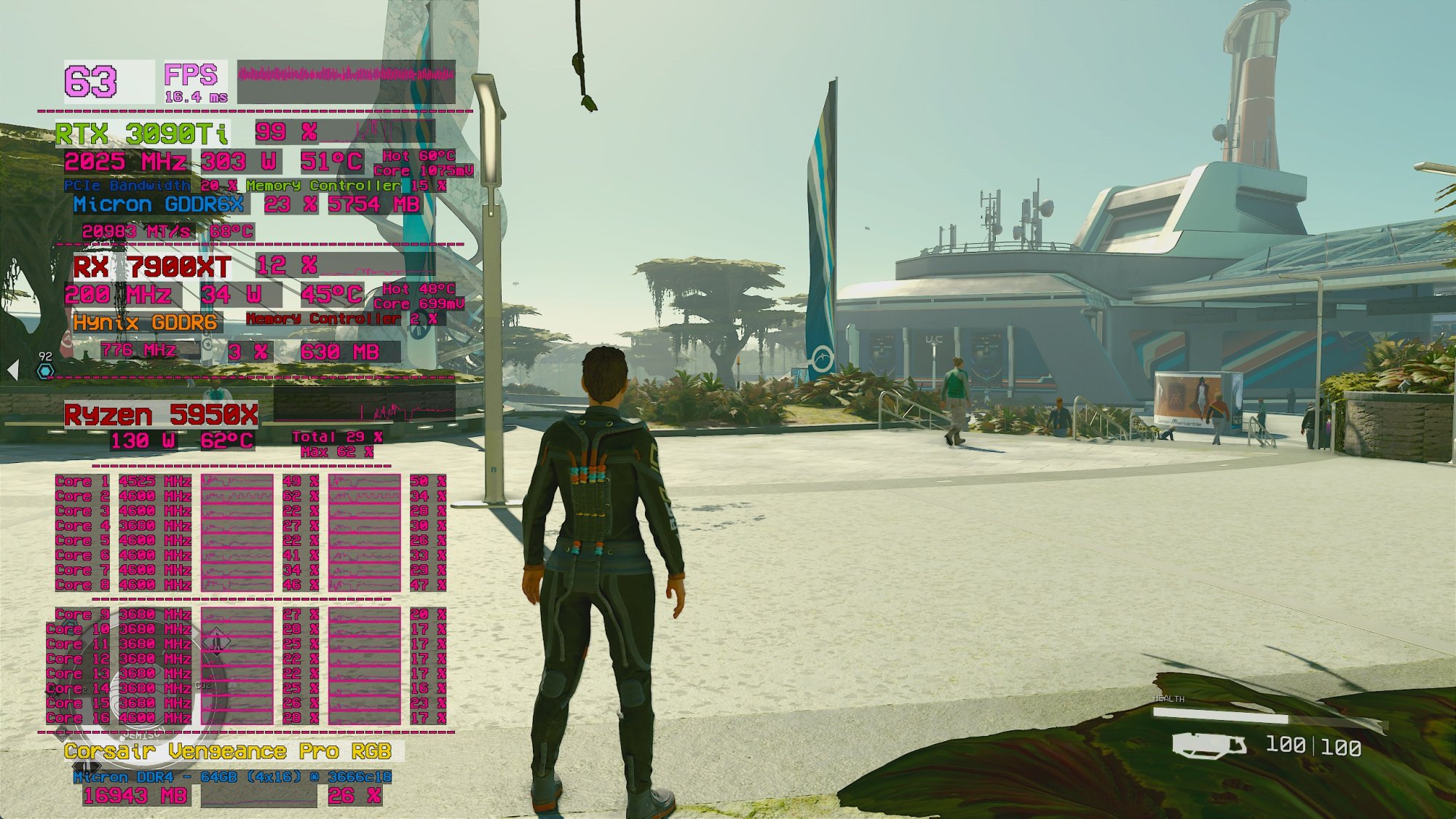

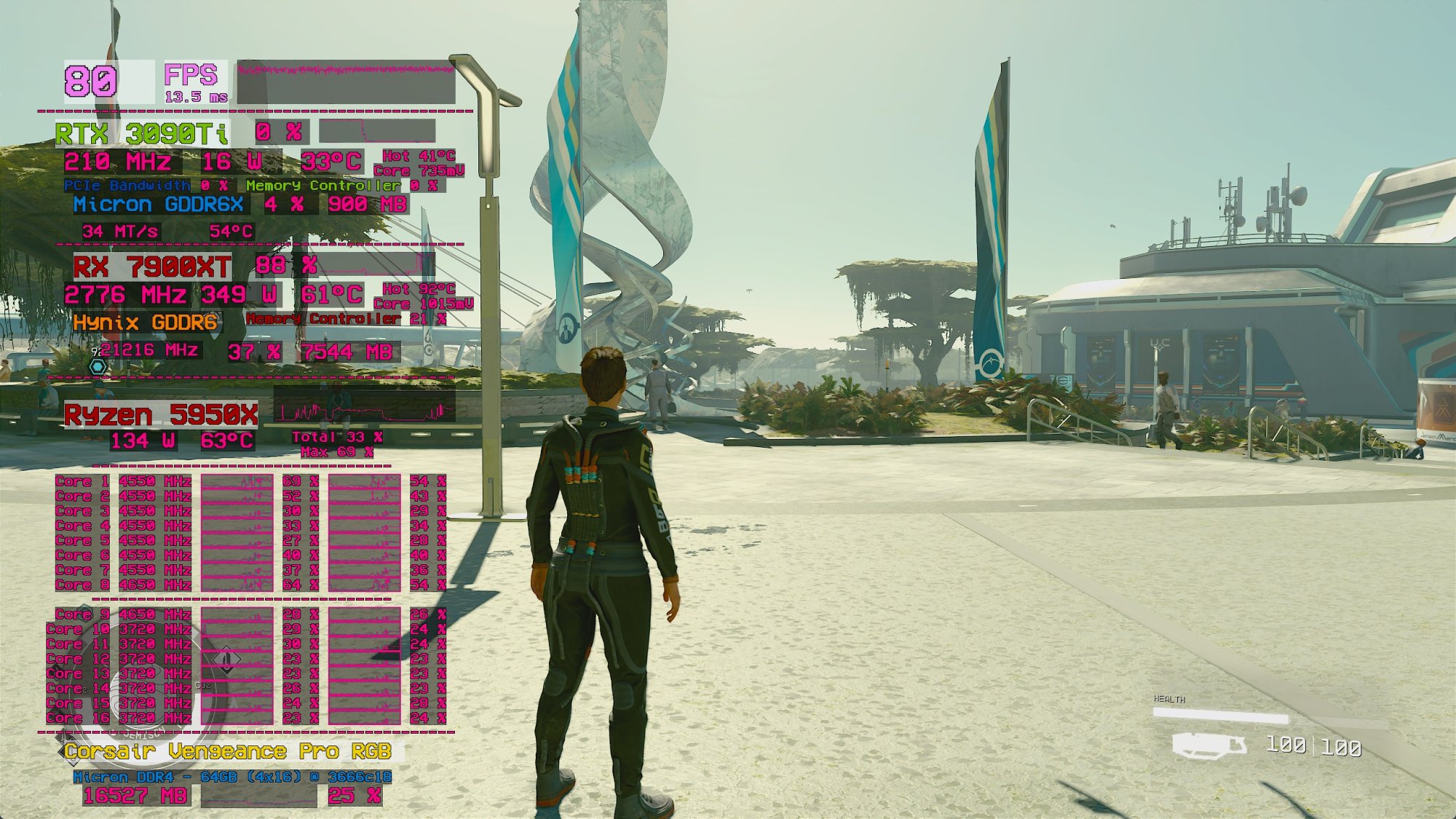

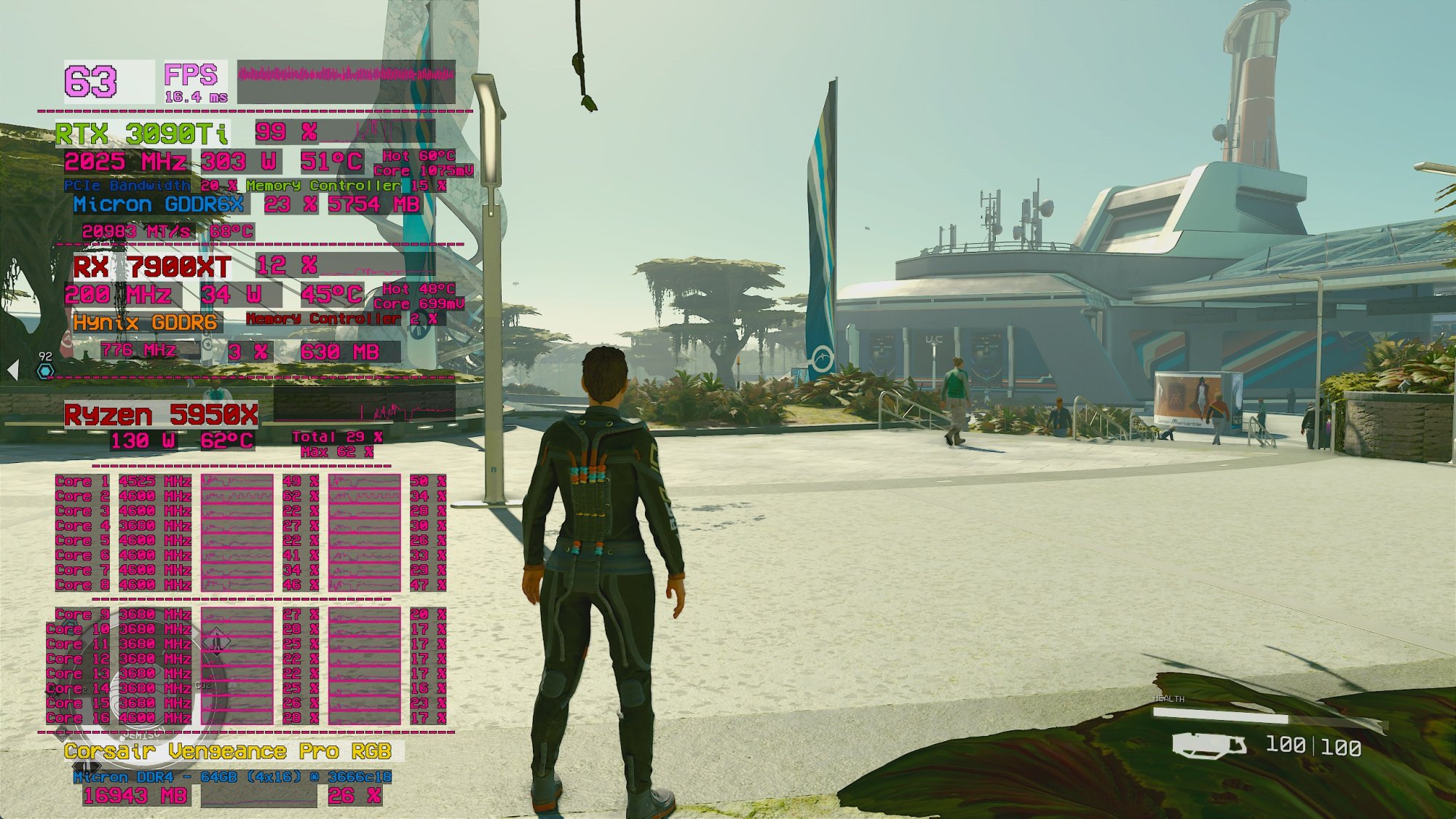

Folks running Starfield on NVIDIA GPUs, I'm curious what your performance, GPU utilization, and GPU power consumption are like, specifically at Ultra settings and native res.

Based on some things I'm seeing around (such as Daniel Owen's video) and my own little bit of testing, it seems that Ultra quality settings may result in broken performance on NV cards in some scenarios...

Peep this (not actually spoilers)

Note

- 7900XT is performing ~30% better than 3090Ti and could go even further on a faster CPU, as it's hitting the CPU limit on the 5950X

- 3090Ti is only consuming 300W despite reporting 99% utilization at 1075mV; that's a big red flag for me, as it should be more like 400W-500W at that voltage at 99% util

- This seems consistent across the few areas of the game I've visited so far

- Odd performance behavior persists when the Radeon GPU is disabled in Device Manager and all Radeon-related background software is closed

- Behavior persists after clearing shader caches and reinstalling the game

- Both GPUs are using the latest (as of 9/1) drivers, Windows 11 fully updated, Steam version of Starfield

So is there something wrong with maxing settings at native res on NV GPUs in the current version of the game? I'd say this is a fluke on my system (NV and AMD drivers conflicting or something), but I have yet to see this behavior in any other game, and some of the early YT vids seem to corroborate this.


Could it be because there are many HDR formats (HDR10, HDR10+, Dolby Vision, HLG, HDR by Technicolor), and support differs from Xbox to PlayStation, from AMD to Nvidia, and from monitor to monitor? That could help create issues for some people on some setups at launch.

Do you seriously not understand why this continues to be an issue? It continues to be an issue because consumers tolerate it.

Not even sure you can conclude that it is broken with a sample of one setup.

staknhalo

Supreme [H]ardness

- Joined

- Jun 11, 2007

- Messages

- 6,924

Could it be because there are many HDR formats (HDR10, HDR10+, Dolby Vision, HLG, HDR by Technicolor), and support differs from Xbox to PlayStation, from AMD to Nvidia, and from monitor to monitor? That could help create issues for some people on some setups at launch.

Not even sure you can conclude that it is broken with a sample of one setup.

I don't believe devs handle all HDR formats individually like that. Even when authoring video, you now basically have one graded version, and the software properly maps and outputs it to all the other versions. I believe devs just handle HDR10, and then the console itself, a layer above, maps it to Dolby Vision for gaming (again, I'm guessing here; I've never looked into it). If a game itself has Dolby Vision, I'd assume they'd have to ensure DV PQ to around a certain % of spec as part of the licensing, like displays and players do; that's handled by the device manufacturer as well for the few DV-licensed laptops. Edit: wait, no, they (the game) would pay for the DV licensing like video outlets do to release Dolby Vision videos, but the console, laptop, or TV manufacturer would be required to meet the % spec guidelines to be branded a Dolby Vision playback device.


We are talking about HDR10. That's it. That's the standard: any TV that supports HDR10+/DV/HLG supports HDR10, so that's where we start, particularly for PC gaming. The only two PC games I know of that support DV are Mass Effect Andromeda and Battlefield 1. There are no DV-enabled monitors, just TVs.

Could it be because there are many HDR formats (HDR10, HDR10+, Dolby Vision, HLG, HDR by Technicolor), and support differs from Xbox to PlayStation, from AMD to Nvidia, and from monitor to monitor? That could help create issues for some people on some setups at launch.

Not even sure you can conclude that it is broken with a sample of one setup.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,163

You're the third person to post this in the thread already...

Who cares, a DLSS mod is already available: https://www.nexusmods.com/starfield/mods/111?tab=description

Every developer uses HDR10 in games. Series X|S has Dolby Vision for games, but it's a software solution that is basically just tone mapping. There is one game on PC I know of that has native Dolby Vision support: Mass Effect Andromeda.

Could it be because there are many HDR formats (HDR10, HDR10+, Dolby Vision, HLG, HDR by Technicolor), and support differs from Xbox to PlayStation, from AMD to Nvidia, and from monitor to monitor? That could help create issues for some people on some setups at launch.

Not even sure you can conclude that it is broken with a sample of one setup.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,695

It was in response to me, and I'm glad he pointed it out.

You're the third person to post this in the thread already...

Whiffle Boy

Limp Gawd

- Joined

- Feb 23, 2001

- Messages

- 411

I know, right?

This game definitely does something right, as evidenced by the two hours that just disappeared in a flash for me. That's not really a surprise for a lot of us when it comes to these games, regardless of a number of valid criticisms.

I kept saying to myself, "stop that, it says follow X," as I wandered into the mines or whatever, essentially trying to strip-mine the whole place myself...

Or the almost two hours I spent in character creation... I NEVER do this in these games...

Finally, back at the mining base, I was literally taking everything that wasn't bolted down, right down to the foam cups in the trash cans.

That is gaming for me. I am still in the first "dungeon", i.e. the pirate base leader mission. I'm at the end... but I'm weighing my options. I already successfully talked him down (then blew his @## up, which caused ships full of pirates to land instantaneously, which kinda bothers me; I'm hoping this isn't a "do bad, bad guys spawn" game like GTA 5. Why can't I hide in a closet with a sniper rifle and kill people without the cops knowing exactly where I am?), so I am currently spending my time ferrying ALL of the items out of the building and throwing them off the roof so that I can pick them up afterward.

Yes, I really need them to make a pack rat simulator, or a hoarding simulator or something. It's a definite problem.

Glad to see others enjoying it as well. I don't treat these things as the end-all, be-all games. It came out, I'm playing it, I'm having fun. (Looks at the Diablo community and weeps.)

Yes, but games like this are made to run on TVs (via game consoles), and it's probably more common for TV players than monitor players to have HDR that's worth it.

The only two PC games I know of that support DV are Mass Effect Andromeda and Battlefield 1. There are no DV-enabled monitors, just TVs.

There's a long series of Hardware Unboxed videos and message board threads about HDR working on monitor X with both Nvidia and AMD, but on monitor Y with only one of the two. It doesn't seem to be that straightforward.

M76

[H]F Junkie

- Joined

- Jun 12, 2012

- Messages

- 14,039

I prefer viewing both in SDR; HDR is a mess and all over the place. It makes a difference, all right: for the worse.

If you're speaking about gaming, that's because you haven't seen it implemented properly. If you're talking about TV/theatrical content, well, I don't know what to say. Proper HDR can make a bigger difference than resolution.

LigTasm

Supreme [H]ardness

- Joined

- Jul 29, 2011

- Messages

- 6,642

Folks running Starfield on NVIDIA GPUs, I'm curious what your performance, GPU utilization, and GPU power consumption are like, specifically at Ultra settings and native res.

Based on some things I'm seeing around (such as Daniel Owen's video) and my own little bit of testing, it seems that Ultra quality settings may result in broken performance on NV cards in some scenarios...

Peep this (not actually spoilers)

Note

- 7900XT is performing ~30% better than 3090Ti and could go even further on a faster CPU, as it's hitting the CPU limit on the 5950X

- 3090Ti is only consuming 300W despite reporting 99% utilization at 1075mV; that's a big red flag for me, as it should be more like 400W-500W at that voltage at 99% util

- This seems consistent across the few areas of the game I've visited so far

- Odd performance behavior persists when the Radeon GPU is disabled in Device Manager and all Radeon-related background software is closed

- Behavior persists after clearing shader caches and reinstalling the game

- Both GPUs are using the latest (as of 9/1) drivers, Windows 11 fully updated, Steam version of Starfield

So is there something wrong with maxing settings at native res on NV GPUs in the current version of the game? I'd say this is a fluke on my system (NV and AMD drivers conflicting or something), but I have yet to see this behavior in any other game, and some of the early YT vids seem to corroborate this.

Good lord, that color vomit would drive me nuts. I haven't gotten to the city there yet, but I haven't seen anything under 100fps with FSR off. 7900XTX + 7950X3D with 6400MHz DDR5 and very tight timings.

Again, HDR10 is what we are talking about, and now Win11 has an Auto HDR feature, which should make it even easier to implement.

Yes, but games like this are made to run on TVs (via game consoles), and it's probably more common for TV players than monitor players to have HDR that's worth it.

There's a long series of Hardware Unboxed videos and message board threads about HDR working on monitor X with both Nvidia and AMD, but on monitor Y with only one of the two. It doesn't seem to be that straightforward.

As I said before, Ubisoft has been doing it right since 2017. HDR10 can be implemented properly on PCs if the devs just put a little effort into it.

That's fine if you like SDR better, but if HDR10 is implemented properly, then SDR will look good too, which clearly isn't the case here.

peppergomez

2[H]4U

- Joined

- Sep 15, 2011

- Messages

- 2,156

Other than the technical stuff, for folks who've been playing a while, what are your impressions of the combat, space flight and space combat, quality of the quests and the writing, NPC companion interactions and stories, overall quality of the cities and towns, planet exploration, and other gameplay elements?

Well, the game started up, was a bit wonky loading shaders, didn't like me tabbing out, and crashed. On restart it loaded the shaders in 20 seconds or so. Other than that, the game ran fine for about an hour on my i7-4790K with 32GB RAM and a 2060 Super: 40-60 fps, vsync on. The graphics preset was Medium with Dynamic Res and FSR turned on. I only turned off motion blur. The first combat was a little stuttery but entirely playable at around 40fps.

M76

[H]F Junkie

- Joined

- Jun 12, 2012

- Messages

- 14,039

But I'd rather talk about the game than the merits or lack thereof of HDR.

First impressions after 5 hours: This is 85% Mass Effect and 10% Cyberpunk, with 5% space combat sim. You might call it an outright copycat, as even some of the side missions are eerily similar to ones found in the OG Mass Effect on the Citadel.

But still I'm unable to stop playing. It took me 2 hours to actually go eat something after realizing I was hungry, because I just wanted to play on and on.

I feel I have barely scratched the surface, so I might eat my words later, but so far I think this is by far the best Bethesda game to date. Apart from a few minor NPC glitches, I didn't even encounter bugs.

The graphics are much nicer than I expected, even with some areas being less detailed than others, and with DLSS it runs fine on Ultra on my 2080Ti.

So far my only complaint that didn't go away is the atrocious and totally useless map. The inventory / loadout / mission screen was weird at first too, but it works pretty well after getting used to it.

There might be some pacing issues, as I haven't had to fire a single bullet in hours. It doesn't bother me yet; it's just something I thought noteworthy.


Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,883

I gotta know why, I think it's amazing with a proper display.

I'm willing to admit that it is possibly because the HDR features on my screen aren't that amazing. It's an Asus XG438Q, a 43" 4K VA panel with DisplayHDR 600...

But it was a screen that got top reviews when I bought it just a little while ago... uhh. I guess that was 4 years ago, but still! In the grand scheme of things, what is 4 years?

Anyway, I don't use Windows 11, so I don't have any of the AutoHDR features. For some games that natively support HDR, I use the feature. Cyberpunk 2077 was one of these, but, at least in Windows 10, it requires that I turn on HDR for the desktop (otherwise the HDR settings don't show up in game, and it doesn't use HDR). That makes white windows (like the file manager and other things) blast so bright that they sear my eyeballs. In general it makes the desktop experience mostly unusable, or at the very least very uncomfortable, so I wind up turning HDR on, starting the game, and turning it off again. That gets old, and I often forget to enable it before starting the game, don't even notice until I've been playing for half an hour, and don't feel like quitting to enable it.

I guess to me HDR is more of a marginal feature than a game-changing one. On or off, it doesn't make a huge difference in game to me, but it does completely ruin my 2D/desktop experience, and for this reason I almost always keep it off.

And yes, this may be in part because I have refused to "upgrade" to Windows 11, and in part because my monitor is 4 years old, but still.

Monitors used to be the one part of our hobby that would last nearly indefinitely. I can't bring myself to replace it just for HDR, a feature my screen purportedly already has...

At some point I may invest in a 42" LG C<insert number here>, but it hasn't been a priority yet, in part because the possibility of burn-in concerns me. I still use this computer for work more than I do for games or movies. We are probably talking 50 hours a week for work work (MS Office, stats software, email, etc.) with static window menus and window decorations all day, every day. Then maybe another 20-30 hours a week of home productivity and web browsing. I maybe squeeze in 4 hours of games per week.

So for this reason, the screen I pick HAS TO be compatible with productivity style static windows without side effects. Everything else is secondary. I'm not going to get a dedicated screen just for games, when I only have time to spend a few hours per week on games.

Even something with a good FALD setup seems far superior to me than standard.

I haven't read up enough to know the difference between FALD and other forms of local dimming, but I know my monitor has local dimming, and I usually turn it off. The dimming zones are way too large, and it usually just winds up looking worse.

Same, the shadows look too dark, and the highlights are so bright they hurt my eyes. Maybe it would be better if I was willing to pay double for a monitor / TV, but I'm not. I'd rather not have HDR.

I'm with you on the "too bright for my eyes" part in many cases. Usually not in games, but definitely on the desktop, which is where I spend most of my time.

There is literally like no immersion to this game. You are locked into a lot of tiny cubes on the planets and in space, all linked together with string/loading screens.

You cannot move your spacecraft on a planet; you can only touch down and take off via cutscenes, and in space you can fly about 2 kilometers in either direction. That's the entirety of the movement in this game, besides walking. This in 2023.

Makes me appreciate Star Citizen more.


Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,883

Haven't felt it in the 90 minutes I played, though I do get it in some other games. Digital Combat Simulator, for example, suffers from my poor threaded performance; in VR it literally pops up saying CPU bound.

It's a workstation, though. The fact that it plays games is almost incidental, and given the amount of time I get to play, a gaming box would be silly. Less power use would be nice, though.

What kind of framerates have you been getting with the 3960X and 3090, and at what resolution?

I'm particularly interested in the minimum dips.

So this is a lot like Outer Worlds?

There is literally like no immersion to this game. You are locked into a lot of tiny cubes on the planets and in space, all linked together with string/loading screens.

You cannot move your spacecraft on a planet; you can only touch down and take off via cutscenes, and in space you can fly about 2 kilometers in either direction. That's the entirety of the movement in this game, besides walking. This in 2023.

Makes me appreciate Star Citizen more.

They never said it was going to be a space-sim.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,606

There is probably an ini setting for the view model FOV, people just need to find it. I don't know why Bethesda had to go and change all the parameter names around in this version of the engine.

In Documents/My Games/Starfield, create a text file and name it "StarfieldCustom.ini".

Here is a sample config, from a satisfied user:

[Display]

fDefault1stPersonFOV=90

fDefaultFOV=90

fDefaultWorldFOV=90

fFPWorldFOV=90.0000

fTPWorldFOV=90.0000

[Camera]

fDefault1stPersonFOV=90

fDefaultFOV=90

fDefaultWorldFOV=90

fFPWorldFOV=90.0000

fTPWorldFOV=90.0000
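For anyone who would rather generate the file than type it out, here is a minimal sketch in Python, assuming the folder is Documents/My Games/Starfield under your user profile. The section names, key names, and values are copied verbatim from the sample config posted here; they are user-reported and not verified against the game. The helper names (`build_custom_ini`, `default_ini_path`, `write_custom_ini`) are hypothetical. It uses the standard library's `configparser`, with `optionxform` overridden so the mixed-case key names aren't lowercased.

```python
import configparser
import io
from pathlib import Path

# Keys and values copied from the sample config above (user-reported, unverified).
FOV_KEYS = {
    "fDefault1stPersonFOV": "90",
    "fDefaultFOV": "90",
    "fDefaultWorldFOV": "90",
    "fFPWorldFOV": "90.0000",
    "fTPWorldFOV": "90.0000",
}


def build_custom_ini(sections=("Display", "Camera")) -> str:
    """Return StarfieldCustom.ini text with the same FOV keys in each section."""
    config = configparser.ConfigParser()
    config.optionxform = str  # keep key case; configparser lowercases keys by default
    for section in sections:
        config[section] = FOV_KEYS
    buf = io.StringIO()
    # Write "key=value" with no spaces around "=", matching the posted sample.
    config.write(buf, space_around_delimiters=False)
    return buf.getvalue()


def default_ini_path() -> Path:
    # Assumption: Documents lives directly under the home folder (not redirected to OneDrive).
    return Path.home() / "Documents" / "My Games" / "Starfield" / "StarfieldCustom.ini"


def write_custom_ini(path: Path) -> None:
    """Create the folder if needed and (over)write the ini file at `path`."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(build_custom_ini(), encoding="utf-8")


if __name__ == "__main__":
    # Only prints the generated text; nothing is written to disk here.
    print(build_custom_ini())
```

Running it as-is just prints the file contents. Calling `write_custom_ini(default_ini_path())` actually creates the file and overwrites any existing StarfieldCustom.ini, so back up your own first. If your Documents folder is redirected, pass the real path instead.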

No idea how legit videos like this are:

View: https://youtu.be/ZtJLCAWSzR8?t=37

The DLSS mod seems to have a good visual stability advantage.


No idea how legit videos like this are:

View: https://youtu.be/ZtJLCAWSzR8?t=37

The DLSS mod seems to have a good visual stability advantage.

Seems legit.

I'm more interested in seeing how XeSS looks/performs on AMD boards.

LigTasm

Supreme [H]ardness

- Joined

- Jul 29, 2011

- Messages

- 6,642

There is literally like no immersion to this game. You are locked into a lot of tiny cubes on the planets and in space, all linked together with string/loading screens.

You cannot move your spacecraft on a planet; you can only touch down and take off via cutscenes, and in space you can fly about 2 kilometers in either direction. That's the entirety of the movement in this game, besides walking. This in 2023.

Makes me appreciate Star Citizen more.

Man, you must really hate the game to appreciate Star Citizen, a tech demo old enough to vote, more.
