Zarathustra[H]

Extremely [H]

- Joined: Oct 29, 2000
- Messages: 39,004

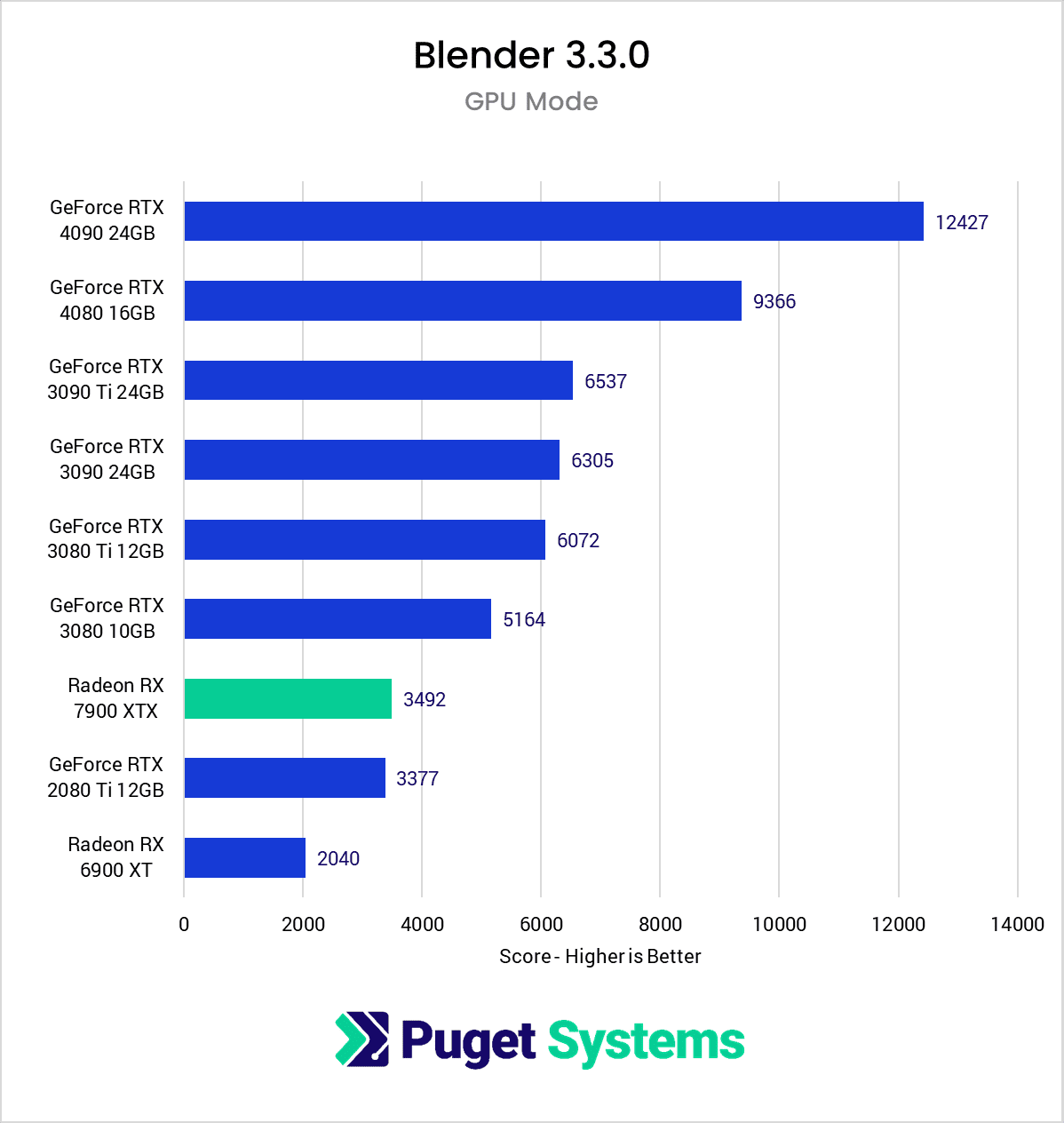

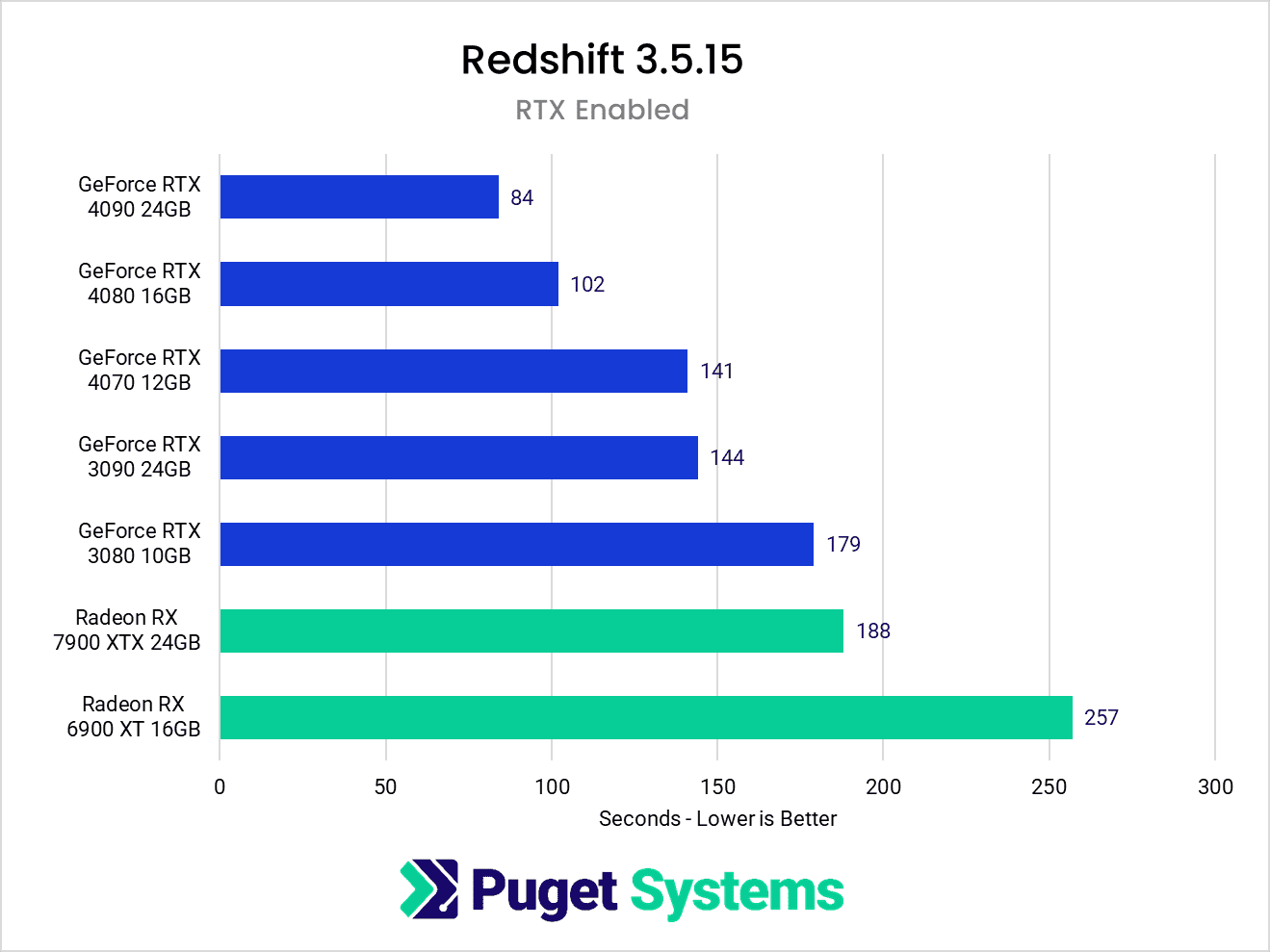

Sure, Apple's M1 and M2 are pretty cool, but where do Apple's claimed performance numbers come from anyway?

Linus tackles this:

Marketers will, uh, Marketeer I guess.

Not usually a fan of Linus, but I have to admit, this is a decent exposé.

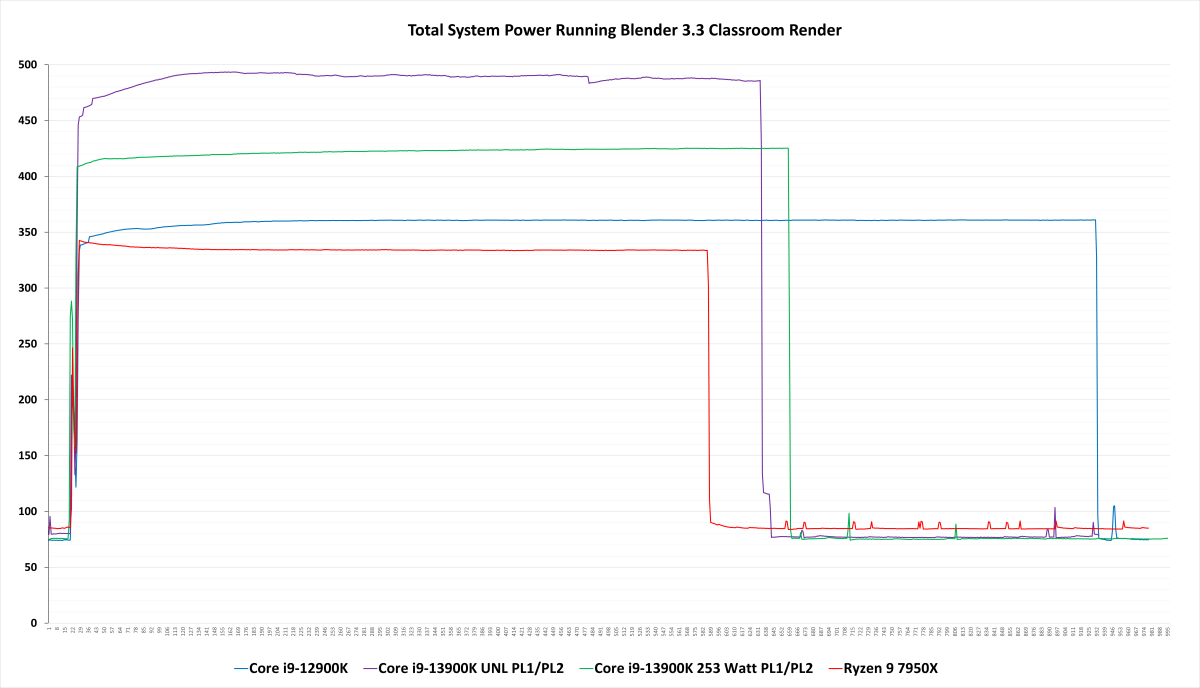

He does give Apple high marks for power use though, which is well deserved.