https://www.extremetech.com/gaming/asus-announces-hidden-cable-hardware-alliance

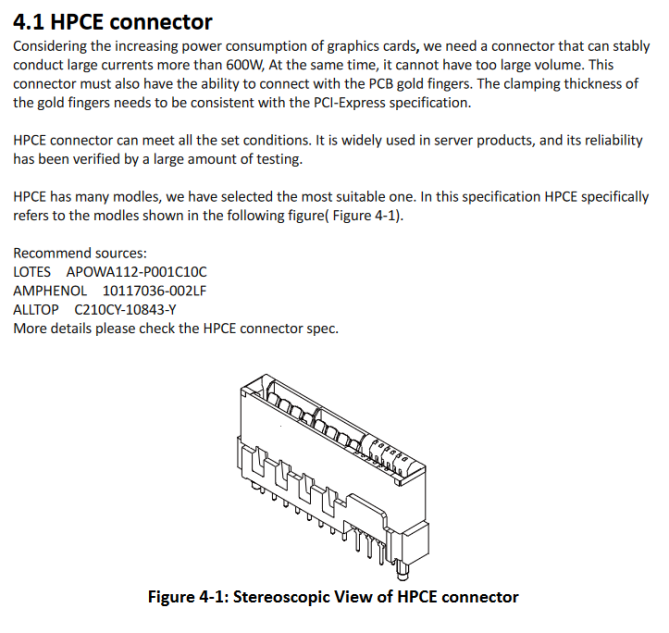

Those cable-free ASUS GPUs are going on sale, and ASUS is trying to make the design a standard.
