erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,900

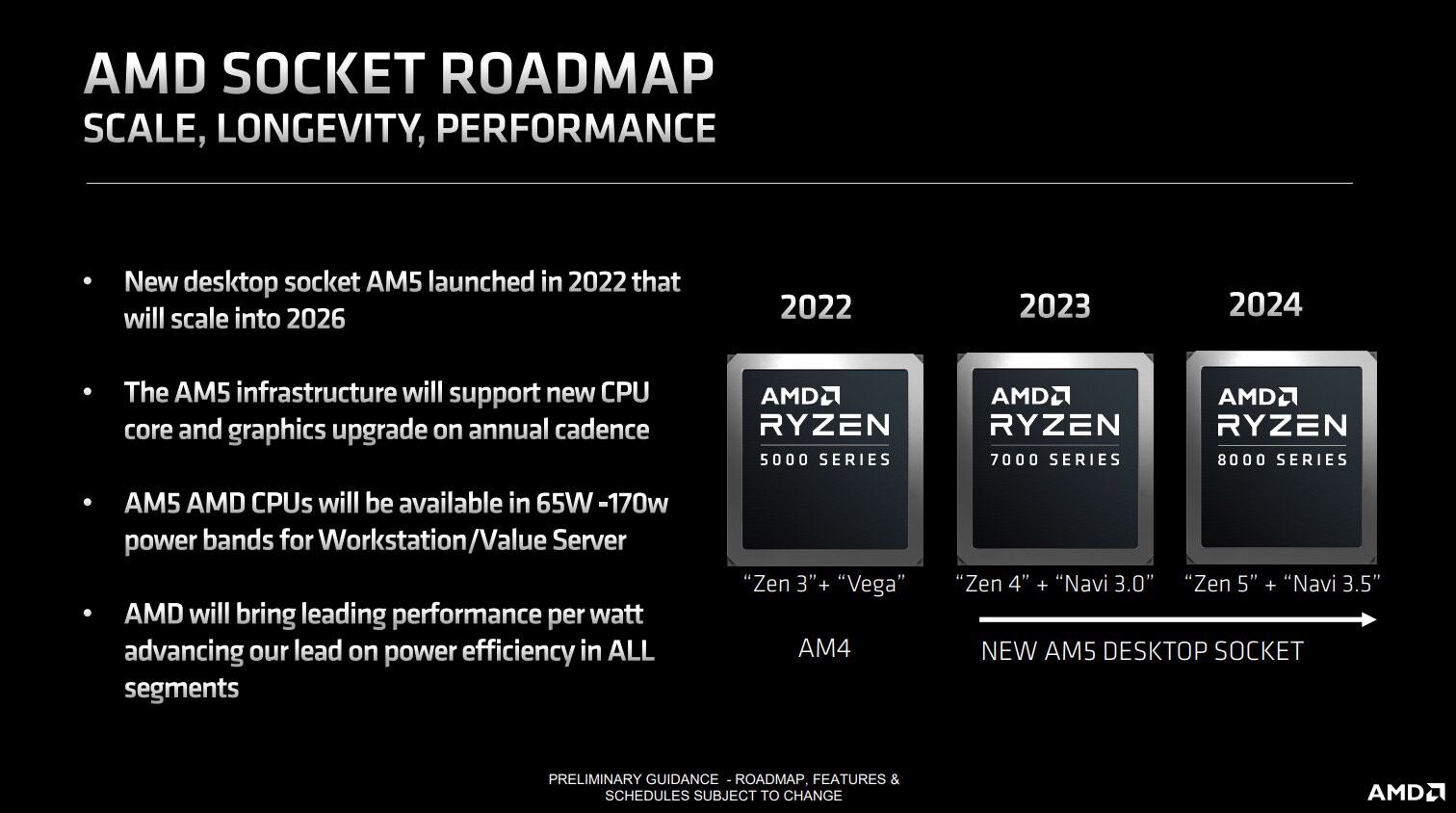

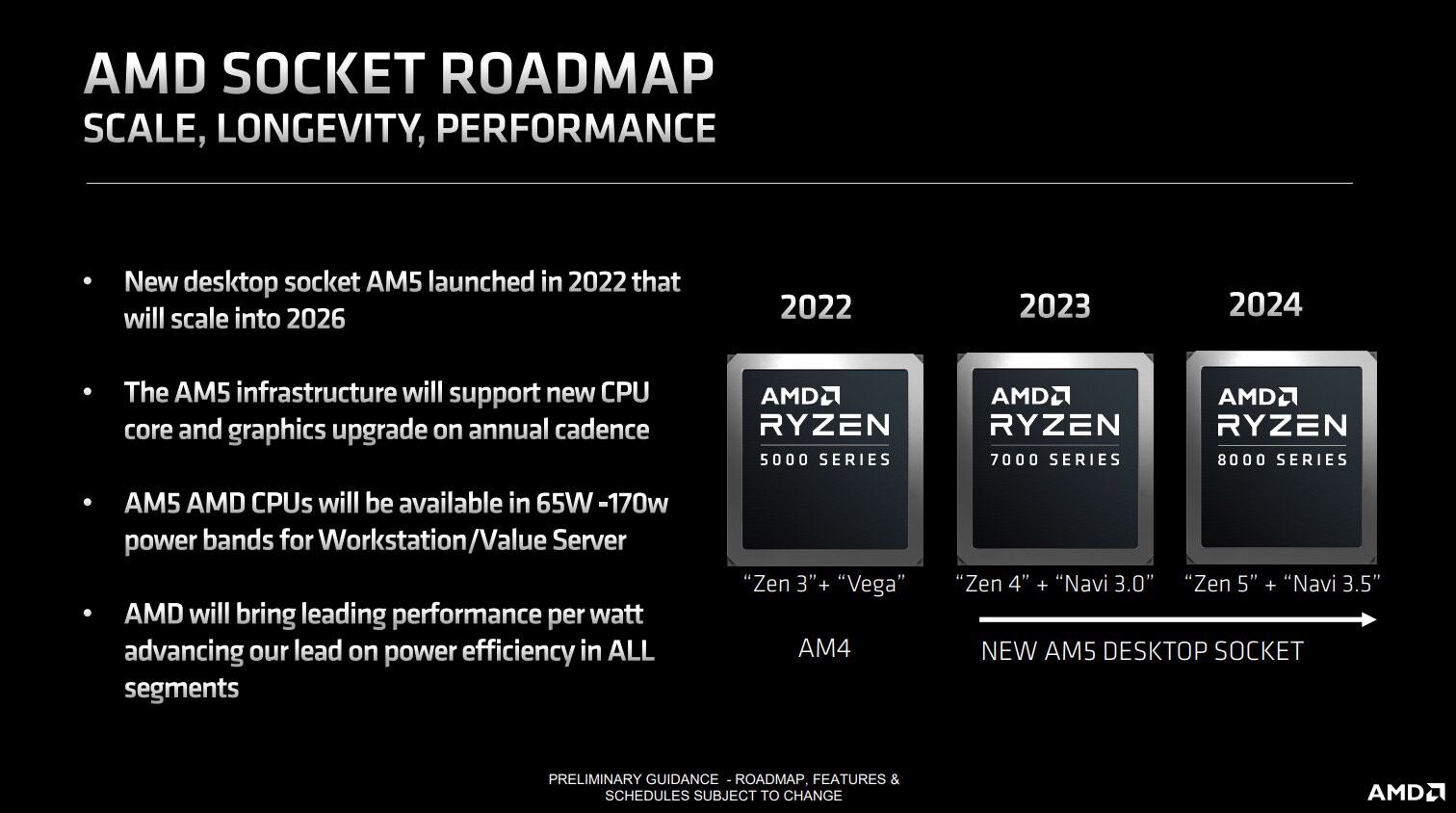

Zen 5 and Navi 3.5 coming

"Another major disclosure is the very first mention of "Navi 3.5". This implies an incremental [update] to the "Navi 3.0" generation (Radeon RX 7000 series, RDNA3 graphics architecture), which could even be a series-wide die-shrink to a new foundry node such as TSMC 4 nm, or even 3 nm; which scoops up headroom to dial up clock speeds. AMD probably finds its current GPU product stack in a bit of a mess. While the "Navi 31" is able to compete with NVIDIA's high-end SKUs such as the RTX 4080, and the company expected to release slightly faster RX 7950 series to have a shot at the RTX 4090; the company's performance-segment, and mid-range GPUs may have wildly missed their performance targets to prove competitive against NVIDIA's AD104-based RTX 4070 series, and AD106-based RTX 4060 series; with its recently announced RX 7600 being based on older 6 nm foundry tech, and performing a segment lower than the RTX 4060 Ti."

Source: https://www.techpowerup.com/309632/...000-series-branding-navi-3-5-graphics-in-2024
