Posted on the PrimeGrid web site.

What does this mean?

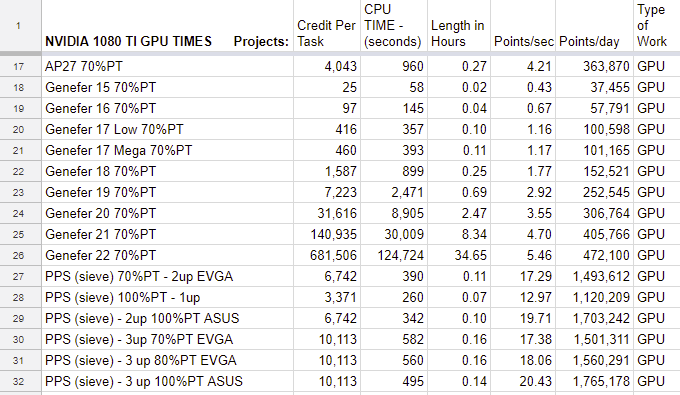

PrimeGrid has two "manual" programs, where the work isn't sent through the BOINC system but still earns BOINC credit. In the past, people abused this by stockpiling manual work and turning it all in during the contest.

The two programs are:

Manual Sieving: http://www.primegrid.com/forum_forum.php?id=22

PRPNet: http://www.primegrid.com/forum_forum.php?id=24

The most important thing to know is that if you don't know what manual credit is, this isn't going to affect you.

If PrimeGrid gets chosen, then stick with the main selections on the project page, not these obscure manual programs.

Edit: if you are a badge collector, these manual sieving points do still get you another PrimeGrid badge; they just won't help with the Pentathlon race.
