That’s a part of it. Intel does better than both, though, which is funny.

Do you mean how they have a 3060 Ti listed under the same tier as a 6800 XT? Wouldn't that likely have to do with DirectStorage? Nvidia cards are able to do hardware decompression much better than similar AMD cards, are they not?


4060 Ti 16GB due to launch next week (July 18th)

- Thread starter Marees

I think it's the 6800 non-XT. For a game whose ray tracing isn't that special (if the High preset includes some RT), they could be rough equivalents when the RT wasn't made with Nvidia in mind.

On the DirectStorage (DS) 1.1 Avocado benchmark, at least, there was only a very small difference between Lovelace and RDNA 3:

https://www.tomshardware.com/news/directstorage-performance-amd-intel-nvidia

But on DS 1.2 (the version Ratchet and Clank uses), Nvidia pulls ahead by almost 50%. Nvidia got some serious driver optimizations in and mapped most of the calls over to its RTX IO ones, which are further accelerated; that gives it a huge advantage when dealing with non-NVMe storage.


Dunno, could be. Nixxes is the dev who said upscaling techs were all easy once one was done.

ZeroBarrier

Gawd

- Joined

- Mar 19, 2011

- Messages

- 1,014

Good catch, my apologies for the error. I'll edit my earlier post to correct it.

And they were not wrong about upscaling techs then. This has been known for a long time now, and it is sad that it needs to be repeated over and over to correct those who insist that devs are doing it as a cost-cutting measure.

It's more likely that they just don't care how well or badly it does. At this point their focus is squarely on other endeavors and gaming will be taking a massive back seat for the foreseeable future.

People keep saying this, but that’s not correct. That market is worth several billion dollars annually and they have 80%-ish share. They care, they just don’t have competitors most people are willing to buy, so their customers haven’t provided them a reason to try harder.

Mr. Bluntman

Supreme [H]ardness

- Joined

- Jun 25, 2007

- Messages

- 7,088

Paying $500 for a 60 series card makes about as much sense to me as wiping my ass with five one hundred dollar bills and flushing them.

Remember all those 512MB or 1GB FX 5200 cards? Getting shades of this here.


Red Falcon

[H]ard DCOTM December 2023

- Joined

- May 7, 2007

- Messages

- 12,457

I think you might be remembering the FX 5200 GPUs with 256MB of VRAM, when the standard models were paired with 64MB or 128MB.

No GPU in 2003 had that much RAM, and the 6800 Ultra in 2004 was the earliest I can think of with 512MB of VRAM.

I do get what you mean, and I remember a GT 430 paired with 4GB of VRAM in 2013, when even a top-end GPU only had 2-3GB.

DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,970

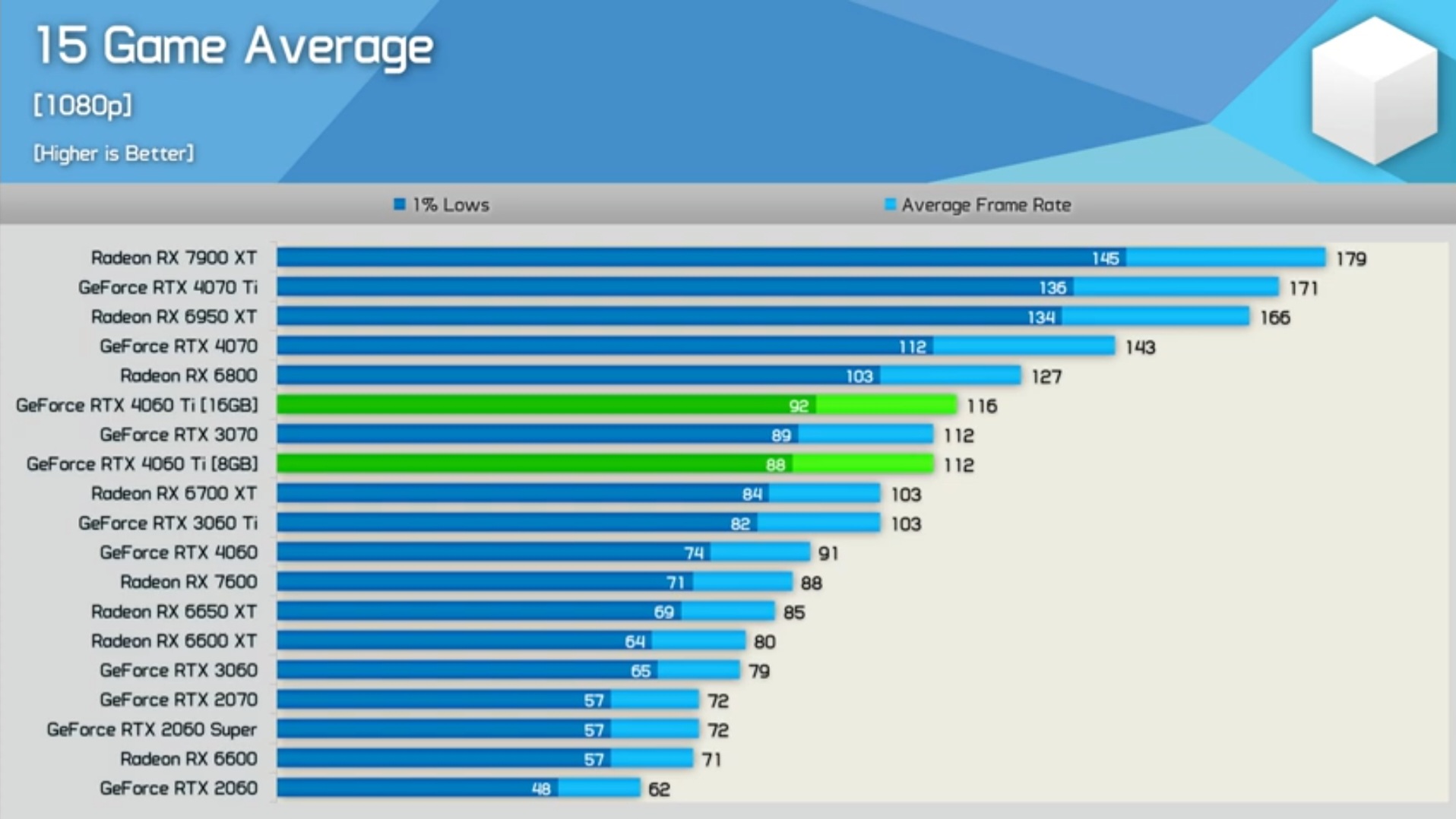

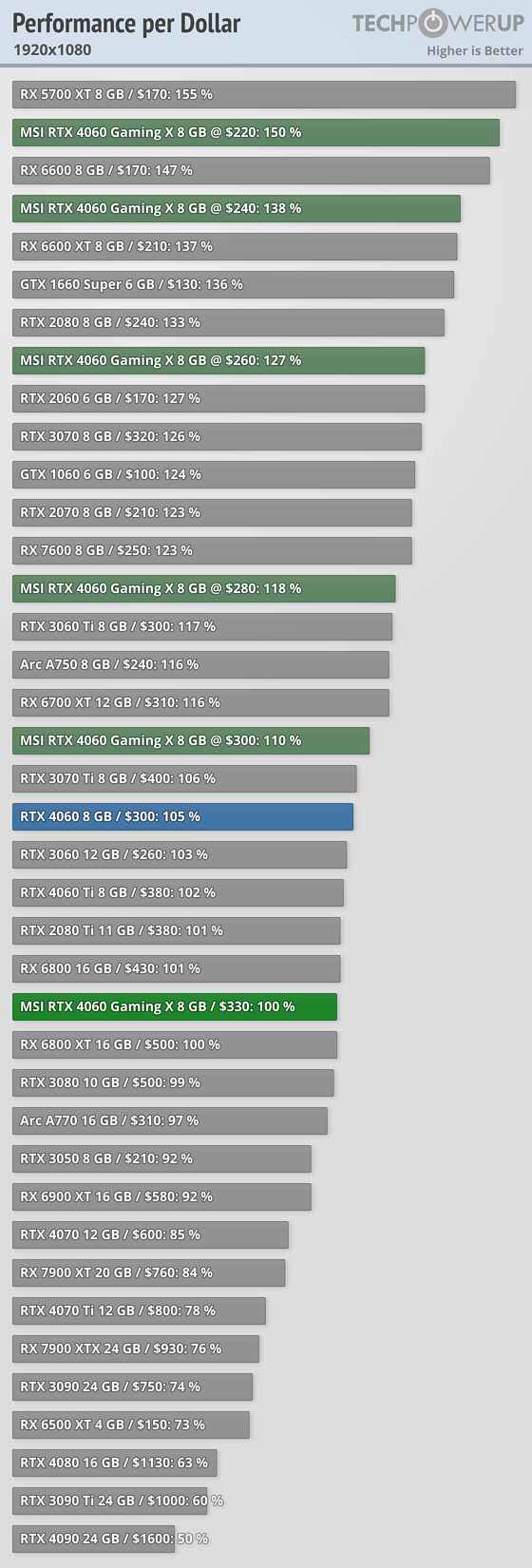

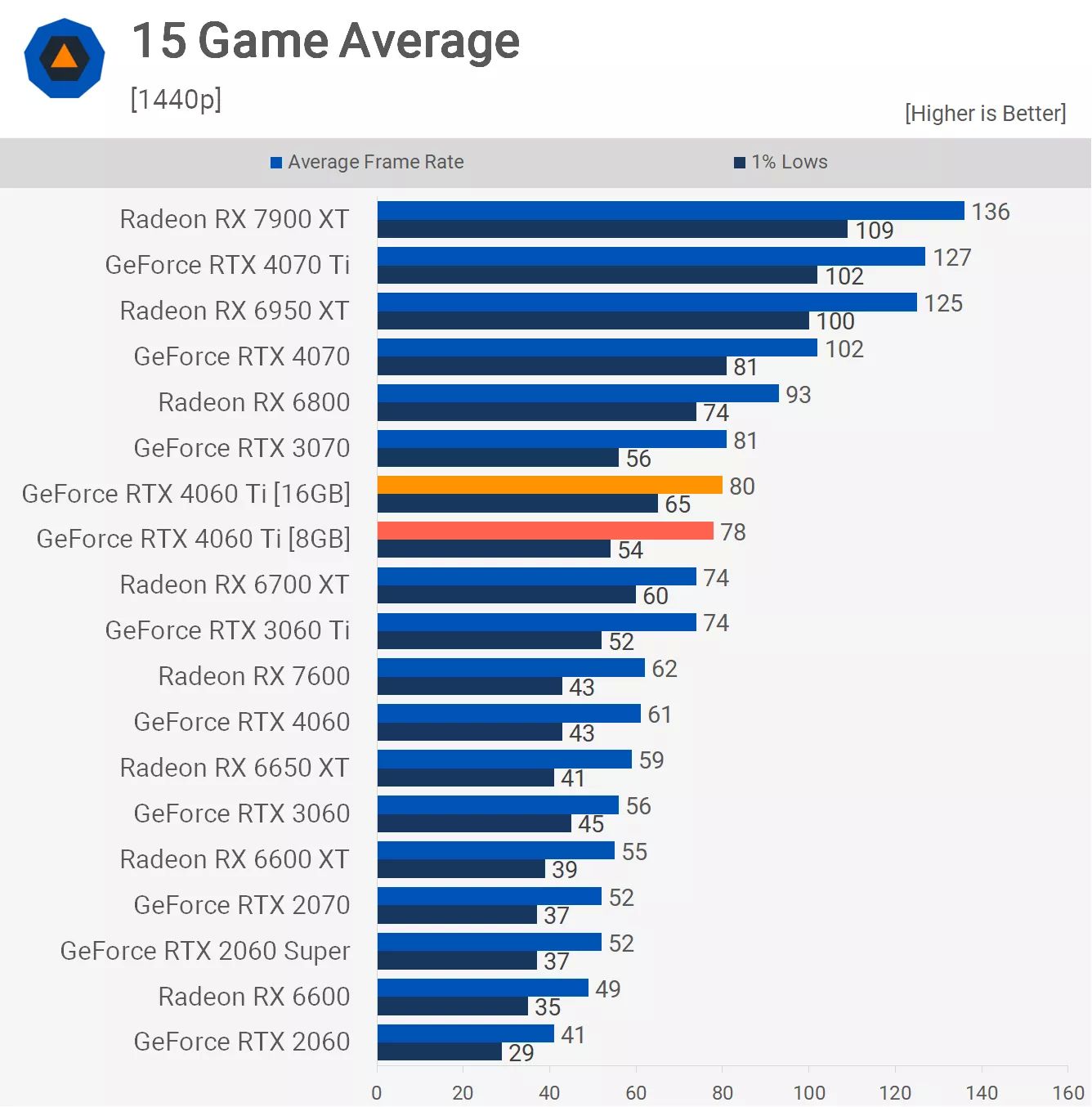

HUB makes the case for spending $100 extra for more VRAM

Better performance:

The Last Of Us

Resident Evil

Callisto Protocol

Plague Tale Requiem

Better Texture Quality:

Halo Infinite

Forspoken

Nvidia fanboys going to feel this in the morning.

Both 4060 Ti cards seem to be caught in a pincer attack by the 4060 and 4070.

If you go by value:

4060 > 4060 ti 8gb

4070 > 4060 ti 16gb

Maybe the pricing below might have worked:

4060 — $220

4060 ti 8gb — $280

4060 ti 16gb — $330

4070 — $400

4070 ti — $500

4080 — $700

4090 — $1000+
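The "go by value" ranking above boils down to price divided by performance. A minimal sketch, assuming you feed in average FPS from whichever review you trust; the FPS figures in the example dictionary are hypothetical placeholders, not benchmark results, and the helper names are my own.

```python
def dollars_per_fps(price_usd: float, avg_fps: float) -> float:
    """Price paid per frame per second of average performance (lower is better value)."""
    if avg_fps <= 0:
        raise ValueError("avg_fps must be positive")
    return price_usd / avg_fps


def rank_by_value(cards: dict) -> list:
    """Return (name, dollars_per_fps) pairs sorted from best to worst value.

    cards maps a card name to a (price_usd, avg_fps) tuple.
    """
    scored = [(name, dollars_per_fps(price, fps)) for name, (price, fps) in cards.items()]
    return sorted(scored, key=lambda pair: pair[1])


# Hypothetical FPS numbers, for illustration only.
example = {
    "4060 Ti 8GB": (400.0, 100.0),
    "4060 Ti 16GB": (500.0, 102.0),
}
for name, cost in rank_by_value(example):
    print(f"{name}: ${cost:.2f} per fps")
```

Any proposed price list can be checked the same way once real average FPS numbers are plugged in.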

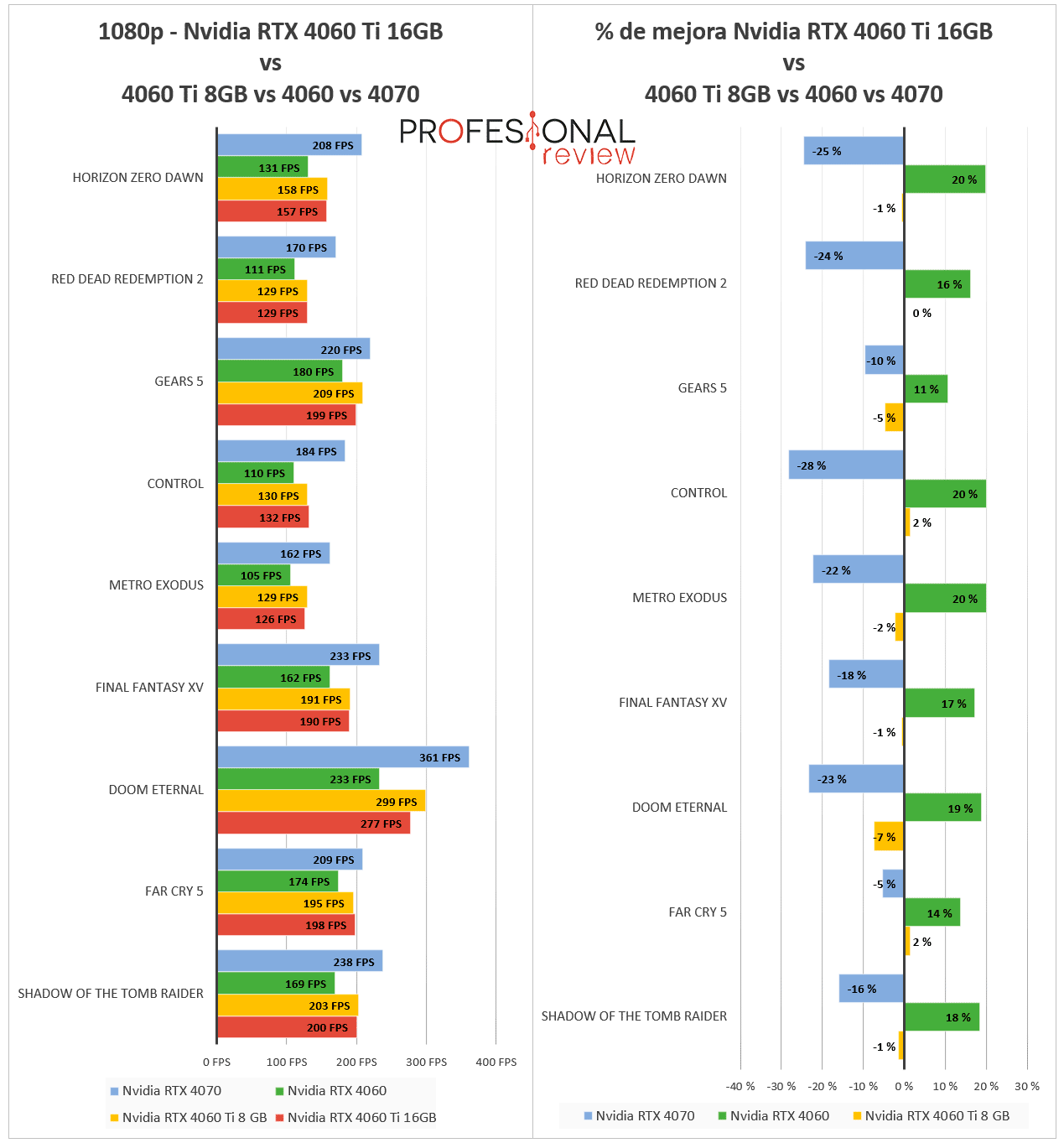

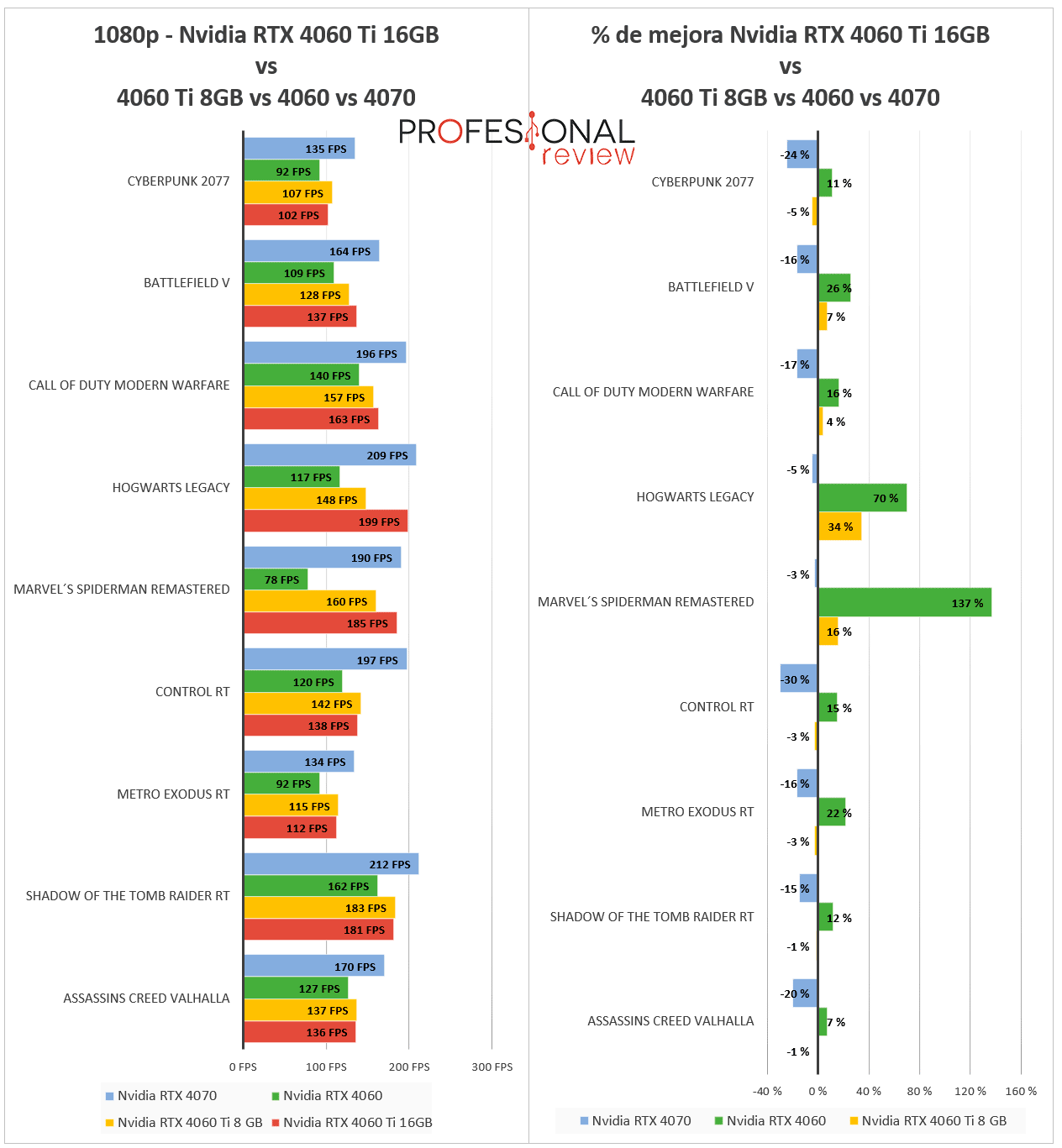

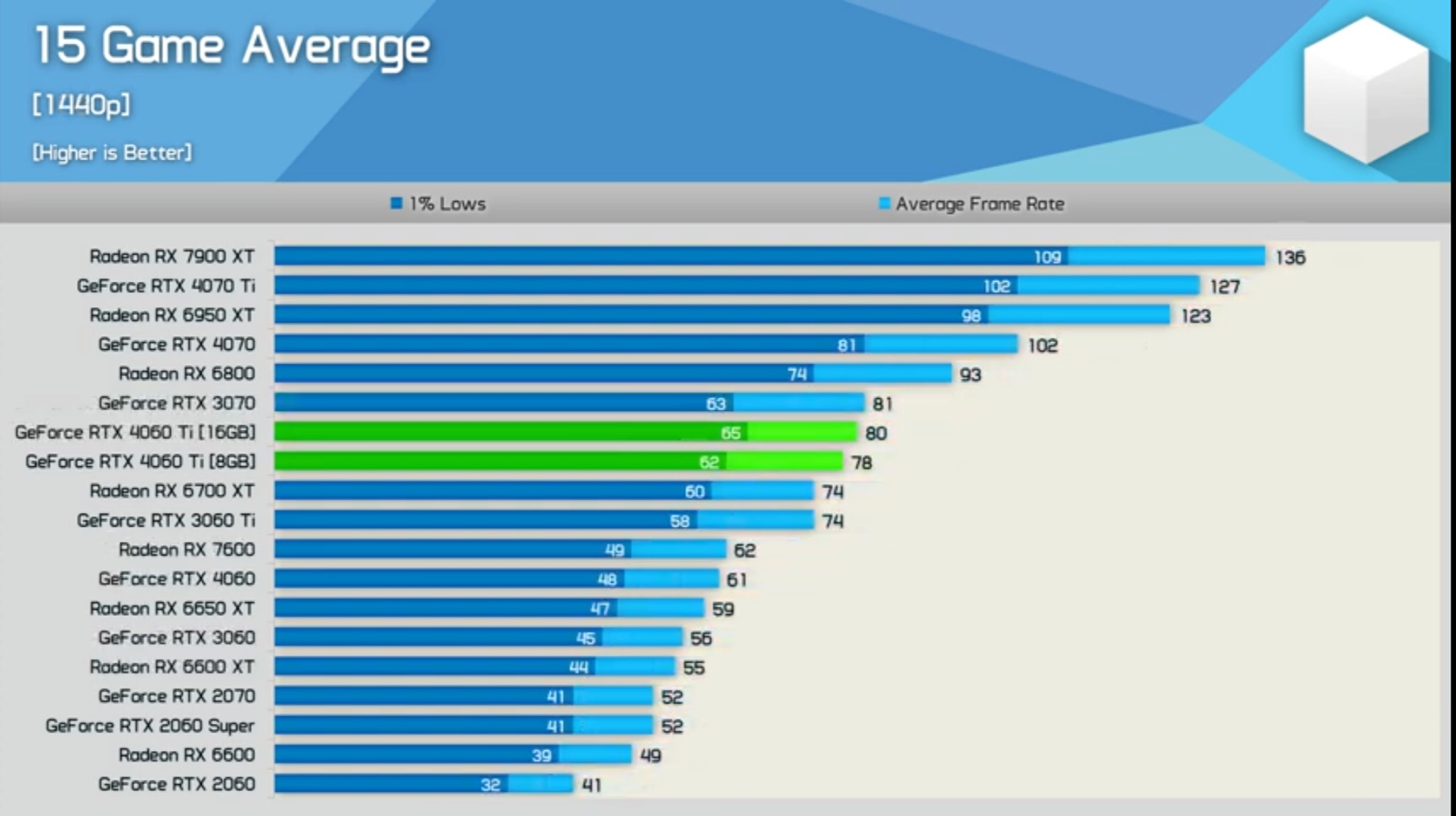

Considering the cards are within the margin of error of each other in average performance (both 1% lows and average fps) at 1080p, though I am sure you can find exceptions, you can really choose whatever narrative you want.

Even at 1440p, average performance is the same within the margin of error here.

Yellow bar is 8GB, red is 16GB:

https://www.profesionalreview.com/2023/07/21/nvidia-rtx-4060-ti-16gb-review/

Averaging over a large suite of games is probably not the right way to compare cards that differ only in VRAM: the moments when it matters a lot get washed out by all the times it does not.

In that review, the 4060 Ti 16GB was 1.9%, 1.6%, and 1.8% faster on average at 1080p, 1440p, and 2160p respectively, i.e. a tie on average even at 4K.
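To illustrate how a suite average can hide a few large gaps, here is a minimal sketch using a geometric mean of per-game FPS ratios (16GB divided by 8GB). The ratios are made up for illustration and do not come from the linked review; the helper names are my own.

```python
from statistics import geometric_mean


def suite_uplift_percent(ratios: list) -> float:
    """Geometric-mean uplift across a game suite, in percent."""
    return (geometric_mean(ratios) - 1.0) * 100.0


def largest_uplift_percent(ratios: list) -> float:
    """Biggest single-game uplift, in percent."""
    return (max(ratios) - 1.0) * 100.0


# Hypothetical suite: 23 games show no difference, 2 games run 25% faster with 16GB.
ratios = [1.0] * 23 + [1.25, 1.25]
print(f"suite average: +{suite_uplift_percent(ratios):.1f}%")
print(f"largest single-game gap: +{largest_uplift_percent(ratios):.1f}%")
```

With only two games out of 25 affected, a 25% gap shrinks to under 2% in the suite-wide figure, which is why per-game results matter for a VRAM-only comparison.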


4060 at $220? That's about half the price the 2060 launched at, in 2023 dollars.

DooKey

[H]F Junkie

- Joined

- Apr 25, 2001

- Messages

- 13,576

LOL. Glad you aren't in charge. Talk about out to lunch...

DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,970

Those games are old, so of course 8GB of VRAM won't be an issue. What matters is new games, because those are what owners of the 4060 Ti will have to deal with in the years to come.

The Last Of Us

Resident Evil 4

Callisto Protocol

Plague Tale Requiem

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,211


And as always, new games will be played at medium settings to get 60 fps with either card, so things will not really get worse for the 8 GB card relative to the 16 GB card.

In the games listed, either the 16 GB card was still under 60 fps, or it was using just over 8 GB (usually around 10 GB), meaning slight tweaks to settings would make a world of difference for the 8 GB card. Minor effort for $100, though both are equally bad for the money.

Here is a 1080p chart that includes those games when settings are at manageable levels for that performance tier (likely high to ultra):

Things are actually even closer at 1440p, since most of the games will have to be set to high for this tier regardless of VRAM, and some will even need medium.

I doubt this.

The expectation is usually that an xx60 Ti card is good for 1080p Ultra for at least a few years.

Also, to clarify, the benefit of the extra VRAM may not be visible in average fps charts, but rather in the quality of gameplay: stutter, texture pop-in, etc.

In a game like The Last of Us, which is notorious for sucking up VRAM, the two cards performed similarly in some scenarios. But the 16GB version eliminated the stutter and hitching experienced on the 8GB GPU and provided a slightly higher frame rate.

In Resident Evil 4, it was similar, as the game was utilizing 11GB of GPU memory at 1080p with max settings. This allowed the 16GB version to offer smoother gameplay than its baby brother, with a slightly higher frame rate.

The most obvious example of the benefit of the additional VRAM is in Forspoken, where the 8GB card features visible texture pop absent on the more expensive GPU.

https://www.extremetech.com/gaming/...ew-benchmarks-faster-than-8gb-model-still-too
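Stutter and hitching show up in the 1% lows much more than in the average. A minimal sketch computing both from a frame-time log in milliseconds; reviewers define "1% low" differently (some average the slowest 1% of frames, others use the 99th-percentile frame time), so the definition here is one assumed choice, and the frame times are synthetic.

```python
def average_fps(frame_times_ms: list) -> float:
    """Frames rendered divided by total elapsed seconds."""
    total_s = sum(frame_times_ms) / 1000.0
    return len(frame_times_ms) / total_s


def one_percent_low_fps(frame_times_ms: list) -> float:
    """FPS implied by the average of the slowest 1% of frames (one common definition)."""
    slowest = sorted(frame_times_ms, reverse=True)
    n = max(1, len(slowest) // 100)
    worst = slowest[:n]
    return 1000.0 / (sum(worst) / n)


# Synthetic runs: one perfectly smooth, one with ten 50 ms hitches in 1000 frames.
smooth = [10.0] * 1000
stuttery = [10.0] * 990 + [50.0] * 10

for label, run in [("smooth", smooth), ("stuttery", stuttery)]:
    print(f"{label}: avg {average_fps(run):.1f} fps, 1% low {one_percent_low_fps(run):.1f} fps")
```

Here the hitches cost the average only about 4%, while the 1% low collapses from 100 fps to 20 fps, which is the kind of difference an average-only chart will not show.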


OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,211

Though not quite the performance tier of the 4060 Ti, the 2060 Super still beats the 2060 12GB, even in newer titles.

Nvidia's gamble on game cache or whatever they call it was a bad move. And given the cost of implementing the clamshell VRAM setup to stuff 16GB on this card, they would have had better future performance running 12GB at 192-bit or even 10GB at 160-bit, while likely being cheaper.

Though I guess you don't make a trillion-dollar company without sticking it to the customer in some fashion.
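The arithmetic behind the 8/10/12/16GB options: each GDDR6 module occupies its own 32-bit channel, and a clamshell layout hangs two modules on each channel. A minimal sketch, assuming 2GB GDDR6 modules (the density implied by 8GB on the 4060 Ti's 128-bit bus); `vram_gb` is a hypothetical helper name.

```python
def vram_gb(bus_width_bits: int, module_gb: int = 2, clamshell: bool = False) -> int:
    """Total VRAM for a GDDR6 card.

    One module per 32-bit channel; clamshell mode puts two modules on each
    channel, doubling capacity without widening the bus.
    """
    if bus_width_bits <= 0 or bus_width_bits % 32 != 0:
        raise ValueError("bus width must be a positive multiple of 32 bits")
    channels = bus_width_bits // 32
    modules = channels * (2 if clamshell else 1)
    return modules * module_gb


for bus, clam in [(128, False), (128, True), (160, False), (192, False)]:
    layout = "clamshell" if clam else "single-sided"
    print(f"{bus}-bit, {layout}: {vram_gb(bus, clamshell=clam)} GB")
```

With 2GB modules, a 128-bit bus only offers 8GB or, in clamshell, 16GB; 10GB and 12GB need a 160-bit or 192-bit bus.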


DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,970

The Last Of Us ran around the mid 50s at 1440p Ultra on the 16GB 4060 Ti, while the 8GB got the mid 40s. Resident Evil 4 would get over 100 fps at 1080p Max on the 16GB, while on the 8GB it was in the mid 80s. Callisto Protocol was a shit show on the 8GB model, getting as low as 30 fps, while the 16GB model can achieve nearly 100 fps at 1080p Ultra RT. Plague Tale Requiem was just as bad on the 8GB, seeing around 20 fps in some areas, while the 16GB was comfortable in the 50s. Nothing here is medium settings at 60 fps, nor should it be at these prices.

Most of those games listed are old and therefore will dilute the results. Again, look at new games, not old games. The only reason this is an issue is that Nvidia is charging a hefty $100 fee for the extra 8GB, on top of the crazy $400 asking price for the 4060 Ti. The card doesn't need 16GB either; 10GB or 12GB would do, but because of the limiting 128-bit bus, Nvidia has to go with 8GB or 16GB. If this were a 192-bit bus, it could be 12GB, which would work just as well as 16GB for this card.

HUB makes the case for spending $100 extra for more VRAM

Better performance:

The Last Of Us

Resident Evil

Callisto Protocol

Plague Tale Requiem

Better Texture Quality:

Halo Infinite

Forspoken

No, they made the case for 16GB of VRAM being the baseline for a $500 GPU, not for spending $500 on that piece of crap, which was their actual conclusion in the video.

That's about half the price the 2060 launched at, in 2023 dollars.

Yeah, but the 4060 is really a 4050 considering the traditional generational performance lift for a 60-class card, so it makes sense when you think about it that way.

Though not quite the performance tier of the 4060 Ti, the 2060 Super still beats the 2060 12GB, even in newer titles.

Nvidia's gamble on game cache or whatever they call it was a bad move. And given the cost of implementing the clamshell VRAM setup to stuff 16GB on this card, they would have had better future performance running 12GB at 192-bit or even 10GB at 160-bit, while likely being cheaper.

Though I guess you don't make a trillion-dollar company without sticking it to the customer in some fashion.

It’s not a gamble, it’s a design lockout. Nvidia’s workstation silicon is the same as their gaming silicon, the only difference being the memory, firmware, and drivers.

They intentionally put less memory on the consumer cards to make them incapable of stepping on the toes of their workstation brethren to protect their artificial market segmentation.

Or other new games like Hogwarts and the latest Spider-Man remaster in that list. I feel like you just repeated exactly what I said.

Those games are old, so of course 8GB of VRAM won't be an issue. What matters is new games, because those are what owners of the 4060 Ti will have to deal with in the years to come.

The Last Of Us

Resident Evil 4

Callisto Protocol

Plague Tale Requiem

True; looking back today, the 1060 to 2060 jump was quite impressive (the 2060 was beating the 1070 Ti and roughly matching a 1080).

It would still be extremely aggressive to launch a 4060 much cheaper than the 7600 (the cheapest 6600 right now is around $210 on Newegg, and the cheapest 7600s are $270).

Calling it a 4050 with a $250 price tag would probably have worked (that's the 1060 3GB's MSRP in today's dollars); the 3060 has yet to go that low.
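A rough way to check the "in today's dollars" figures in this thread is to scale the launch MSRP by a ratio of consumer price index values. A minimal sketch, assuming US CPI-U annual averages of 240.007 (2016), 255.657 (2019), and 304.702 (2023), plus launch MSRPs of $199 for the GTX 1060 3GB and $349 for the RTX 2060; verify the CPI values against the BLS before relying on them.

```python
# Assumed US CPI-U annual averages; double-check against BLS data.
CPI_U = {2016: 240.007, 2019: 255.657, 2023: 304.702}


def in_later_dollars(price: float, from_year: int, to_year: int) -> float:
    """Scale a nominal price by the ratio of CPI values between two years."""
    return price * CPI_U[to_year] / CPI_U[from_year]


gtx_1060_3gb = in_later_dollars(199.0, 2016, 2023)  # assumed $199 launch MSRP
rtx_2060 = in_later_dollars(349.0, 2019, 2023)      # assumed $349 launch MSRP

print(f"GTX 1060 3GB in 2023 dollars: ${gtx_1060_3gb:.0f}")
print(f"RTX 2060 in 2023 dollars: ${rtx_2060:.0f}")
print(f"$220 as a share of the 2060's adjusted price: {220 / rtx_2060:.0%}")
```

Under those assumptions the 1060 3GB lands around $253 and the 2060 around $416, so a $220 4060 would be a bit over half the 2060's adjusted launch price. Annual averages are a coarse proxy for a specific launch month.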


GoodBoy

2[H]4U

- Joined

- Nov 29, 2004

- Messages

- 2,776

HUB makes the case for spending $100 extra for more VRAM

Better performance:

The Last Of Us

Resident Evil

Callisto Protocol

Plague Tale Requiem

Better Texture Quality:

Halo Infinite

Forspoken

Are the poorly performing games on a card with 8GB all console ports?

HU claims that the games being "texture intensive", with "higher quality textures", is the reason the extra VRAM is needed. There could be some truth to that if a lot of texture swapping is happening.

But could it also simply be that they are shitty ports of console games?

What are the games' install sizes? I know Jedi Survivor was 140GB. Doom Eternal is 95GB. We can roughly guesstimate texture quality from a game's install size: larger install ~= better/more textures.

Doom runs at 175 fps with 144 fps 1% lows, smooth and without stutter, at the max quality setting. It's pretty dang big at 95GB, and it runs amazingly on those cards with 8GB of VRAM and 128-bit memory buses. Better game engine? I have zero doubt that id puts out some of the best-written game engines for PC and has done so for decades. So I expect every other game (of the same size/complexity/texture intensiveness) to be somewhat slower than one of id's games. But less than HALF the frames?

You can buy a card with more VRAM if you want to play, at max settings, the few games from the guys who just aren't as good at coding whatever it is that makes a game run perfectly on a potato. Or just turn quality down from Ultra to High.

It's bogus that the reviewer doesn't do the extra steps and find those playable points, instead they are "selling" you on their opinion. They could fully test, find playable settings, and let the viewer come to their own conclusion while offering their opinion, but nope. You need to read between the bias. (Applies to all reviewers, not singling out HU). If it's a review that doesn't cover the game you like to play, look elsewhere.

"Developers don't want to optimize.." So that is the video cards' fault?

"It's sad this isn't the default configuration of a 4060Ti" So having a cheaper, less performant choice is bad? (I think he was bitching about the price. Turns out he doesn't work for the GPU companies, therefore doesn't get to set prices. If the price is bad, the market will sort that out.)

I agree that games that will use more vRam is a good thing, but I don't think it should excuse a game company of poor optimization. Games in the list made those companies hundreds of millions of dollars from the PC game sales, but they can't be bothered to properly optimize a game and the engine like iD does? I call BS on that one.

If you play Fortnite/CS:go/Rainbow6/Valorant/Apex, any of those cards will do, and will do so for years to come. Everything else, just adjust the quality slider as required. If you gotta have it maxed, welcome to PC gaming, don't buy any of these low end cards. Yup, you should plan to spend $800 on the gpu. We don't play on overgrown N64's... well not most of us


xDiVolatilX

2[H]4U

- Joined

- Jul 24, 2021

- Messages

- 2,546

The clown Steve from Hardware Unboxed simultaneously slams the card yet says its performance is significantly better lol.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,841

The amount of goalpost shifting as to what makes up a game review just to avoid calling the 4060ti bullshit is amazing.

"The games are too new/ bad console ports"

"The cards are above/under X fps target, so it doesn't matter anyways"

"Lazy devs just want to use more vram, doesn't count"

"People shouldn't expect max textures at 1080p from a $400 gpu"

Whatever guys, enjoy the future of shit hardware.


OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,211

One is a turd and one is a significantly better turd. Those statements are not necessarily contradictory.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,211

Many confuse lazy development with incompetent development. I'm sure they are trying; there are just some... hardware shortcomings.

While the new GPU requirements we have seen this year are a sign of things to come, they are more a product of ESG than of mind-blowing new visuals. Below is what developers like Focus Entertainment (Plague Tale Requiem) prioritize rather than a competent workforce.

Similar stories can be found in the mission statements of the other 'unoptimized' new games. Fortunately, there are a few game developers that still have their priorities straight.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,263

Though not quite the performance tier of the 4060 Ti, the 2060 Super still beats the 2060 12GB, even in newer titles.

Nvidia's gamble on game cache or whatever they call it was a bad move. And given the cost of implementing the clamshell VRAM setup to stuff 16GB on this card, they would have had better future performance running 12GB at 192-bit or even 10GB at 160-bit, while likely being cheaper.

Though I guess you don't make a trillion-dollar company without sticking it to the customer in some fashion.

The 2060 Super had 16 more ROPs than the 2060 12GB. The GPU on both the 4060 Ti 8GB and 16GB is exactly the same. It's not an apples-to-apples comparison.

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,211

Is a ROP the same as a shader processor? This list shows 120, 136, and 136 for the 6GB 2060, 12GB 2060, and 2060 Super, respectively.

https://en.m.wikipedia.org/wiki/GeForce_20_series

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,653

The 2060 12GB and 2060 Super are the same GPU core, but the 12GB card's memory bus is 192-bit (like the 2060) instead of 256-bit (like the 2060 Super).

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined

- Nov 24, 2021

- Messages

- 1,211

That's what I thought, so it is a direct comparison of bandwidth vs. capacity.
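Peak memory bandwidth is the bus width in bytes multiplied by the per-pin data rate, which is why a narrower bus costs bandwidth even when capacity goes up. A minimal sketch, assuming reference memory specs of 14 Gbps GDDR6 for the 2060 12GB and 2060 Super and 18 Gbps for the 4060 Ti (check a specific board's spec sheet); the helper name is my own.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate in Gbps."""
    return bus_width_bits / 8 * data_rate_gbps


# Assumed reference specs: (bus width in bits, GDDR6 data rate in Gbps).
cards = {
    "RTX 2060 12GB": (192, 14.0),
    "RTX 2060 Super": (256, 14.0),
    "RTX 4060 Ti": (128, 18.0),
}
for name, (bus, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gb_s(bus, rate):.0f} GB/s")
```

This counts raw DRAM bandwidth only; on-die cache (presumably the "game cache" mentioned earlier in the thread) is not captured by this number.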

TheHig

[H]ard|Gawd

- Joined

- Apr 9, 2016

- Messages

- 1,357

All my opinion of course.

The problem I have with the 4060/ti is the price for what it is.

It’s bandwidth-starved, even with 16GB of VRAM, for way too much money.

It has all the “nice to haves” of the 4000 series, my preference being power efficiency. Frame gen is neat, but I don’t care about it personally. DLSS works well enough on much cheaper, higher-bandwidth cards such as the 3060 Ti, and I love discounted used cards. Others may want only new, so pay up, I guess?

A new 8GB GPU absolutely has to come in under $300 in 2023, from anyone: AMD, Nvidia, or Intel. I’m not doing it.

At this point, unless a card is niche, like a low-profile or single-fan card for SFF systems, I’m not looking at anything brand new with less than 12GB of VRAM.

Conclusion: for me, at present, the 4060 series cards are a hard pass.


And? The price is also significantly higher.

Considering the 3060 ti can be found at $350

https://www.newegg.com/zotac-geforce-rtx-3060-ti-zt-a30610h-10mlhr/p/N82E16814500518

Both 4060 Ti models are quite expensive for what they are; the price will have to come down for them to move.


NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,841

How quickly Nvidia has turned PC users into console users. They only want to talk about weaker hardware using upscaling.

Oh, so you must be one of the Nvidia shills he always talks about, who says the correct way to FPS-review their products is with DLSS enabled.

And what was said is the card is better than the 4060 Ti 8GB performance wise, that the 16GB card should be the $400 card, and not to buy it because it cost too much for what it is. Just because it's an OK card doesn't mean it's a good value and something to recommend buying.

TheSlySyl

2[H]4U

- Joined

- May 30, 2018

- Messages

- 2,704

I'm curious how this compares to my old GTX 1080ti. I feel like it would be way more efficient, especially for Stable Diffusion.

Darunion

Supreme [H]ardness

- Joined

- Oct 6, 2010

- Messages

- 5,375

Yeah, I'm on a regular 1080 myself. Kind of wanting to see if they bump the price down on this after a hot minute, and then jump on board.
