ZeroBarrier

Gawd

- Joined: Mar 19, 2011
- Messages: 1,016

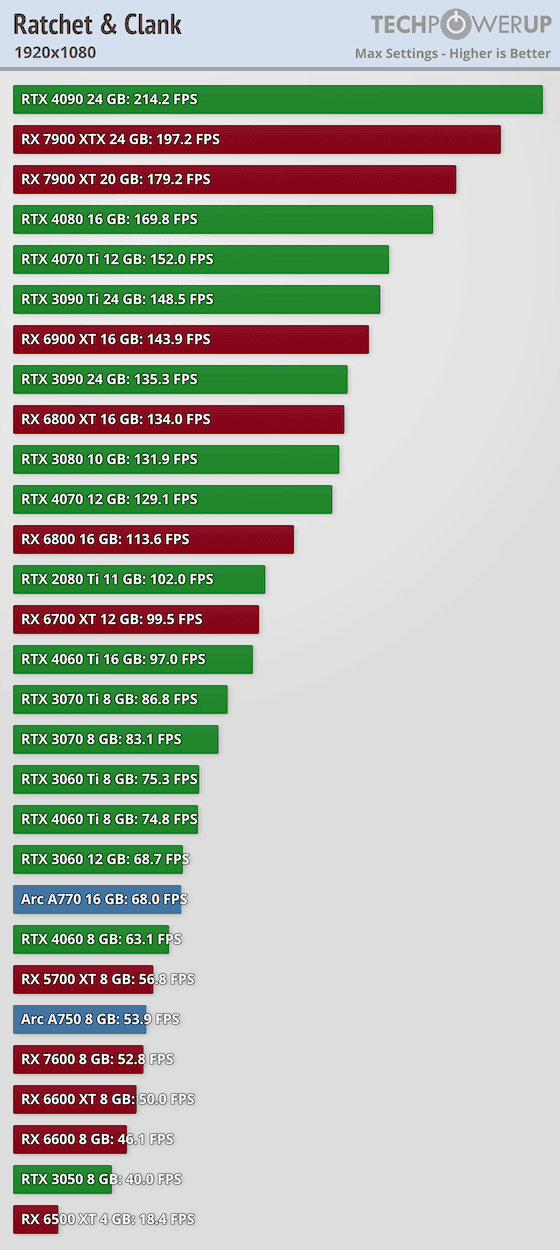

Last time I checked, AMD has nothing to compete against Nvidia's top end. Like, not even remotely close in performance.

How quickly Nvidia has turned PC users into console users. They only want to talk about weaker hardware using upscaling.

I don't like this cycle from either Nvidia or AMD, but to suggest Nvidia is the company with the weaker hardware is ludicrous at best and fanboy trolling at worst.
