erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 11,096

This is cool

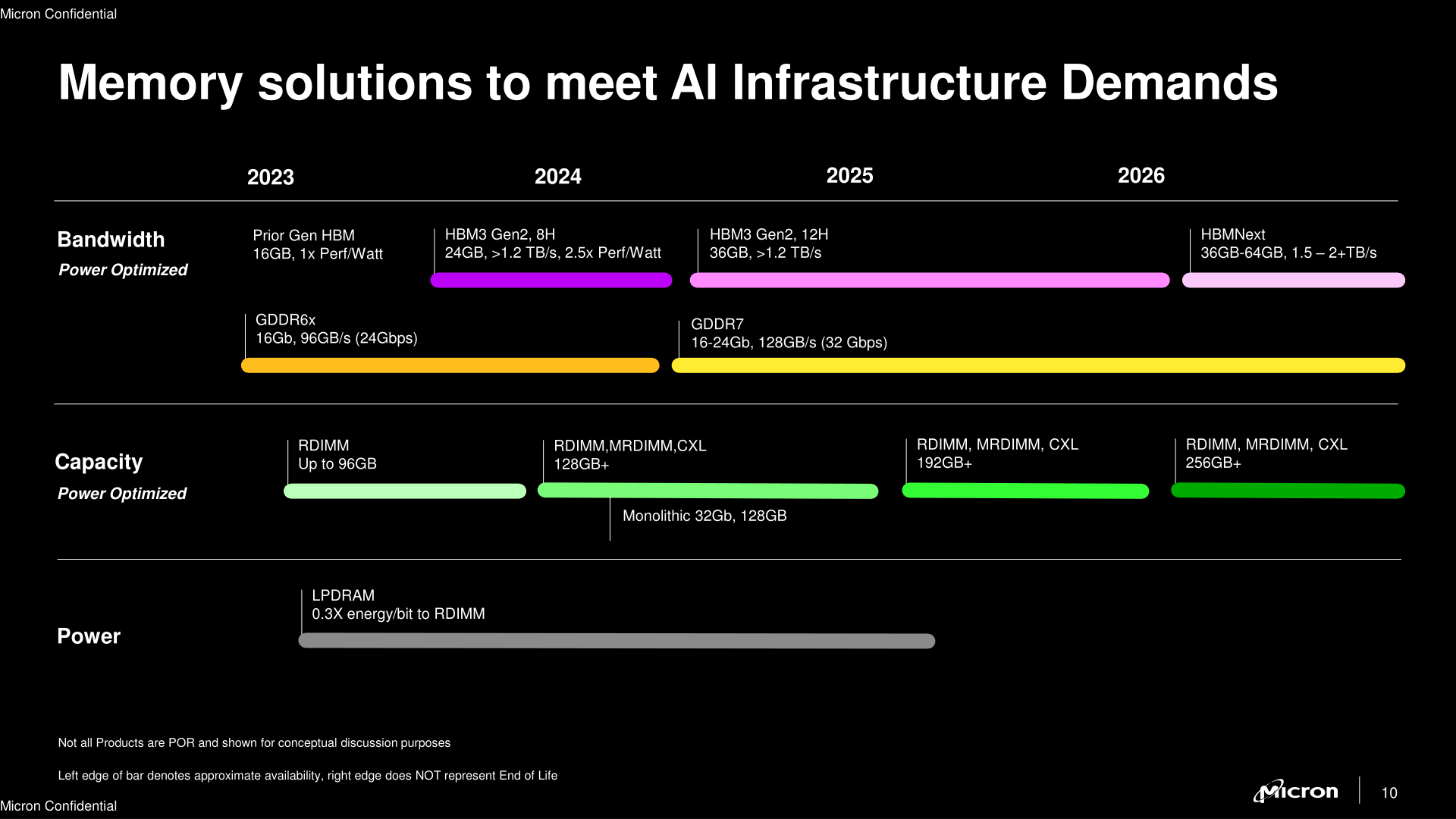

“Cadence already has its GDDR7 verification solution, so adopters can ensure that their controllers and physical interfaces will be compliant with the GDDR7 specification eventually.”

Source: https://www.tomshardware.com/news/micron-to-introduce-gddr7-memory-in-1h-2024

