spacediver

2[H]4U

- Joined: Mar 14, 2013
- Messages: 2,715

Better color

CRTs have a larger color gamut than even the best IPS LCDs.

Why would you make this claim?



How does the vertical height of the FW900 compare to an F520? Are they the same? Also, check out my CRT Master Race subreddit here: http://www.reddit.com/r/gloriousCRTmasterrace

Why would you make this claim?

Because he is a troll and most likely took too much of his own acid (lol check dat reddit history)?

I have a question. I have two FW900 monitors side by side. When one monitor is running and I power on the second monitor next to it, the first monitor flickers briefly, almost like a mild degauss effect on the screen. What causes this? Afterwards everything looks fine on both screens; it just concerns me. Could running them next to each other damage one of my units?

Because I have a CRT next to an IPS LCD and the colors tend to look better on the CRT. Am I wrong about this?

Do let us know if it actually goes higher than 165 MHz. I think the fastest HDFury only does about 210 MHz, or 1080p @ 72Hz.
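For anyone wondering how a pixel-clock figure maps to a resolution and refresh rate: the clock a mode needs is the total pixels per line times the total lines per frame (both including blanking) times the refresh rate. The sketch below uses the CEA-861 1080p totals of 2200 x 1125 purely as an assumed example; CRT-oriented GTF/CVT timings typically use more blanking, so real figures for the same visible resolution can be higher.

```python
def pixel_clock_mhz(h_total: int, v_total: int, refresh_hz: float) -> float:
    """Pixel clock = pixels per line * lines per frame * frames per second.

    h_total and v_total include blanking (front porch, sync, back porch),
    not just the visible resolution.
    """
    return h_total * v_total * refresh_hz / 1e6


# Assumed example: CEA-861 1080p totals (2200 x 1125).
# GTF/CVT timings for a CRT use different blanking and give different totals.
for hz in (60, 72):
    print(f"1920x1080 @ {hz} Hz -> {pixel_clock_mhz(2200, 1125, hz):.1f} MHz")
```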


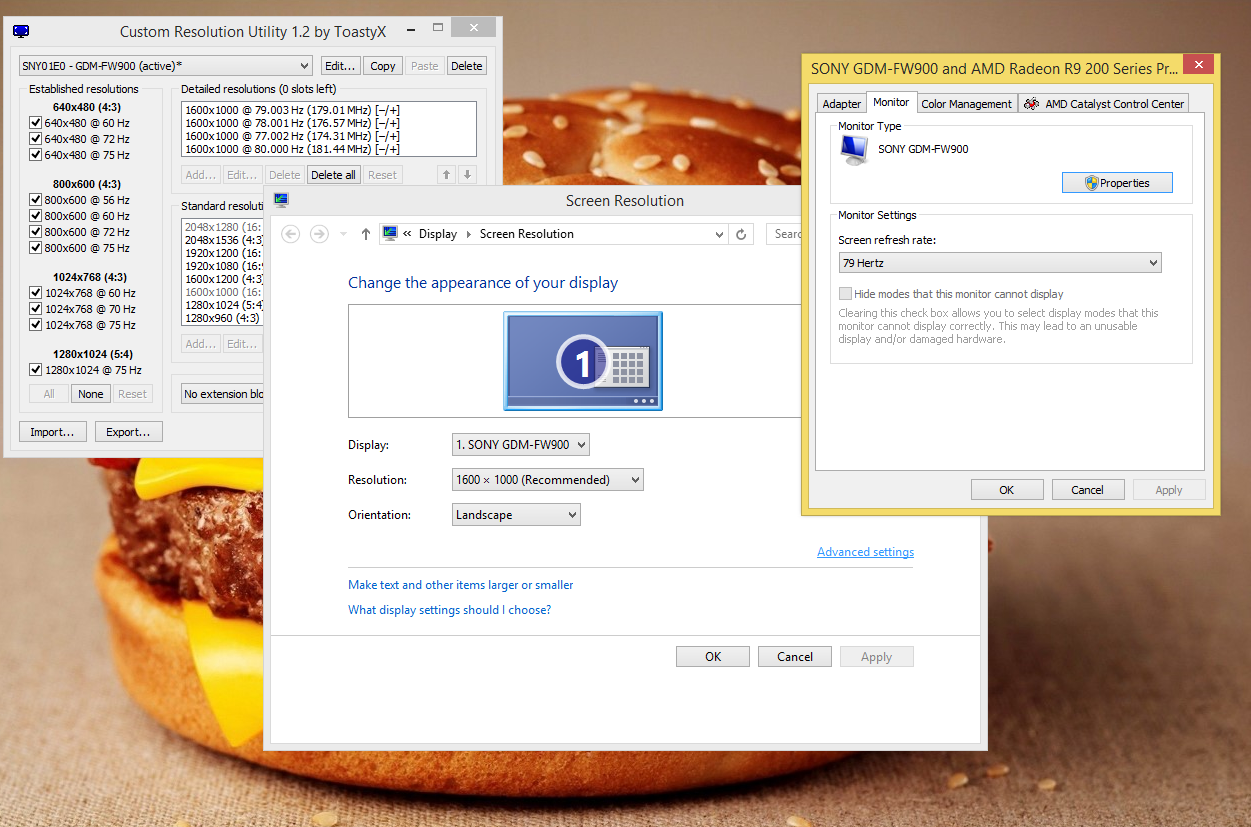

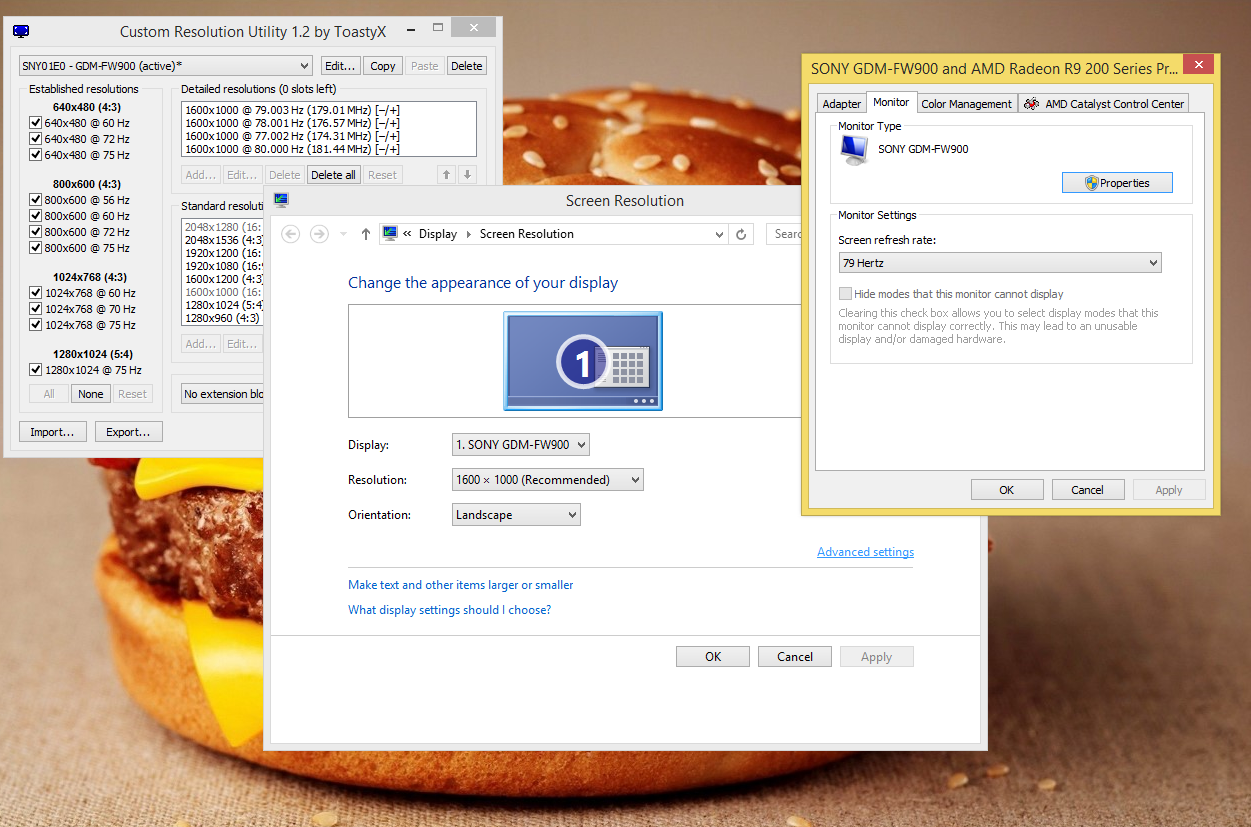

soooo, the adaptor arrived today and, well... I can't say I'm surprised, but I was hoping for more.

It maxes out at 179 MHz; anything beyond that pixel clock isn't shown in the Windows resolution settings. The image itself still looks just as good as it did on my HD 7870 with native DVI-I, and it doesn't have any (noticeable) input lag, so there's that at least.

But using my FW900 again makes me realize how much better it feels than my 120Hz IPS: even at 70Hz it's so much clearer, and the movement feels so direct (I tried it with F.E.A.R.).

I had completely forgotten about that (and also about the 120-watt power consumption, according to my electricity usage monitor, lol).


I have been using the pixel clock patcher since I got my Sony 2 years ago (and on my LCD, to "overclock" it to 96Hz), but it doesn't change anything. Modes above 179 MHz still don't show up.
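To get a feel for what a 179 MHz pixel-clock ceiling like the one reported above allows, you can invert the pixel-clock formula and ask for the highest whole refresh rate that fits. The mode totals below are hypothetical (visible area plus an assumed amount of blanking); the real totals depend on the timing formula or custom mode you use, so treat the numbers as rough.

```python
import math


def max_refresh_hz(clock_limit_mhz: float, h_total: int, v_total: int) -> int:
    """Highest whole refresh rate (Hz) whose pixel clock fits under clock_limit_mhz."""
    return math.floor(clock_limit_mhz * 1e6 / (h_total * v_total))


# Hypothetical totals (visible area plus assumed blanking), not measured timings.
MODES = {
    "1680x1050": (2256, 1089),
    "1920x1200": (2592, 1245),
    "2304x1440": (3120, 1490),
}

ADAPTER_LIMIT_MHZ = 179  # ceiling reported in the thread for this adapter

for name, (h_total, v_total) in MODES.items():
    print(f"{name}: up to {max_refresh_hz(ADAPTER_LIMIT_MHZ, h_total, v_total)} Hz")
```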

yea... that's a steal

The guy used them for Photoshop and all that stuff; he bought them brand new when they came out and apparently spent $1200 each.

I was testing the refresh rates and resolutions they can do: I got one to run 2560x1440 @ 60Hz, and it's crystal clear; text looked sharper than on most LCD panels. I couldn't get 2560x1600 working, though.

In the past, we ran some torture-resolution trials with the GDM-FW900, achieved 2560x1440 and 2560x1600 @ 60Hz, and were able to have the unit maintain them. Again, these resolutions and timings ARE NOT RECOMMENDED! They only illustrate the range of the unit's bandwidth.

Hope this helps...

Sincerely,

Unkle Vito!
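One way to see why those torture resolutions sit near the edge of the unit is to compute the horizontal scan rate (lines per frame, including blanking, times refresh rate) and compare it with the monitor's scan ranges. The 30-121 kHz horizontal and 48-160 Hz vertical limits below are the commonly quoted GDM-FW900 spec-sheet figures, treated here as an assumption (check your manual), and the vertical totals are hypothetical. Being inside these ranges does not make a mode recommended: the warning above still applies, and pixel clock (video bandwidth) is a separate limit.

```python
def h_scan_khz(v_total: int, refresh_hz: float) -> float:
    """Horizontal scan rate = lines per frame (incl. blanking) * frames per second."""
    return v_total * refresh_hz / 1000


# Assumed limits: commonly quoted GDM-FW900 spec-sheet values; verify for your unit.
H_SCAN_KHZ = (30.0, 121.0)
V_REFRESH_HZ = (48.0, 160.0)


def within_scan_limits(v_total: int, refresh_hz: float) -> bool:
    """True if both the horizontal and vertical rates fall inside the assumed ranges."""
    h = h_scan_khz(v_total, refresh_hz)
    return (H_SCAN_KHZ[0] <= h <= H_SCAN_KHZ[1]
            and V_REFRESH_HZ[0] <= refresh_hz <= V_REFRESH_HZ[1])


# Hypothetical vertical totals (active lines plus roughly 3-4% blanking).
for name, v_total in (("2560x1440", 1490), ("2560x1600", 1656)):
    print(f"{name} @ 60 Hz: {h_scan_khz(v_total, 60):.1f} kHz, "
          f"in range: {within_scan_limits(v_total, 60)}")
```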

You can actually do 4K on an FW900 at 60Hz interlaced. AMD cards won't let you go over a 2000-pixel vertical resolution in interlaced mode, though. Somebody should give it a shot on Nvidia.
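The reason an interlaced 4K mode can be driven at all is, presumably, that each field draws only half the lines, so the horizontal line rate is halved for the same field rate. This sketch assumes a hypothetical 2250-line total for a 3840x2160 frame and ignores the half-line offset real interlaced timings use; whether the horizontal scan rate is the binding limit for a given monitor is also an assumption.

```python
def line_rate_khz(v_total_frame: int, field_rate_hz: float, interlaced: bool) -> float:
    """Horizontal line rate for a mode.

    Progressive: every pass draws all v_total_frame lines.
    Interlaced: each field draws half the lines (odd or even), so the line
    rate is halved for the same field rate. Half-line offsets are ignored.
    """
    lines_per_pass = v_total_frame / 2 if interlaced else v_total_frame
    return lines_per_pass * field_rate_hz / 1000


# Hypothetical 2250-line total for a 3840x2160 frame (2160 active + assumed blanking).
print(line_rate_khz(2250, 60, interlaced=False))  # -> 135.0 (2160p60)
print(line_rate_khz(2250, 60, interlaced=True))   # -> 67.5 (2160i, 60 fields/s)
```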

Uncle Vito, can you give us an idea of what parts of the monitor are actually put under more stress as a result of non-recommended timings?

I wouldn't bother with interlaced on a CRT designed from the start for progressive scan. The flicker is even worse, because each line is effectively refreshed at half the rate, and CRT monitors are a lot lower-persistence than TVs were.

I tried the interlaced resolutions on my S3 card just to see, and they looked like crap. This coming from one of the worst 2D quality cards of all time - I could see the difference in interlaced resolutions, even with a static screen.
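The half-rate point can be written out: in an interlaced mode each field draws only the odd or only the even lines, so any given line is redrawn once every two fields.

$$
f_{\text{line}} = \frac{f_{\text{field}}}{2}, \qquad f_{\text{field}} = 60\ \text{Hz} \;\Rightarrow\; f_{\text{line}} = 30\ \text{Hz}
$$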

Any hints on how to try interlaced? As a quality geek I probably wouldn't use it at all, but it might be cool for taking screenshots like the ones I posted above.


Question for the CRT gods. I just picked up a LaCie Blue IV 22" and the colors seem dull; I can't get the intense white I get with my LG34 or Korean 27. I'm not sure if this is a limitation of the CRT's 110-150 nits vs. my LCD's 300 nits. Whites look very dull; on my LCD they are insane.

You are not comparing apples to apples... You CANNOT compare a CRT to an LCD...

LCDs are insanely color-inaccurate, and they over-brighten and over-saturate the colors.

UV!

Yes, you can; they both show images.

The backlight can be turned down to CRT levels.

Nowadays, even midrange LCDs have gamuts closer to Rec. 709 than CRTs do. As to which gamut is better, well, most content on computers is designed for Rec. 709.

What LCDs will never match are the black levels and the viewing angles.
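One rough way to put numbers on the gamut comparison is to compare the areas of the primaries' triangles in CIE 1931 xy. The Rec. 709 primaries are the standard values; SMPTE-C primaries are used here only as an assumed stand-in for typical CRT phosphors, since an individual tube's measured primaries will differ. Area in xy is a crude metric (the diagram is not perceptually uniform, and area says nothing about how much of the target gamut is actually covered), so this is a sketch, not a verdict.

```python
def xy_triangle_area(primaries):
    """Area of the triangle spanned by three (x, y) chromaticities (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


# Rec. 709 / sRGB primaries (R, G, B) in CIE 1931 xy.
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
# SMPTE-C primaries: an assumed stand-in for typical CRT phosphors.
SMPTE_C = [(0.630, 0.340), (0.310, 0.595), (0.155, 0.070)]

ratio = xy_triangle_area(SMPTE_C) / xy_triangle_area(REC709)
print(f"SMPTE-C area relative to Rec. 709: {ratio:.3f}")
```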


So Goodwill had a working Gateway VX920 for $10. I passed on it because it is a 19" and I already have two Dell P991's and one Dell P992. But apparently the Gateway used to retail for almost $1200? Is this monitor in a higher league than my Trinitrons? Should I go back and get it?

LCDs can be color calibrated though, can't they? I've seen a ton of reviews of various models reporting excellent results after calibration, although I know this isn't something the average user can do without the required calibration hardware.
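To illustrate one piece of what calibration does with the measurements from that hardware: if a colorimeter shows the panel follows a power-law response with some native gamma, a 1D lookup table can remap input levels so the panel behaves like a target gamma. This is a minimal sketch assuming a pure power-law display and a hypothetical measured gamma of 2.4; real calibration software measures many patches and usually also corrects white point and per-channel grayscale, so this only shows the gamma-correction idea.

```python
def correction_lut(native_gamma: float, target_gamma: float, size: int = 256) -> list[int]:
    """Build a 1D LUT that makes a power-law display with native_gamma
    behave like one with target_gamma.

    Display: luminance = v ** native_gamma. We want luminance = v ** target_gamma,
    so we feed it v' = v ** (target_gamma / native_gamma).
    """
    max_code = size - 1
    exponent = target_gamma / native_gamma
    return [round(((i / max_code) ** exponent) * max_code) for i in range(size)]


# Hypothetical measurement: native gamma 2.4, target gamma 2.2.
lut = correction_lut(native_gamma=2.4, target_gamma=2.2)
print(lut[0], lut[128], lut[255])
```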