sharknice

2[H]4U

- Joined: Nov 12, 2012
- Messages: 3,762

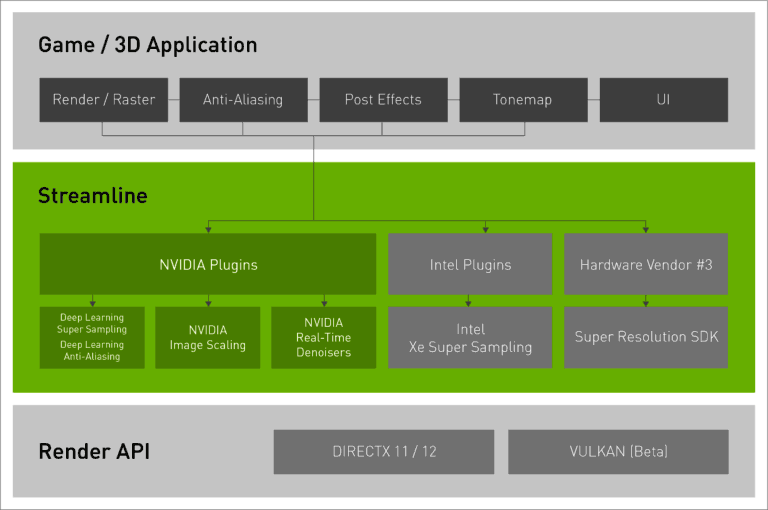

> RT is a feature of the engine; you don't just turn it on... developing for it still requires the developer to do the work. It's not like RT needs zero developer input or something. DLSS again may be supported by Unreal 4... but it's not automatic.
>
> I know this might be a shock to people... a great many developers DON'T like DLSS. It's not that it's bad tech... it just needs to be implemented for one specific company's hardware in one market. FSR is easier to implement and works on consoles and PC regardless of the GPU installed (including not just Nvidia but also Intel).
>
> I don't see them doing anything here that Nvidia hasn't done x100 in the past. There was never a reason for developers not to include FSR either... but they would often skip the day of work and zero capital investment needed because they were "The Way It's Meant to Be Played" money takers. All they did here was refuse to spend the weeks it would take to implement DLSS... which is galling when FSR works with Nvidia hardware just fine.

DLSS takes like 10 minutes to get working in a UE4 project (the engine this game uses). You download the DLSS plugin files from the UE website, put them in your project's Plugins folder, and it shows up and works in the editor. The only work the developers need to do is add menus so players can set the options themselves. There are already Blueprints built in to do all the hardware detection. It's extremely easy.
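For a sense of how small that remaining menu work is, here is a rough sketch of the logic, assuming the plugin's built-in detection has already reported whether DLSS is available on the player's GPU. Everything here (`GpuCaps`, `menu_options`, `resolve_setting`, and falling back to FSR) is hypothetical and written in plain Python for illustration; it is not the DLSS plugin's API or its Blueprint nodes.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Upscaler(Enum):
    NATIVE = "Native (off)"
    FSR = "AMD FSR"
    DLSS = "NVIDIA DLSS"


@dataclass(frozen=True)
class GpuCaps:
    # Hypothetical stand-in for the engine's hardware detection result.
    dlss_supported: bool


def menu_options(caps: GpuCaps) -> list[Upscaler]:
    """Upscaler choices the settings menu should offer on this machine."""
    # FSR is offered everywhere since it isn't tied to one GPU vendor.
    options = [Upscaler.NATIVE, Upscaler.FSR]
    if caps.dlss_supported:
        options.append(Upscaler.DLSS)
    return options


def resolve_setting(saved: Upscaler, caps: GpuCaps) -> Upscaler:
    """Keep the player's saved choice if still valid, else fall back to FSR."""
    # Covers e.g. a config saved on an RTX card, then loaded on a non-RTX one.
    return saved if saved in menu_options(caps) else Upscaler.FSR
```

A player whose saved config says DLSS but who is now on a card without DLSS support would simply get FSR instead of a broken setting.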
