PS5 and the Xbox are not using the Zen cores for compression or decompression work. That's really different: the PS5/Xbox hardware decompression can do something like the work of 4 Zen 2 cores at decompression, or something of that sort. The Phison I/O controller can sustain long work on large I/O for hours, the way an open game world with continuous streaming would, but I do not think it knows what a compressed texture is. It would just be good at what a SmartAccess/DirectStorage workload would typically look like.
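The "like 4 Zen 2 cores" comparison is just a throughput ratio: the decompressed-output rate of the hardware block divided by what one CPU core can decompress in software. A minimal sketch of that arithmetic, assuming perfect linear scaling across cores; the function name and both input figures are hypothetical, chosen only to illustrate the calculation, not measured PS5 or Xbox numbers:

```python
import math


def cpu_core_equivalents(output_gb_per_s: float, per_core_gb_per_s: float) -> float:
    """Number of CPU cores a software decoder would need to match a given
    decompressed-output rate, assuming perfect linear scaling across cores."""
    if per_core_gb_per_s <= 0:
        raise ValueError("per-core throughput must be positive")
    return output_gb_per_s / per_core_gb_per_s


# Hypothetical figures for illustration only (not measured values):
hw_output = 4.0  # GB/s of decompressed data the hardware block emits
per_core = 1.0   # GB/s one CPU core can decompress in software
cores = cpu_core_equivalents(hw_output, per_core)
print(f"{cores:.1f} core-equivalents, {math.ceil(cores)} whole cores")
```

Real decoders rarely scale perfectly across cores, so a software path would likely need more cores than this ratio suggests.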


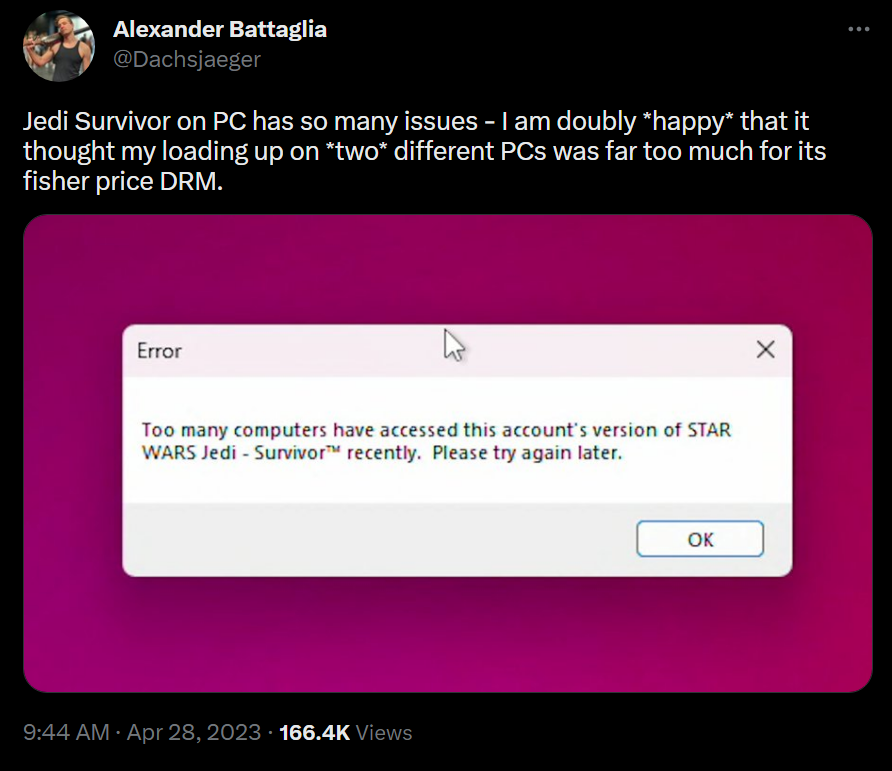

Sony licensed Kraken compression and Oodle Texture compression from RAD Game Tools, and not just for use in a software SDK: they actually built silicon to do that work into their I/O die. MS did something similar with their own inferior in-house compression method. In that image, the two I/O co-processors are the Kraken/Oodle compressors/decompressors.

http://www.radgametools.com/oodlekraken.htm
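The reason dedicated decompression silicon matters is that compressed data multiplies the drive's effective read rate by the compression ratio, provided the decompressor keeps up. A minimal sketch of that relationship; Kraken/Oodle is proprietary and not in the Python standard library, so zlib is swapped in purely as a stand-in codec, and the function name, sample data, and 5.0 GB/s raw rate are all hypothetical:

```python
import zlib


def effective_read_rate(raw_gb_per_s: float, data: bytes, level: int = 6) -> tuple[float, float]:
    """Return (compression_ratio, effective_gb_per_s) for `data`.

    zlib stands in for Kraken here. The effective rate assumes the
    decompressor never becomes the bottleneck, which is the job a
    dedicated hardware block is meant to guarantee.
    """
    compressed = zlib.compress(data, level)
    # Sanity check: the round trip must reproduce the original bytes.
    assert zlib.decompress(compressed) == data
    ratio = len(data) / len(compressed)
    return ratio, raw_gb_per_s * ratio


# Hypothetical inputs: a repetitive byte pattern and an assumed raw drive rate.
sample = b"texture block " * 10_000
ratio, eff = effective_read_rate(5.0, sample)
print(f"ratio {ratio:.1f}:1 -> {eff:.1f} GB/s effective")
```

Highly repetitive sample data like this compresses far better than real game assets, so the printed ratio overstates what a shipping title would see.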
