https://twitter.com/HardwareUnboxed/status/1646654457781063681

https://twitter.com/tomshardware/status/1646872696624492545

https://twitter.com/firstadopter/status/1647408780773031936

https://twitter.com/TechEpiphany/status/1646644386694873088

Suck it, Ngreedia... Jensen will have to hold off on buying another whale-skin-lined leather jacket until the 50X0 release.

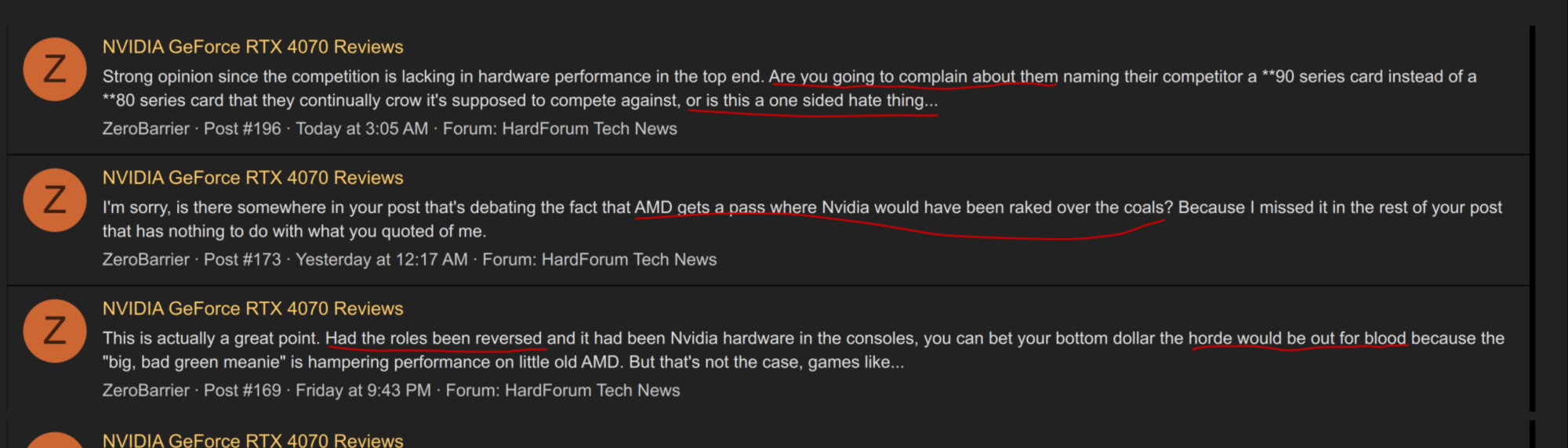

Canada Computers is already giving away a RAM kit with purchase, and I have already seen some models on sale locally, for a card that just launched.
