Nvidia engineers also made extensive tweaks and modifications to the game's core engine, and Nvidia is supposedly working with Sony to adapt their tools to work better with the PC going forward. Sony realized that not bringing their titles to PC was leaving money on the table, because as much as we might want their exclusives, very few of us actually buy PlayStations to play them, as the FF7 and God of War PC sales figures make evident. Who knows if Sony's hate boner for Microsoft and the recent Activision Blizzard drama will change those plans, though, especially now that Microsoft and Nvidia have entered a 10-year agreement for the GeForce Now program.

Either way, at the end of the day the latest games use more VRAM. If you have it, you're fine. The 4090, 4080, and even the 4070 have zero problems running the latest games (and running them well). The cards that don't have it have issues. This is no different on the AMD side. I mentioned God of War and might as well also mention Guardians of the Galaxy. Neither uses more than roughly 6GB to 8GB of VRAM. But you know what does get used? A crap ton of system memory. There's no free lunch. You can either configure it so it's all in VRAM, or you can get creative and stream the textures in. At the end of the day, though, you need the assets as close to the GPU as possible, which means in RAM somewhere.
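The choice described above, keeping everything resident in VRAM versus keeping assets in system RAM and streaming textures in as needed, can be sketched as a small least-recently-used cache under a fixed VRAM budget. This is a minimal illustration under stated assumptions: the `TextureStreamer` and `Texture` names, the texture sizes and the budget are hypothetical, and real engines use far more elaborate residency logic (per-mip streaming, prefetching, priorities).

```python
from collections import OrderedDict
from dataclasses import dataclass


@dataclass(frozen=True)
class Texture:
    name: str
    size_bytes: int


class TextureStreamer:
    """Toy VRAM texture cache with a fixed budget and LRU eviction.

    Hypothetical model: non-resident textures are assumed to sit in system RAM
    and are "uploaded" on demand. Not how any particular engine or driver works.
    """

    def __init__(self, vram_budget_bytes: int) -> None:
        self.budget = vram_budget_bytes
        self.used = 0
        self.resident: "OrderedDict[str, Texture]" = OrderedDict()
        self.uploads = 0  # number of RAM -> VRAM transfers performed

    def request(self, tex: Texture) -> None:
        """Make `tex` resident, evicting least-recently-used textures if needed."""
        if tex.name in self.resident:
            self.resident.move_to_end(tex.name)  # mark as most recently used
            return
        if tex.size_bytes > self.budget:
            raise ValueError(f"{tex.name} alone exceeds the VRAM budget")
        while self.used + tex.size_bytes > self.budget:
            _, evicted = self.resident.popitem(last=False)  # drop the LRU entry
            self.used -= evicted.size_bytes
        self.resident[tex.name] = tex
        self.used += tex.size_bytes
        self.uploads += 1


if __name__ == "__main__":
    MIB = 1024 * 1024
    streamer = TextureStreamer(vram_budget_bytes=64 * MIB)
    for t in (Texture("rock", 24 * MIB), Texture("tree", 24 * MIB),
              Texture("rock", 24 * MIB), Texture("castle", 32 * MIB)):
        streamer.request(t)
    print(list(streamer.resident), streamer.used // MIB, streamer.uploads)
```

Streaming trades VRAM for bandwidth: a tighter budget means more RAM-to-VRAM uploads (counted by `uploads`), which is where a fast transfer path matters.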


NVIDIA GeForce RTX 4070 Reviews

- Thread starter erek


I get that console titles are written for console memory management. I get that the first step in porting to PC is to duplicate assets in system RAM and VRAM, and that lazy developers/greedy publishers are shipping PC games in that state rather than finishing the job. These games generally shouldn't require 16 GB VRAM or 32 GB system RAM. They shouldn't require 100+ GB of storage, either.

I don't think that makes these games "AMD titles". Midrange AMD cards with more VRAM apparently run a shoddy port better than the corresponding Nvidia cards with less VRAM. Once the work is finished, the VRAM difference diminishes.

Nvidia limited several 30X0-series cards to 8GB VRAM. That limit is showing sooner than it should due to half-assed ports. I doubt this is a nefarious AMD plot. It would be a Machiavellian master stroke for AMD to control both Nvidia's card design and developers' console ports so that they clash with each other 2 years down the line.

No, this is only coming to light now because the selling price of games has not kept pace with development costs and inflation. Now that loot boxes and the like are regulated to the point where including them is not financially worthwhile in most markets, studios are just cutting costs, and finishing touches are the first to go.

The cost of developing a game has almost doubled in the past 5 years. Game programmers who were once abundant have moved on; the field is notorious for shit working conditions with very little job security, and it really isn't nearly as attractive as it once was, with other development fields paying better, with nicer conditions and better job security.

So as developers cut back, we can expect a string of increasingly poor PC launches. It has been proven time and time again that we will spend our time infighting while studios slowly get around to a fix, whereas Microsoft and Sony issue fines when they have to start processing refunds on purchases made in their stores.

The big two tackling this problem are Epic and Unity. The new tools for their new engines greatly simplify the process of porting and have all sorts of built-in streamlining tools that work with the current state of the game development community, which is why you will notice that many studios who always used their own engines are making the switch. Microsoft is obviously doing what it can to help, but it's really not their problem to fix, so the best they can do is streamline the DX12 tools, which obviously helps the PC and Xbox teams but is a band-aid at best. So until new projects get started on the new tools, this is the reality we are stuck with.

It's not AMD and some grand plan, but they saw it coming and equipped their cards accordingly; that is just good foresight on their part. Nvidia is kinda stuck because they know full well that if they add more VRAM to their consumer lineup, they will be stepping on their workstation sales. They probably calculated how much that would cost them compared to losing the people upset by the VRAM amounts to AMD, and decided that they made more by cutting back on VRAM. Honestly, I can't fault them for it: their investors would shit a brick if they did add it, and now that their stock is so massively inflated they have to work to protect that, because if it falls too much they get sued anyway. Something something more money, more problems.

Aside:

But that is why they branded the 90-series cards as RGB GAMER XTREME SNUF BBQNINJA edition crap: so it would trigger every auditing department from here to China and back again. While they were TITANs, we could squeak them in under the radar for jobs where we needed the memory or the processing power but not the ECC memory, the driver validation, or any of the extras you pay for with the workstation and server components. By getting rid of the Titan branding and then killing off Quadro, they made it so auditors actually had to look at what IT departments were buying. With number crunchers watching, you better believe people ask a lot of questions when IT departments, many of which are filled with gamers, start buying gaming parts: accusations get insinuated, managers hold meetings about how it affects the image of the department, and red flags go up all over. It's a headache nobody wants, and if there is government money involved you have a whole second set of auditors to answer to, and it only gets worse from there. It's protectionism at its finest, or worst; I can't tell any more, I am in too deep. But I pray that Intel can save me: their oneAPI looks solid, well documented, and well supported, and has a crossover library so many CUDA functions can be mapped directly one to one. XeSS also looks to be the answer to the whole DLSS/FSR battle, and we can only hope that AMD works with them there, because Nvidia is making bank off everybody else trying to duplicate their work with competing open standards.


System memory is at least cheap and relatively abundant, and the latest DirectStorage APIs cut that usage down a lot too, once you get texture streaming set up. There is a lot of really neat stuff in the works all around to let developers do more with a much smaller budget, which will ultimately lead to better end products, but we have to wait for it to start being used.

Can you imagine a world where we could replace and upgrade the VRAM on our GPUs as easily as we can system RAM...


Genuinely asking: How much of the increased dev cost is RT, DLSS, etc.?


staknhalo (Supreme [H]ardness, joined Jun 11, 2007, 6,924 messages)


More than 1

RT currently adds a little cost, but not much, and if it were more ubiquitous it would save a crap load.

The standard methods for rasterization require a lot of manual work by art departments to make things look good: they have to manually balance light and shadow, and manually create reflections by mirroring textures. As environments get bigger, so does the workload; open worlds are expensive to fill (which is why Ubisoft leaves theirs empty). Basically, the cost of making a rasterized game look good grows exponentially with the size of the environment the game creates.

Ray tracing honestly puts a shit ton of artists out of work when it finally becomes the norm. It will save studios a lot of money and give more consistent results, which means less testing is required and there is less need for "optimization" of the assets to hit certain performance metrics.

DLSS is a minor one-and-done thing: you add it to the rendering pipeline and call it a day. It is honestly easier to implement DLSS or FSR than most of the existing anti-aliasing methods out there, and again you get more consistent results, so the sooner those replace the old AA methods, the better for everybody.
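Part of why an upscaler slots in so easily is that, besides supplying inputs such as motion vectors and depth, the main change on the engine's side is the resolution the scene is rendered at; the upscaler then reconstructs the output image. A rough sketch of that arithmetic, assuming FSR 2's published per-axis scale factors (DLSS uses similar presets). The function names are hypothetical and this is not either SDK's API.

```python
from typing import Tuple

# Per-axis scale factors of FSR 2's quality modes: display size divided by the
# factor gives the internal render size. DLSS presets are similar; treat the
# exact numbers as assumptions for illustration.
FSR2_SCALE = {
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
    "ultra_performance": 3.0,
}


def render_resolution(display_w: int, display_h: int, mode: str) -> Tuple[int, int]:
    """Resolution the game actually renders at before the upscaler runs."""
    factor = FSR2_SCALE[mode]
    return round(display_w / factor), round(display_h / factor)


def shaded_fraction(mode: str) -> float:
    """Share of output pixels shaded each frame; the upscaler reconstructs the rest."""
    return 1.0 / (FSR2_SCALE[mode] ** 2)


if __name__ == "__main__":
    for mode in FSR2_SCALE:
        w, h = render_resolution(2560, 1440, mode)
        print(f"{mode:>17}: {w}x{h} ({shaded_fraction(mode):.0%} of pixels)")
```

For example, 4K output in performance mode renders internally at 1920x1080, shading a quarter of the output pixels.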

Ray tracing is about making things look better, but it is more about making things cheaper: it is about automating a tedious, labor-intensive process. I know a lot of people here will say straight up that the ray-traced stuff doesn't look any better than the rasterized stuff, and that is more damning than they realize, because that rasterized work took tens of thousands of man-hours to accomplish, while the ray-traced side took tens of thousands of computation hours on some moderately expensive hardware manned by a single person.
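The automation argument can be illustrated with the most basic ray-traced effect, a hard shadow: instead of an artist baking or hand-placing shadows, each shaded point asks the scene geometry whether anything blocks the path to the light. A minimal sketch, assuming spheres as the only occluders and a single point light; the helper names are hypothetical, and real renderers use triangle meshes, acceleration structures (BVHs) and RT hardware, none of which is shown here.

```python
import math
from typing import Iterable, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_hits_sphere(origin: Vec, direction: Vec, center: Vec,
                    radius: float, max_t: float) -> bool:
    """True if origin + t*direction hits the sphere for some 0 < t < max_t.

    `direction` need not be normalized; t is measured in multiples of it.
    """
    oc = sub(origin, center)
    a = dot(direction, direction)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return False  # the ray's line misses the sphere entirely
    root = math.sqrt(disc)
    for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
        if 1e-6 < t < max_t:  # small epsilon avoids self-intersection
            return True
    return False


def in_shadow(point: Vec, light: Vec,
              occluders: Iterable[Tuple[Vec, float]]) -> bool:
    """Cast one shadow ray from `point` to `light`; shadowed if anything blocks it."""
    to_light = sub(light, point)  # unnormalized, so t = 1 is exactly the light
    return any(ray_hits_sphere(point, to_light, c, r, 1.0) for c, r in occluders)
```

A sphere of radius 1 hanging at (0, 5, 0) under a light at (0, 10, 0) shadows the origin but not the point (5, 0, 0); no hand-authored shadow data is involved, only geometry queries, which is where the compute cost goes instead.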

Jensen's "the more you buy, the more you save" comments weren't aimed at us buying the cards; they were aimed at developers buying those 5U DGX boxes, because that $200,000 box makes a few million dollars' worth of people redundant.

sfsuphysics ([H]F Junkie, joined Jan 14, 2007, 15,998 messages)

Just decided to take a look at the differences from 30 series to 40 series

3090 to 4090 +56% shaders, +57% render units, +56% RT cores, +56% Tensor cores, +7% price (+$100) : By all accounts great value proposition if this is the purchase range you're considering

3080 to 4080 +12%, +17%, +9%, +12%, +72% price increase (+$500!!!!!): Now we're dipping our toes into WTF is this fuckery!? Now granted, it's about more than the number of cores; it's about speed and memory, which got a whopping 6GB more, and memory bandwidth... oh wait, never mind, that got slower.

3070 Ti to 4070 Ti +25%, -17%, -12%, +25%, +33% price increase (+$200): ... I mean, 12GB is better than 8GB, right?

3070 to 4070 0%, -25%, 0%, 0%, +20% price (because adding $100 is the new in thing to do!): yup, right back to WTF is this fuckery. Sure, an extra 4GB of RAM and less bandwidth, but boosted clocks though! And it's supposedly equivalent to the 3080 for $100 less!

That's progress boys!
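The percentages above are simple ratios. A sketch that reproduces the shader-count and price columns, assuming the commonly published launch CUDA-core counts and US MSRPs listed in the dict (worth checking against a spec database; the other columns, such as ROPs and RT/Tensor cores, are left out):

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100.0


# Launch CUDA-core counts and US launch MSRPs (assumed figures, see lead-in).
CARDS = {
    "RTX 3090": {"shaders": 10496, "msrp": 1499},
    "RTX 4090": {"shaders": 16384, "msrp": 1599},
    "RTX 3080": {"shaders": 8704, "msrp": 699},
    "RTX 4080": {"shaders": 9728, "msrp": 1199},
    "RTX 3070 Ti": {"shaders": 6144, "msrp": 599},
    "RTX 4070 Ti": {"shaders": 7680, "msrp": 799},
    "RTX 3070": {"shaders": 5888, "msrp": 499},
    "RTX 4070": {"shaders": 5888, "msrp": 599},
}


def compare(old: str, new: str) -> dict:
    """Rounded generation-over-generation change (%) for each tracked spec."""
    return {key: round(pct_change(CARDS[old][key], CARDS[new][key]))
            for key in CARDS[old]}


if __name__ == "__main__":
    pairs = [("RTX 3090", "RTX 4090"), ("RTX 3080", "RTX 4080"),
             ("RTX 3070 Ti", "RTX 4070 Ti"), ("RTX 3070", "RTX 4070")]
    for old, new in pairs:
        print(f"{old} -> {new}: {compare(old, new)}")
```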


staknhalo (Supreme [H]ardness, joined Jun 11, 2007, 6,924 messages)

Why would you get the RTX 4090 when you could just get the RTX 5070ti is my question

Edit: I forgot where I was my bad lol


ZeroBarrier (Gawd, joined Mar 19, 2011, 1,016 messages)

It's a console title first and foremost; AMD manages and maintains the development suite and the development hardware and is primarily responsible for all software and firmware updates related to them. How is that not an AMD-sponsored title? This is quite literally their plan to gain a larger foothold in the gaming community at work.

This is actually a great point. Had the roles been reversed and it had been Nvidia hardware in the consoles, you can bet your bottom dollar the horde would be out for blood because the "big, bad green meanie" is hampering performance on little old AMD. But that's not the case: games like Hogwarts Legacy come out with obvious, glaring issues and people just throw their hands up and blame a lack of VRAM, when it has been proven in the past that allocated VRAM is not the same thing as VRAM in actual use. Then, to top it all off, when the developer fixes the issues, proving that the amount of VRAM was not actually the problem, people go on to ignore that the issue ever existed.

It is something I see occasionally that always has me like:

I would think Sony and Microsoft would be the ones working with the developer on the consoles. AMD would work directly with Sony or Microsoft on the hardware level and drivers. I don't think you can stretch and say it's an AMD-sponsored title just because it's all AMD hardware in the consoles; that just made Sony's and Microsoft's lives easier, as well as the developers'. Consoles require a bit more work from developers, as games need to perform within the hardware's limitations, which may help AMD on the PC side, but that is a far cry from AMD actively working with the developer to optimize solely for AMD hardware.

Both companies have sponsored titles, and it often shows in performance gains, but I think you're stretching the truth to call Hogwarts Legacy an AMD-sponsored title. Nvidia just had to tweak their drivers, which happens sometimes with a new game regardless of which hardware you own. It has, however, shown an issue with having too little VRAM on your video card, which caught people by surprise. That's a problem I think will continue with newer games coming out.

Funny, if the roles were reversed as I just said above, a lot of you would be holding pitchforks and torches instead of brushing the issue under the rug.
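The point above that allocated VRAM is not the same as VRAM actually in use can be illustrated with a toy caching allocator. Allocators that keep freed memory reserved for reuse are common, so a monitoring overlay can report a high number even when live allocations need much less. The `CachingPool` class, its chunk size and the sizes in the test are hypothetical; this does not model any specific driver or engine, and it says nothing about whether a particular game was or was not VRAM-limited.

```python
class CachingPool:
    """Toy caching GPU allocator (hypothetical, for illustration only).

    Memory is reserved from the device in whole chunks, and freed blocks stay
    in the pool for reuse instead of being handed back. Fragmentation and
    per-block bookkeeping are ignored.
    """

    def __init__(self, chunk_bytes: int) -> None:
        self.chunk_bytes = chunk_bytes
        self.reserved = 0  # roughly what a monitoring overlay would report
        self.in_use = 0    # what live allocations actually need right now

    def alloc(self, nbytes: int) -> None:
        spare = self.reserved - self.in_use
        if nbytes > spare:
            shortfall = nbytes - spare
            chunks = -(-shortfall // self.chunk_bytes)  # ceiling division
            self.reserved += chunks * self.chunk_bytes
        self.in_use += nbytes

    def free(self, nbytes: int) -> None:
        if nbytes > self.in_use:
            raise ValueError("freeing more than is allocated")
        # The memory stays reserved, so the reported number does not drop.
        self.in_use -= nbytes
```

After allocating 300 MiB twice and freeing one of them with 512 MiB chunks, the pool still reserves 1024 MiB while only 300 MiB is live.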

Rev. Night ([H]ard|Gawd, joined Mar 30, 2004, 1,509 messages)

12GB of VRAM is going to be an issue before too long. That's why I'm looking to replace my 3080; 10GB isn't far off from that.

Will trade you my 6700 XT.

While RT can "automate" certain aspects of development, it does not make things cheaper in the grand scheme of things, primarily because the computational (and energy) cost of fidelity and accuracy never goes away. It's either a pre-rendered full scene or real-time shadows. The problem is that the computational cost of full-scene ray tracing is staggeringly high and still can't be done at home. Not even close. DLSS (which owes quite a bit of its technology to Pixar), frame generation, and path tracing all reduce the cost of RT, but RT by itself is expensive. The average Pixar movie costs somewhere in the ballpark of 800 million to make. The average AAA title? About 100-150 million.

Nvidia (and now AMD and Intel as well) drop the resolution or literally throw up fake frames to reduce the load, and that's just for a few localized reflections or limited global illumination.

This has reduced the cost of RT over the years, but nothing even approaching what would be required to do a full scene, or something close to a movie. Nvidia's path tracing actually does quite a bit of approximation, which is why there's still quite a bit of noise. It was very recognizable in Cyberpunk for me. I'm guessing people thought it was an effect filter, but in reality it's noise that's not present in raster. Want more fidelity by increasing the samples to create a better approximation of the rays? Cool beans! Now watch your FPS tank.
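The noise-versus-samples trade-off described here follows from Monte Carlo integration: the error of a per-pixel estimate shrinks only with the square root of the sample count, while the cost grows linearly. A minimal sketch, assuming a stand-in "scene" in which each traced path reaches the light with probability 0.3; the names and the probability are hypothetical, and a real path tracer integrates over actual scene geometry and materials.

```python
import random
import statistics

# Hypothetical stand-in for a scene: each traced path reaches the light with
# probability 0.3 and returns 1.0, otherwise 0.0, so the true pixel value is 0.3.
HIT_PROBABILITY = 0.3


def estimate_pixel(samples: int, rng: random.Random) -> float:
    """Average of `samples` random light paths: a Monte Carlo pixel estimate."""
    hits = sum(1 for _ in range(samples) if rng.random() < HIT_PROBABILITY)
    return hits / samples


def pixel_noise(samples: int, pixels: int = 4000, seed: int = 1) -> float:
    """Standard deviation of the estimate across many pixels, i.e. visible noise."""
    rng = random.Random(seed)
    estimates = [estimate_pixel(samples, rng) for _ in range(pixels)]
    return statistics.pstdev(estimates)


if __name__ == "__main__":
    for spp in (1, 4, 16, 64):
        # Work grows linearly with samples per pixel; noise falls only as 1/sqrt(spp).
        print(f"{spp:>3} spp: noise ~ {pixel_noise(spp):.3f}")
```

Going from 1 to 16 samples per pixel costs 16 times the rays but only cuts the noise by about a factor of 4, which is why denoisers and upscaling carry so much of the load.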

In so many ways Nvidia is creating its own render pipeline, but I'm not so sure it's needed for gamers.


This is actually a great point. Had the roles been reversed and it had been Nvidia hardware in the consoles, you can bet your bottom dollar the horde would be out for blood because the "big, bad green meanie" is hampering performance on little old AMD. But that's not the case: games like Hogwarts Legacy come out with obvious, glaring issues and people just throw their hands up and blame a lack of VRAM, when it has been proven in the past that allocated VRAM is not the same thing as VRAM in actual use. Then, to top it all off, when the developer fixes the issues, proving that the amount of VRAM was not actually the problem, people go on to ignore that the issue ever existed.

Hardware Unboxed literally tested this, but you refuse to admit it. Everyone knew this was an issue when the 30xx series launched; that's why it came up. No one is bringing this crap up just because. Historically, this SAME exact issue came up when AMD launched Fury/Fiji, and HardOCP itself came out at the time and said 4GB wasn't enough. They tested it annnd it wasn't!

While I'm sure a whole bunch of AMD fans hated to hear the news, no one could deny it was true. Let's take a trip in the wayback machine. This is a quote from HardOCP from 2015!!!

"That's right, AMD sees the AMD Radeon R9 390 series as the cards best used in 4K, and doesn't even recommend the lower and more common and popular resolutions of 1440p or 1080p for the R9 390 series. The AMD Radeon R9 390 and 390X now have 8GB of VRAM standard to help facilitate better 4K performance.

Why are we dragging out the design goals for the R9 390 series in a Radeon R9 Fury X review? Because AMDs design goals for the AMD Radeon Fury series are also for a 4K experience, but there are certainly differences between the cards. It is interesting to take note of AMD's firm stance on 4K, and firm stance that lesser resolutions like 1440p and 1080p are not the goals with Fury X, even if those are the more common resolutions gamers use."

But here y'all go: "well, if you stream in the textures and it's better optimized..." Good god, I've never felt a hemorrhoid come back from 2015 like I do now.

Look, developers will ALWAYS take the easiest route because time is money. No developer is going to create a custom engine à la Rage just because your video card is walking around with VRAM sizes that existed SEVEN YEARS AGO!!

Deal with it and ask nVidia for more.
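To put rough numbers on the resolution-versus-VRAM argument, here is back-of-the-envelope arithmetic for one full-screen colour buffer and one large block-compressed texture. The formats (RGBA8 at 4 bytes per pixel, BC7 at 16 bytes per 4x4 block) are standard, but which buffers and textures a given game keeps resident is an assumption, and real allocations add alignment and padding not modelled here.

```python
def render_target_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one uncompressed colour buffer, e.g. RGBA8 = 4 bytes per pixel."""
    return width * height * bytes_per_pixel


def bc7_texture_bytes(size: int, with_mips: bool = True) -> int:
    """Size of a square BC7 texture: 16 bytes per 4x4 block, i.e. 1 byte per texel.

    Mip levels smaller than 4x4 still occupy one whole block.
    """
    total = 0
    level = size
    while True:
        blocks_per_side = max(1, (level + 3) // 4)
        total += blocks_per_side * blocks_per_side * 16
        if not with_mips or level == 1:
            return total
        level //= 2  # next mip level is half the size per side


if __name__ == "__main__":
    MIB = 2 ** 20
    print(f"1080p RGBA8 buffer: {render_target_bytes(1920, 1080) / MIB:.1f} MiB")
    print(f"4K RGBA8 buffer:    {render_target_bytes(3840, 2160) / MIB:.1f} MiB")
    print(f"4096^2 BC7 + mips:  {bc7_texture_bytes(4096) / MIB:.1f} MiB")
```

At 4K a single RGBA8 buffer is about 31.6 MiB versus about 7.9 MiB at 1080p, while one 4096x4096 BC7 texture with its mip chain is about 21.3 MiB; with hundreds of such textures, asset quality can weigh as heavily on the VRAM budget as resolution does.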


ZeroBarrier (Gawd, joined Mar 19, 2011, 1,016 messages)

I'm sorry, is there somewhere in your post that's debating the fact that AMD gets a pass where Nvidia would have been raked over the coals? Because I missed it in the rest of your post, which has nothing to do with what you quoted of me.


Yes… I mean, I certainly didn’t buy 12 1080 Tis for some lab servers rather than the corresponding Quadro cards at the time…. Noooo. I would never do that.

Install the creator drivers, tweak some ini and registry files to get proper pass-through to the VM containers so I could do remote applications without the need for an NVIDIA GRID license, saving some $20,000 a year in licensing… I’m not a monster and I completely followed the EULAs…

Sadly, though, by removing SLI connectors and changing the memory as they did, along with minor tweaks to their drivers, that thing I totally never did stopped working for those who were doing it.

Side note: AMD wrecked the workstation and server GPU markets by releasing products that get very limited support outside of Windows, and I’m not sure how many people run Windows on their servers… And you have to triple-check which updates you push to workstations with AMD GPUs; they are a smidge…. fragile.

You know what, fair enough. I was thinking some of the higher end stuff when I wrote that but yes, this was possible. I know that was one of the use cases for the “90” series cards since they were “gaming” cards that didn’t really yield much benefit from a gamer’s standpoint but workstation guys could take advantage of the RAM. Still, when the 3080 was launched, there was already a debate about whether or not 10GB of VRAM would be sufficient for high end gaming within a few years. It’s hard for me to escape the conclusion that Nvidia was also discussing this and decided they didn’t care because it would force an upgrade, and that line of thinking was validated by many people, including on this forum. From a business standpoint, it made sense, I just hate it as a consumer.

AMD seems to have been more generous with VRAM for some time, though. I bought my R9 280X instead of the 770 largely because it had an extra 1GB of VRAM on board (comparable performance and price otherwise), and it remained playable for longer as a result.

AMD can be more generous because their gaming cards straight up don’t do their workstation workloads in a manner that makes sense given the price difference. AMD still uses different silicon between the two.


erek ([H]F Junkie, joined Dec 19, 2005, 10,944 messages)

Like?

In Hogwarts Legacy, it bumps my FPS from the mid-90s to low 100s up to 150-160 FPS (I capped the frame rate at 160).

In CP2077 with the new path tracing patch, with DLSS + FG at 1440p, I'm getting an easy 70-80 FPS, which makes a game that would otherwise be unplayable on any current hardware actually playable, and it looks extremely nice.

In Witcher 3 I was able to get around 110 FPS in the open, and about 85-90 in Novigrad.

The AMD 7900 XT, the 4070 Ti's counterpart, falls WAAAAY behind in all three of these games at these settings. Maybe in pure raster, without frame generation, it beats the 4070 Ti in Hogwarts, but in CP2077 with those settings it doesn't even breathe the same air as the 4070 Ti, let alone the 4080/4090. In Witcher 3 with those same settings and FSR, it maybe reaches the 60s? I'd have to rewatch some videos to see what it actually gets. In each of these titles I've seen very little wrong with FG, and what little I have seen hasn't been immersion-breaking, or game-breaking for that matter.

So what's to hate about a technology that makes what would normally be impossible to achieve, possible?

Because the frames are fake? The only way you can really tell the difference is if you record yourself and slow the recording down to maybe 25-30%; during normal gameplay you'd have to actively search for those fake frames or any artifacts.

Because it increases input latency? Personally, testing with both controller and KB/M, and with FG both on and off, I saw absolutely zero difference; everything responded exactly the same for me. Now, you can make a case for competitive multiplayer games, but if you have to use FG to get more frames in a multiplayer game, you have other issues with your PC's performance to address first.

Because it messes with the HUD or UI elements? That's about the only issue I've had, but it hasn't been anything to fuss about. As long as UI elements aren't disappearing completely, I'd rather nab a smoother overall experience at the cost of an occasional flickering UI element, like the quest destination icon in Hogwarts Legacy that flickers if I pan the camera too fast.
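The fake-frames and latency questions above can be framed with simple arithmetic. A back-of-the-envelope sketch, assuming an idealised interpolator that inserts one generated frame per rendered frame; it is not NVIDIA's or AMD's published pipeline, the names are hypothetical, and it ignores generation cost and the extra delay from holding back a rendered frame, so it understates added latency.

```python
from dataclasses import dataclass


@dataclass
class FrameGenEstimate:
    displayed_fps: float
    displayed_frame_ms: float
    input_sample_interval_ms: float


def estimate_frame_generation(rendered_fps: float,
                              generated_per_rendered: int = 1) -> FrameGenEstimate:
    """Idealised frame-generation arithmetic (assumption-laden, see lead-in).

    Generated frames are interpolated from rendered ones and do not sample new
    input, so responsiveness still tracks the rendered frame interval. The extra
    delay from holding back a rendered frame for interpolation is NOT modelled.
    """
    if rendered_fps <= 0:
        raise ValueError("rendered_fps must be positive")
    displayed = rendered_fps * (1 + generated_per_rendered)
    return FrameGenEstimate(
        displayed_fps=displayed,
        displayed_frame_ms=1000.0 / displayed,
        input_sample_interval_ms=1000.0 / rendered_fps,
    )
```

With an illustrative 80 rendered FPS, the display shows about 160 FPS (6.25 ms per frame), but new input still only shows up every 12.5 ms, which is why FG smooths motion without making the game more responsive.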

The reason I said anything about AMD fanboyism in my post is that I know once FSR 3.0 comes out, people are going to use the shit out of their version of FG if it's decent enough, and will probably call it a godsend. Yet those very same people aren't going to go back and say they were wrong about FG; instead, they'll justify their disdain for FG with something like "it's a 40-series-only feature, while FSR 3 is universal," or some form of "because Ngreedia!" Honestly, people should be happy that FG exists: it's pushing AMD to try to compete on features, something they probably wouldn't have bothered doing if Nvidia hadn't paved the way.

To put it out there, I'm not a fanboy. The 4070Ti is the first Nvidia card I've actually used and kept since the 970; in between, I used a Vega 56, a 5700XT, and a 6700XT. Frame generation is what sold me on the 40-series. I didn't want to sit around waiting on AMD's implementation, I wanted it now, so I decided to venture back to the Nvidia side. To me, Frame Generation hasn't disappointed in the slightest, which is why I don't see the reason for the flak.

Nvidia isn’t selling graphics cards — it’s selling DLSS https://www.digitaltrends.com/computing/nvidia-selling-dlss/

staknhalo

Supreme [H]ardness

- Joined

- Jun 11, 2007

- Messages

- 6,924

What's the best Nvidia AIB partner now that EVGA is gone? Thinking about having my boss get me one of these for my work computer; my 1660 needs replacing. Either that or a 6950 XT. He might want me on Radeon since the rest of the office is on 3080s.

Check Best Buy for Founder's Edition restocks

I'm not paying for it lol, I don't give a crap which card it is.

cjcox

2[H]4U

- Joined

- Jun 7, 2004

- Messages

- 2,956

And people laughed at me for buying DVDs. Justification.

erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,944

Hmm

AMD can be more generous because their gaming cards straight up don’t do their workstation workloads in a manner that makes sense given the price difference. AMD still uses different silicon between the two.

Not only that, but they’re also a distant second in the market and need to incentivize people to buy their cards.

Or consoles or APUs.

Usual_suspect

Limp Gawd

- Joined

- Apr 15, 2011

- Messages

- 317

Okay... and?

The cards by themselves are perfectly capable, but having a feature that can turn a 4070Ti into a 4090, granted with a minor hit in IQ, with the click of one or maybe two buttons is by all accounts an awesome feature. Why do you think AMD's followed suit? FSR, and now FSR "Fluid Frames," exist only because Nvidia showed how beneficial features like this can be. When you can reduce the load on the GPU itself, effectively lowering power consumption, it will appeal to the crowd that values low power consumption. When you can massively bump your frame rate in a game where you might not get playable frame rates with all the bells and whistles maxed out, it will appeal to that crowd too. It's a win-win in every situation.

DLSS will eventually get to the point where you won't be able to tell the difference between native and DLSS, and with Frame Generation in the mix going forward, I again don't understand why it gets flak, outside of a lot of the AMD crowd finding reasons to poke at Nvidia instead of demanding that AMD do more than slap a bunch of VRAM on a card with last-gen RT performance and still relatively high power consumption compared to its Nvidia counterpart, all for slightly better raster performance and nothing else. This is one of the reasons I didn't go AMD this time around: they're not innovating, they're playing catch-up. Frame Generation is just another example of AMD not being interested in innovation until Nvidia does it first.

The only negative I can see coming from tech like FSR or DLSS is that it could make AAA devs lazier with their PC ports.

erek

How is it turned into a 4090 if the same feature gets turned on with the 4090?

staknhalo

4090 becomes a 4090 tie plus

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,844

Think they can squeeze an "ultra" on at the end?

erek

Nah, it's a 4090, and the 4090 Ti (+/Plus) is pending announcement.

erek

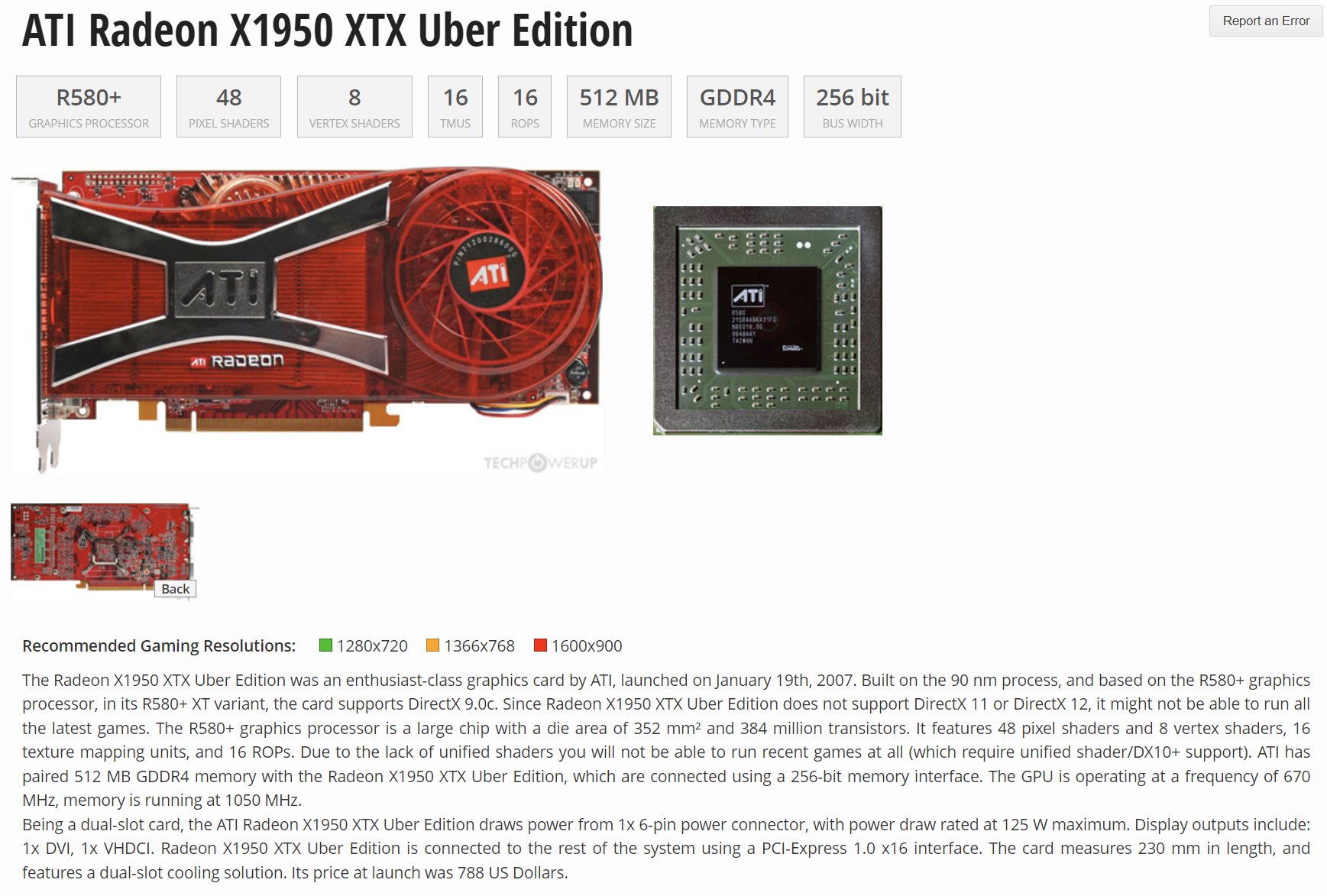

There used to be an X850 XTX Platinum Edition (not sure if there's anything beyond that level; might be).

https://www.techpowerup.com/gpu-specs/radeon-x1950-xtx-uber-edition.c3345

Usual_suspect

To clarify: with FG, the 4070Ti gets the same performance as the 4090 does without it. Yes, the 4090 has FG too, but what I was getting at is getting 4090-like performance, with a minor hit to image quality, from a card that costs half the price.

NightReaver

You heard it here guys, no need to buy a 4090. All anyone ever needs is a 4070ti. Man, bunch of people wasted $1600+.

Software performance =/= actual hardware performance.

erek

I heard not even a Ti is needed, just the 4070.

NightReaver

WTB RTX 4090, I'll even be generous and offer $700.

ZeroBarrier

Gawd

- Joined

- Mar 19, 2011

- Messages

- 1,016

Strong opinion, given that the competition is lacking in top-end hardware performance. Are you going to complain about them naming their competitor an xx90-series card instead of the xx80-series card they continually crow it's supposed to compete against, or is this the one-sided hate thing that is so prevalent on this board now?

Usual_suspect

I’m either getting trolled or reading comprehension isn’t strong with these ones. I said “like performance.”

I never said the 4070Ti was as good as the 4090; it isn't and never will be. But with FG it can get 4090-like performance if you don't want to spend the cash. It won't be as good, since the 4090 can do what the 4070Ti needs DLSS + FG to do without any upscaling, which means better visuals, no DLSS issues, no concerns about ever running out of VRAM, etc.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,758

I don't know which it is, but it sure lessens my enjoyment of the forums nowadays.

Blade-Runner

Supreme [H]ardness

- Joined

- Feb 25, 2013

- Messages

- 4,381

https://twitter.com/HardwareUnboxed/status/1646654457781063681

https://twitter.com/tomshardware/status/1646872696624492545

https://twitter.com/firstadopter/status/1647408780773031936

https://twitter.com/TechEpiphany/status/1646644386694873088

Suck it, Ngreedia... Jensen will have to hold off on buying another whale-skin-lined leather jacket until the 50X0 release.

4090 Super Ultra Ti Tie, MSRP $3000, retails for $4000.