GDI Lord

AMD ROCm Solution Enables Native Execution of NVIDIA CUDA Binaries on Radeon GPUs

Published One hour ago by Hilbert Hagedoorn

https://www.guru3d.com/story/amd-ro...ution-of-nvidia-cuda-binaries-on-radeon-gpus/

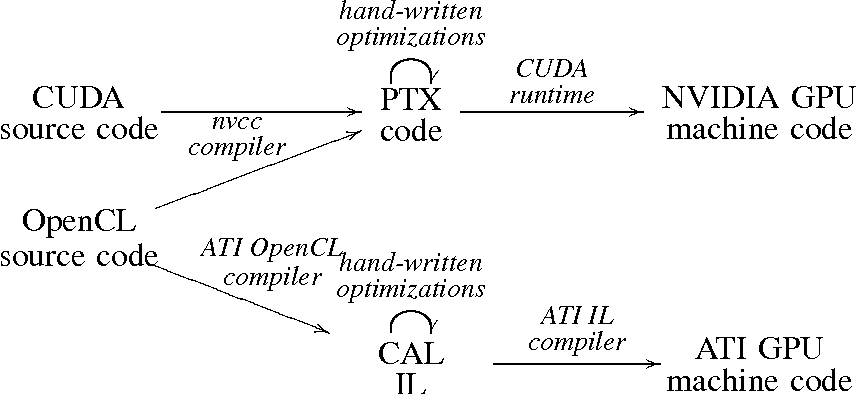

"AMD has introduced a solution using ROCm technology to enable the running of NVIDIA CUDA binaries on AMD graphics hardware without any modifications. This project, known as ZLUDA, was discreetly backed by AMD over two years. Initially aimed at making CUDA compatible with Intel graphics, ZLUDA was reconfigured by developer Andrzej Janik, who was hired by AMD in 2022, to also support AMD's Radeon GPUs through the HIP/ROCm framework. The project, which took two years to develop, allows for CUDA compatibility on AMD devices without the need to alter the original source code."

I'm pretty darn impressed, TBH. Not very impressed that both Intel and AMD dropped Andrzej, but impressed that it works - better than I expected.

Previously mentioned by erek in 2020: https://hardforum.com/threads/zluda-project-paves-the-way-for-cuda-on-intel.2004052/
