Twisted Kidney, Supreme [H]ardness (Joined: Mar 18, 2013; Messages: 4,106)

Damnit I wish Macs weren't so shit for gaming.


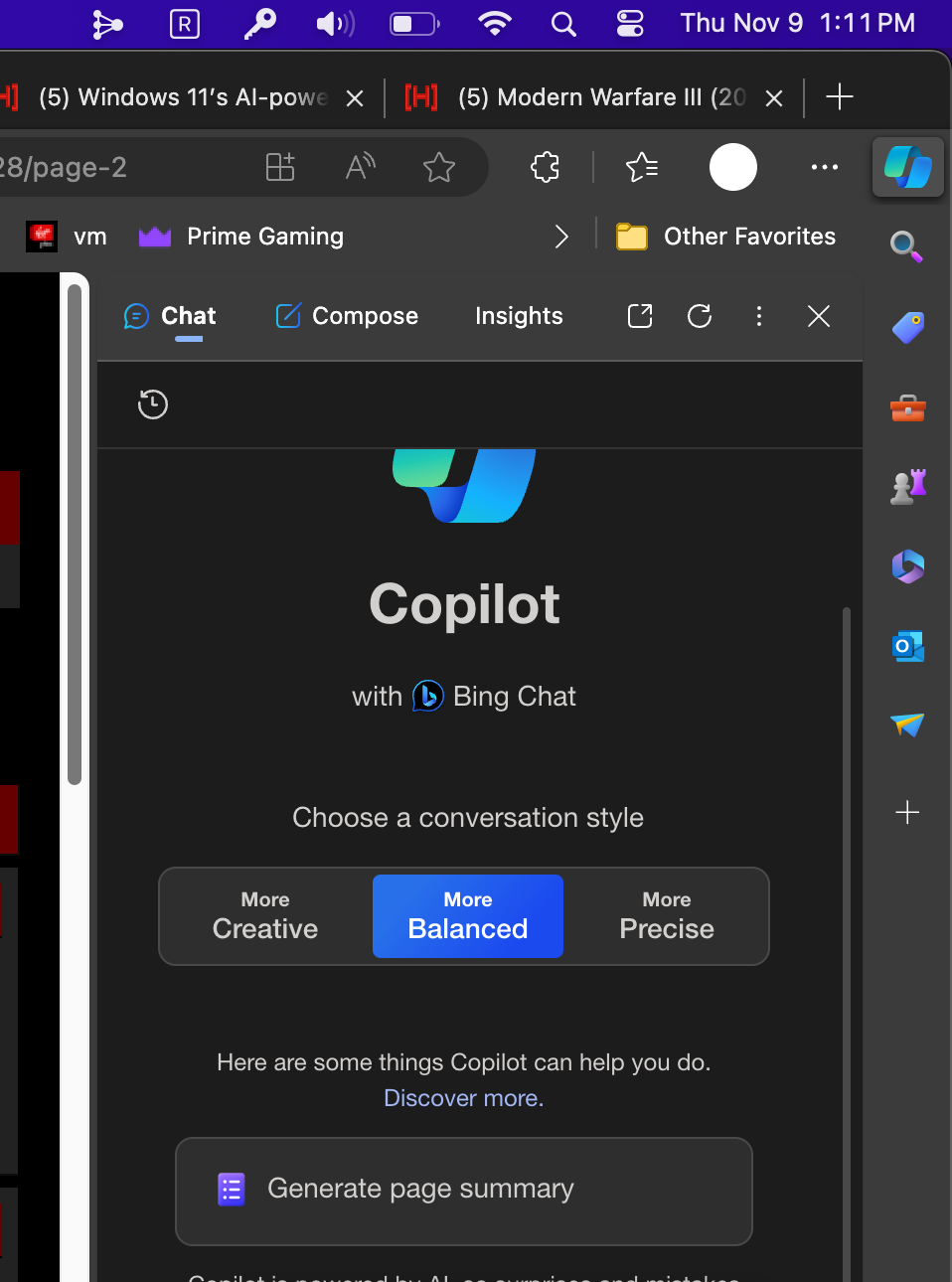

> So do you agree to launch this "copilot" or is it on by default and you have to find where the setting is to disable it?

The toggle will be buried deep inside the ancient recesses of the layers of Windows past.

> Damnit I wish Macs weren't so shit for gaming.

Only a matter of time till they have it too, and if you use Edge it's already in that...

> Looks as though m$ is going to inject this trash into 10 as well.
>
> https://ghacks.net/2023/11/09/brace-yourself-a-report-suggests-that-copilot-is-coming-to-windows-10/

It's already in Edge...

View attachment 612155

They must lose a little fortune per client at pricing that cheap.

> Brave infected its browser as well. Skynet is growing at an exponential rate.

Shitty.

> So do you agree to launch this "copilot" or is it on by default and you have to find where the setting is to disable it?

It's opt out, so it's on by default after the update gets installed. It's not an app, so there is no way to uninstall it. The only thing to do at the moment is turn it off, but who knows how long Microsoft will let you do that.

> It's opt out, so it's on by default after the update gets installed. It's not an app, so there is no way to uninstall it. The only thing to do at the moment is turn it off, but who knows how long Microsoft will let you do that.

They won't even let you turn it off in Edge on Mac...


> At this point it feels like they are actively trying to piss people off and get them to abandon Windows.

Plebs don't care, that's why.


> Microsoft will push that shit into everything while Apple will make it unobtrusive

People use AI every day on their iPhone without calling it that; I can easily see that.

> Quietly patched out ten years later.

Machine learning is used to understand speech, generative AI for text to speech, image correction and stabilisation, pathfinding for maps, automatically giving pictures relevant names instead of long anonymous numbers, editing pictures by voice command (remove X, change Y to Z, etc.), even agents that speak to hotel or restaurant agents to make reservations. It will never be patched out.

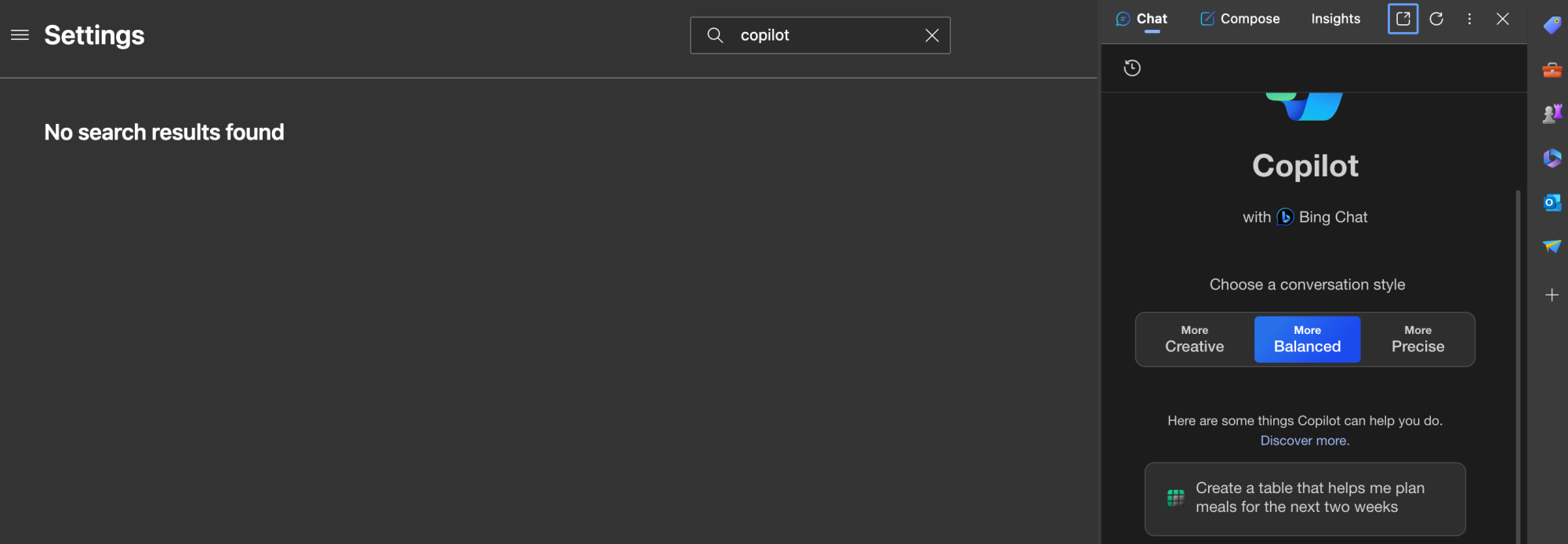

> They wanted 30 dollars per person each month with a minimum of 300 people to get the "preview" into our tenant. If we licensed everyone in the company it would be 1.9 million a year for Copilot.

Woof, Microsoft must think they are not only Apple, but also IBM/Oracle/Adobe all at once with licensing like that.
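For scale, here is a rough back-of-the-envelope check of the figures in the quoted post. It is only a sketch: the $30 per seat per month, the 300-seat minimum, and the $1.9 million yearly total come from the post, while the assumption of flat per-seat pricing billed for 12 months with no discounts is mine, and the function name is purely illustrative.

```python
# Figures taken from the quoted post; everything else is derived from them.
PRICE_PER_SEAT_PER_MONTH = 30      # USD per person per month
MINIMUM_SEATS = 300                # minimum purchase for the "preview"
QUOTED_ANNUAL_TOTAL = 1_900_000    # USD, "1.9 million a year"


def annual_cost(seats: int, price_per_month: int = PRICE_PER_SEAT_PER_MONTH) -> int:
    """Yearly cost in USD, assuming flat per-seat pricing over 12 months."""
    return seats * price_per_month * 12


# Cheapest possible entry point: the 300-seat minimum for a full year.
minimum_annual = annual_cost(MINIMUM_SEATS)

# Headcount implied by the quoted company-wide total (assumes no discounts).
implied_headcount = QUOTED_ANNUAL_TOTAL / (PRICE_PER_SEAT_PER_MONTH * 12)

print(f"Minimum buy-in: ${minimum_annual:,} per year")
print(f"Implied headcount: about {implied_headcount:,.0f} people")
```

Under those assumptions the minimum buy-in works out to $108,000 a year, and the quoted $1.9 million corresponds to a company of roughly 5,300 people.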

> People use AI every day on their iPhone without calling it that; I can easily see that.
>
> Machine learning is used to understand speech, generative AI for text to speech, image correction and stabilisation, pathfinding for maps, automatically giving pictures relevant names instead of long anonymous numbers, editing pictures by voice command (remove X, change Y to Z, etc.), even agents that speak to hotel or restaurant agents to make reservations. It will never be patched out.
>
> People will simply, like they always did, start to call it "computer" again and reserve the word AI for stuff computers have yet to do well.

I know, I'm mostly being flippant about the hype and hysteria over machine learning.

> It's opt out, so it's on by default after the update gets installed. It's not an app, so there is no way to uninstall it. The only thing to do at the moment is turn it off, but who knows how long Microsoft will let you do that.

Turn it off... until the next minor update where it switches it back on.


> It's opt out, so it's on by default after the update gets installed. It's not an app, so there is no way to uninstall it. The only thing to do at the moment is turn it off, but who knows how long Microsoft will let you do that.

Debloating tools like NTLite and MSMG Toolkit are currently being updated for 23H2 so they can remove Copilot from the install ISO. Copilot likely falls onto the growing list of Windows components that are not uninstallable after the fact, and the only way to avoid them is to never let them install to begin with. As the saying goes, "the best way to survive a plane crash is to be on the ground when it happens."

> I would like to see a list of what organizations are actually planning on using this for organizational data in support of decision-making so I can avoid them.
>
> It's only a matter of time until this AI nonsense makes a catastrophic, business-ending statistical assumption, and I don't want to be working at or invested in any of those businesses when it happens.
>
> You cannot trust AI. It's just a series of statistically based guesses. It is not a substitute for rational decision-making.

Data analysis is the same with people. The difference is that people, while having bias, still have intuition and foresight. AI doing data analysis will be doing cold analysis with whatever bias the executives demand hard-coded into the algorithm. Such a thing will accelerate the death-by-data epidemic we're currently dealing with.

Humans make company-ending bad decisions all the time. Humans struggle to order a donut at the drive-through in the morning, or find out they are getting divorced and then go on to make corporate decisions. Removing people's useless and impeding emotions from data analysis is a far better path.

The number of humans who actually change their minds based on explicit data is pretty small. Most go by their gut, which is in fact making statistical guesses based on its training data.

But programming based on a gut instinct about which function you should build next is a risky process, unless you have been building the same thing the same way for a decade.

> The danger of AI

There's a reason I keep making Butlerian Jihad cracks.

> If I were king for a day, I'd even go so far as to completely ban all applications of AI. I'm OK with and even in favor of Machine Learning models where they find potential patterns for humans to further investigate, but AI making decisions is fantastically dangerous

I mean, you are not serious, and you say you are in favor of what you would ban, but this (if even a bit serious) is ridiculous.

Banning video game NPCs, video stabilization, computers playing chess: how would we define AI in a way that does not just ban pretty much every computer application?

> That's not the type of AI we are discussing here. Applications that have real-world implications, however, should ideally be off the table.

Which type of AI are we talking about then? That's a bit the point: it will be really hard to define any ban on AI.

I dunno. GPT-4 isn't nearly as dumb as some of my friends, and isn't going to choose Windows for a desktop, for example. Or get knocked up by a dipshit. Or buy a house with a mortgage right before interest rates are obviously set to climb for decades.

Here’s what got added to my licenses today

View attachment 613563


If we talk about real-world implications: say a machine learning system helps detect a potential issue from a giant number of drone pictures of a crop field and sends the picture with a warning to someone. Should that be off the table? What about speech to text? Systems that detect child pornographic content?