MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,529

Do you have an Amazon link to the display you're talking about? I'm just curious about its price and reviews on Amazon since you give it such a good recommendation.

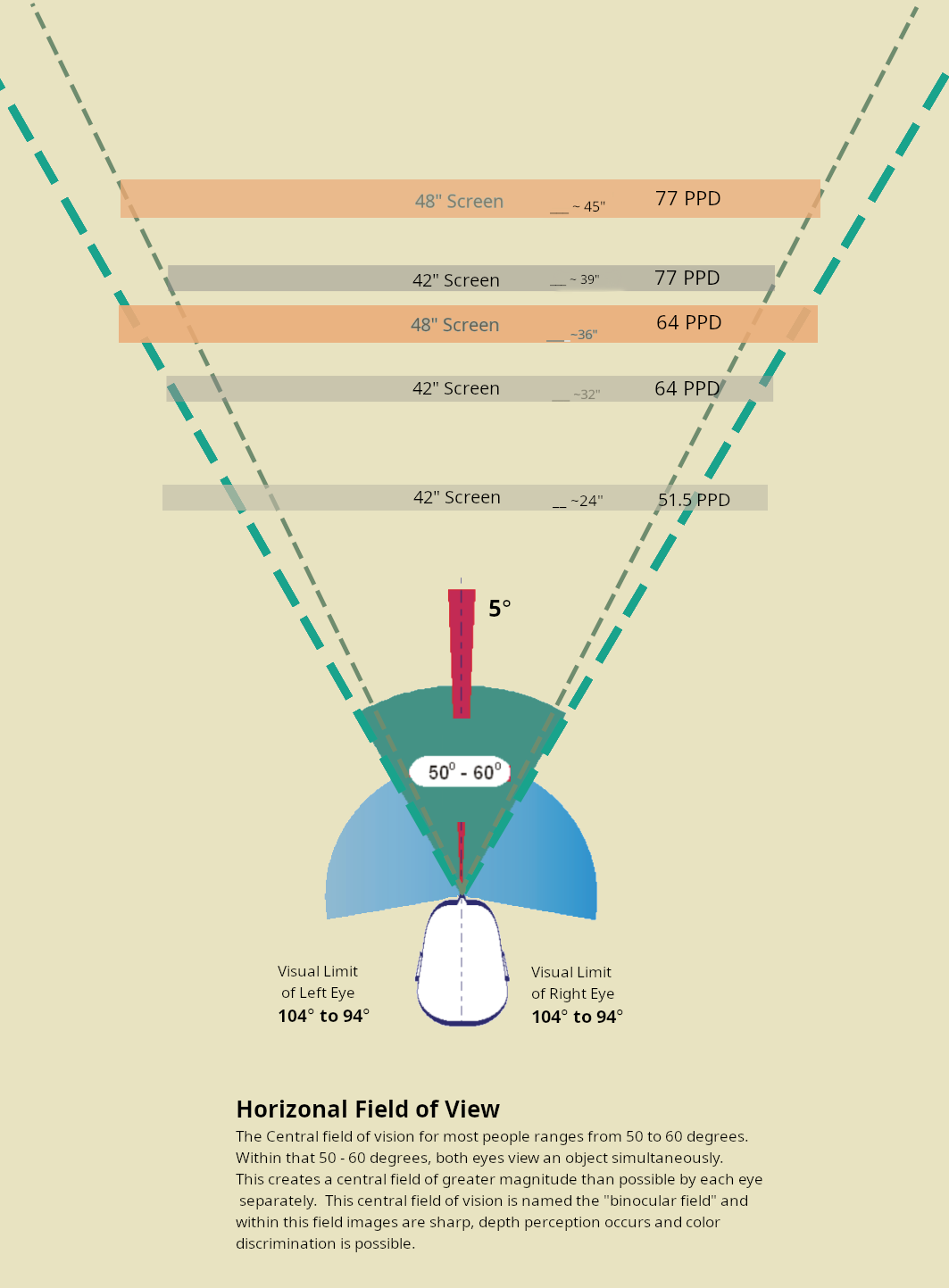

They actually delist the product every time it goes out of stock, so you have to wait for them to restock it before the item gets listed again. RTINGS already reviewed the 27-inch version, the biggest difference being that the 27-inch version can do 160Hz and has a faster response time. I paid $850 for mine back in Feb, and between this display and an LG C2 for about the same price, I would pick this one every single time.
