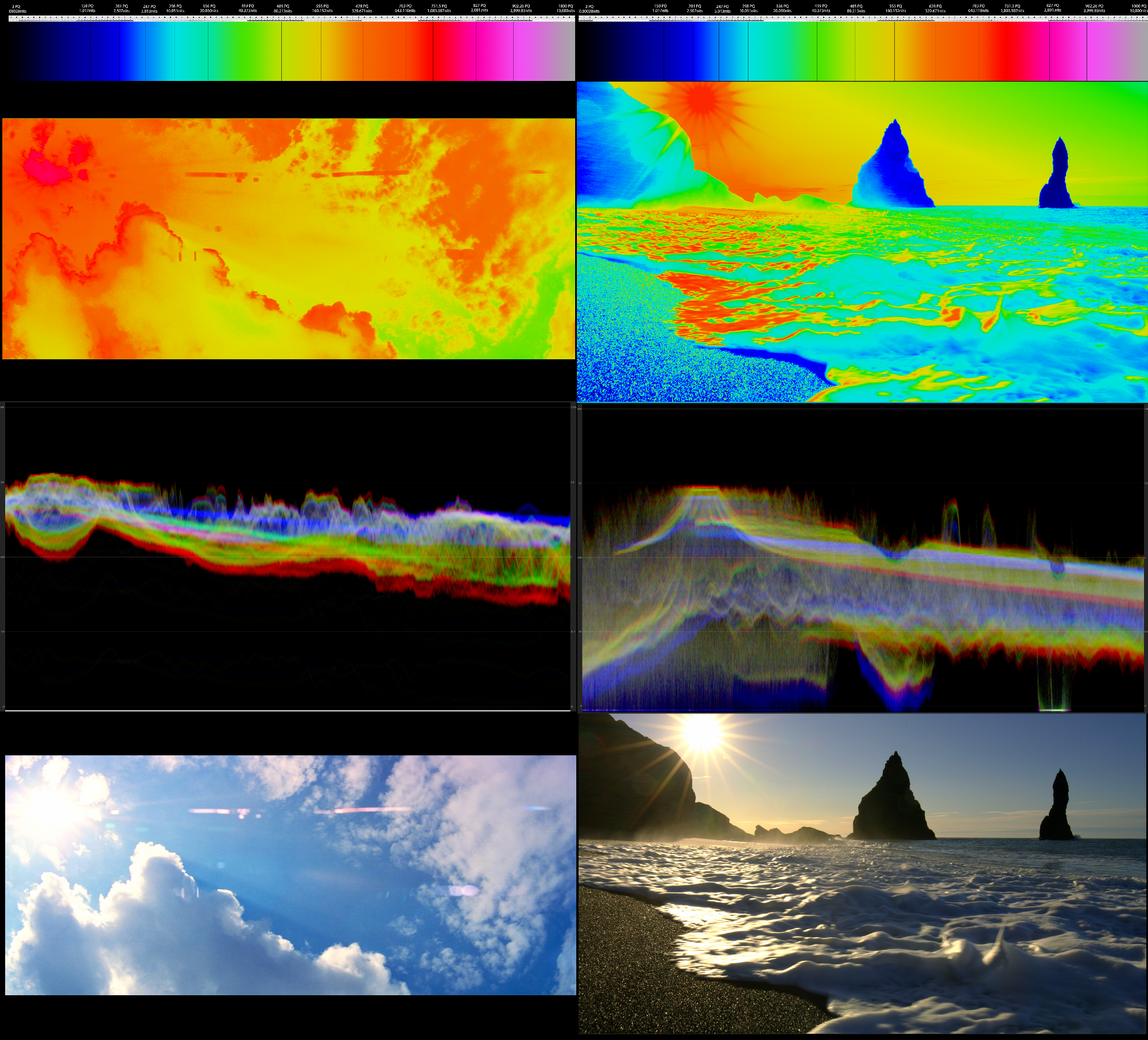

> You just downplay the importance of brightness as if it doesn't matter. If there is no brightness, then there is no color. Realistic images have much higher midtones to showcase 10-bit color. A higher range is always better.

Of course overall image brightness matters, but it is somewhat moot for SDR. The SDR reference brightness is generally 100 nits. Anyone is free to go above that, as I do, if you want a bit more pop. But again, content mastered in sRGB can't look more accurate than sRGB.

I can turn the brightness all the way up on my TV for SDR if I want (it's at 9 now for ~160 nits and goes all the way to 50). Colors would pop much more, and compared side by side, there would be no contest as to which looks prettier/more vibrant if I took a photo. I'd also have a headache, and my eyes would burn at the first white screen I came to. Of course, this isn't desirable. In a dark room, not comparing side by side, my eyes adjust to the 160 nits, and things look good and are sufficiently easy on the eyes while looking like they're supposed to, albeit a touch brighter per my preference.

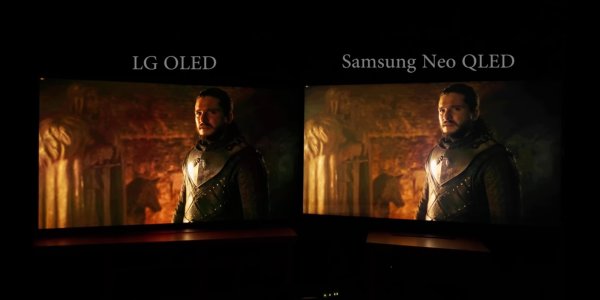

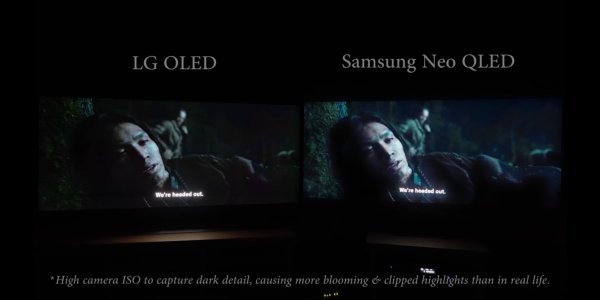

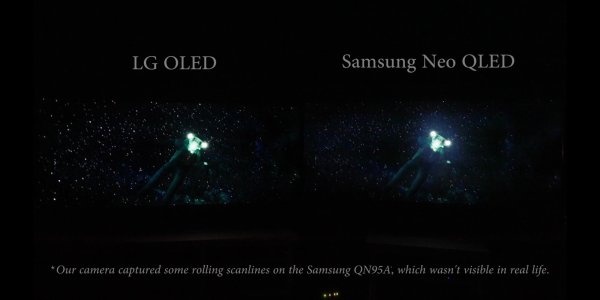

Even for HDR, the technology displays things at the nits they're meant to be displayed at, and the majority of those things are going to be in the SDR or slightly-above-SDR brightness range. Where you need that extra brightness oomph is for things like suns, lights, neon signs, etc., and OLED does fine here most of the time. FALD does, of course, do better because it can get brighter and sustain it longer, so OLEDs are limited with full-field, super-bright images. But those tend to be fairly brief in games and movies, since they'd be too bright to look at comfortably for long anyway, so in most cases OLED is going to look quite good, and that's been my experience so far.

> It's ridiculous to give up sustained 1600 nits and 10-bit color, just to avoid a little local dimming bloom, for a 200-nit OLED experience. A dim image with a few dots of highlight is not true HDR. A FALD LCD can display better than this.

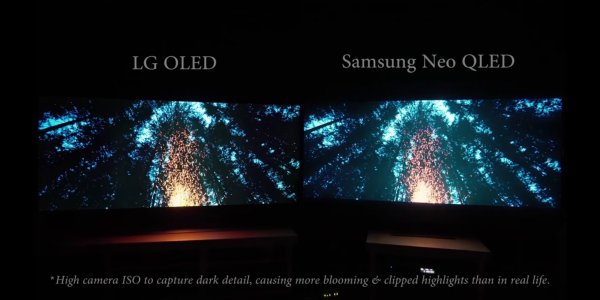

It may be ridiculous *to you*, and that's fine. The bloom I was experiencing in day-to-day use bothered me much more than any ABL on this monitor ever could, and that was on a more expensive display. In short, I tried one of the best-reviewed FALD monitors on the market and was pretty unhappy. I'm much happier with the OLED. You can argue specs all you want. Hell, I bought the FALD because of the specs and was avoiding OLED for those same reasons. But real-world use matters, and in the end, I was much happier with the OLED's picture in the vast majority of situations I use the display for, and its HDR implementation is plenty for me.

> As far as panel technology goes, manufacturers, especially LG, are on a road to go all-in, just like Sharp. If things don't go well, LG will realize it too late: before it can jump to next-gen tech, FALD LCD will already have taken the market.

I'm excited for the future of miniLED and perhaps even more so microLED. But I also don't feel it's there yet; it still has a lot of issues that need to be ironed out. Who knows? Maybe FALD will solve its issues and be king with something like microLED, maybe OLED will continue to improve, solve burn-in and brightness, and be king, or maybe something different altogether will end up being the best technology by combining the best of both. But for right now, both FALD and OLED are popular with different people for valid reasons. They both have clear pros and cons, and the choice comes down to which upsides a person values most and which downsides a person can live with.

> Accuracy within the limited sRGB range doesn't mean the image will be better or more natural. Accuracy is intended for mass distribution. It's more important for the creators. These creators might not have the intention or budget to implement HDR for better images. Even if they implement native HDR, the UI can still shine at max brightness, as in every game made by Supermassive. These anomalies are easy to fix.

Native HDR can be good or bad depending on implementation, and the industry is still working out the kinks in that. I remember Red Dead Redemption's HDR was notoriously broken for quite a while. But the anomalies with AutoHDR do extend beyond that. When there isn't proper information about how bright different parts of a picture are supposed to be, an algorithm's extrapolation can be good, but it's far from perfect. Again, you may not prefer an sRGB image. But I do, for its objective accuracy and for the naturalness I perceive. Many creators prefer that those who enjoy their work view it as close to reference as possible.

> AutoHDR is the way to go if you want to see a higher range. It is only a matter of time before AutoHDR gives users various options, such as adjusting shadows, midtones, highlights, and saturation, just like native HDR, so they can see better HDR matched to the range of their monitors instead of SDR sRGB.

The higher range is artificial, though. It's nothing more than an educated guess expanding an image not meant for the HDR range into the HDR range. The results may be appealing to some people, but they won't be for everyone, me included.

As far as customizability goes, I think giving people options is great. Me, personally? I don't want to spend forever tweaking an image to make it the way I want it, especially when I may have no reference for what it was supposed to look like in the first place. Intent and accuracy matter to me, so I just want to watch content as close to the creator's intent as possible on my display. I might make minor tweaks to things like brightness, but otherwise I'd prefer to keep it simple for myself. (Though I'm fine with others having all the options in the world, as long as I don't have to use them =oP.)

I think on most of these points, we're just going to have to agree to disagree. I can respect that not everyone has the same priorities with content. I'm just trying to point out that not everyone has the same criteria for what's best, and a great monitor that's the best fit for someone can mean different things to different people. I never expected that to be OLED for me, but here we are, and at this point in display technology, it is.
