Randall Stephens, [H]ard|Gawd (joined Mar 3, 2017; 1,820 messages)

That's a lot of "documentary" storage. I know nothing about SnapRAID or DrivePool, but I'm going to have to read up on them now.

I have always been a huge ZFS fan. It does its RAID magic in software on plain JBOD disks, and it is reportedly one of the most reliable RAID-like solutions out there because it checksums every block, which lets it detect bit rot and, given redundancy, repair it.
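ZFS's bit-rot protection rests on each block's checksum being stored in its parent block pointer, separate from the data itself, so a disk that silently returns wrong data fails verification and ZFS can rebuild the block from redundancy. The sketch below is a simplified toy model of that self-healing read on a two-way mirror. The `Block` class and `self_healing_read` function are hypothetical illustrations, not ZFS code; SHA-256 stands in for ZFS's default fletcher4 checksum, and real RAIDZ vdevs reconstruct bad blocks from parity rather than reading a second copy.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Block:
    """Toy block pointer: the parent holds the checksum, not the data block itself."""
    checksum: bytes


def checksum(data: bytes) -> bytes:
    # ZFS defaults to fletcher4; SHA-256 is used here only because it is in the stdlib.
    return hashlib.sha256(data).digest()


def self_healing_read(ptr: Block, copies: list[bytearray]) -> bytes:
    """Return the first copy that verifies, then overwrite any copy that does not."""
    good = None
    for copy in copies:
        if checksum(bytes(copy)) == ptr.checksum:
            good = bytes(copy)
            break
    if good is None:
        raise OSError("unrecoverable: every copy failed its checksum")
    # Repair step: rewrite damaged copies with the verified data.
    for copy in copies:
        if checksum(bytes(copy)) != ptr.checksum:
            copy[:] = good
    return good


# Usage: flip one bit on one side of the mirror and read it back.
data = b"family video, 2009"
ptr = Block(checksum(data))
disk_a = bytearray(data)
disk_b = bytearray(data)
disk_a[0] ^= 0x01  # simulated silent corruption
assert self_healing_read(ptr, [disk_a, disk_b]) == data
assert bytes(disk_a) == data  # the bad copy was healed
```

A plain RAID mirror without checksums would have no way to tell which of the two disagreeing copies is correct; the separately stored checksum is what makes the repair decision possible.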

I believe TrueNAS Core (formerly FreeNAS) still offers a web-based GUI for ZFS on top of a barebones FreeBSD install. I haven't used it in ages, though; I prefer the DIY approach of managing ZFS from the command line.

I currently have my main pool configured as follows:

```
 state: ONLINE
  scan: scrub repaired 0B in 10:02:09 with 0 errors on Sun Nov 12 10:26:14 2023
config:

        NAME                          STATE     READ WRITE CKSUM
        pool                          ONLINE       0     0     0
          raidz2-0                    ONLINE       0     0     0
            Seagate Exos X18 16TB     ONLINE       0     0     0
            Seagate Exos X18 16TB     ONLINE       0     0     0
            Seagate Exos X18 16TB     ONLINE       0     0     0
            Seagate Exos X18 16TB     ONLINE       0     0     0
            Seagate Exos X18 16TB     ONLINE       0     0     0
            Seagate Exos X18 16TB     ONLINE       0     0     0
          raidz2-1                    ONLINE       0     0     0
            Seagate Exos X18 16TB     ONLINE       0     0     0
            Seagate Exos X18 16TB     ONLINE       0     0     0
            Seagate Exos X18 16TB     ONLINE       0     0     0
            Seagate Exos X18 16TB     ONLINE       0     0     0
            Seagate Exos X18 16TB     ONLINE       0     0     0
            Seagate Exos X18 16TB     ONLINE       0     0     0
        special
          mirror-4                    ONLINE       0     0     0
            Inland Premium NVMe 1TB   ONLINE       0     0     0
            Inland Premium NVMe 1TB   ONLINE       0     0     0
            Inland Premium NVMe 1TB   ONLINE       0     0     0
        logs
          mirror-3                    ONLINE       0     0     0
            Intel 280GB Optane 900p   ONLINE       0     0     0
            Intel 280GB Optane 900p   ONLINE       0     0     0
        cache
          Inland Premium NVMe 2TB     ONLINE       0     0     0
          Inland Premium NVMe 2TB     ONLINE       0     0     0

errors: No known data errors
```

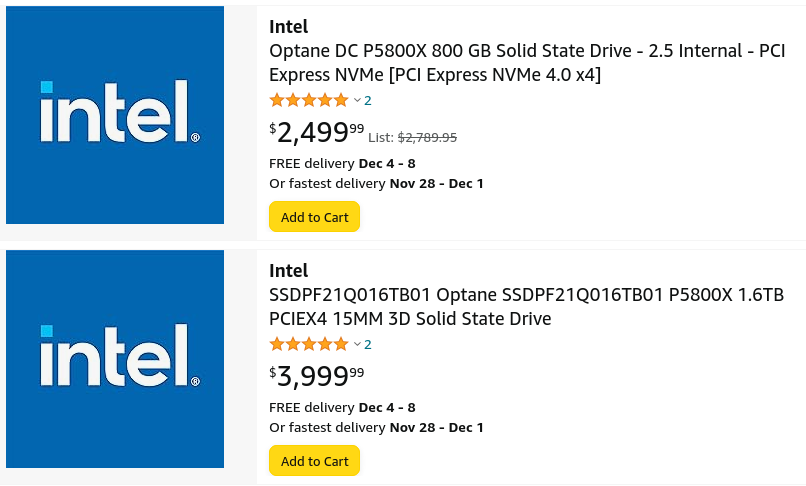

So it's essentially the ZFS equivalent of a 12-drive RAID60 on the hard drives: two 6-drive RAIDZ2 vdevs striped together. On top of that there's a three-way mirror of 1TB Gen 3 MLC NVMe drives as a special vdev for small files and metadata, two mirrored Optanes as the log device (SLOG, which speeds up sync writes), and two striped 2TB Gen 3 NVMe drives for read cache (L2ARC).
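The RAID60 comparison comes down to simple capacity arithmetic: each 6-drive RAIDZ2 vdev gives up two drives' worth of space to parity, so two such vdevs of 16TB drives hold 2 × 4 × 16TB = 128TB of data while tolerating up to two drive failures per vdev. The hypothetical helper below does that calculation. It ignores ZFS metadata, RAIDZ padding and the pool's reserved slop space, so real usable space will be somewhat lower.

```python
TB = 10**12  # decimal terabyte, as drive vendors count it
TiB = 2**40  # binary tebibyte, as most OS tools report it


def raidz_usable_bytes(vdevs: int, disks_per_vdev: int, parity: int, disk_bytes: int) -> int:
    """Data capacity of striped RAIDZ vdevs, ignoring metadata, padding and slop space."""
    if parity >= disks_per_vdev:
        raise ValueError("each vdev needs more disks than parity devices")
    return vdevs * (disks_per_vdev - parity) * disk_bytes


# The pool above: two 6-wide RAIDZ2 vdevs of 16TB drives.
usable = raidz_usable_bytes(vdevs=2, disks_per_vdev=6, parity=2, disk_bytes=16 * TB)
print(f"{usable / TB:.0f} TB = {usable / TiB:.1f} TiB")  # 128 TB = 116.4 TiB
```

The TB/TiB split is why a "128TB" pool shows up as roughly 116T in `zfs list` even before any overhead is counted.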

It works pretty well for me, except perhaps the read cache. The hit rate on those drives is atrocious: despite 4TB of total capacity, that read cache does very little for me.
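One way to put a number on that hit rate is ZFS's own ARC counters. On Linux, OpenZFS exposes them in `/proc/spl/kstat/zfs/arcstats`; FreeBSD publishes the same counters as `kstat.zfs.misc.arcstats.*` sysctls. Below is a minimal sketch, assuming the Linux kstat text layout (a header line, a `name type data` line, then one counter per line); the function names are hypothetical. Keep in mind the L2ARC is only consulted after a miss in the in-RAM ARC, so its hit rate is measured against reads that already missed the first-level cache.

```python
from pathlib import Path

# Linux OpenZFS location; FreeBSD exposes these counters via sysctl instead.
ARCSTATS = Path("/proc/spl/kstat/zfs/arcstats")


def parse_arcstats(text: str) -> dict[str, int]:
    """Parse the kstat table, skipping the two header lines and any non-integer rows."""
    stats: dict[str, int] = {}
    for line in text.splitlines()[2:]:
        fields = line.split()
        if len(fields) != 3:
            continue
        name, _kind, value = fields
        try:
            stats[name] = int(value)
        except ValueError:
            continue
    return stats


def hit_rate(stats: dict[str, int], prefix: str = "") -> float:
    """Fraction of lookups served by the ARC (prefix='') or the L2ARC (prefix='l2_')."""
    hits = stats[f"{prefix}hits"]
    misses = stats[f"{prefix}misses"]
    total = hits + misses
    return hits / total if total else 0.0


if __name__ == "__main__" and ARCSTATS.exists():
    stats = parse_arcstats(ARCSTATS.read_text())
    print(f"ARC hit rate:   {hit_rate(stats):.1%}")
    print(f"L2ARC hit rate: {hit_rate(stats, 'l2_'):.1%}")
```

A high ARC hit rate alongside a low L2ARC hit rate usually means RAM is already catching most of the repeat reads, leaving the L2ARC mostly the long tail of data that rarely gets re-read.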
