Western Digital made both enterprise storage planners and dedicated porn hoarders swoon today with the availability of 24TB CMR hard disks, while production on 28TB SMR hard disks ramps up during enterprise trials.

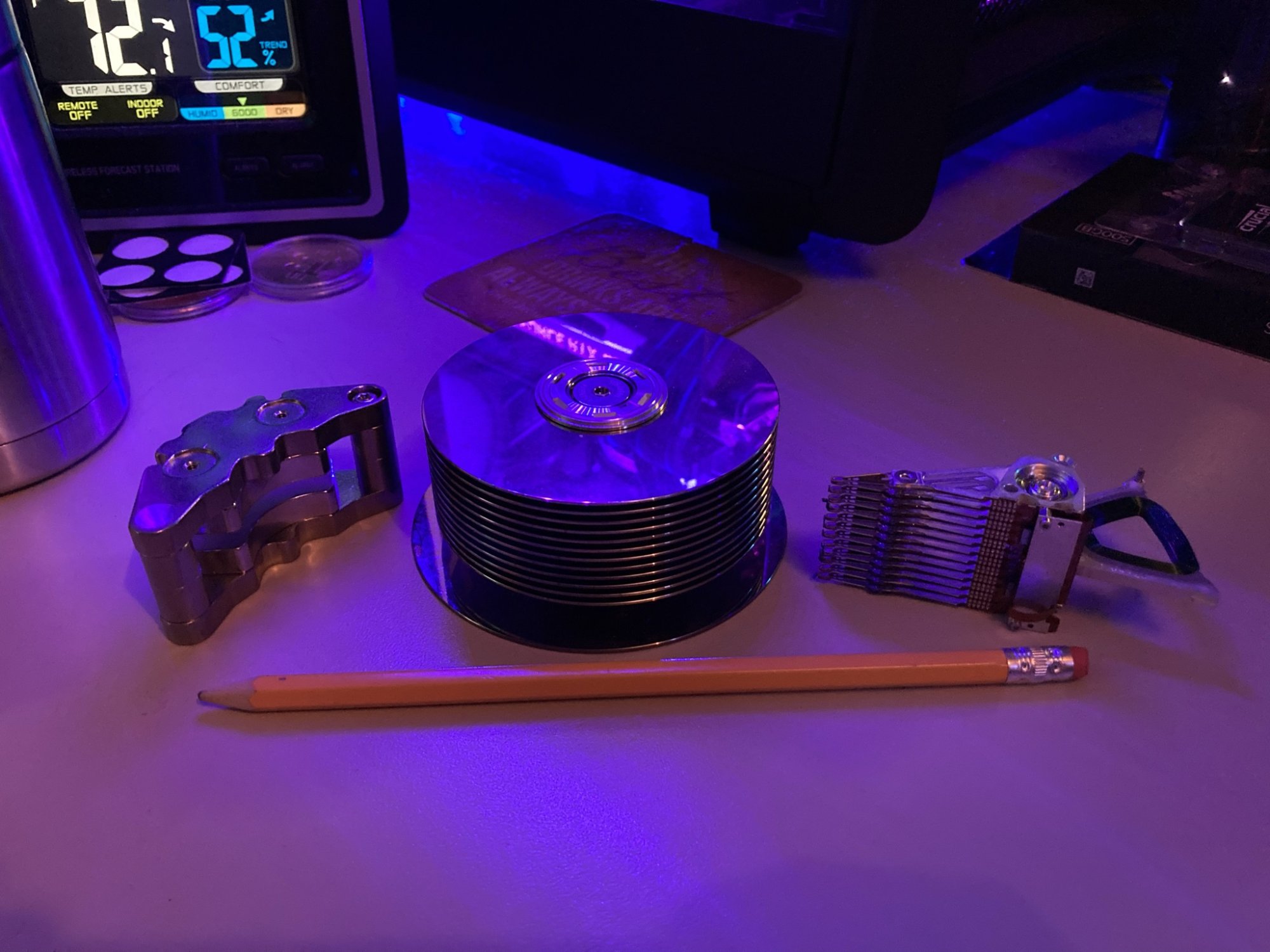

The new lineup of 3.5-inch 7200 RPM hard drives includes Western Digital's Ultrastar DC HC580 24 TB and WD Gold 24 TB HDDs, which are based on the company's energy-assisted perpendicular magnetic recording (ePMR) technology. Both drives also use OptiNAND, which stores repeatable runout (RRO) metadata in NAND memory instead of on the disks to improve performance and reliability.

The new drives are slightly faster than their predecessors due to higher areal density. Meanwhile, the per-TB power efficiency of Western Digital's 24 TB and 28 TB HDDs is around 10% to 12% higher than that of the 22 TB and 26 TB drives, respectively, because capacity has gone up while power consumption has stayed more or less the same.
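
For anyone who wants to sanity-check the per-TB claim, here is a rough Python sketch of the arithmetic. The post gives no wattage figures, so the power values below are normalized placeholders (an assumption), and `per_tb_gain` is just a hypothetical helper name.

```python
def per_tb_gain(old_tb, new_tb, old_watts=1.0, new_watts=1.0):
    """Fractional improvement in capacity per watt (TB/W), old drive to new drive.

    The wattage defaults are normalized placeholders, not measured values.
    """
    old_eff = old_tb / old_watts
    new_eff = new_tb / new_watts
    return new_eff / old_eff - 1.0


# Assume identical power draw, so only the capacity change matters.
cmr_gain = per_tb_gain(22, 24)
smr_gain = per_tb_gain(26, 28)

print(f"22 TB -> 24 TB: {cmr_gain:.1%}")  # 9.1%
print(f"26 TB -> 28 TB: {smr_gain:.1%}")  # 7.7%
```

With exactly equal power draw, capacity alone accounts for roughly 8% to 9%, so the quoted 10% to 12% would also imply somewhat lower power draw on the new drives. Plug in real wattage from the datasheets if you have it.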

Source
