cageymaru

Fully [H]

- Joined: Apr 10, 2003

- Messages: 22,132

Another new release, another reason not to upgrade. Insert game name here: Titanfall 2. The game will not load on a dual-core CPU unless it supports four threads.

http://www.techspot.com/review/1271-titanfall-2-pc-benchmarks/page3.html

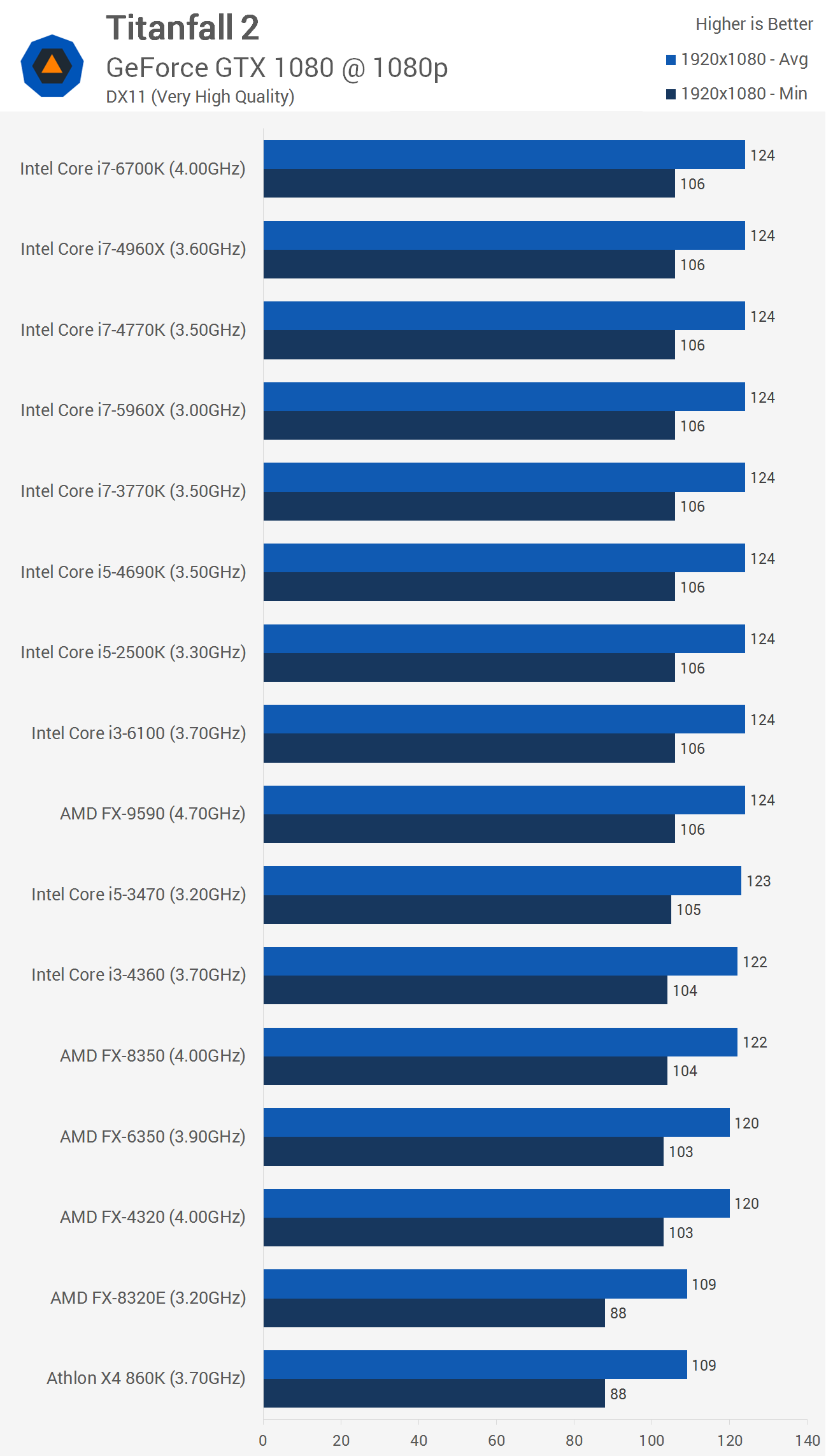

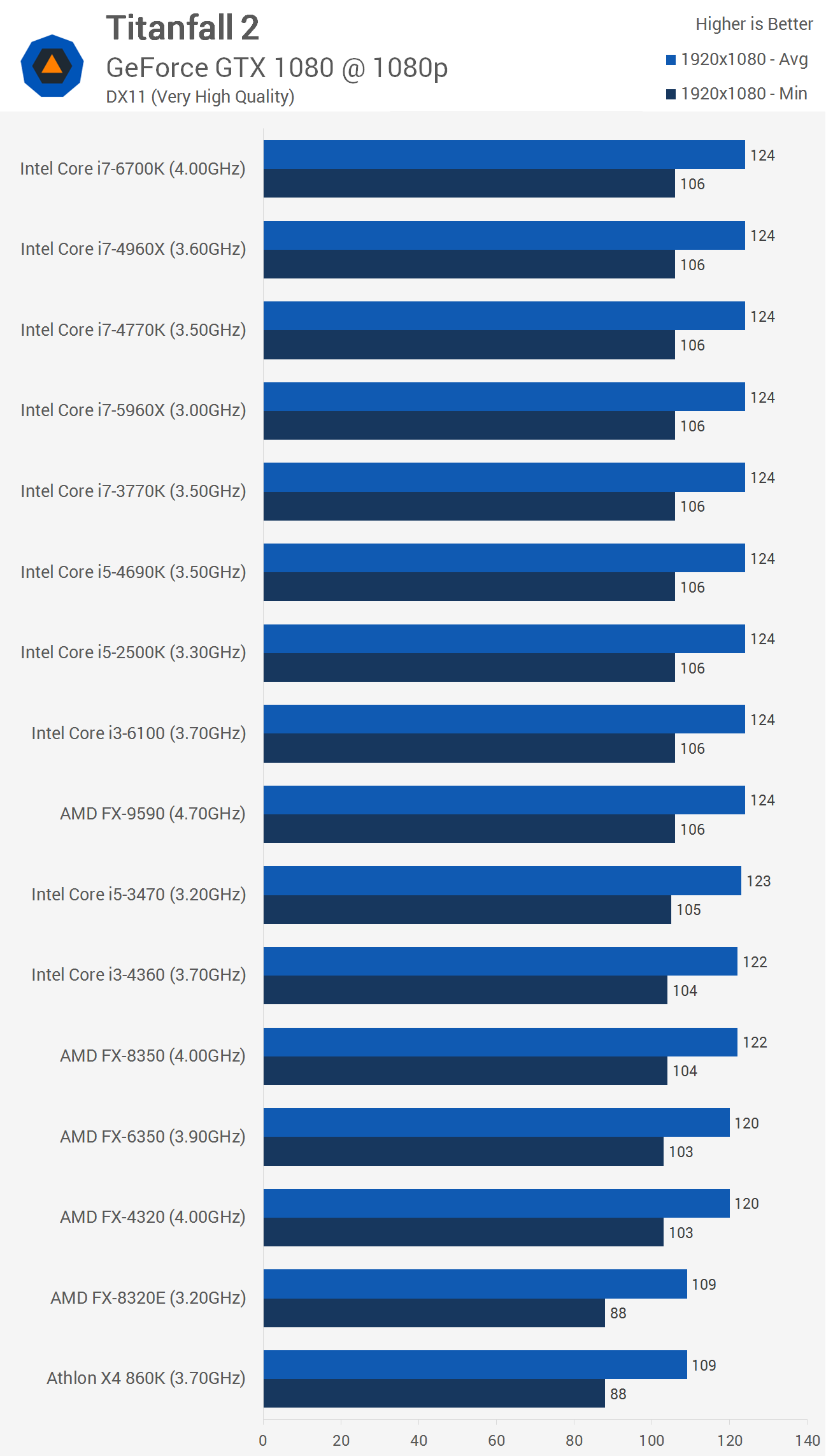

Anyway, from what we found, pretty much any CPU supporting four threads will play Titanfall 2 without an issue. For those interested, we found the same surprisingly low CPU utilization in the multiplayer portion of the game as well.

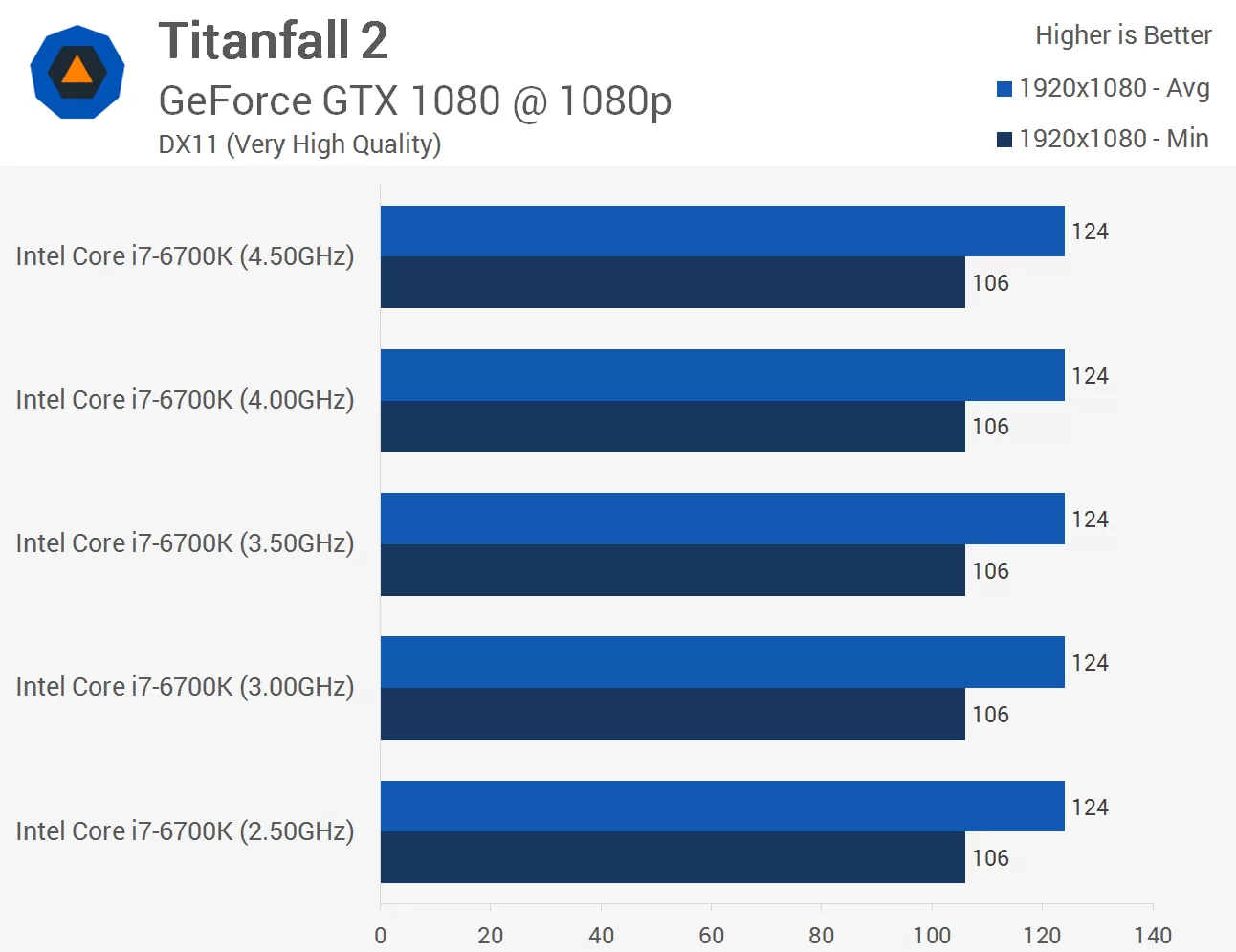

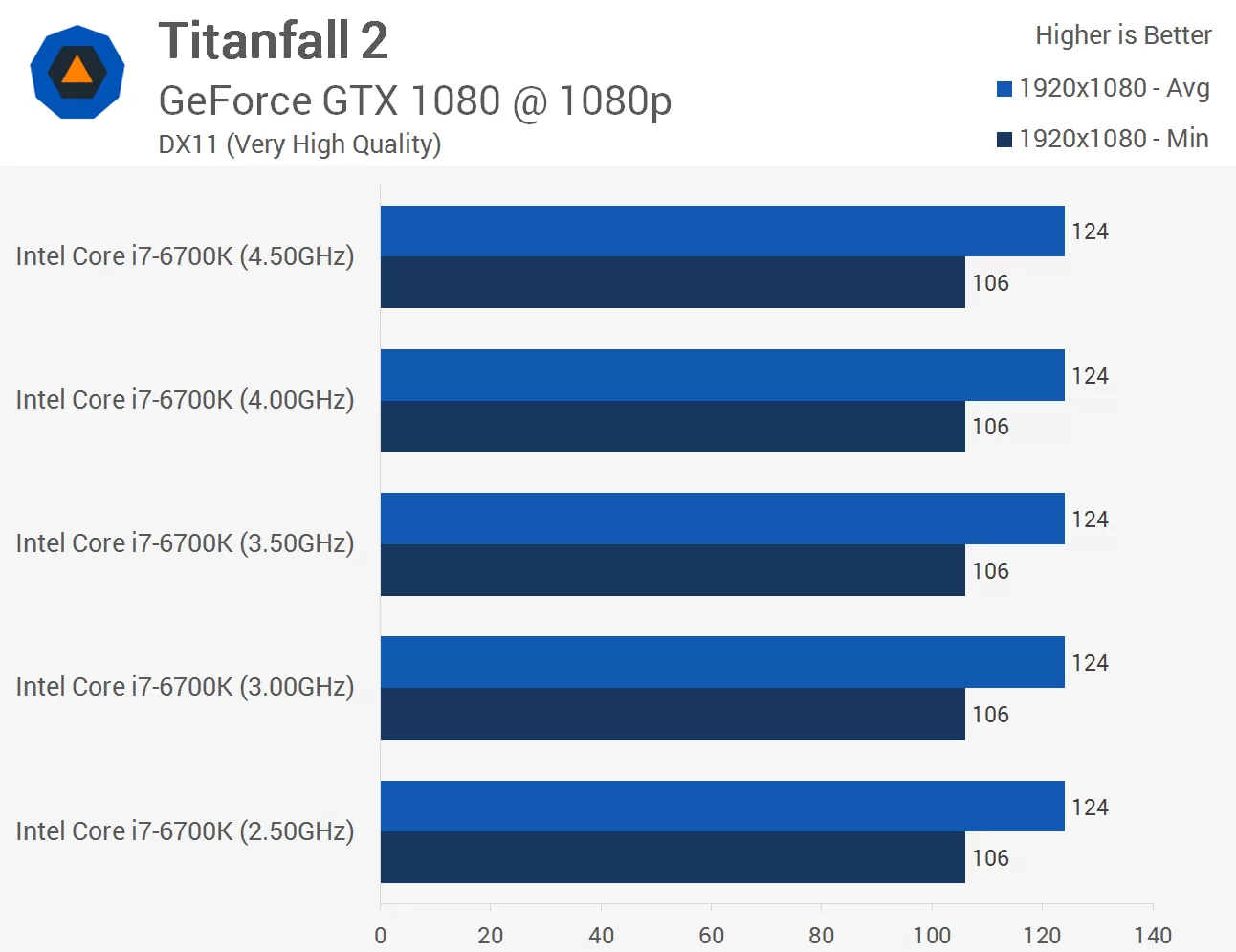

Intel performance at various clock speeds.

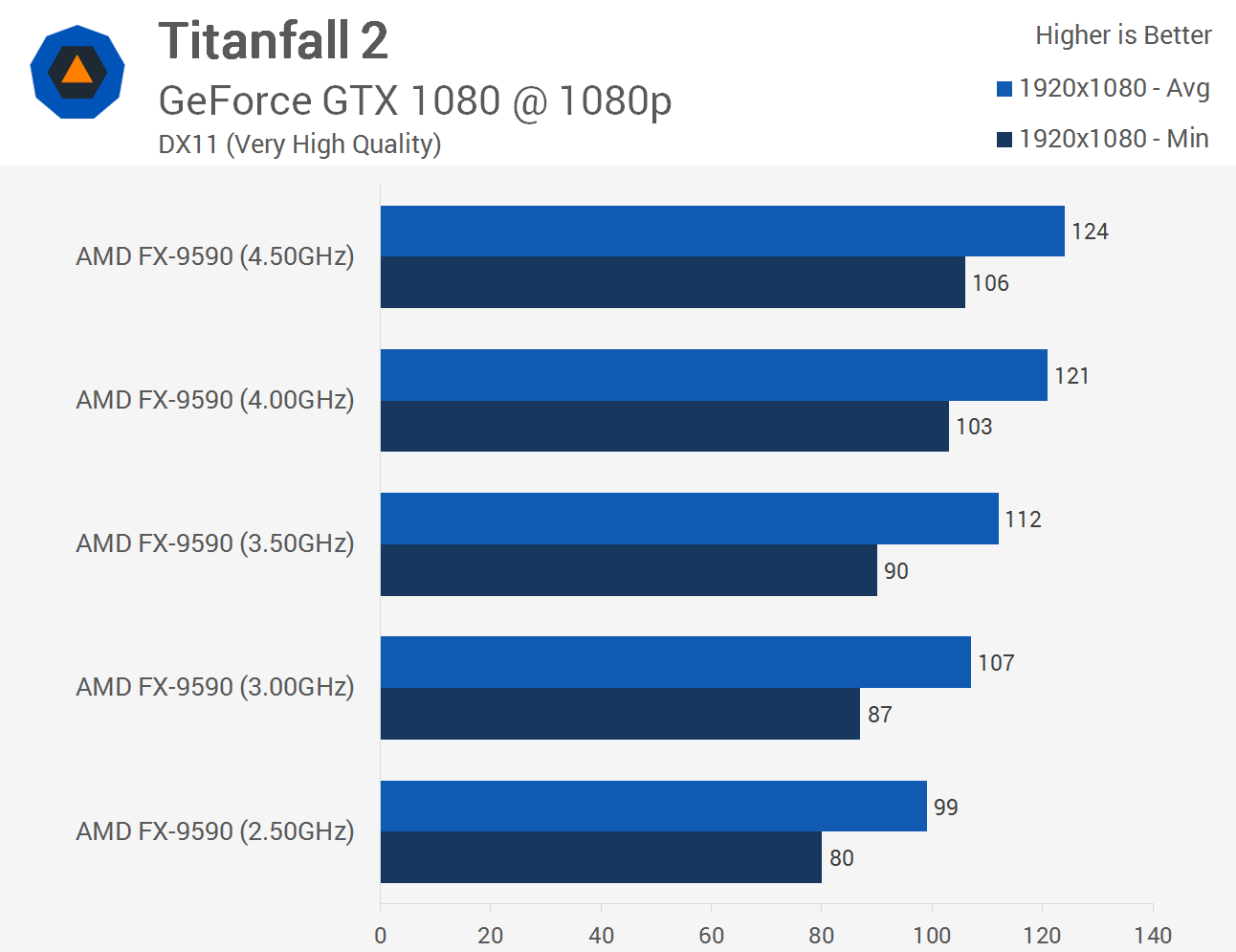

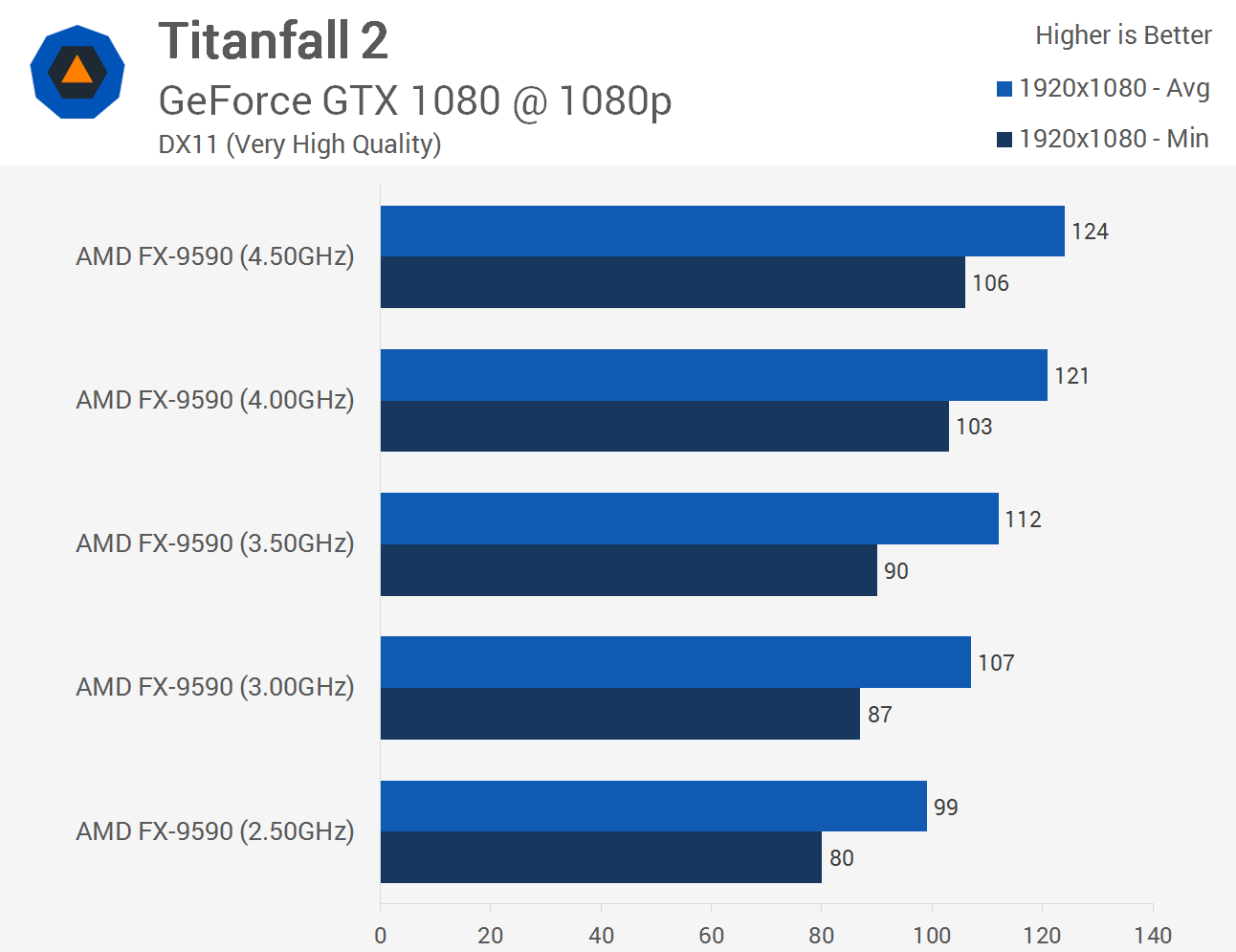

AMD performance at various clock speeds.
