Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,924

I wonder if Civilization could leverage it. A core for every civ should speed up those late-game turns.

Yeah, my googling was turning up that Civ 6 tops out around 20 threads...

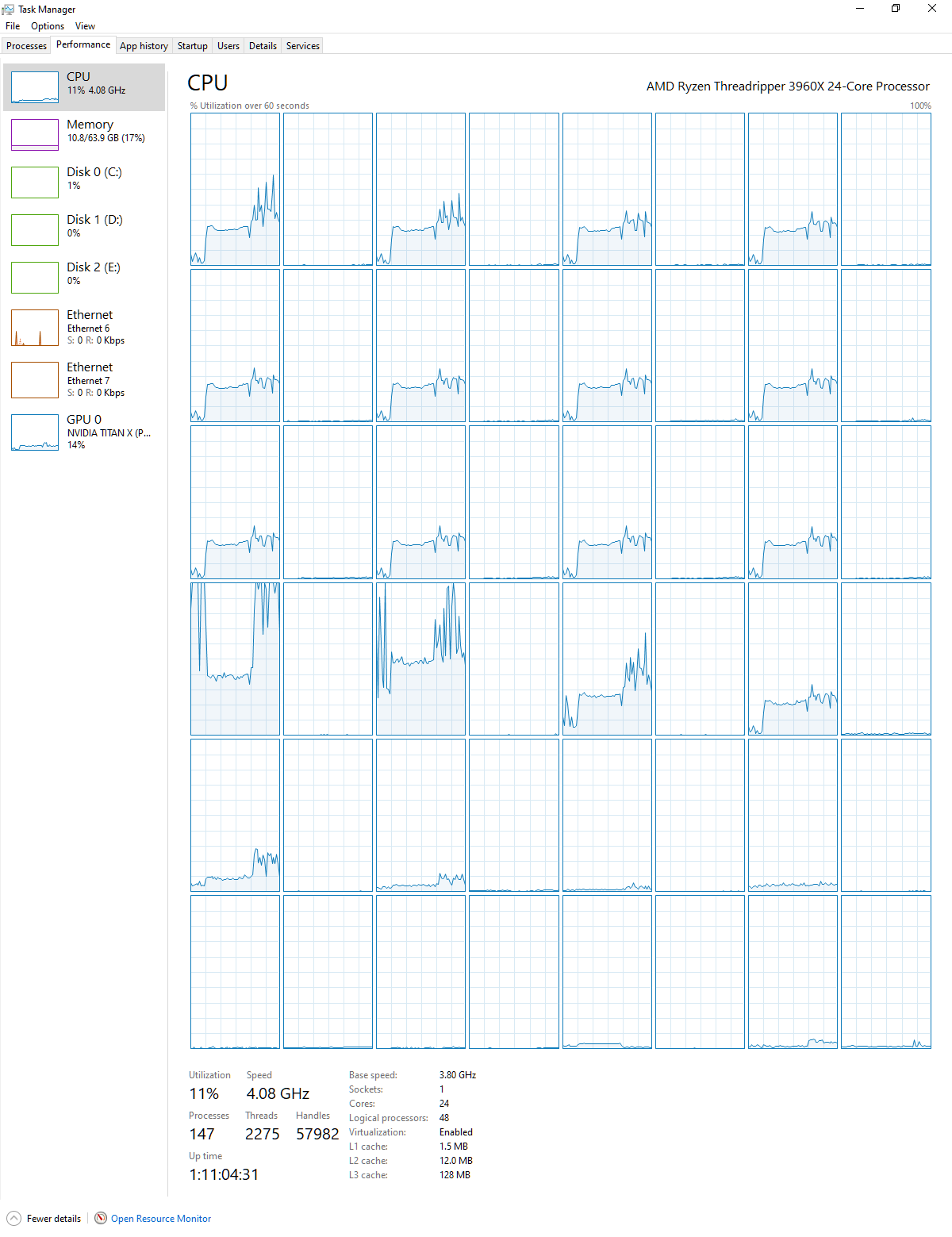

I did all my testing back in 2019 after first buying the Threadripper. I probably posted it on here somewhere.

What I do remember is that it used WAY fewer threads than I expected, given all of their bragging about multithreading.

Of course I did.

From this thread (which has more context)
