LittleBuddy

Limp Gawd

- Joined: Jan 3, 2023

- Messages: 372

It looks so much better with DLSS.

DLSS mod seems to have a good visual stability advantage.

I don't want to live in the country you do, where 11-year-olds vote.

Man, you must really hate the game to appreciate a tech demo old enough to vote, like Star Citizen, better.

Haha. His production values/way of doing things are definitely DIY. But it works! He's been around for a couple of years, but I feel like this year he's hit a bit of a groove and is a good alt resource.

Proof that AMD paid off Bethesda, and put a $13B company into the corner. /s

Have no idea who this guy is, but I really like how he moves his camera around and uses his finger to point at exactly what he is referring to.

Starfield on a potato.

Minimum spec R5 2600X and a GTX 1070ti.

Honestly plays a lot better than I thought it would.

View: https://www.youtube.com/watch?v=kfCCKCeEzUU

Borderless windowed can perform just as well as exclusive full-screen if the game uses something called the "flip model". I believe MS has been trying to force it on windowed games in Windows 11 for a few months now. Some games already do it natively, and the SpecialK mod adds it for a few games. Otherwise, borderless windowed is nice because your game doesn't hang or crash when you alt-tab or do stuff in the taskbar.

https://steamcommunity.com/app/1716740/discussions/0/3824173464656784022/?ctp=2#c3824173464658978011

How to turn on Auto-HDR in Win11. It works. Looks a little better but still screwed up.

I also find it a bit odd that there's no exclusive full-screen mode in the game. Wouldn't that improve performance?

There are problems for Nvidia in this game. But... the 7900 XTX SHOULD be performing a lot better than a 3090 Ti, as a rule. The 7900 XT is closer in equivalent performance to a 3090 Ti.

Folks running Starfield on Nvidia GPUs, I'm curious what your performance / GPU utilization / GPU power consumption look like, specifically at Ultra settings and native res.

Based on some things I'm seeing around (such as Daniel Owen's video) and my own bit of testing, it seems that Ultra quality settings may result in broken performance on NV cards in some scenarios...

Peep this (not actually spoilers)

Note

- 7900XT is performing ~30% better than the 3090Ti, and could go even further with a faster CPU, as it's hitting the CPU limit on the 5950X

- 3090Ti is only consuming 300W despite reporting 99% utilization at 1075mV. That's a big red flag for me; it should be more like 400W-500W at that voltage at 99% util

- This seems consistent across the few areas of the game I've visited so far

- Odd performance behavior persists when the Radeon GPU is disabled in Device Manager and all Radeon-related background software is closed

- Behavior persists after clearing shader caches and reinstalling the game

- Both GPUs are using latest (as of 9/1) drivers, Windows 11 fully updated, Steam version of Starfield

So is there something wrong with maxing settings at native res on NV GPUs in the current version of the game? I'd say this is a fluke on my system (NV and AMD drivers conflicting or something), but I have yet to see this behavior in any other game, and some of the early YT vids seem to corroborate this.
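To check whether another GeForce card shows the same pattern (near-full utilization while drawing far less than its power limit), you can poll `nvidia-smi`. The sketch below uses the real `--query-gpu` fields `utilization.gpu`, `power.draw`, and `power.limit`. The helper names, the 95% utilization and 75%-of-limit thresholds, and the sample line (which assumes a 450 W limit) are illustrative assumptions, not measurements from this thread.

```python
import csv
import io
import subprocess

# Real nvidia-smi query fields; the helper names below are hypothetical.
QUERY_FIELDS = "name,utilization.gpu,power.draw,power.limit"


def read_nvidia_smi() -> str:
    """Return one CSV line per GPU (needs an NVIDIA driver with nvidia-smi on PATH)."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


def flag_low_power(csv_text: str, min_util: float = 95.0, max_power_fraction: float = 0.75):
    """Return (name, util %, draw W, limit W) for GPUs that report near-full
    utilization while drawing well under their power limit."""
    flagged = []
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) != 4:
            continue  # skip blank or malformed lines
        name, util, draw, limit = (field.strip() for field in row)
        try:
            util_pct, draw_w, limit_w = float(util), float(draw), float(limit)
        except ValueError:
            continue  # e.g. "[N/A]" on GPUs that don't report power
        if util_pct >= min_util and draw_w < max_power_fraction * limit_w:
            flagged.append((name, util_pct, draw_w, limit_w))
    return flagged


if __name__ == "__main__":
    # Illustrative line shaped like nvidia-smi output; use read_nvidia_smi() on a real system.
    sample = "NVIDIA GeForce RTX 3090 Ti, 99, 300.12, 450.00\n"
    print(flag_low_power(sample))
```

On a real system, run `print(flag_low_power(read_nvidia_smi()))` while standing in the scene in question; an empty list means no GPU matched the thresholds.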

I'm enjoying it. At least it's a full-fledged game, unlike Star Citizen.

There is literally no immersion in this game. You are locked into a lot of tiny cubes on the planets and in space, all linked together with string/loading screens.

You cannot fly your spacecraft around on a planet; you can only touch down and take off via cutscenes, and in space you can fly about 2 kilometers in any direction. That's the entirety of the movement in this game, besides walking. And this is 2023.

Makes me appreciate Star Citizen more.

With coherent leadership and management, SC really could've been the defining PC space title. What we're left with is a landscape of well-meaning and talented developers taking a stab at it, but, due to the time and resources required, always having to settle for some subset of The Ultimate Space Game: a baby-pool-sized interpretation of space. Thank you for coming to my TED talk.

I'm enjoying it. At least it's a full-fledged game, unlike Star Citizen.

That being said, I do wish we'd get more of the complexity of Elite Dangerous in how the ship/planet stuff works, together with the more full-featured campaign and building systems that this game has.

I'm willing to admit that it is possibly because the HDR features on my screen aren't that amazing. It's an Asus XG438Q, a 43" 4K VA panel with DisplayHDR 600...

But it was a screen that got top reviews when I only just bought it a little while ago... uuhh. I guess that was 4 years ago, but still! In the grand scheme of things, what is 4 years?

Anyway, I don't use Windows 11, so I don't have any of the AutoHDR features. For some games that natively support HDR, I use the feature. Cyberpunk 2077 was one of these, but (at least in Windows 10) it requires that I turn on HDR for the desktop; otherwise the HDR settings don't show up in game, and it doesn't use HDR. That makes white windows (like the file manager and other things) blast so bright that they sear my eyeballs. In general it makes the desktop experience mostly unusable, or at the very least very uncomfortable, so I wind up turning it on, starting the game, and turning it off again. That gets old, and I often forget to enable it before starting the game, don't even notice until I've been playing for half an hour, and don't feel like quitting to enable it.

I guess to me HDR is more of a marginal feature than a game changing one, and on or off it doesn't make a huge difference in game to me, but it does completely ruin my 2D/Desktop experience, and for this reason I almost always keep it off.

And yes, this may be in part because I have refused to "upgrade" to Windows 11, and in part because my monitor is 4 years old, but still.

Monitors used to be the one part of our hobby that would last nearly indefinitely. I can't bring myself to replace it just for HDR, a feature my screen purportedly already has...

At some point I may invest in a 42" LG C<insert number here> but it hasn't been a priority yet, in part because the possibility of burn-in concerns me. I still use this computer for work more than I do for games or movies. We are probably talking 50 hours a week for work work (MS Office, Stats software, email, etc.) with static windows menus and window decorations all day every day. Then maybe another 20-30 hours a week of home productivity and web browsing. I maybe squeeze in 4 hours of games per week.

So for this reason, the screen I pick HAS TO be compatible with productivity style static windows without side effects. Everything else is secondary. I'm not going to get a dedicated screen just for games, when I only have time to spend a few hours per week on games.

I haven't read up enough to know the difference between FALD and other forms of local dimming, but I know my monitor has local dimming, and I usually turn it off. The screen segments are way too large, and it usually just winds up looking worse.

I'm with you on the "too bright for my eyes" part in many cases. Usually not in games, but definitely on the desktop, which is where I spend most of my time.

If 90 is the baseline for 4:3, 106.6667 is what you should use for 16:9.

In Documents/My Games/Starfield, create a text file and name it "StarfieldCustom.ini".

Here is a sample config, from a satisfied user:

```ini
[Display]
fDefault1stPersonFOV=90
fDefaultFOV=90
fDefaultWorldFOV=90
fFPWorldFOV=90.0000
fTPWorldFOV=90.0000

[Camera]
fDefault1stPersonFOV=90
fDefaultFOV=90
fDefaultWorldFOV=90
fFPWorldFOV=90.0000
fTPWorldFOV=90.0000
```
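The 106.6667 figure can be sanity-checked with the usual Hor+ conversion, which keeps the vertical FOV fixed and scales tan(FOV/2) by the aspect ratio. This is a minimal sketch that assumes the 90 in the sample is a horizontal FOV defined at 4:3 and that Starfield scales Hor+. Neither is confirmed here. Under those assumptions, 16:9 comes out to about 106.26, a little under 106.6667. The helper names are hypothetical. The INI keys are the ones from the sample config, written with Python's `configparser`.

```python
import configparser
import io
import math

# Keys from the sample StarfieldCustom.ini; the helpers below are hypothetical.
FOV_KEYS = [
    "fDefault1stPersonFOV",
    "fDefaultFOV",
    "fDefaultWorldFOV",
    "fFPWorldFOV",
    "fTPWorldFOV",
]


def hor_plus_fov(base_fov_deg: float, base_aspect: float, target_aspect: float) -> float:
    """Convert a horizontal FOV to a new aspect ratio, holding vertical FOV constant."""
    half = math.radians(base_fov_deg) / 2
    # With a fixed vertical FOV, tan(horizontal FOV / 2) scales linearly with aspect ratio.
    new_half = math.atan(math.tan(half) * target_aspect / base_aspect)
    return math.degrees(2 * new_half)


def render_custom_ini(fov: float) -> str:
    """Build INI text with the same [Display] and [Camera] keys as the sample."""
    config = configparser.ConfigParser()
    config.optionxform = str  # keep key capitalization (configparser lowercases by default)
    for section in ("Display", "Camera"):
        config[section] = {key: f"{fov:.4f}" for key in FOV_KEYS}
    buf = io.StringIO()
    config.write(buf, space_around_delimiters=False)
    return buf.getvalue()


if __name__ == "__main__":
    fov_16x9 = hor_plus_fov(90, 4 / 3, 16 / 9)
    print(f"{fov_16x9:.2f}")
    print(render_custom_ini(fov_16x9))
```

Save the printed INI text as StarfieldCustom.ini in the folder mentioned above. If the game actually treats these values as a vertical FOV, this conversion does not apply.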

I'm using 7900XT, not XTX. Hence the confusion... Usually the 7900XT has been very close to the 3090Ti (maybe a little faster sometimes), which is why the significant gap was such a surprise, coupled with the suspiciously low TBP on the GeForce card.

No idea how legit videos like this are:

View: https://youtu.be/ZtJLCAWSzR8?t=37

DLSS mod seems to have a good visual stability advantage.

He's a Brit, that's what they do.

I don't understand why this weirdo is walking his PC parts around his garden

The XG438Q, if that is the display you're referring to, is edge lit and DisplayHDR 600. It only has 8 dimming zones that project vertically across the height of the display. It is the second worst form of local dimming, made even worse by the low number of zones on such a large display. Imagine trying to provide highlight to individual stars in space. On edge lit you will have a fat light beam stretching across the screen to try and highlight it, while washing out everything else in its way.

FALD is an array of lights replacing the backlight, each lighting up a circular area of the screen. We're getting more accurate with this form of local dimming, as you can better isolate bright spots on the screen. The localized nature of the lighting also means that it can generally get brighter. The higher the number of zones, the better. Mini-LED FALD uses many small LEDs to light up each area more accurately, but the drawback is blooming that needs to be compensated for in some way.

Direct lighting is a hybrid solution that puts a second LCD panel behind the primary one. It mirrors the output image but displays only the luminance channel, to boost or tone down the brightness of the primary image. It can be more accurate and support even higher brightness levels than FALD, but latency is an issue. The reference displays video producers use, which target the 10,000-nit Dolby specification, use direct lighting, and they're extremely expensive. The latency can also reach hundreds of milliseconds.

OLED is a self-emissive display, where each individual pixel can adjust its brightness independently. As a result, it can be the most accurate HDR display if the signal processing adheres to the EOTF curve. The drawback, of course, is peak brightness, because the diodes are sensitive and have a habit of burning themselves out when too much voltage is applied. Current OLED displays all suffer from varying levels of automatic brightness limiting (ABL), which is there to minimize the speed and effect of burn-in.
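For reference, the 10,000-nit ceiling comes from the PQ transfer function (SMPTE ST 2084), the EOTF developed by Dolby and used by HDR10 and Dolby Vision. The following minimal sketch of that curve shows what "adhering to the EOTF" means numerically: each normalized signal value maps to one absolute luminance. The function name is hypothetical, and real displays tone-map anything above their own peak rather than following the curve all the way up.

```python
# SMPTE ST 2084 (PQ) constants, as defined in the standard.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_eotf(signal: float) -> float:
    """Map a normalized PQ code value (0.0 to 1.0) to luminance in nits (cd/m^2)."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("PQ signal must be between 0 and 1")
    p = signal ** (1 / M2)
    return PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


if __name__ == "__main__":
    for value in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"signal {value:.2f} -> {pq_eotf(value):8.2f} nits")
```

Half the signal range corresponds to only about 92 nits. The curve spends most of its code values on darker tones, where the eye is more sensitive.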

TL;DR

A display with FALD for local dimming is the minimum type of display you need to get a good HDR experience. The number of zones for such an experience also varies by screen size. 576 would be the floor for a 27-32" monitor, in my opinion. I would pick FALD if peak brightness is your primary concern, OLED for picture quality.
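Dividing panel area by zone count puts these zone counts in perspective. This rough sketch assumes flat 16:9 panels and treats every zone as an equal share of the screen. Edge-lit zones are really strips, so the result is only an average. The XG438Q's 8 zones over 43" work out to roughly 99 square inches each, versus under 1 square inch per zone for 576 zones on a 32" panel. The function names are hypothetical.

```python
import math


def panel_area_sq_in(diagonal_in: float, aspect_w: float = 16, aspect_h: float = 9) -> float:
    """Area of a flat panel in square inches, from its diagonal and aspect ratio."""
    diag_units = math.hypot(aspect_w, aspect_h)
    width = diagonal_in * aspect_w / diag_units
    height = diagonal_in * aspect_h / diag_units
    return width * height


def area_per_zone(diagonal_in: float, zones: int) -> float:
    """Average screen area each dimming zone has to cover."""
    return panel_area_sq_in(diagonal_in) / zones


if __name__ == "__main__":
    edge_lit = area_per_zone(43, 8)   # XG438Q: 43" with 8 edge-lit zones
    fald = area_per_zone(32, 576)     # 32" FALD at the suggested 576-zone floor
    print(f"43in / 8 zones:   {edge_lit:.1f} sq in per zone")
    print(f"32in / 576 zones: {fald:.2f} sq in per zone")
    print(f"ratio: {edge_lit / fald:.0f}x")
```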

Ah......for some reason I thought you said XTX!

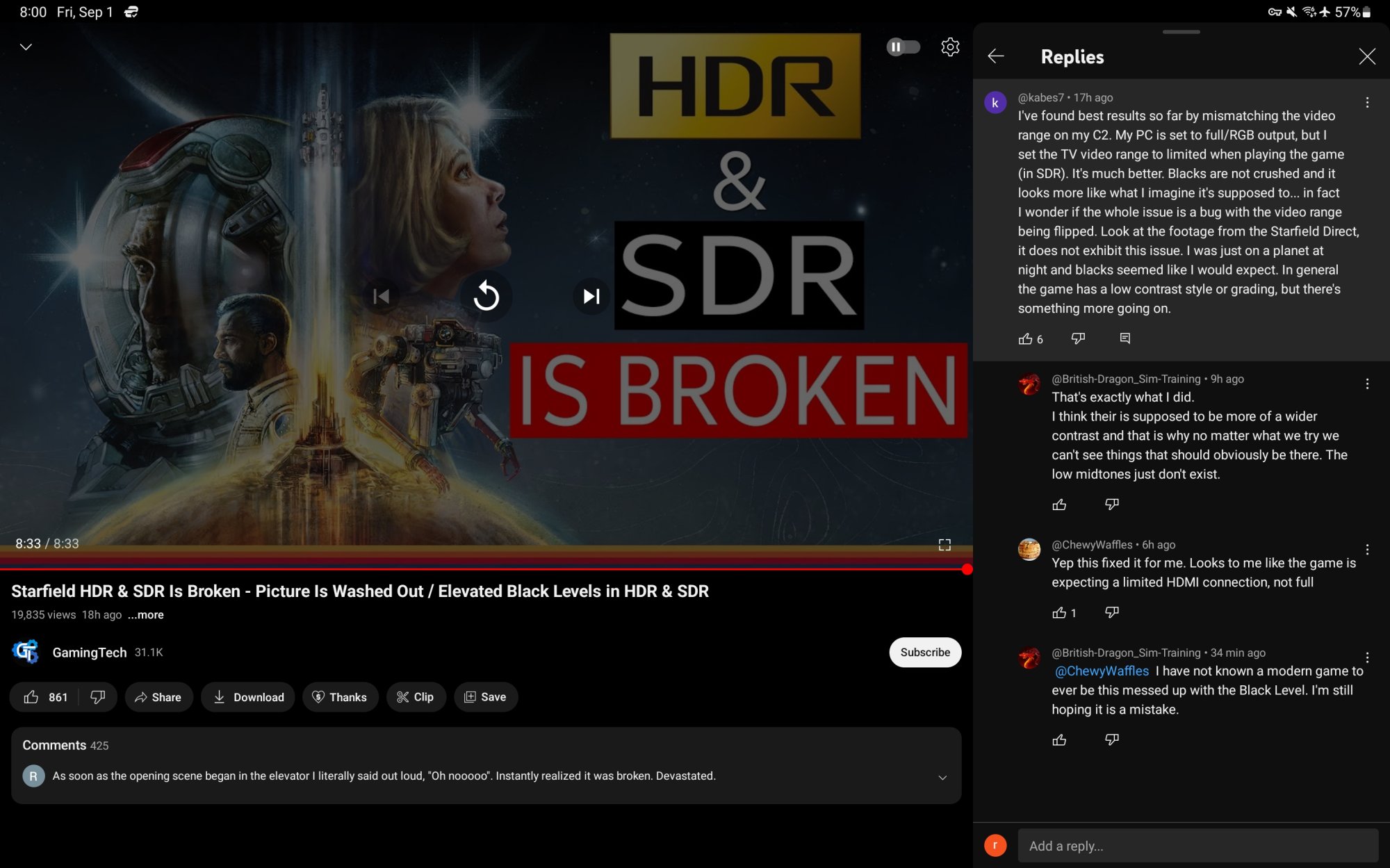

No HDR or Auto HDR on PC, HDR is broken on console, and the SDR color gamut is broken. On console, HDR works in the main menu and loading screens, but not the game itself. Elevated black level is a serious issue on both PC and console. This is retarded. I don't understand how this continues to be an issue in the industry.

View: https://www.youtube.com/watch?v=xi-C3PzKdsI

I'm going to try it on my C2 tomorrow.

Refunded the game before seeing these comments. Wish I could check how much better it may look on my C1.

We have folks putting fake flower pots around or inside their PCs.

He's a Brit, that's what they do.

Now that I've got DLSS and AutoHDR working, I decided to keep the game. That said, this game has the worst graphics-quality-to-performance ratio of any game I've ever played.

I firmly believe that it's a 20-80 mixture. People buy Bethesda games for the platform, then mod the game to their own taste. The REAL game is downloading and testing mods until someday you finally have a game you like playing.

The graphics seem kinda mid to me tbh. Not bad per se, but not quite what I'd expect from a 2023 AAA game that pushes high-end systems to the max. Cyberpunk 2077, Elden Ring, and Control (to name a few) all look better to me, and run better too.

Starfield... Just looks and runs like a Bethesda game? If that makes sense?

Ultimately I don't think that matters much, though, aside from the poor performance... People tend to play Bethesda games for the gameplay, characters, and story. Look at Fallout: New Vegas. It looks like absolute ass even compared to contemporary games, but people still love it and play it.

If you're lucky. Better stick to 1080p for best results.

So I'm looking at 40fps on my RTX 2070 at 1440p... if I'm lucky?

Yikes.

We used to read about PCs in Maximum PC back in the day, and it never said anything about PC experts falling from the sky 20 years in the future making YouTube videos. They need to look up MOHAA and read about it; that was my era of PC gaming.

It drives me insane that he thinks his face should be in the center of a video comparing performance and image quality.

If that's the case, I simply won't buy it. I may see what the 7800 XT does in terms of performance before I make my ultimate decision.

If you're lucky. Better stick to 1080p for best results.

I feel your pain, I have a 2060.

No offense in any way, shape, or form, but listen to what you just said: "the early days". They've had 10 fucking years!

This is still early days.

I'm sure performance will be a bit more palatable after a few patches from Bethesda and driver updates from AMD & Nvidia.

Also don't forget to grab the anime ass main menu replacer.

https://www.nexusmods.com/starfield/mods/264