Dopamin3

Gawd

- Joined

- Jul 3, 2009

- Messages

- 805

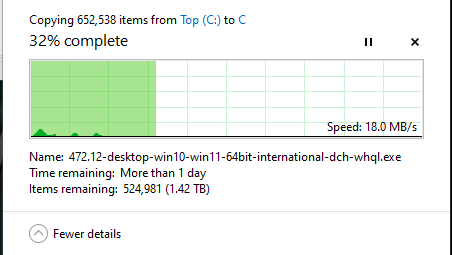

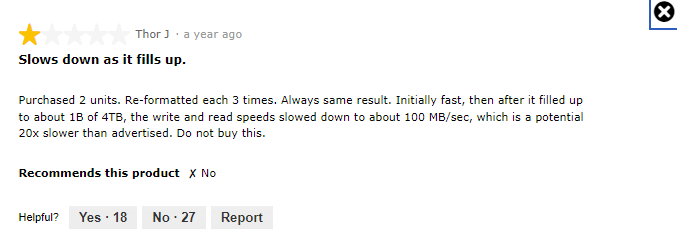

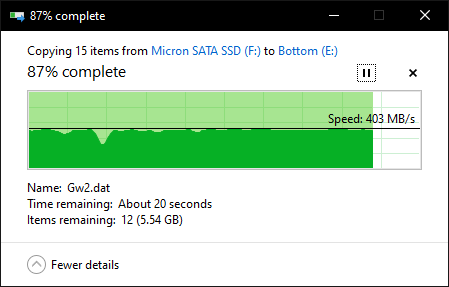

The issue is back after 3 months, and I noticed it only happens on older data; fresh files on the drives seem unaffected. Ultimately I'm going to try to RMA to MicroCenter.
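
For anyone who wants to check the old-vs-fresh pattern on their own drive, a rough Python sketch along these lines should do it. It reads each file sequentially, times it, and averages the MB/s for files older and newer than a cutoff. The function names and the 90-day cutoff are made up for illustration, and modification time is only a rough proxy for when the data was actually written to the drive. Files that were read recently may come out of the OS cache and look faster than the drive really is.

```python
import os
import time

CHUNK = 1024 * 1024  # read in 1 MiB blocks


def read_throughput_mb_s(path, chunk=CHUNK):
    """Read the whole file sequentially and return decimal MB/s."""
    total = 0
    start = time.perf_counter()
    # buffering=0 skips Python's own buffer; the OS cache can still help
    with open(path, "rb", buffering=0) as f:
        while True:
            block = f.read(chunk)
            if not block:
                break
            total += len(block)
    elapsed = time.perf_counter() - start
    return total / 1e6 / elapsed if elapsed > 0 else float("inf")


def file_age_days(path):
    """Age based on modification time (assumption: a proxy for write date)."""
    return (time.time() - os.path.getmtime(path)) / 86400


def compare_by_age(paths, cutoff_days=90):
    """Average read speed for 'old' vs 'fresh' files; None if a group is empty."""
    groups = {"old": [], "fresh": []}
    for p in paths:
        key = "old" if file_age_days(p) >= cutoff_days else "fresh"
        groups[key].append(read_throughput_mb_s(p))
    return {k: (sum(v) / len(v) if v else None) for k, v in groups.items()}
```

Pass it a list of large game files from the affected drive, ideally right after a reboot so the cache is cold. A big gap between the "old" and "fresh" averages would match what I'm describing here.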

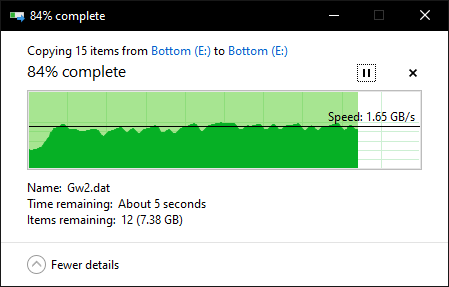

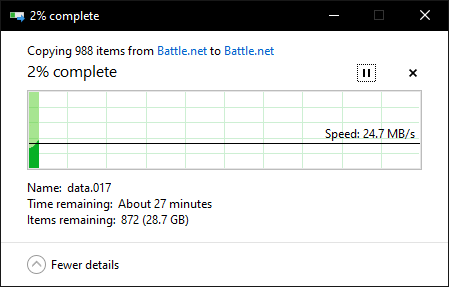

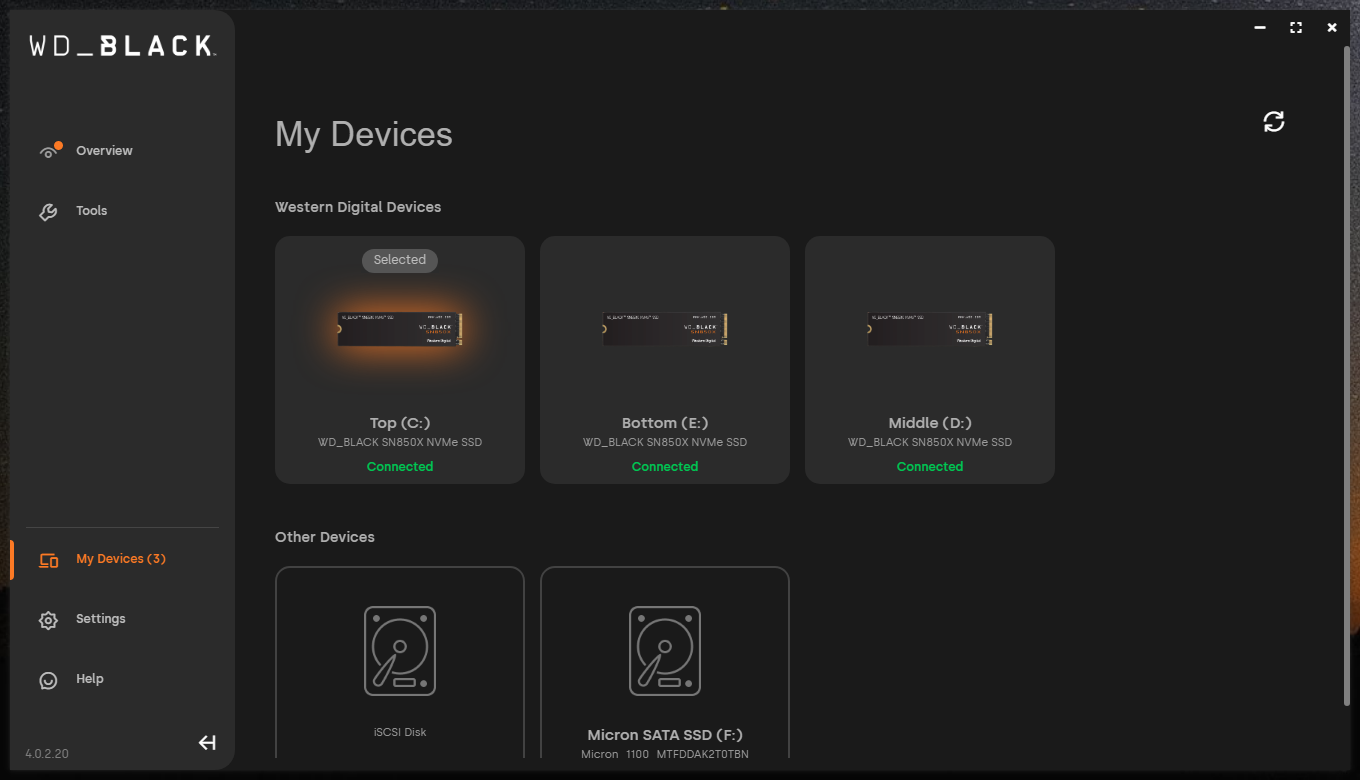

Final update: got approved for RMA with MicroCenter on all three Inland drives, using WD SN850X as replacements. Hopefully no more issues now.

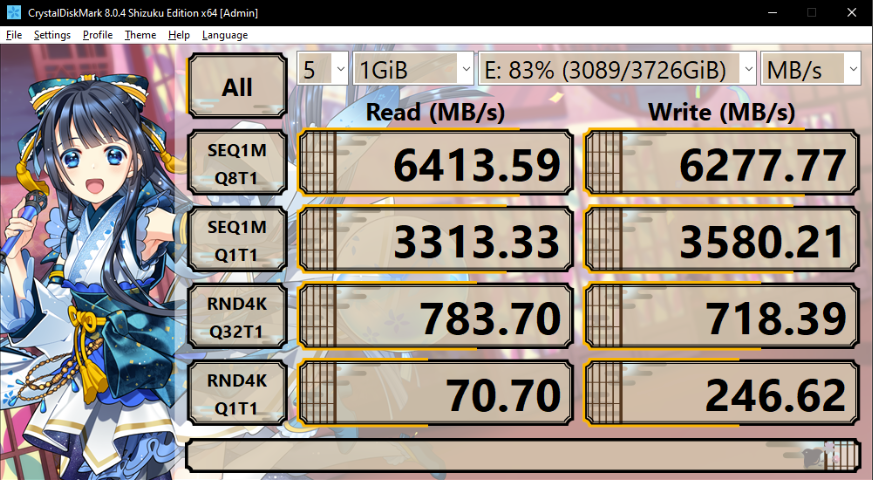

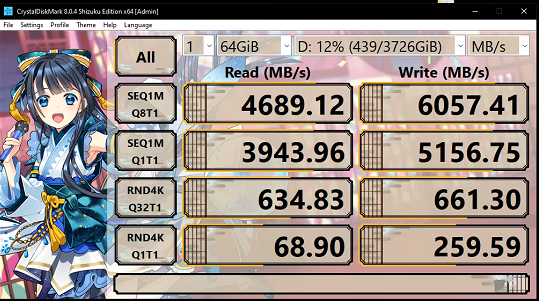

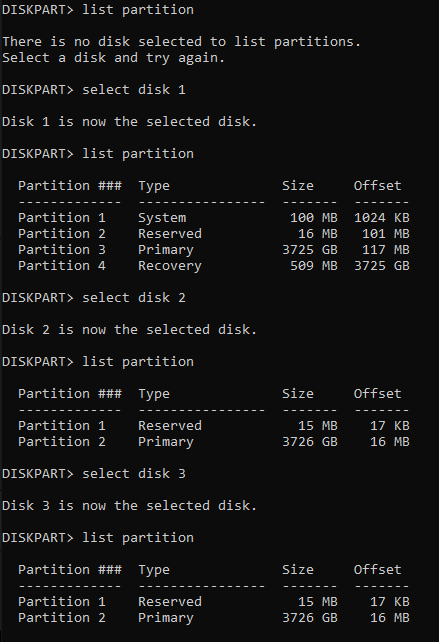

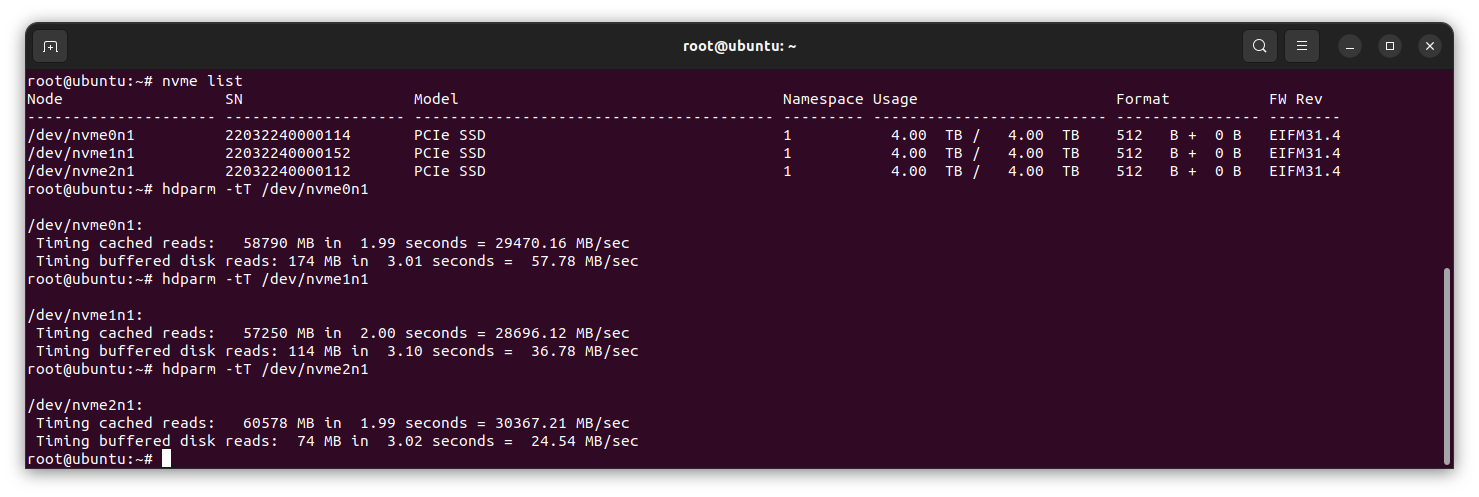

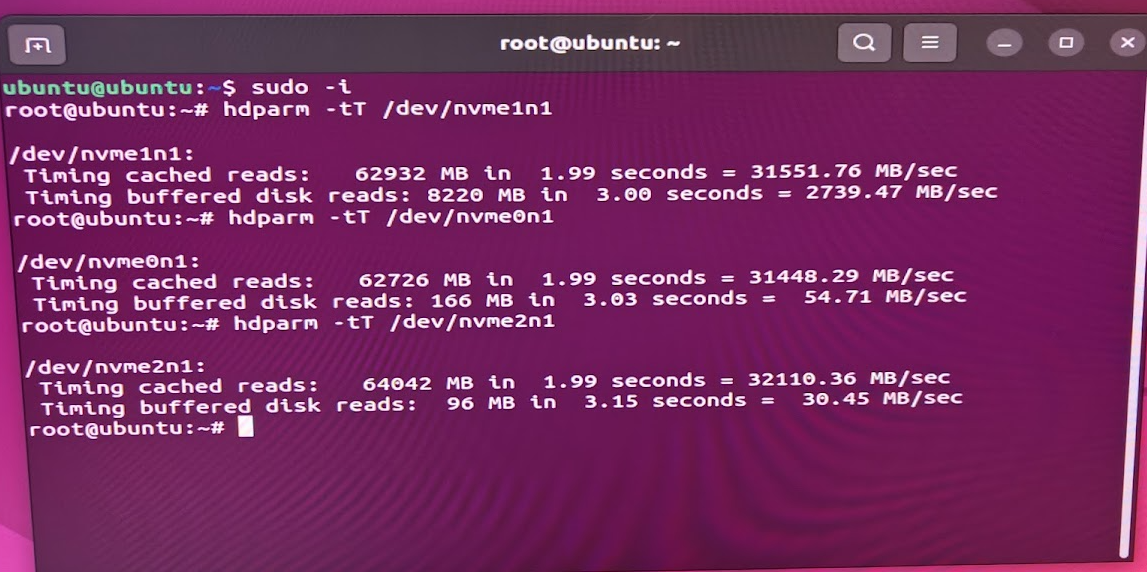

The rig in question is in my signature below, and I'm on Windows 10 Pro 22H2. The drive in question is an Inland Performance Plus 4TB NVMe (supposed to be up to 7200 MB/s read and 6800 MB/s write).

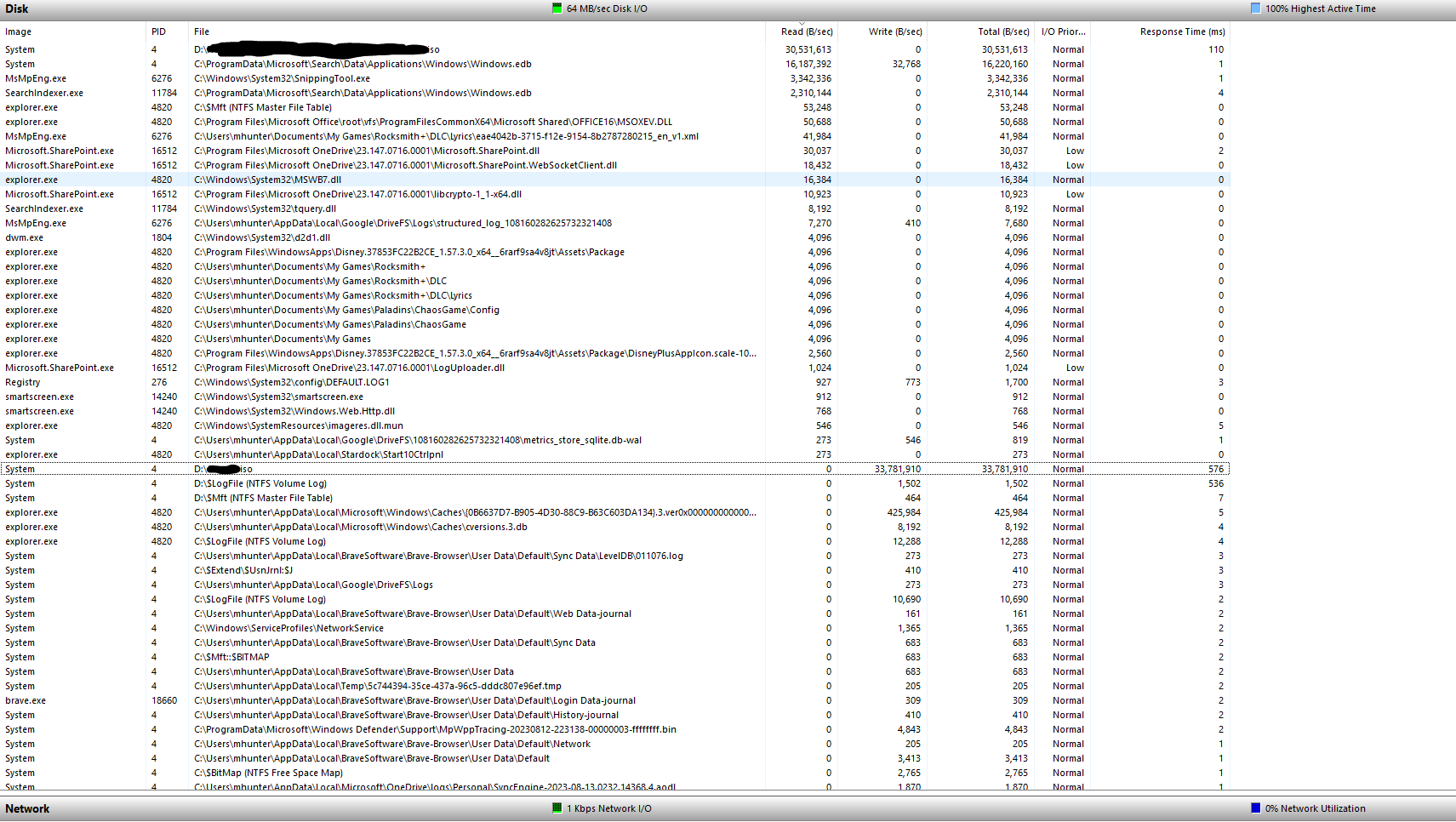

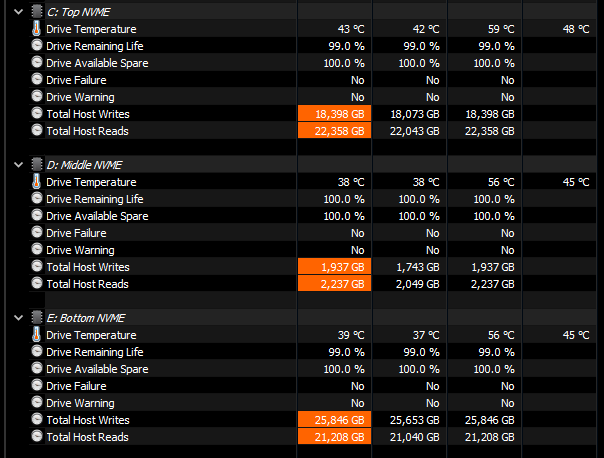

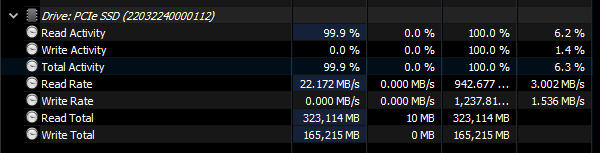

Screenshot is from HWiNFO64. I was playing Uncharted: Legacy of Thieves and some of the cutscenes were laggy and jumping around. From Googling, that usually points to slow storage, and lo and behold, that's my issue. I'm currently verifying the game files as well, and it's reading at a steady 22 MB/s. The maximum read rate it ever reached while the PC was on for a few days was 942 MB/s. I primarily use this drive just for games. It has a good bit on it, 3.01 TB used with 636 GB remaining (total capacity 3.63 TB in Windows), but is it normal to see such terrible performance at ~82.5% capacity?
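
The fill percentage is just used space divided by total. A minimal Python sketch for working it out, either from numbers read off Windows or straight from the filesystem, could look like this. The helper names are hypothetical, and the path you pass in would be whatever letter the drive has on your system.

```python
import shutil


def fill_percent(used, total):
    """Percentage of capacity in use; both arguments in the same unit."""
    return 100.0 * used / total


def drive_fill_percent(path):
    """Fill percentage of the volume containing `path`, via shutil.disk_usage."""
    usage = shutil.disk_usage(path)
    return fill_percent(usage.used, usage.total)


if __name__ == "__main__":
    # Figures from the post: 3.01 TB used, 636 GB free, in Windows' binary units
    used_tb = 3.01
    free_tb = 636 / 1024
    print(f"from the post: {fill_percent(used_tb, used_tb + free_tb):.1f}% full")
    # "." is a stand-in; use the drive's root (e.g. its drive letter) instead
    print(f"current volume: {drive_fill_percent('.'):.1f}% full")
```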

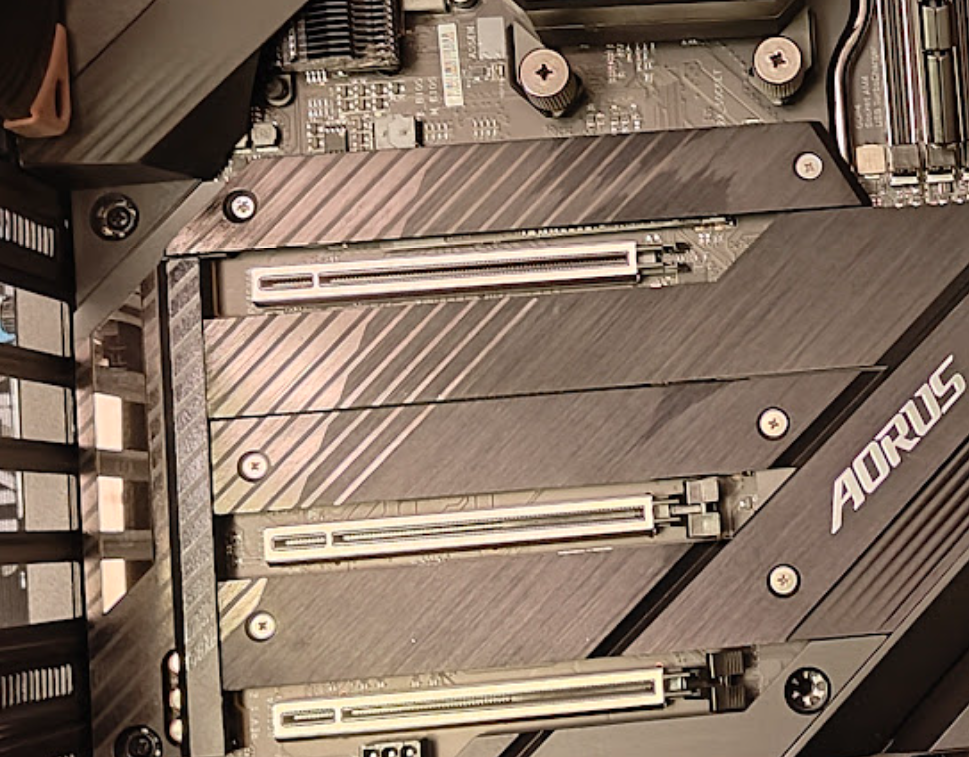

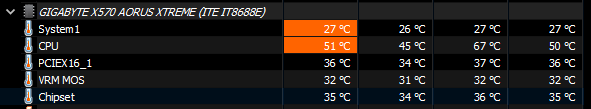

This is running off a chipset-fed PCIe 4.0 NVMe slot on the X570 Aorus Xtreme. I understand it's not going to be as fast as a CPU-provided NVMe slot, but c'mon, really? I might as well use a hard drive...

edit: I'm on AMD Chipset driver version 4.03.03.431 and the newest on AMD's website is 5.05.16.529, so I will update that and post if it changes anything.

