NattyKathy


Yep, an Intel Titan! GG Sparkle on the dubious naming ;-)

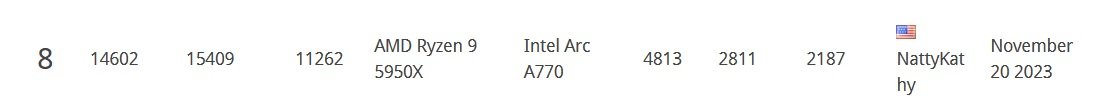

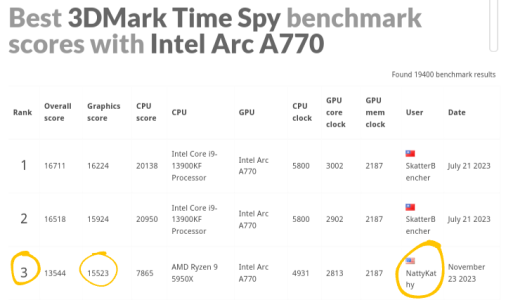

I've been wanting an A770 since before it was released and with this model bringing the 16GB version below $300 it is time to give Arc a try. Why this potato when I already have a 3090Ti and a 7900XT in my main rig plus a 3070Ti sitting unused?

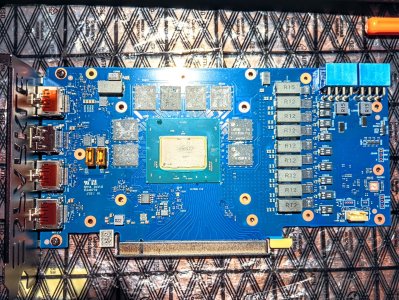

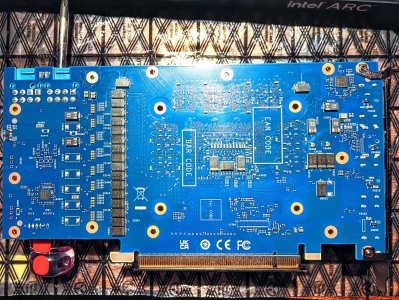

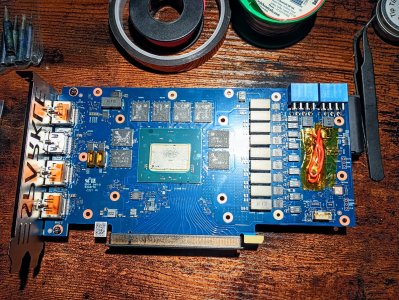

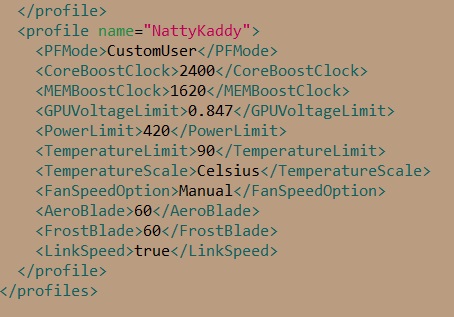

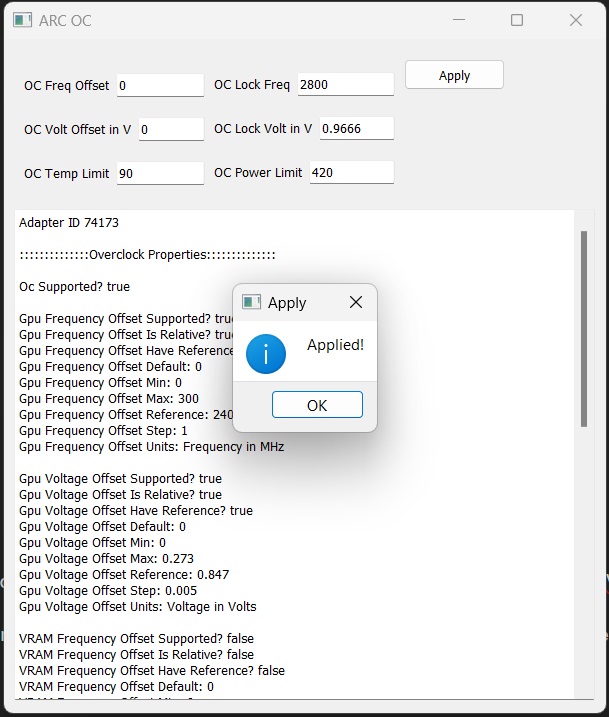

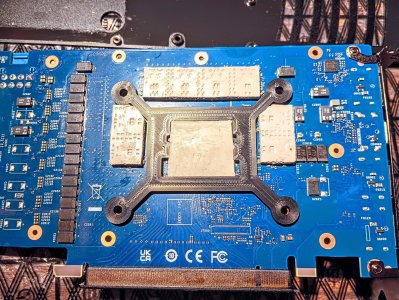

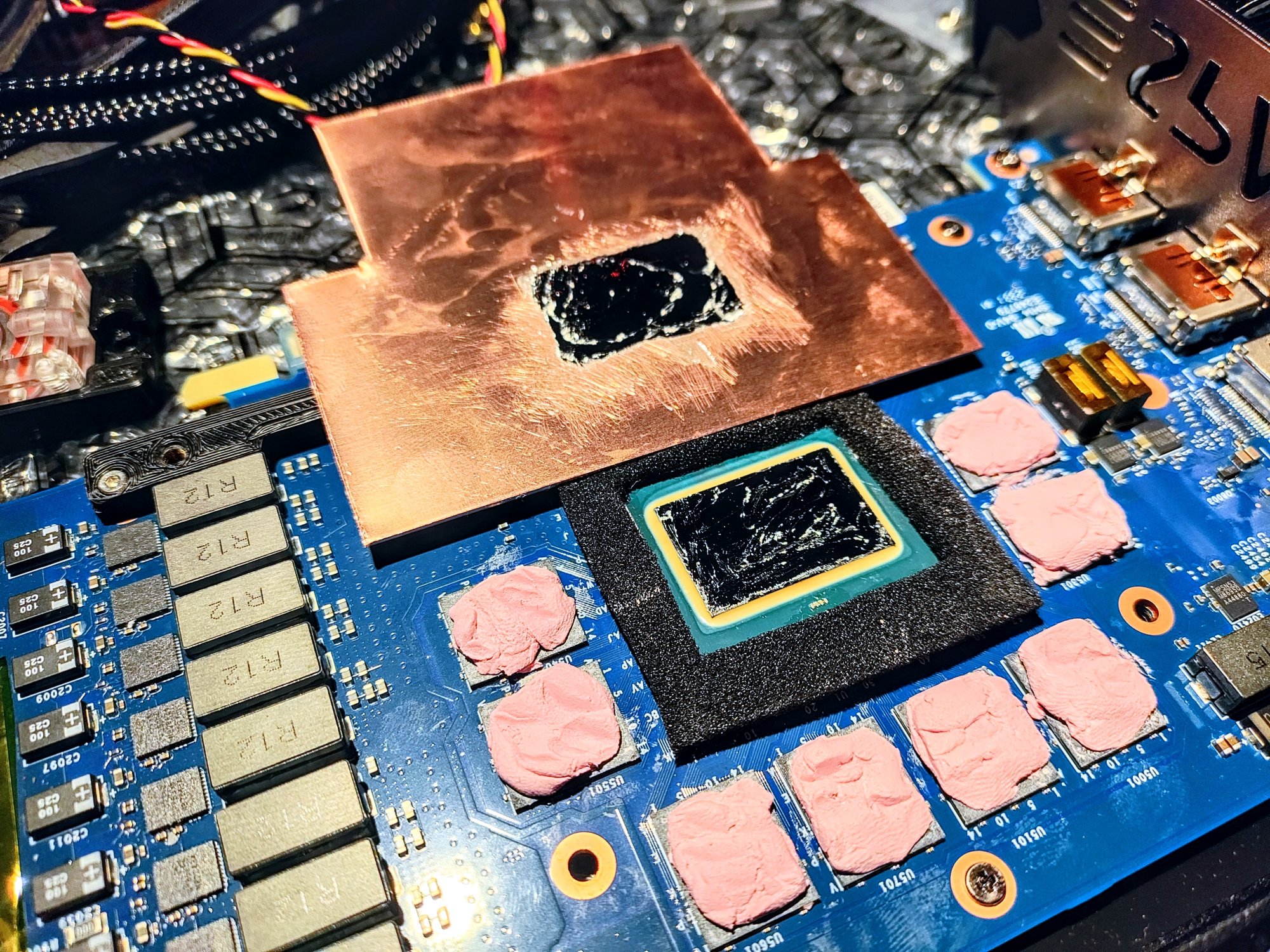

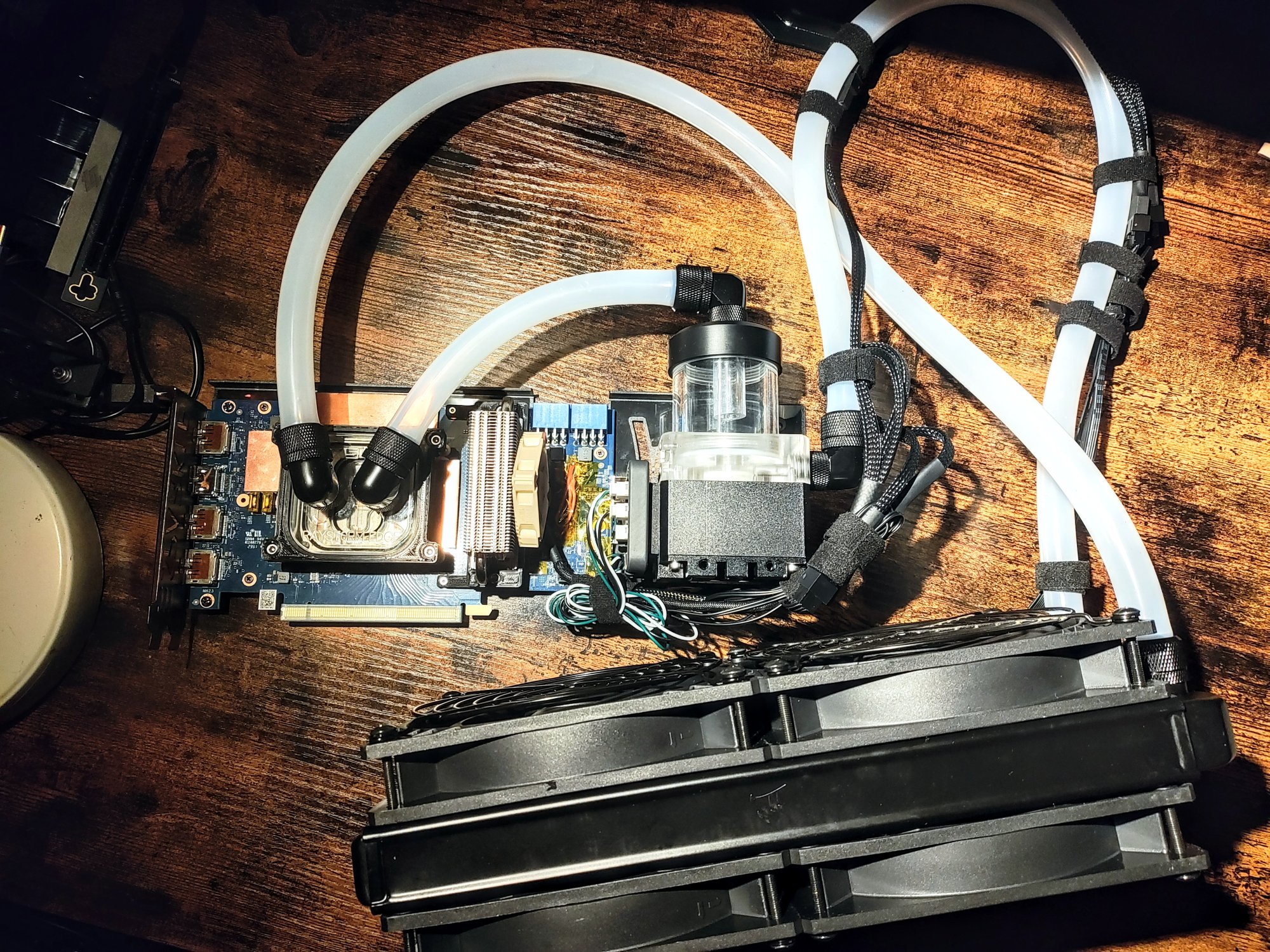

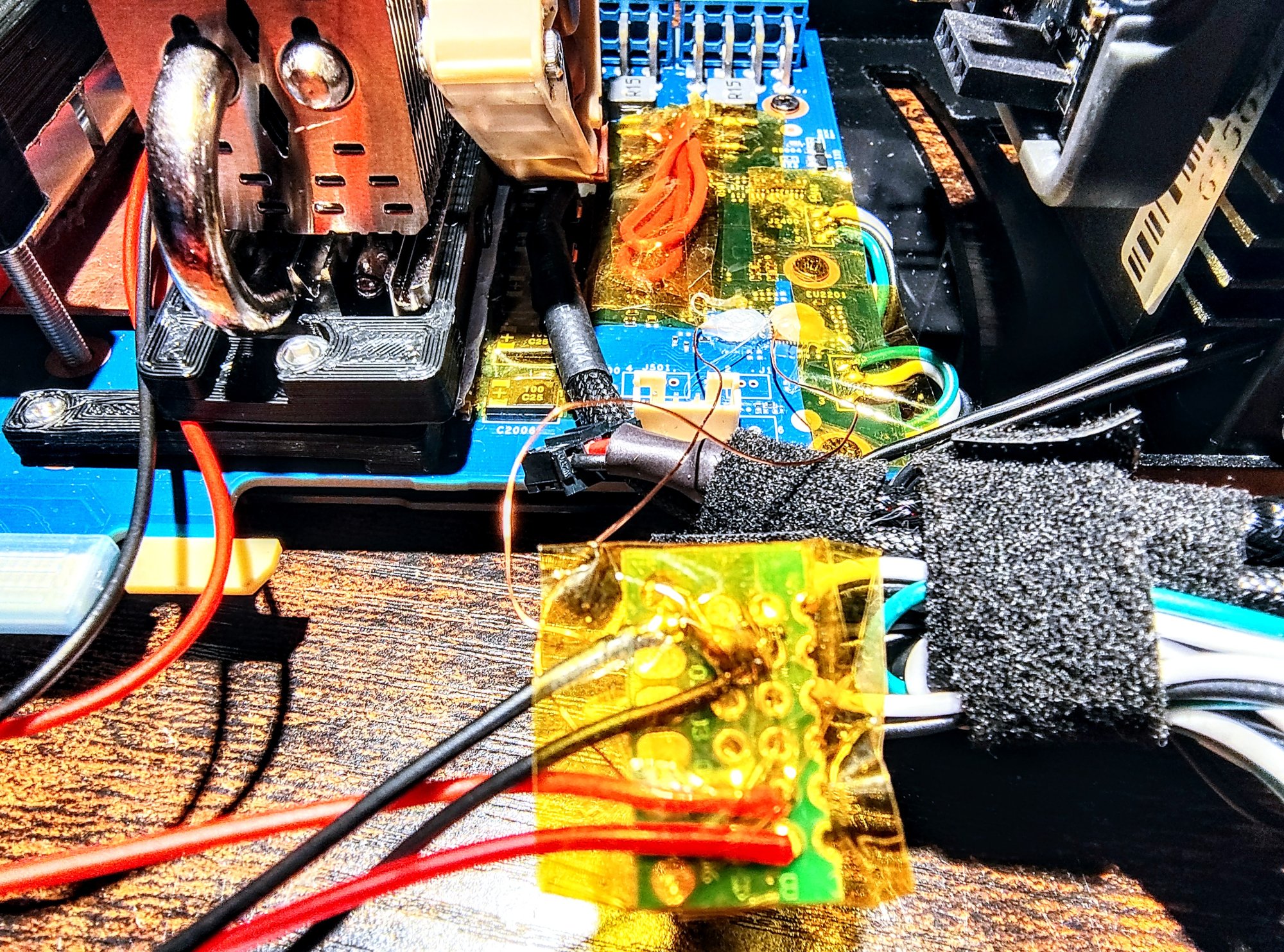

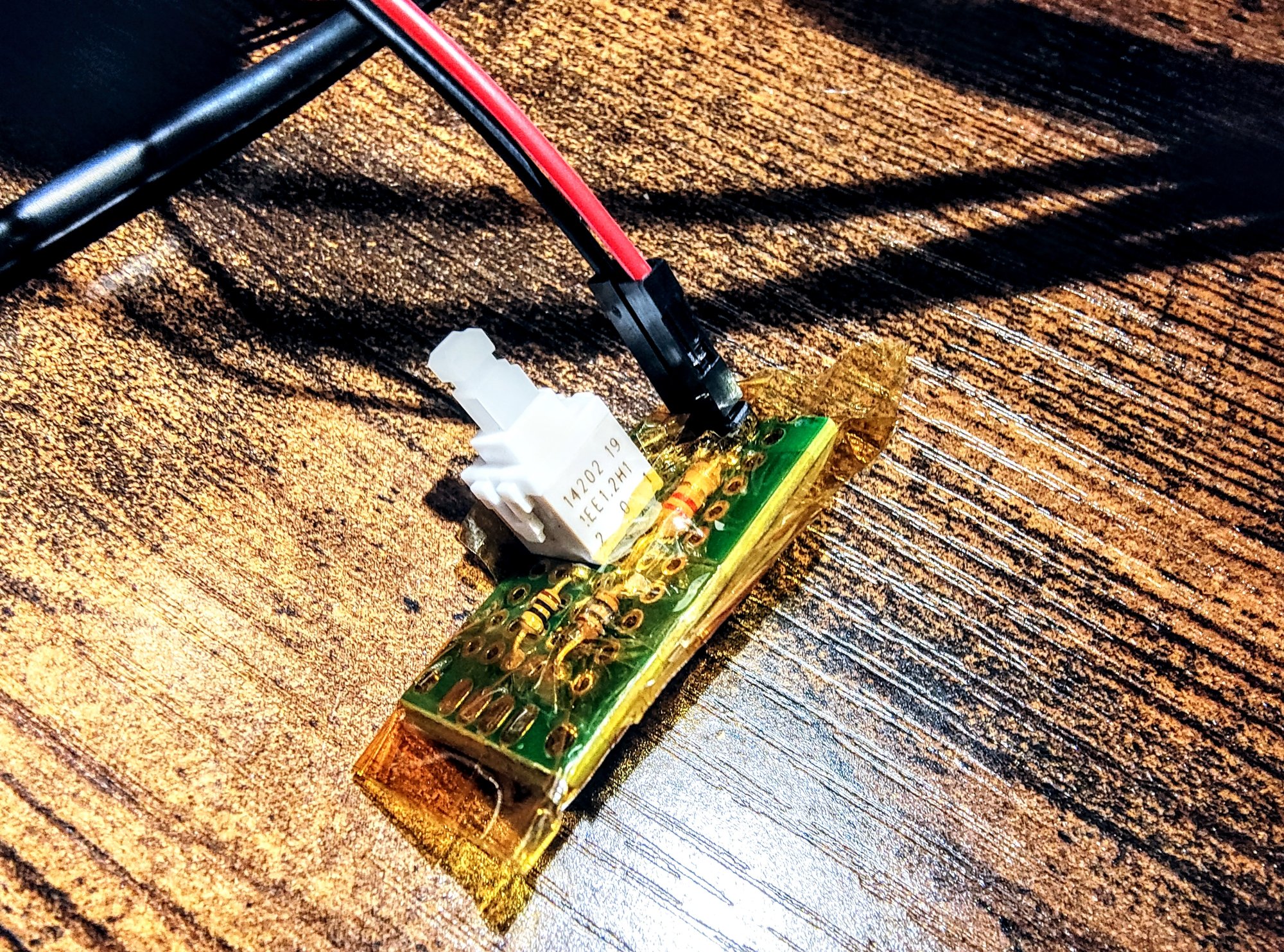

Seriously, I just like GPUs! Each different architecture is a new overclocking and optimization adventure. I have some ideas about power-limit hardmodding that I want to test, which would also apply to Radeon GPUs. And of course this thing is gonna get a DIY hybrid mod, since I apparently can't get enough of those.

Here's to silly hardware purchases! I feel like one of those Car People who always has a new project despite owning a perfectly good daily driver :-P
