> lol, pics or it didn't happen?

I also improvised a ghetto cooling solution using a piece of thick plywood and a squirrel cage fan. Works surprisingly well.

Share your pfSense / OPNsense builds

- Thread starter blackmomba

Deadjasper

2[H]4U

- Joined

- Oct 28, 2001

- Messages

- 2,584

Thank you! I couldn't picture where the plywood came in, lol. Yes, this is an awesome cooler.

Dopamin3

Gawd

- Joined

- Jul 3, 2009

- Messages

- 808

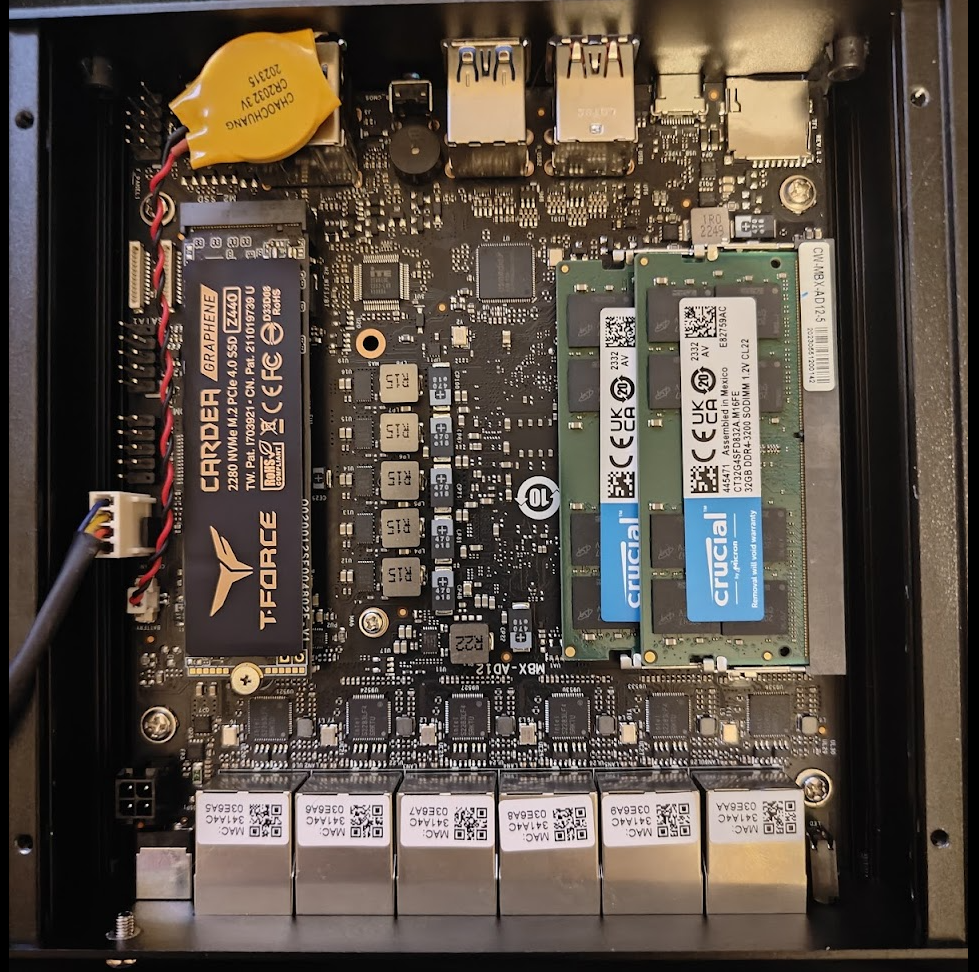

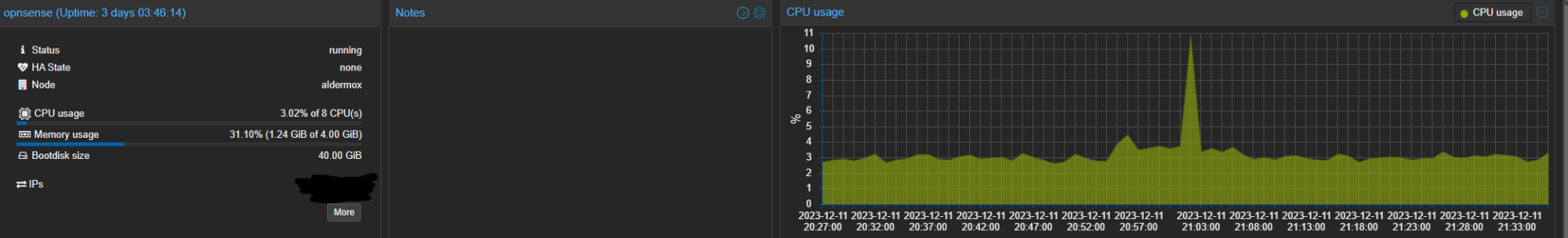

I run virtualized OPNsense on a CWWK mini PC (6x i226-V NICs) with an i5-1235U, 64GB DDR4-3200 and a 2TB TeamGroup Z440 NVMe. The host is Proxmox, and I do IOMMU passthrough of two of the NICs (WAN/LAN) to the OPNsense VM. The routing speed on this thing is insane while simultaneously running lots of other things in VMs/LXCs. You could easily do line-rate 2.5GbE (I'm stuck with Comcast at 1.3Gbps download and very mediocre upload). My core switch is a Netgear MS510TXUP: 10GbE to desktop/NAS and 2.5GbE to PoE APs.
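PCI passthrough hands whole IOMMU groups to a VM, so before copying this setup it helps to confirm that the NICs you want for WAN/LAN are not sharing a group with devices the host still needs. A minimal sketch, assuming a Linux host such as Proxmox with IOMMU enabled, which reads the kernel's standard `/sys/kernel/iommu_groups/<group>/devices/` layout; the function name is hypothetical, not a Proxmox tool.

```python
from pathlib import Path


def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> dict[int, list[str]]:
    """Map each IOMMU group number to the sorted PCI addresses it contains.

    Returns an empty dict if the directory is missing (IOMMU disabled,
    or not a Linux host).
    """
    base = Path(root)
    if not base.is_dir():
        return {}
    groups: dict[int, list[str]] = {}
    for group_dir in base.iterdir():
        devices = group_dir / "devices"
        # Group directories are named by number; skip anything unexpected.
        if group_dir.name.isdigit() and devices.is_dir():
            groups[int(group_dir.name)] = sorted(d.name for d in devices.iterdir())
    # Sort numerically so group 10 comes after group 9.
    return dict(sorted(groups.items()))


if __name__ == "__main__":
    for group, addrs in iommu_groups().items():
        print(f"IOMMU group {group}: {', '.join(addrs)}")
```

Match the printed PCI addresses against `lspci` output; a NIC that shows up alone in its group is the easy case to pass through.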

This was the first time I've virtualized my firewall, and I'm doing it from now on. Migrating my OPNsense config was as simple as exporting the existing config from the bare-metal instance and doing a find/replace on the interface names in the file. I imported the config and was up and flying. Watching ServeTheHome YouTube videos got me interested in these types of mini PCs, and they are flat out amazing for the cost and low power usage. Having 4-6 independent LAN ports you can individually pass through to VMs/LXCs is wild. I have Proxmox Backup Server set up on another physical machine, so I'm prepared if this ever goes down for whatever reason, but I think it should last.
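The find/replace step can be scripted. A minimal sketch, assuming the exported OPNsense `config.xml` refers to NICs by driver-style device names such as `igb0`; the names in the example mapping are hypothetical, so check your own old and new device names before importing.

```python
import re


def rename_interfaces(config_text: str, mapping: dict[str, str]) -> str:
    """Replace whole interface names (e.g. igb0 -> igc0) in exported config text.

    A plain str.replace("igb1", ...) would also hit "igb10". The lookarounds
    only match a name not preceded by a letter/digit and not followed by
    another digit, so suffixed names like "igb0_vlan10" are still renamed.
    """
    if not mapping:
        return config_text
    # Longest names first so a longer name wins in the alternation.
    names = sorted(mapping, key=len, reverse=True)
    pattern = re.compile(
        r"(?<![A-Za-z0-9])(" + "|".join(re.escape(n) for n in names) + r")(?![0-9])"
    )
    # One pass over the text, so swaps (igb0 <-> igb1) do not chain.
    return pattern.sub(lambda m: mapping[m.group(1)], config_text)
```

For example, `rename_interfaces(Path("config.xml").read_text(), {"igb0": "igc0", "igb1": "igc1"})` (with `from pathlib import Path`) returns the edited text to write to a new file for import. Doing every rename in a single regex pass is the design choice that keeps a swap of two names from being applied twice.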

Deadjasper

2[H]4U

- Joined

- Oct 28, 2001

- Messages

- 2,584

Excellent.

Byteus

n00b

- Joined

- Feb 13, 2024

- Messages

- 10

I rode my i7-950 Gigabyte X58 for 13 years. Surprisingly, I didn't have a real need to upgrade until the last few years, and then I just wasn't thrilled with the options until Alder Lake released, at which point I built my current system. Around that time Tomato on my Nighthawk R7000 was also aging, and while shopping for a router I found pfSense. I figured if I'm going to run this ol' beast 24x7, I'm going to get more use out of it than just pfSense, so I put Hyper-V to work and virtualized it so I could also make it a Jellyfin media server with Radarr and Sonarr, and a home for my old 2TB and 4TB HDDs as a NAS.

The X58 board has dual 1Gb NICs, and that worked fine since my ISP was only 250Mb DSL. I've recently moved to a fiber ISP with 250Mb that will get a free upgrade to 500Mbps when they make that their entry package. I've also recently upgraded my network to 2.5Gbps since it's become really cheap to do, including the LAN NIC on this X58, which was incredibly easy since the firewall is a VM. I initially tried to get an i226-V T1 card from China off Amazon to work, but either it failed to POST or Intel's latest drivers didn't work; the version released a month earlier, from station-drivers, did work, but the performance was poor. I replaced it with a Realtek 8125B, which has run fine. The X58 can easily keep up with my 250Mbps ISP and gets around 105Mbps with PIA via OpenVPN. About the only real downside is a 230 W power draw 24x7. Hopefully with Arrow Lake I'll replace the X58 with my Z690 12700K/RTX 3060 system, which should drop the draw a bit unless I add AI training to its list of jobs, which I'll likely do.
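For a sense of what a constant 230 W draw means: 230 W × 8,760 h is roughly 2,015 kWh a year. A small sketch of that arithmetic; the 0.15 per kWh price is an assumed example rate, not a figure from this thread.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 h; ignores leap years


def annual_kwh(watts: float) -> float:
    """Energy (kWh) used by a constant load running 24x7 for one year."""
    return watts * HOURS_PER_YEAR / 1000


def annual_cost(watts: float, price_per_kwh: float) -> float:
    """Yearly cost of that load at a flat electricity price."""
    return annual_kwh(watts) * price_per_kwh


if __name__ == "__main__":
    x58_watts = 230      # figure quoted in the post
    example_rate = 0.15  # ASSUMED price per kWh, for illustration only
    print(f"{annual_kwh(x58_watts):.1f} kWh/year, "
          f"about {annual_cost(x58_watts, example_rate):.2f} per year at {example_rate}/kWh")
```

Both functions are linear in watts, so consolidating two always-on boxes into one scales the yearly energy and cost down in direct proportion to the watts saved.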

Vengance_01

Supreme [H]ardness

- Joined

- Dec 23, 2001

- Messages

- 7,216

There are so many better systems, but I know most of the world pays way lower prices for power. My home lab, including networking gear, only draws 150 watts under load, and I am scheming to reduce this by another 50 watts or so.

Byteus

n00b

- Joined

- Feb 13, 2024

- Messages

- 10

Better than the X58, no doubt, but it's also about putting old hardware to use at zero cost rather than turning it into e-waste, and I have enough tasks to give it to justify the power draw. AI training is not something you can do on a mini PC, and I already run the Z690 system 24x7 doing that in the background in addition to the X58 system, so putting everything on one system will be a 50% reduction in power. AI training is 100% on the 3060 GPU with very little CPU, so it will be a nice addition, with the system tasks using the UHD 770 GPU as well.

For my 100 Mbit/s up/down internet connection, I'm running OPNsense on a fanless, single-core, 1.2 GHz VIA C7 board (Jetway J7F5M). It's got a single 1 GiB RAM stick and an 80 GB Intel DC SSD (S3500?). I bought the board in December 2007.

For the WAN side it's got a 100BASE-TX 3Com PCI (not PCIe) card. It's been amazingly stable over the years; I cannot recall a single crash. I did go through a few external brick power supplies - I think I replaced them three times and they all failed within a couple of years - before I got a MeanWell HRP-100 12 VDC supply and mounted it in an aluminium box. Oh, and the internal 12 VDC->ATX conversion board failed some years ago and needed a recapping (still running it now).

I get full 100 Mbit/s line speed through the thing. I'm not running any VPN, packet inspection or outside-facing services on it, though. But I do have some virtual VLAN devices, port forwards and such set up. The web interface isn't exactly snappy but is fully usable.

I'm thinking about replacing it with a Supermicro A1SAi-2550F board with 16 GiB of ECC RAM I have lying around, but...

Vengance_01

Supreme [H]ardness

- Joined

- Dec 23, 2001

- Messages

- 7,216

Look at one of those

Beelink S12 Pro Mini PC would be my pick for $150. It can do a lot more than just OPNsense.

Latest Alder Lake Atom-like cores for $150 with two 2.5GbE Intel NICs.

Plenty of things could replace this ancient box. Anything with two Intel gigabit Ethernet ports would be fine, something like the cheap mini PCs on Amazon for $100-150.

German Muscle

Supreme [H]ardness

- Joined

- Aug 2, 2005

- Messages

- 6,946

We switched to a different server model for our phone servers at work. We were using Dell R340/R350s but switched to PowerEdge XR11s. We had an R350 sitting in a corner that wasn't going to be used, so I asked my boss if I could have it and he said yes.

Brand new in the box R350. It has 4-hour ProSupport Mission Critical until March 2026, a Xeon E-2378 65 W CPU, 32GB DDR4, iDRAC Enterprise, redundant 600 W PSUs and two 960GB SSDs. My plan is to remove the SSDs and buy the parts to go the BOSS card route. I will also need to pick up two NICs for it to be complete, so it will be an ongoing project that I'm hoping to have done soon.

German Muscle

Supreme [H]ardness

- Joined

- Aug 2, 2005

- Messages

- 6,946

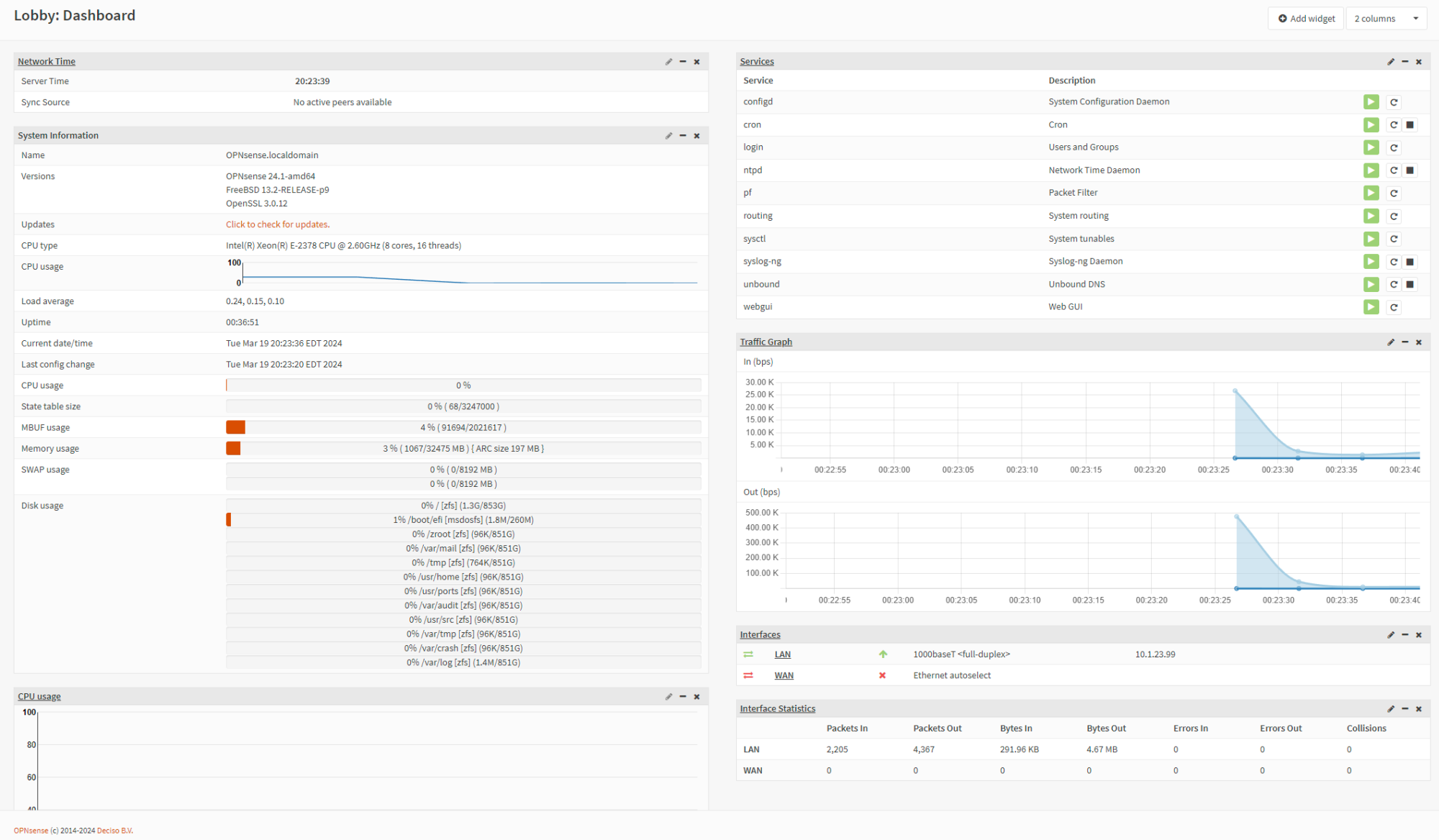

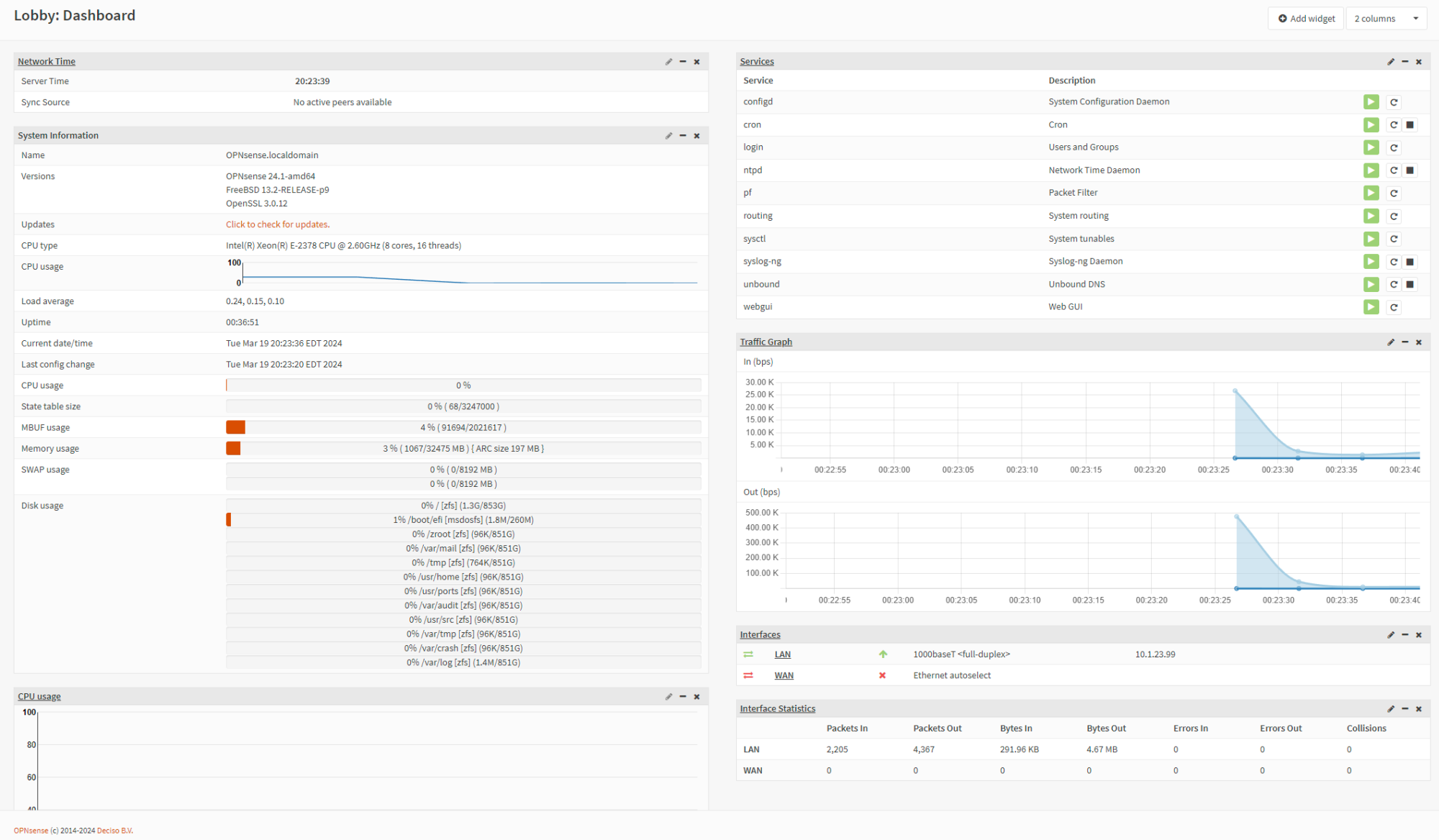

Finally got the Intel X550-T2 and Intel X710-DA2 NICs in and installed, and got some time to install OPNsense 24.1. Using a ZFS mirror on the 960GB SSDs. I just have to configure the interfaces, then I think I'm ready to upload my config. Still throwing around the idea of disabling cores.

Deadjasper

2[H]4U

- Joined

- Oct 28, 2001

- Messages

- 2,584

Sounds like overkill for an OPNsense box. What's your use case for 10G and 960GB mirrored SSDs?

German Muscle

Supreme [H]ardness

- Joined

- Aug 2, 2005

- Messages

- 6,946

> Sounds like overkill for an OPNsense box.

20G routing with NGFW features enabled. This is an effort to reduce power draw compared to my current router, a Dell R620.

> What's your use case for 10G

Because I like to go fast. I have a 48-port 10G switch, and all of my servers have 10G.

> and 960GB mirrored SSDs?

It came with them. I took them out in an effort to switch from SATA 960GB SSDs to 400GB SAS12 SSDs, but Dell used stupid star screws and it was easier to just put them back in than go get my iFixit kit and take them out. Why SSD? Higher reliability with less power draw. Why mirror? Because I ran a single SSD previously and it failed. If one dies now, I can put a new drive in and off we go with the rebuild.
