Ah yes, next year's 39" and 45" OLEDs will be interesting as a replacement for this 57" Neo.

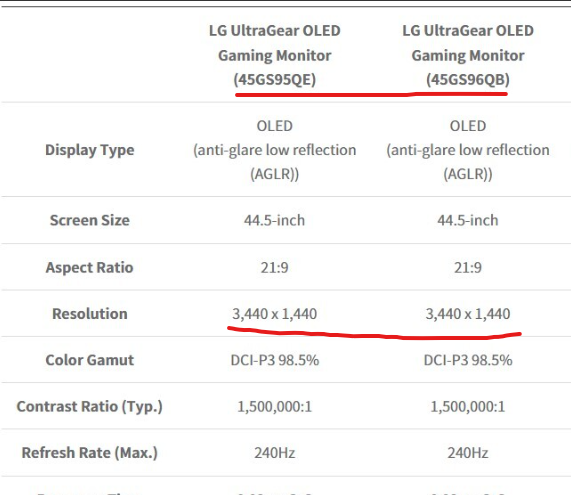

But only if they have a decent resolution. I don't want any of that disgusting 83 ppi of the current 45" OLED monitors; otherwise I would have jumped on them already.
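For anyone wondering where the 83 ppi figure comes from, here is a quick sketch of the arithmetic. The resolutions are my assumptions, not something stated above: 3440x1440 for the current 45" OLEDs and 7680x2160 for the 57" Neo. The `ppi` helper is just an illustrative name.

```python
import math

def ppi(width_px, height_px, diagonal_in):
    """Pixels per inch: diagonal length in pixels divided by diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

# Assumed panel specs (not given in the post itself)
print(round(ppi(3440, 1440, 45)))  # current 45" OLED -> 83
print(round(ppi(7680, 2160, 57)))  # 57" Neo -> 140
```

Under those assumptions, the current 45" panels land at roughly 60% of the pixel density of the 57", which is the gap a replacement would need to close.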
