RUMOR: AMD FSR3 may generate multiple frames per original frame, be enabled driverside.

- Thread starter GoldenTiger


Chief Blur Buster

Owner of BlurBusters

- Joined

- Aug 18, 2017

- Messages

- 430

> It must work well when the in-between input is only a camera movement change. But if, say, it was the jump button or the shoot button, I imagine the latency will be higher than before. And if the extra camera-movement frames went out as usual, could you end up 8 frames into the future after the jump-shot and need to "roll back" to reality? So it's a tradeoff, and an easy one to pick for VR, where ultra-high and fully constant FPS is a must. I would need to experience it on a TV or monitor to know if it is much better.

Advanced reprojection algorithms can help with all of that -- including reprojecting large objects (e.g. reprojecting geometry contained within the approximate size of enemy hitboxes). So it is also possible for enemy movements to be warped to new geometries, if the engine provides the positional data to the time-warping engine. There is some research occurring on this.

One historical problem is the latency difference between original frames and frame-generated frames. This is solved by using the two-thread workflow (the 2-thread method).

Local/remote lags can be solved via workflows other than faking a scene with triangles and textures. The problem is we have to make it as artifactless as possible, and go beyond just mouselook.

Currently FSR/XeSS/DLSS (and even version 3, to a point) is still literally Wright Brothers. It is literally the MPEG1/MPEG2 era of this tech, and we have to make it perceptually lagless and artifactless -- it will take time. MPEG1 was very artifacty: it pulsed between "real" (fully compressed) frames and "fake" (predicted) frames, and if one bit was lost in a predicted frame, the picture stayed corrupted until the next fully compressed frame arrived. Today, tech such as DLSS can sometimes become very artifacty, but this will improve.

Remember -- video compression went through a similar path of iterative improvements over years. Netflix is (essentially) 23 "fake" frames per second and 1 "real" frame per second, if you consider interpolation as "fake", because MPEG/H.26X standards use interpolation/prediction mathematics. But nowadays, we can't tell the fakeness of those frames apart. Video compression has access to the original uncompressed files, which prevents the artifacts that hurt black-box interpolation (e.g. TV interpolators). Likewise, we just have to de-blackbox the predictiveness with engine ground truth (all positionals and actions), and also de-latency it with techniques like the lagless reprojection algorithm. GPUs are still faking real life with triangles and textures, aren't they? As long as the "other method of faking" is as good, why not?

We're still very Wright Brothers in frame generation technology, but with Moore's Law being almost dead, the GPU workflow is going to have to hybridize to gain the benefits of strobeless motion blur reduction (without sacrificing the delicious UE5+ style detail levels).

Even without this, your esports game may be running at only 150fps. You'd reproject 150fps to 1000fps. The enemy/shoot lag may still be there, but you'd eliminate your mouselook latency. So, assuming reprojection is done instantly on very fast GPUs (reprojection can execute in less than 1ms), it's still less latency than strobe backlights like ULMB or DyAc. So even just line-item reductions in latency can still be a big stepping stone.

Hybrid approaches are also possible (e.g. combining reprojection and extrapolation, or drawing new graphics into reprojected frame buffers), for line-item things like gun behaviors. Lots of developers are experimenting, some with good results, some with bad results. Also, reprojection/warping techniques are being tested on temporally dense raytracing techniques too -- so warping is not exclusive to classical triangle+texture workflows.

It may be a few years before reprojection improves enough to be used by esports. The goal is year 2030.

The first desktop reprojection/warping engines probably won't do everything (shoot latencies, enemy-movement latencies) but later reprojection engines could be able to do those extras. My aspirational goal is reprojection in desktop software -- for mouselook/strafe by 2025 in a game using UE5+ detail -- and for weapon/enemy by 2030. Sadly, with Moore's Law sputtering out, we are unable to get lagless 4K 1000fps Ultra path-traced by doing full original renders from scratch -- and frame generation is here to stay.

My article also puts GPU silicon designers on notice to make the two-thread system low-penalty (cache optimizations, context switching optimizations). I do have hundreds of friends at AMD / NVIDIA / Intel, and they typically widely share my Area 51 articles internally, because I'm such a good Training Explainer to their new employees, after all!

I'm just one big gigantic advocacy domino in the Chaos Effect -- but I will execute that privilege hard -- to incubate strobeless blur reduction. All the techtubers are literally helping things along (like the LTT video etc). That got over a million views and dropped a lot of jaws already. I just need to shove things along as much as I can, as it's part of the "Blur Busters" namesake.

__________

<Thought Exercise>

Initially, for the early Wright Brothers 1000fps experiments of tomorrow: for a non-multithreaded GPU, that'd be 2000 context switches per second for 1000fps 1000Hz reprojection. A bit expensive, but not impossible for a GPU vendor to optimize for. The GPU, drivers and software need to be designed to run 2 threads without much context-switching overhead.

One could theoretically surge-execute the original frame render in 0.75ms timeslices each millisecond, doing UE5-quality. The reprojection thread would surge-execute for 0.25ms every millisecond (a very rudimentary, simple reprojection algorithm can execute in as little as 0.25ms on an RTX 4090) to generate the reprojected frame (based on the last complete rendered frame), then context-switch back to the original frame render to do a bit more work on the still-unfinished frame. Cache miss penalties may apply here if the GPU is not optimized for the 2-thread system.

Another interim solution, the two-GPU approach, also solves the context-switching overhead problem (one GPU to render, one GPU to reproject), at the cost of bus overhead. It's kind of too bad that NVIDIA discontinued SLI -- that would have been a perfect reprojection pipeline. However, researchers (or high-cost enterprise projects like commercial simulator screens) can still use the two-GPU approach over the PCIe bus instead of two threads on the same GPU. Blitting frame buffers over the bus isn't a big penalty on a PCIe 4.0 bus anymore, so you could blit 1000fps over the bus to the reprojection GPU. The bonus is that you have more reprojection compute power per frame, so you could use more compute per reprojected frame to do better AI-based parallax-reveal infilling, or spew even more frame rate (e.g. 4000fps 4000Hz) using simple reprojection, since one GPU is dedicated to reprojecting the other GPU's frames.

</Thought Exercise>
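The time-slice arithmetic in the thought exercise can be sketched as below. This is a back-of-envelope model only: the function name is hypothetical, `base_render_ms` (the uninterrupted GPU time of one original frame) is an assumed example value, and context-switch and cache-miss costs are ignored, so a real GPU would do worse.

```python
def two_thread_budget(output_hz=1000, reproject_ms=0.25, base_render_ms=7.5):
    """Time-slice budget when render and reprojection share one GPU.

    All numbers are illustrative assumptions. Context-switch and
    cache-miss overheads are NOT modeled.
    """
    slot_ms = 1000.0 / output_hz                 # one output frame interval
    render_slice_ms = slot_ms - reproject_ms     # what is left for the original render
    if render_slice_ms <= 0:
        raise ValueError("reprojection alone exceeds the output frame interval")
    slots_per_base = base_render_ms / render_slice_ms   # slices needed per original frame
    return {
        "context_switches_per_s": 2 * output_hz,  # into and out of reprojection each slot
        "render_slice_ms": render_slice_ms,
        "render_share": render_slice_ms / slot_ms,
        "base_fps": output_hz / slots_per_base,
    }

budget = two_thread_budget()
for key, value in budget.items():
    print(f"{key}: {value}")
```

With these example numbers, a frame that needs 7.5 ms of uninterrupted GPU time is spread over ten 0.75 ms slices, so the original render lands at 100fps while the output stays at 1000fps.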

Chief Blur Buster

> Isn't that effectively what motion blur does?

If you mean the GPU motion blur effect setting -- it is still extra blur relative to real life. Forced "extra" motion blur can be a problem in some contexts, such as simulating real life like VR.

But GPU blur effect does have an advantage (read on).

You're in San Francisco State University Physics according to your username "sfsu physics"?

Let me reveal my reputation; you might find it higher than expected. I am in more than 25 peer reviewed research papers (Research tab at main Blur Busters site has a lot of easy explainers) -- For the complex stuff, see my search result in Google Scholar, although there are more elsewhere.

This is a problem when emulating real life (e.g. VR headset or photorealistic rendering). Motion blur is good if wanted, but motion blur is bad if undesired. Motion blur can create headaches on large-FOV displays (sitting in front of 45" ultrawide, or wearing headset, or TV on desk).

Displays behave differently depending on the situation:

1. Stationary eye, stationary object (e.g. looking at a photo)
2. Moving eye, stationary object (e.g. tracking eyes on a flying object in front of static background)
3. Stationary eye, moving object (e.g. staring at crosshairs during a mouselook where background scrolls)
4. Moving eye, moving object (e.g. tracking moving objects on the screen)

For example, this TestUFO animation, www.testufo.com/eyetracking is a great demo of item (3) versus item (4).

Now, I wrote "Cole Notes Explainer" style articles titled "The Stroboscopic Effect of Finite Frame Rates" as well as "Blur Busters Law: The Amazing Journey To Future 1000Hz Displays" -- if you haven't seen those, you should.

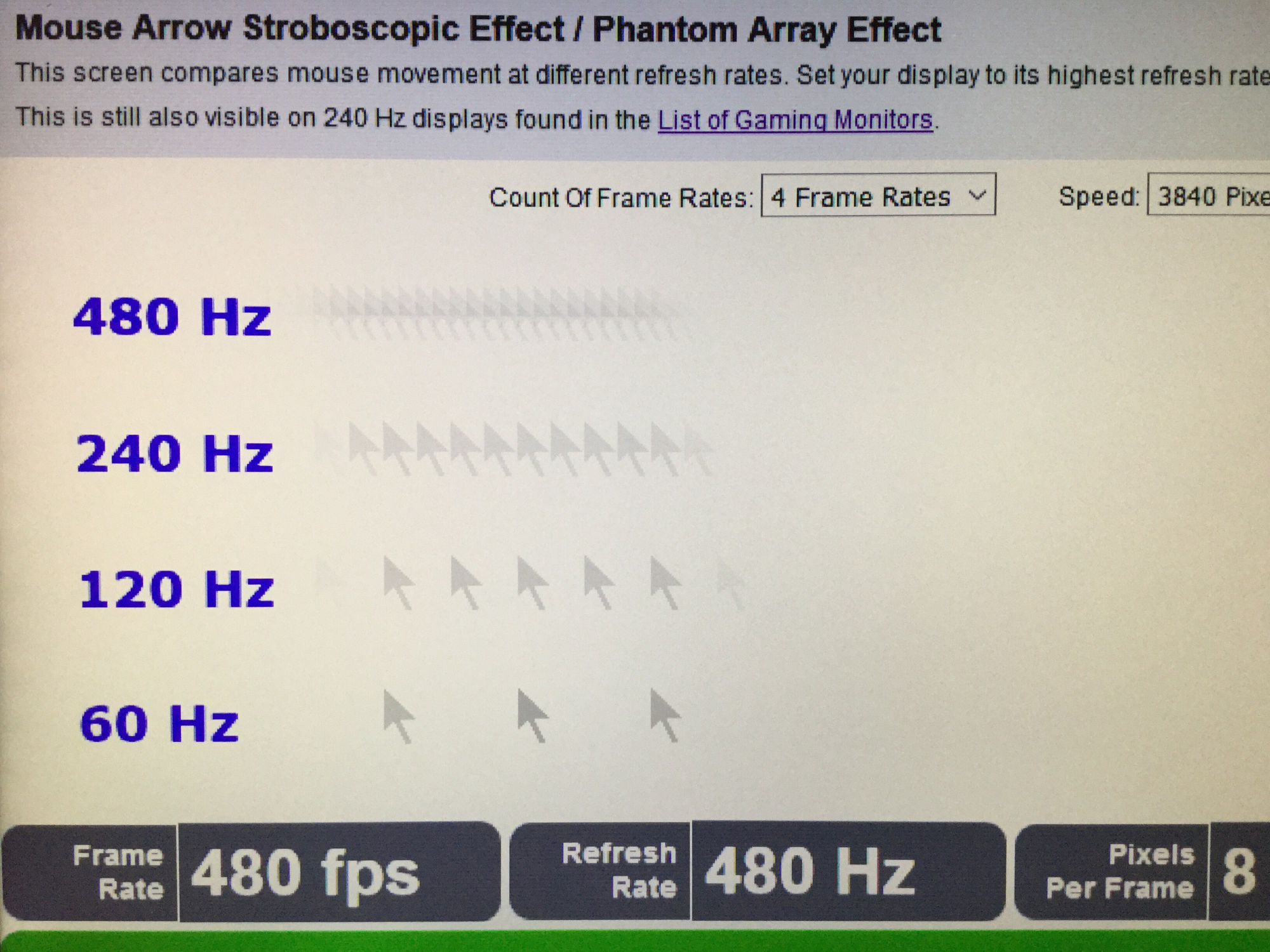

GPU blur effect can be useful to fix a lot of artifacts, especially the stroboscopic-stepping artifact in lineitem (3), as seen at www.testufo.com/mousearrow

Many artifacts (that make displays aberrate from real life) go far beyond just the flicker fusion threshold (aka ~70Hz-ish). So for a lot of the display research concepts that someone in the 2000s and 2010s was taught about humankind frame rate visibilities (the Hz of no further benefit), the figurative textbook is thrown out of the window by newer research.

(Figure 1: Artifact from stationary-eye, moving-object)

The bonus is that at higher refresh rates, you need LESS of the GPU blur effect to eliminate stroboscopics (which can be an eyestrain factor for some individuals).

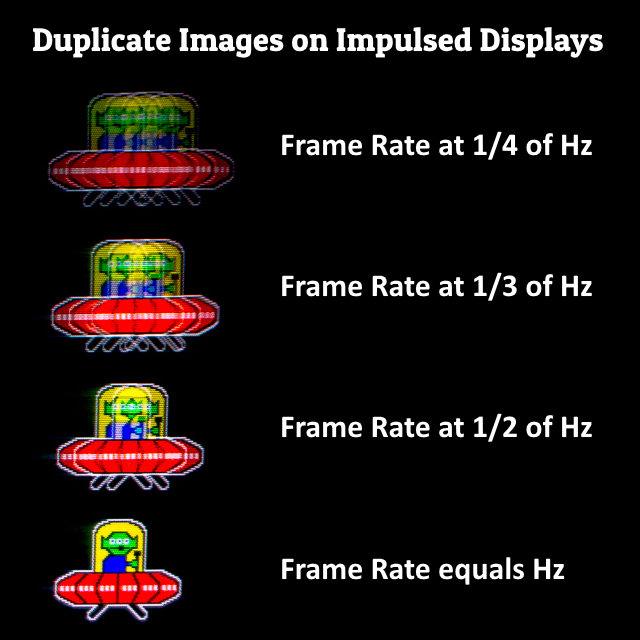

Now, if you use pulse-based motion blur reduction (BFI, ULMB, DyAc, strobing), you have this problem too:

(Figure 2: Moving-eye, moving-object on flicker displays like CRT and plasma, ala 30fps at 60Hz)

It's also why multi-pulse PWM dimming creates eyestrain, despite being high frequencies. So you can't pulse a frame multiple times, and expect to avoid artifacts. It's reproducible in software: TestUFO Software BFI Double Image Effect (adjust motion speed depending on your screen size. You may have to slow it down if viewing on a smartphone). So you can reproduce the old "CRT 30fps at 60Hz" double image effect on an LCD using software-based BFI + repeat flashing of same frame! (See animation for yourself).
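The geometry of the double-image effect can be estimated with a small sketch. It assumes smooth eye tracking at a constant speed and evenly spaced flashes; the function name and the 960 pixels/second example speed are illustrative choices, not values from the post.

```python
def repeat_flash_images(fps, flash_hz, eye_speed_px_s):
    """Duplicate images seen when each frame is flashed more than once.

    Assumes the eye tracks the moving object smoothly at eye_speed_px_s
    and that flashes are evenly spaced. The frame content is static
    between repeat flashes while the eye keeps moving, so each repeat
    flash lands on a different spot of the retina.
    """
    if flash_hz % fps != 0:
        raise ValueError("this sketch only handles a whole number of flashes per frame")
    flashes_per_frame = flash_hz // fps
    spacing_px = eye_speed_px_s / flash_hz   # retinal offset between repeat flashes
    return flashes_per_frame, spacing_px

# The classic "30fps at 60Hz" case on a flicker display.
images, spacing = repeat_flash_images(fps=30, flash_hz=60, eye_speed_px_s=960)
print(f"{images} images, {spacing} px apart")

# One flash per frame (60fps at 60Hz): no duplicate image.
single, _ = repeat_flash_images(fps=60, flash_hz=60, eye_speed_px_s=960)
print(f"{single} image")
```

The same reasoning applies to multi-pulse PWM dimming: more pulses per frame means more copies, just packed closer together.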

But how to fix all artifacts? Especially when simulating real life? No additional blur forced into your eyes above-and-beyond real life?

So you need (A) high frame rates and (B) high refresh rates, and (C) flickerless -- in order to brute-force away all the "extra blur beyond real life" and the "display does not match real life" problem of simulating reality with a display -- especially important for virtual reality but also an increasing problem with the increasingly-bigger-FOV monitors now sitting on our desks that amplify frame rate limitations and refresh rate limitations -- and creates motion blur headaches beyond a certain point.

One can still use GPU blur effect, but a lot less is needed if you're doing 1000Hz. You only need to GPU-blur-effect (360-degree camera shutter simulation) for 1ms, if you're at 1000fps 1000Hz. So you eliminate a lot of stroboscopics, while only adding +1ms of extra motion blur. So it's a fair artifact-removing tool, for people who hate stroboscopic-stepping effects (phantom arrays and wagon wheel effect), and stroboscopics can be visibly headache-inducing in a lot of real world high-contrast content such as neons / cyberpunk content if you thrash your mouse (PC) or head (VR) around a lot.

So GPU blur effect fixes that kind of headache. However, having cake and eating it too requires fixing both (blurless AND stroboscopicsless) -- and that requires brute frame rate at brute Hz to simulate analog real life for lineitems other than flicker fusion threshold. Making the GPU blur effect so tiny (due to ultra short intervals between frames) will greatly help it gain more advantages than disadvantages in reality simulation contexts. At low frame rates, GPU blur effect is like forcing an extra camera shutter (1/60sec of blur = everything is motion blurry like a 1/60sec camera shutter). You don't want that in VR.

Since we need an ergonomic flickerless, lagless & strobeless method of motion blur reduction -- that's why I am a big fan of lagless frame generation as an alternative motion blur reduction technology for situations where you don't want extra blur or stroboscopics pushed into your eyeballs (above and beyond real life) -- especially in VR or bigger-screen / wide-FOV gaming.

In a blind test, more than 90% of the human population can tell apart 240fps vs 1000fps on a 0ms-GtG display such as the Viewpixx 1440Hz DLP Vision Research projector. 240-vs-360 is a crappy 1.5x difference throttled to 1.1x due to slow LCD GtG. Even 0ms GtG can still have persistence motion blur, because stationary sample-and-hold pixels are smeared across your retinas during analog eye tracking. So briefer frames of movement + no black frames = brute ultra framerate at ultra Hz = less opportunity for display motion blur forced onto your eyeballs. It's all about geometrics on the curve of diminishing returns.

Retina refresh rate is not reached until 5 digits when maxing out (e.g. 180-degree FOV 16K retina-resolution VR), according to calculations on human angular resolving resolution, in eliminating human-visible differences between static resolution and temporal resolution (motion resolution). For fast motion speeds of 10,000 pixels/second (which takes almost a second to transit an 8K display), even 1ms frames with no black periods (aka 1000fps 1000Hz) still create 10 pixels of motion blur. Or 10-pixel stroboscopic stepping. So, with sufficient spatial resolution and enough time to track eyes over that many pixels (to identify that a display aberrates from real life), the retina refresh rate of no further humankind benefit (below human visibility noise floors) is pushed to 5 digits rather than 4 digits. There are quite a lot of variables, including resolution and large FOV amplifying the visibility of various temporal artifacts.

Now if you want to add GPU blur effect, you have to oversample the Hz by roughly 2x to compensate for the extra blur, to get the same blur as before but without stroboscopic stepping effects. Whac-A-Mole, indeed, on the "weak links" aberrating displays from real life!
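The arithmetic above can be checked with a few lines. This is a simplified sketch: it assumes 0ms GtG, no strobing, and that a 360-degree GPU blur shutter simply adds one extra frame interval of blur on top of sample-and-hold persistence. The function names are hypothetical.

```python
def frame_visible_ms(hz, gpu_blur_shutter=0.0):
    """Effective blur time per frame on a sample-and-hold display.

    gpu_blur_shutter is a fraction of the frame interval (1.0 = 360-degree
    shutter simulation). Assumption: display persistence and GPU blur
    simply add, which is a rough approximation.
    """
    return (1000.0 / hz) * (1.0 + gpu_blur_shutter)

def blur_px(speed_px_s, visible_ms):
    """Motion blur (or stroboscopic step) in pixels for a given motion speed."""
    return speed_px_s * visible_ms / 1000.0

speed = 10_000  # pixels per second, the fast-motion example from the post

plain_1000 = blur_px(speed, frame_visible_ms(1000))         # 1000fps 1000Hz, no GPU blur
blurred_1000 = blur_px(speed, frame_visible_ms(1000, 1.0))  # same, plus 360-degree GPU blur
blurred_2000 = blur_px(speed, frame_visible_ms(2000, 1.0))  # 2x the Hz, with GPU blur
transit_8k_s = 7680 / speed                                 # time to cross an 8K-wide screen

print(plain_1000, blurred_1000, blurred_2000, transit_8k_s)
```

Under these assumptions, doubling the Hz with the GPU blur effect enabled lands back on the same 10 pixels of blur, which matches the "oversample by roughly 2x" rule of thumb.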

Display physics is no laughing matter when we're getting closer and closer to mimicking immersion in a Star Trek Holodeck with big FOV displays (big screen displays on a desk, and VR displays). Not everyone can stand VR for various reasons (even if not due to vertigo), including things like blur or flicker or other attributes aberrating displays from real life.

Chief Blur Buster

I really wish we could have some of that greatness on consoles, too.

60 to 120 fps on consoles would be good enough for most people. Any chance of that happening (fsr 3, ps5 pro or whatever)?

What's realistic in near future and on next Gen in that space?

If it works on RDNA 2 cards, I would not exclude the possibility of it working on Xbox Series X and PS5 (if you mean the upcoming FSR 3).

It seems like a good platform for it. 30 fps announcements get backlash, and people and the media already let them use expressions like 4K or 1440p when the game does not render anywhere near that resolution and just upscales to it, so they could maybe get a pass to call frame-generated 60/90/120 simply 60/90/120 Hz.

Feasible at 1080p, not so much for 4K, and certainly not at a price the majority would be willing to pay.

Chief Blur Buster

We'll see.

First things first: I want to figure out how to bring together a team of people to create a kind of 4K 1000fps 1000Hz UE5 demo at some future GDC or similar convention (2024 or 2025).

I've even solved the 4K 1000Hz display problem (lab demo that can be used at a convention).

It's just waiting for the 4K 1000fps 1000Hz content, which is now solvable by the new lagless 2-thread reprojection algorithm.

Contact me off [H] if you can help me find a way (advice, existing dev skills, sponsor, etc.) with any of these:

- Creating a new Windows Indirect Display Driver

(since the 4K 1000Hz is achieved via a special Blur Busters invented "refresh rate combining" algorithm to multiple strobed projectors pointing at the same screen)

- Modifications of a UE5 type demo for the two-thread workflow. Two separate 4090s can do it for now. One doing 4K 100fps render, other doing 4K 1000fps reprojection warping.

- The cost of the multiple 4K 60Hz or 120Hz projectors

- The cost of the systems (including GPUs)

- The cost of bringing and setting up at a convention such as the Game Developers Conference (GDC) in 2024 or 2025
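For the two-GPU item in the list above, a quick back-of-envelope check of the bus traffic may help. This sketch assumes uncompressed frames at 4 bytes per pixel (color only, unless stated), and takes roughly 31.5 GB/s as the theoretical one-direction peak of PCIe 4.0 x16; real-world throughput is lower. The function name is made up for illustration.

```python
PCIE4_X16_GB_S = 31.5  # approx. theoretical peak, one direction; real throughput is lower

def framebuffer_stream_gb_s(width, height, fps, bytes_per_pixel=4):
    """Bandwidth needed to copy every frame across the bus, in GB/s."""
    return width * height * bytes_per_pixel * fps / 1e9

# Render GPU at 4K 100fps feeding the reprojection GPU (the setup listed above).
base_100 = framebuffer_stream_gb_s(3840, 2160, fps=100)
# Same, plus a 4-byte depth value per pixel (assumption: the reprojection wants depth).
base_100_depth = framebuffer_stream_gb_s(3840, 2160, fps=100, bytes_per_pixel=8)
# For comparison: shipping 1000 uncompressed 4K frames every second.
full_1000 = framebuffer_stream_gb_s(3840, 2160, fps=1000)

for label, gb_s in [("4K 100fps color", base_100),
                    ("4K 100fps color+depth", base_100_depth),
                    ("4K 1000fps color", full_1000)]:
    share = gb_s / PCIE4_X16_GB_S
    print(f"{label:24s} {gb_s:6.2f} GB/s  ({share:5.1%} of PCIe 4.0 x16 peak)")
```

Under these assumptions, only the roughly 100fps base frames need to cross the bus, which is a few GB/s and comfortable on PCIe 4.0. Sending uncompressed 4K at a full 1000fps would not fit, so the 1000fps output is best produced and scanned out on the reprojection GPU itself.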

My dream goal is to incubate interest in 4K 1000fps 1000Hz, as a show-the-world demo. Convince thousands of game developers with a true 4K 1000fps 1000Hz raytraced-enhanced demo ("RTX ON" if NVIDIA GPUs are used, but I'm open to using top-end AMD GPUs too). It may or may not happen -- but it is a dream of mine -- and I'll try to pull together some group.

VHS-vs-8K this bleep, not 720p-vs-1080p frame rate incrementalism like worthless 240Hz-vs-360Hz. Human visibility is all about the geometrics (and GtG=0ms).

The rest can come later (developer interest) after doing many semi trailers worth of micdropping.

The more game developers convinced, the more that consoles will do 4K 120fps(+) in the future. Mainstreaming 1000fps 1000Hz is almost pie-in-sky, but the first step begins with The Demo of the Decade.

(As part of my personal dream, I also definitely veer into commercial interests. Mea culpa. But y'know, under this account created August 2017, I have never posted a blurbusters com link here.)
