RTX 5xxx / RX 8xxx speculation

- Thread starter Nebell

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,672

Rumors are that the top GPU for RTX 5000 is targeting about double the performance of a 4090.

Ampere (RTX 3000) was a really good architecture held back by the Samsung 8N process. The reason we saw such an amazing uplift going from the 3000 to 4000 GPUs was mainly due to the process improvement. It allowed Nvidia to push their products much further without incurring the power penalty imposed by Samsung 8N.

Unfortunately, I doubt we will see such an uplift again with the 5000 GPUs.

I think there's still some fuel left in the tank. The 4090 got a 0% increase in memory bandwidth over the 3090 Ti, so who knows, maybe GDDR7 with a huge uplift in memory bandwidth can do a good amount of the heavy lifting for performance gains next gen. Let's not forget that Nvidia also managed to pull some decent gains going from Kepler to Maxwell, which was made on the same TSMC 28nm node. So if they could achieve what they did with Maxwell, which had no node advantage over Kepler, then I'm sure that going to 3nm they have some room to work with.
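To put a number on the "0% increase" point, here is a rough sketch of the usual peak-bandwidth arithmetic (bus width in bytes times per-pin data rate). The 384-bit / 21 Gbps figures are the published specs for both the 3090 Ti and the 4090; the 28 Gbps GDDR7 rate on the same bus is purely an assumption for illustration, not a leaked spec.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer times per-pin rate in Gbit/s."""
    return bus_width_bits / 8 * data_rate_gbps


# Published specs: both cards use a 384-bit bus with 21 Gbps GDDR6X.
rtx_3090_ti = memory_bandwidth_gbs(384, 21.0)
rtx_4090 = memory_bandwidth_gbs(384, 21.0)

# Hypothetical next-gen card: same bus width, ASSUMED 28 Gbps GDDR7.
hypothetical_gddr7 = memory_bandwidth_gbs(384, 28.0)

uplift = hypothetical_gddr7 / rtx_4090 - 1

print(f"3090 Ti: {rtx_3090_ti:.0f} GB/s, 4090: {rtx_4090:.0f} GB/s")
print(f"Assumed GDDR7 card: {hypothetical_gddr7:.0f} GB/s ({uplift:.0%} uplift)")
```

Under that assumed data rate the bandwidth gain would be about a third without touching the bus width; a wider bus or faster memory would scale it further.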

Nvidia could also gain by re-working their drivers for less CPU overhead. Especially in minimum frames.

That should be enough to play Immortals of Aveum at 4K, without need for help from any upscaling.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,577

That should be enough to play Immortals of Aveum at 4K, without need for help from any upscaling

Rumors are the last thing you want to believe. Remember how RDNA 3 was supposed to be "2x or even 3x" faster than RDNA 2? Lol. If the 5090 does end up being 2x faster than the 4090, it's only going to be in some new path tracing mode 2.0 using some new DLSS frame generation 2.0 that only the 50 series has or some crap. In a true apples to apples comparison there's no way it's going to be 2x faster. No GPU from Nvidia in the last decade or so has been 2x faster than the previous.

H100 was more than twice as fast as A100 (often 2.2-2.3x), and I imagine they will try to be around 2.2-2.6x again this time around. Not sure why they would do it at the 5090 level, at least if they release one on the gaming side; they probably could if they needed or wanted to. I doubt they will.

The 1080 Ti was not that far off the mark, and the window between releases is rumoured to be longer than usual.

TheSlySyl

2[H]4U

- Joined

- May 30, 2018

- Messages

- 2,704

The next generation of cards, especially Nvidia, are gonna be AI first and graphics as an afterthought.

I don't think the cards are gonna release till GDDR7 and the majority of rasterization performance improvement is gonna be almost entirely because of more memory bandwidth as they push DLSS for frame rate.

If they feel they can continue to make those margins on AI cards, and that there is any possibility of the gaming cards competing with their own AI products, it could be timid and not that much of a factor, especially for training.

Moreso if the lineup continues to diverge more and more. The 3090 was quite far from an A100 for AI stuff, same for the 4090 and H100. We can expect the same again, with the 5090/RTX family being a graphics / non-AI datacenter rendering-farm first type of product and ML training staying on the Hopper-next product line. Anything else would put those 1000% type of margins in jeopardy faster. They will crash down from competition, but if they can help it, not from Nvidia competing with itself, would be my guess.

Axman

VP of Extreme Liberty

- Joined

- Jul 13, 2005

- Messages

- 17,358

If they feel they can continue to make those margins on AI cards, and that there is any possibility of the gaming cards competing with their own AI products, it could be timid and not that much of a factor, especially for training.

Like I said earlier, I don't think AMD is going to be alone in skipping out on a generation of halo cards...

TheSlySyl

2[H]4U

- Joined

- May 30, 2018

- Messages

- 2,704

It's gonna be for consumer level AI. The hobby market for Stable Diffusion, LlamaGPT, AudioCraft, etc. is gonna grow exponentially. The amount of open source stuff available just on the image side of things is incredible given how short a period of time that AI has existed.

https://civitai.com/

Maybe if they can split inference from training, but I strongly doubt that most of the die on the consumer cards will be designed with AI in mind over graphics (with graphics as an afterthought).

They would have been working on this since before the hobby market explosion. Some of the bins of those GPUs go into rendering farms, and a lot of AI stuff was built to take advantage of the GPUs that already existed and were made for graphics. The two can be quite aligned when it comes to hardware requirements, whether that is memory bandwidth, parallel matrix multiplication, or floating point operations.

Axman

VP of Extreme Liberty

- Joined

- Jul 13, 2005

- Messages

- 17,358

View: https://www.youtube.com/watch?v=IPSB_BKd9Dg

MLID confirming what I suspected AMD is planning on doing (along with plenty of others). Chiefly, moving their "mid-range" up a tier in performance and dropping support for entry/mid-range graphics in favor of APUs with on-board graphics.

https://www.guru3d.com/news-story/l...-point-apu-with-16-rdna3-5-compute-units.html

Strix Point, which is probably going to be the de-facto laptop chip for damn near everything will have 16 CUs while we already know Strix Halo will have 40. 16 CUs would roughly be a 8500-series graphics, while 40 would put it at 8700-series level. Which means that discrete graphics will probably start around 8700 and go up from there since there is literally no point in producing discrete graphics that's weaker than what an APU can bring, unless they spin up some crazy low-power discrete graphics for laptops running some kind of minimum graphics APU by the side, but that seems unlikely to me.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,577

I think I am misunderstanding something because that sounds like an absolutely terrible idea. Moving the mid range up? Does that mean making an 8700XT but then saying it's actually the 8900XT in marketing material? I'm pretty confused by this. If APUs are targeting 8700 level performance with 40 CUs, then would a 60 CU dGPU, which is supposed to be the real mid range, now become the 8900XT for $1000?

Axman

VP of Extreme Liberty

- Joined

- Jul 13, 2005

- Messages

- 17,358

Moving the mid range up? Does that mean making an 8700XT but then saying it's actually the 8900XT in marketing material?

No, I'm thinking their "mid-range" cards will perform higher than what their codenames typically target.

So codename 42 and 43 will start at least at the X700 level and go up from there. What they name them, who knows, maybe they might even bump them down, who knows.

Imagine if a 600-series AMD product punches at a 70-series Nvidia level, it would be phenomenal marketing. (AMD I know you're in here, think about this seriously.)

Hawk Point: 12 CU 8300M

Strix point: 16 CU 8400M

Strix Halo: 40 CU 8600M

N43 (cut down): 40? CU 8600XT

N43 (full die): 40? CU 8700

N42 (cut down): 60? CU 8700XT

N42 (full die): 60? CU 8800

N41 (cut down): ?? CU 8800XT

N41 (full die(s)): ?? CU 8900

Golden samples: ?? CU 8900XT (XTX for big RAM edition)

I know that's not how the naming scheme works now, but if you drop everything one tier, assuming you can fill out the stack, and price things competitively, your lower tier name will take out a higher tier Nvidia card.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,577

N41 and N42 are the completely cancelled configurations, no? So by extension there shouldn't be an 8800 or higher class GPU at all next gen. If N43 really maxes out at 40 CU then it really is just a mid range 8700(XT). There would be no need to do any shuffling around or renaming or whatever, just release it as the 8700 or 8700 XT for ~$450 and call it a day.

Axman

VP of Extreme Liberty

- Joined

- Jul 13, 2005

- Messages

- 17,358

Yeah, sorry, brain fart, I was thinking about RDNA 5 and using RDNA 4 terms.

Edit: Yep, nope, the naming thing won't work, I don't think, they don't have the stack for it. Maybe for RDNA 5, but not 4.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,577

The other thing that's confusing me is this APU that supposedly will have 40 CUs. If such a thing exists, why even make a mid range 8700XT at all if it's going to max out at 40 CUs as well? How much faster would 40 CUs in a dGPU with its own GDDR7 and higher TDP limits be vs. 40 CUs found in an APU? And such an APU surely cannot be cheap, which wouldn't appeal to its budget constrained target audience.

Axman

VP of Extreme Liberty

- Joined

- Jul 13, 2005

- Messages

- 17,358

I picked 40 because it's at the low end of the number of CUs they'd have to have for a discrete graphics card to make sense. Maybe with a few fewer they could hit higher clocks, and with V-Cache and on-board RAM they can match or beat Strix Halo.

Strix Halo has been confirmed, something would have to have gone very wrong if the 40 CU APU doesn't go into production. AMD has said they don't have any plans to make low-power discrete GPUs with this gen going forward.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,577

OK, I just googled what this Strix Halo thing actually is. It's an APU that's made only for LAPTOPS, as there's no way you can possibly fit all that within a typical CPU-sized die made for desktop. Desktop APUs wouldn't max out anywhere near 40 CUs, so there's still room for a dGPU with up to 40 CUs. I would imagine the weakest dGPU they make next gen starts at 28 CUs and goes up to 40 CUs; anything below 28 CUs they will just not bother with and leave to APUs, like you said. With this info, the RX 8000 lineup seems pretty easy to estimate now:

RX 8600 - 28 CU/ 192bit 12GB

RX 8600 XT - 32 CU/ 192bit 12GB

RX 8700 - 36 CU/ 256bit 16GB

RX 8700 XT - 40 CU/ 256bit 16GB
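The bus width and VRAM pairings in that guessed lineup are at least internally consistent. As a rough sketch: each GDDR memory chip sits on a 32-bit slice of the bus, so the chip count follows from the bus width, and capacity follows from chip density. The 2GB (16Gbit) chip density below is an assumption chosen because it reproduces the 12GB and 16GB figures; `vram_gb` is just an illustrative helper name.

```python
def vram_gb(bus_width_bits: int, chip_gb: int = 2, clamshell: bool = False) -> int:
    """VRAM capacity implied by a bus width, assuming one chip per 32-bit channel.

    chip_gb is the ASSUMED per-chip density; clamshell mode puts two chips
    on each 32-bit channel, doubling capacity without widening the bus.
    """
    chips = bus_width_bits // 32
    if clamshell:
        chips *= 2
    return chips * chip_gb


# Speculative lineup from the post above: (name, bus width in bits).
lineup = [("RX 8600", 192), ("RX 8600 XT", 192), ("RX 8700", 256), ("RX 8700 XT", 256)]

for name, bus in lineup:
    print(f"{name}: {bus}-bit -> {vram_gb(bus)} GB with 2GB chips")
```

With 2GB chips, 192-bit gives 6 chips (12GB) and 256-bit gives 8 chips (16GB), matching the list.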

Axman

VP of Extreme Liberty

- Joined

- Jul 13, 2005

- Messages

- 17,358

I think it'll be 36/40 and 56/60, and I fully expect desktop APUs to go up to 40 CUs as well, if not higher, given Granite Ridge's reported 170W upper limit.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,577

60 CUs would be pretty good. If AMD can bring roughly 7900 XT performance down to ~$450 with a 60 CU 8700 XT then I think that would make a lot of people happy enough. What they also need to do though is greatly improve the RT performance with RDNA 4.

AMD's initial plan for N43/N44 is 64/32 CUs.

That makes N43 a monolithic N32 (on an advanced node?) and N44 an N33 on an advanced node.

I expect N43 to have 2 cards:

($500) 8700 XT = 4070 Ti

($400) 8700 = 4070 / 7800 XT

($300-$330) 8600 XT = 4060 Ti / 7700 XT

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,577

AMD's initial plan for N43/N44 is 64/32

That makes

n43 as a monolithic n32 (on an advanced node?)

&n44 as a n33 on an advanced node

I expect n43 to have 2 cards

($500) 8700xt = 4070 ti

($400) 8700 = 4070 / 7800xt

N33 could be

($300-$330) 8600xt = 4060 ti / 7700xt

Where did you hear that it's going to be 64 CU for N43?

MLID sources.

Samsung and Intel will be a half node or less behind TSMC in '25, so I can see another play like the 3080 at $699 (sorta): getting a discount on the wafers but sacrificing a little top-end performance for some parts, while others use TSMC 3nm. People are probably thinking hard right now about wafer reservations and allocations. That AI play may take up all the 3nm TSMC wafers, leaving consumer GPUs on lesser processes.

Eventually Nvidia will need to release something new at $399-$599, whatever they call it (5060/Ti?), that may make a good upgrade in that price segment.

I don't think, they don't have the stack for it. Maybe for RDNA 5, but not 4.

The irony of AMD choosing a chiplet strategy to reduce prices, yet it is now precisely the chiplet packaging tech that is constrained (at least till the end of 2024) due to the "unexpected" AI & compute boom.

Shortages of a key chip packaging technology are constraining the supply of some processors, Taiwan Semiconductor Manufacturing Co. Ltd. chair Mark Liu has revealed.

Liu made the remarks during a Wednesday interview with Nikkei Asia on the sidelines of SEMICON Taiwan, a chip industry event. The executive said that the supply shortage will likely take 18 months to resolve.

Historically, processors were implemented as a single piece of silicon. Today, many of the most advanced chips on the market comprise not one but multiple semiconductor dies that are manufactured separately and linked together later. One of the technologies most commonly used to link dies together is known as CoWoS.

https://siliconangle.com/2023/09/08/tsmc-says-chip-packaging-shortage-constraining-processor-supply/

TSMC reportedly intends to expand its CoWoS capacity from 8,000 wafers per month today to 11,000 wafers per month by the end of the year, and then to around 20,000 by the end of 2024.

TSMC currently has the capacity to process roughly 8,000 CoWoS wafers every month. Between them, Nvidia and AMD utilize about 70% to 80% of this capacity, making them the dominant users of this technology. Following them, Broadcom emerges as the third largest user, accounting for about 10% of the available CoWoS wafer processing capacity. The remaining capacity is distributed between 20 other fabless chip designers.

Nvidia uses CoWoS for its highly successful A100, A30, A800, H100, and H800 compute GPUs.

AMD's Instinct MI100, Instinct MI200/MI200/MI250X, and the upcoming Instinct MI300 also use CoWoS.

https://www.tomshardware.com/news/amd-and-nvidia-gpus-consume-lions-share-of-tsmc-cowos-capacity

Taiwan Semiconductor Manufacturing Co. Chairman Mark Liu said the squeeze on AI chip supplies is "temporary" and could be alleviated by the end of 2024.

https://asia.nikkei.com/Business/Te...-AI-chip-output-constraints-lasting-1.5-years

Liu revealed that demand for CoWoS surged unexpectedly earlier this year, tripling year-over-year and leading to the current supply constraints. The company expects its CoWoS capacity to double by the end of 2024.

https://ca.investing.com/news/stock...-amid-cowos-capacity-constraints-93CH-3101943
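For a sense of scale, the CoWoS figures quoted above can be turned into rough wafer counts. This is only back-of-envelope arithmetic on the numbers in the quoted articles (8,000 wafers per month today, 70% to 80% to Nvidia and AMD, about 10% to Broadcom, the rest split between 20 designers); the even split among those 20 is an assumption made for illustration.

```python
# Monthly CoWoS wafer capacity as quoted from the Tom's Hardware piece.
capacity_now = 8_000
capacity_end_2023 = 11_000
capacity_end_2024 = 20_000


def pct_of(capacity: int, percent: int) -> int:
    """Integer wafer count for a whole-number percentage of capacity."""
    return capacity * percent // 100


nvidia_amd_low = pct_of(capacity_now, 70)
nvidia_amd_high = pct_of(capacity_now, 80)
broadcom = pct_of(capacity_now, 10)

# Whatever Nvidia, AMD and Broadcom do not use is shared by 20 other designers.
others_low = capacity_now - nvidia_amd_high - broadcom
others_high = capacity_now - nvidia_amd_low - broadcom

# ASSUMPTION: an even split among the 20, just to get an order of magnitude.
per_designer_low = others_low / 20
per_designer_high = others_high / 20

growth_by_end_2024 = capacity_end_2024 / capacity_now

print(f"Nvidia + AMD: {nvidia_amd_low}-{nvidia_amd_high} wafers/month")
print(f"Broadcom: ~{broadcom} wafers/month")
print(f"Each of 20 others: ~{per_designer_low:.0f}-{per_designer_high:.0f} wafers/month")
print(f"Planned capacity growth by end of 2024: {growth_by_end_2024}x")
```

Note that the 8,000 to 20,000 figure works out to 2.5x, a bit more than the "double" in the investing.com summary of Liu's remarks; the two quoted sources simply differ.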

Gatecrasher3000

Gawd

- Joined

- Mar 18, 2013

- Messages

- 580

All I want to know is: with LG rumored to release a 240Hz version of their OLED TVs next year, will the 5000/8000 series cards be enough to push 240fps at 4K?

My guess

With upscaling - yes.

Straight raster - errr maybe...

Depends on the game and settings. The answer is certainly yes in some cases and absolutely no in others, so I am not sure what those blanket statements would mean.

It will not run Immortals of Aveum, Starfield, Jedi Survivor, and a list that keeps getting longer at 240fps even at 720p. Maybe 120fps at native 4K for something like Jedi, and 70fps for an Unreal Engine 5 title going all in, with current CPUs at least.

A lot of titles will probably need frame generation to get around the CPU bottleneck we see, but maybe by then an 8800X3D / Arrow Lake and software advancements will have changed things.
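To make the 240fps target concrete, here is a small sketch of the frame-time budget and of how much frame generation relaxes it. It assumes frame generation inserts one generated frame per rendered frame (the way current DLSS 3 frame generation works); the function names are just illustrative.

```python
def frame_time_ms(fps: float) -> float:
    """Time budget per displayed frame, in milliseconds."""
    return 1000.0 / fps


def rendered_fps_needed(target_fps: float, generated_per_rendered: int = 1) -> float:
    """Rendered (CPU-driven) frame rate needed to display target_fps.

    ASSUMPTION: each rendered frame is followed by `generated_per_rendered`
    interpolated frames that do not cost CPU simulation time.
    """
    return target_fps / (1 + generated_per_rendered)


target = 240
print(f"{target} fps leaves {frame_time_ms(target):.2f} ms per frame")
print(f"With 1 generated frame per rendered frame, the game must render "
      f"{rendered_fps_needed(target):.0f} fps")
```

So a title that is CPU-limited to around 120fps could in principle fill a 240Hz panel with frame generation, while one stuck at 70fps could not.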

MelonSplitter

[H]ard|Gawd

- Joined

- Aug 6, 2006

- Messages

- 1,088

Okay son, we've heard that before.

Fuck no. I'll get a console at that point.

It is becoming increasingly accepted in the mainstream that AMD will go missing at the high end in 2024 (& most of 2025 too)

Nvidia also might prioritize 3nm wafers to AI cards.

What does it mean for pricing (& launch dates) of top-end Blackwell cards?

https://www.techradar.com/pro/gpu-p...prioritize-ai-what-could-that-mean-for-gamers

When AMD launches its RDNA 4 family of GPUs, possibly next year, there won’t be an AMD Radeon RX 8800 or 8900, according to TechSpot. This will give its rival Nvidia a clear run at manufacturing the best GPUs to meet the high-end gaming market, but could also serve to constrain supply and spike prices.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,577

Just like how it was once accepted in the mainstream that RDNA 3 was gonna be AMD's "Zen 3 moment" and beat Nvidia with something that's 2x or even 3x better than RDNA 2? Rumors are still nothing more than that, rumors. Even the more reasonable "leaks" from MLID ended up being wrong, and he was doubling down on them right up until the last minute before the launch of RDNA 3.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,384

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,577

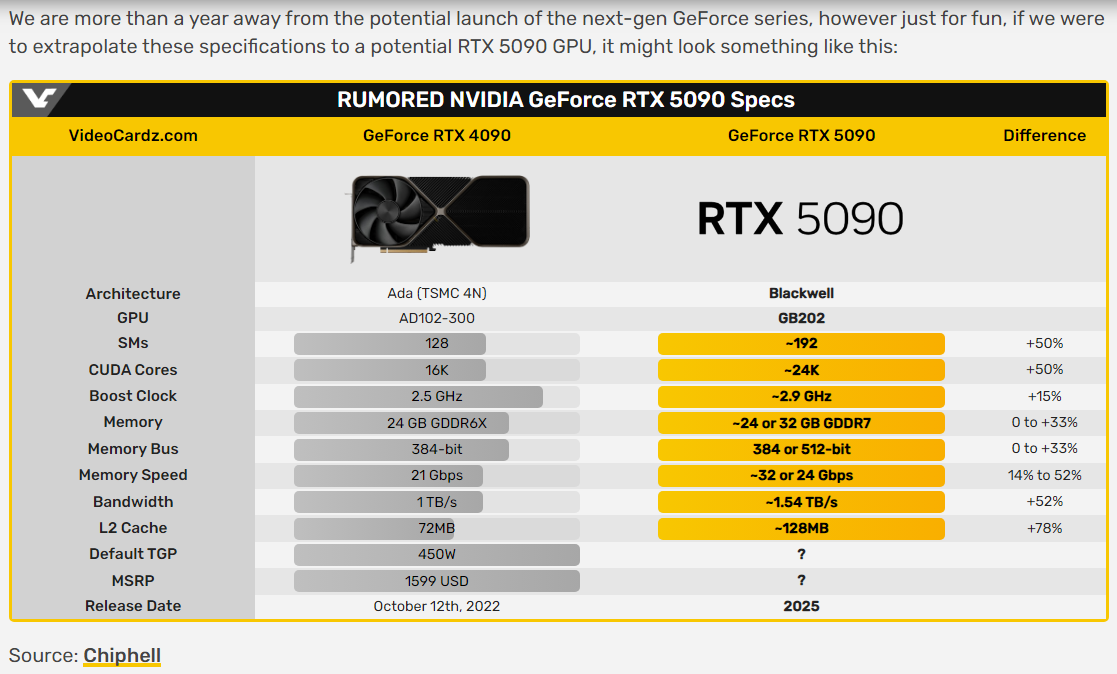

For rumors this looks pretty reasonable at least. Perhaps we can get 50%+ performance uplift over a 4090?

ChronoDetector

2[H]4U

- Joined

- Apr 1, 2008

- Messages

- 2,783

If the 5090 still has 24GB of VRAM, that would be rather disappointing. 48GB would be nice but would drive up the cost of the card. Already I can see some games using close to 20GB of VRAM, and in 2025 I don't think 24GB of VRAM will cut it at higher resolutions. I don't really see NVIDIA using a 512 bit memory bus either, due to its high cost. If they want a 32GB configuration, a 256 bit memory bus would be suitable, but then again a 256 bit memory bus would most likely hold the card back at higher resolutions.
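The capacity options in that post follow from how memory chips map onto the bus. As a hedged sketch: with one chip per 32-bit channel (two in clamshell mode), only certain bus width and chip density combinations land on a given capacity. The 2GB and 4GB chip densities searched here are assumptions for illustration; whether 4GB chips would actually be available for such a card is not something the thread establishes.

```python
from itertools import product

CHIP_INTERFACE_BITS = 32  # one GDDR chip per 32-bit channel


def configs_for_capacity(target_gb, bus_widths=(256, 384, 512), chip_sizes_gb=(2, 4)):
    """List (bus_width_bits, chip_gb, clamshell) combinations that give target_gb.

    chip_sizes_gb are ASSUMED densities; clamshell doubles the chip count
    on the same bus width.
    """
    matches = []
    for bus, chip_gb, clamshell in product(bus_widths, chip_sizes_gb, (False, True)):
        chips = bus // CHIP_INTERFACE_BITS * (2 if clamshell else 1)
        if chips * chip_gb == target_gb:
            matches.append((bus, chip_gb, clamshell))
    return matches


for capacity in (24, 32, 48):
    print(capacity, "GB:", configs_for_capacity(capacity))
```

Under these assumptions 32GB is reachable on a 512-bit bus with 2GB chips, or on a 256-bit bus with either 4GB chips or clamshell 2GB chips, while a 384-bit bus only lands on 24GB or 48GB.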

ZeroBarrier

Gawd

- Joined

- Mar 19, 2011

- Messages

- 1,017

Allocating VRAM ≠ using VRAM

Most games allocate more VRAM than they actually need, and very few actually need anything over 16GB. We have been over this ad nauseam on these forums. How is this misinformation still floating around here?

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,577

Yeah it makes no sense at all. If games suddenly require 32GB VRAM in 2025 for 4K gaming then what? 1440p is gonna require 24GB and 1080p 16GB? Lol...

Require is a strong word, and 2025 could be a bit short-sighted.

If the 5090 releases in 2025, it needs to be good enough for when the first wave of PS6 games (2027 launch, I imagine) starts to be in sight.

Games will probably not require more than 8-10 gigs if they want to run on a PS5 / Xbox Series X, and that should be safe until 2027. But as has already started, they do not necessarily require more than 8 (they will run well at medium-high with a bit of playing around with the settings, usually done by your auto-configurator) but can start to use more if it is available.

It is almost certain that no game will require more than 16 in 2026-2027 (probably not even 12), but games could use more than 24 in 2027, when the 5090 could still be the biggest Nvidia card available, maybe. The rumors of a 32GB 5090 could be true.

This is from a hardocp forum member, I believe:

https://twitter.com/robbiekhan/status/1704366480521871781?s=20

it's actually possible to max out the VRAM on an RTX 4090 in #starfield at 1440p - You just need 64GB of high-def texture packs!

Nebell

2[H]4U

- Joined

- Jul 20, 2015

- Messages

- 2,384

With 4090 mobile being basically desktop 4070Ti but a bit more vram and high end mobile CPUs pushing some insane numbers, I'm more interested in laptops for the next gen.

Especially when something like BigScreen Beyond and/or Visor 4k are around the corner.

In 2025, 5090 mobile will probably be a monster and compare to desktop 4090, or better. CPUs as well but honestly, performance they deliver now would be enough.

New Legion 9 with mini-led, water cooling, 5090 and 15980HX (or whatever would be top tier in 2025) would cost an arm and a leg (about €5500 in EU) but that thing would be an absolute monster.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,577

I agree. While the naming of laptop GPUs absolutely sucks (the 4090 should really have been called a 4090M or something, just like the old days of the GTX 980M), the performance is pretty wild. Laptop 4090s basically provide desktop 3090 class performance. If someone had told you 2 years ago when the 3090 launched that we would get that level of performance in a laptop just 2 years later, you would have found it hard to believe. In a few more generations we will have laptops capable of playing fully path traced games at high frame rates, and that's pretty exciting.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,672

AMD just released a laptop Ryzen CPU with V-Cache.
