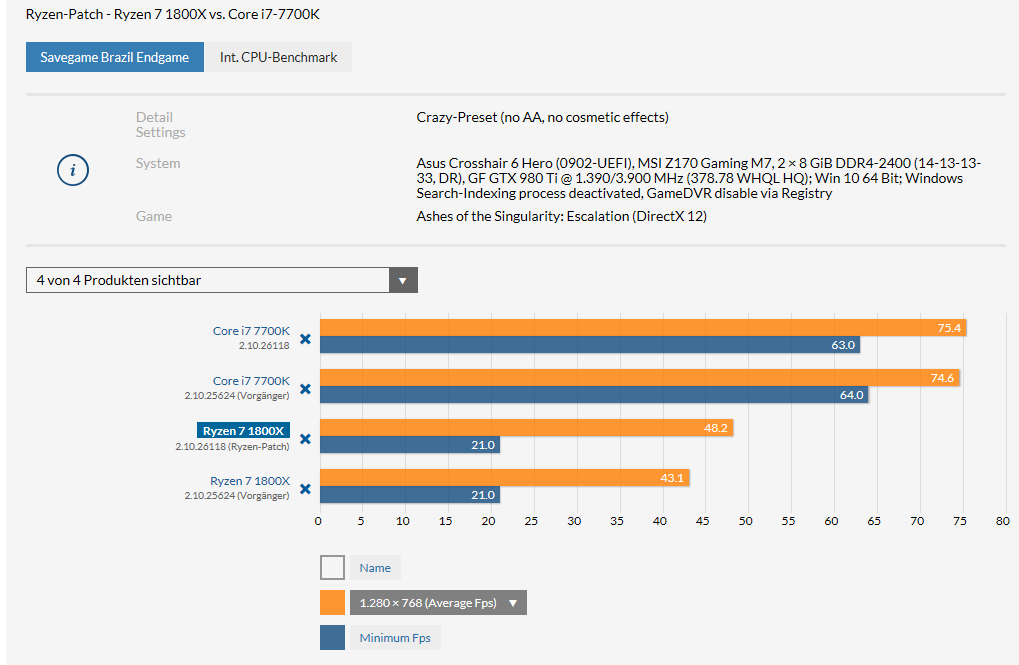

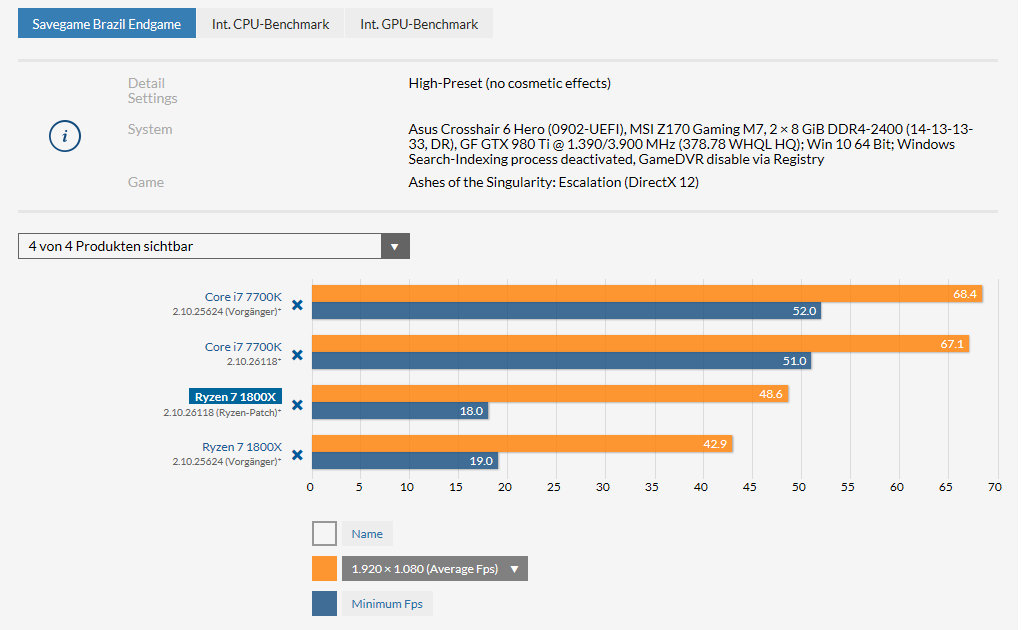

I ran the benchmark last night at Crazy settings, twice, and saved the output. Total coincidence (I was just benchmarking the new rig for the hell of it), but it may be useful in this situation. I just need to match the settings and run it again. Obviously I can't run PresentMon on the old version, but I should be able to capture both the internal results and PresentMon on the new runs, and compare.

Ah, that is unfortunate. You would really want PresentMon data for both runs; otherwise it has no context or baseline to compare against.

I would hold off on putting any time into testing with PresentMon for that reason, unless you still have a non-updated copy of AoTS and can run the benchmark offline.

Thanks
