erek

[H]F Junkie · Joined: Dec 19, 2005 · Messages: 10,929

2025 isn't soon enough!

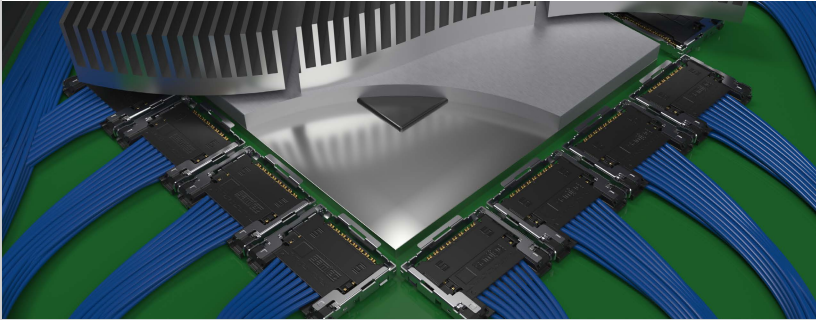

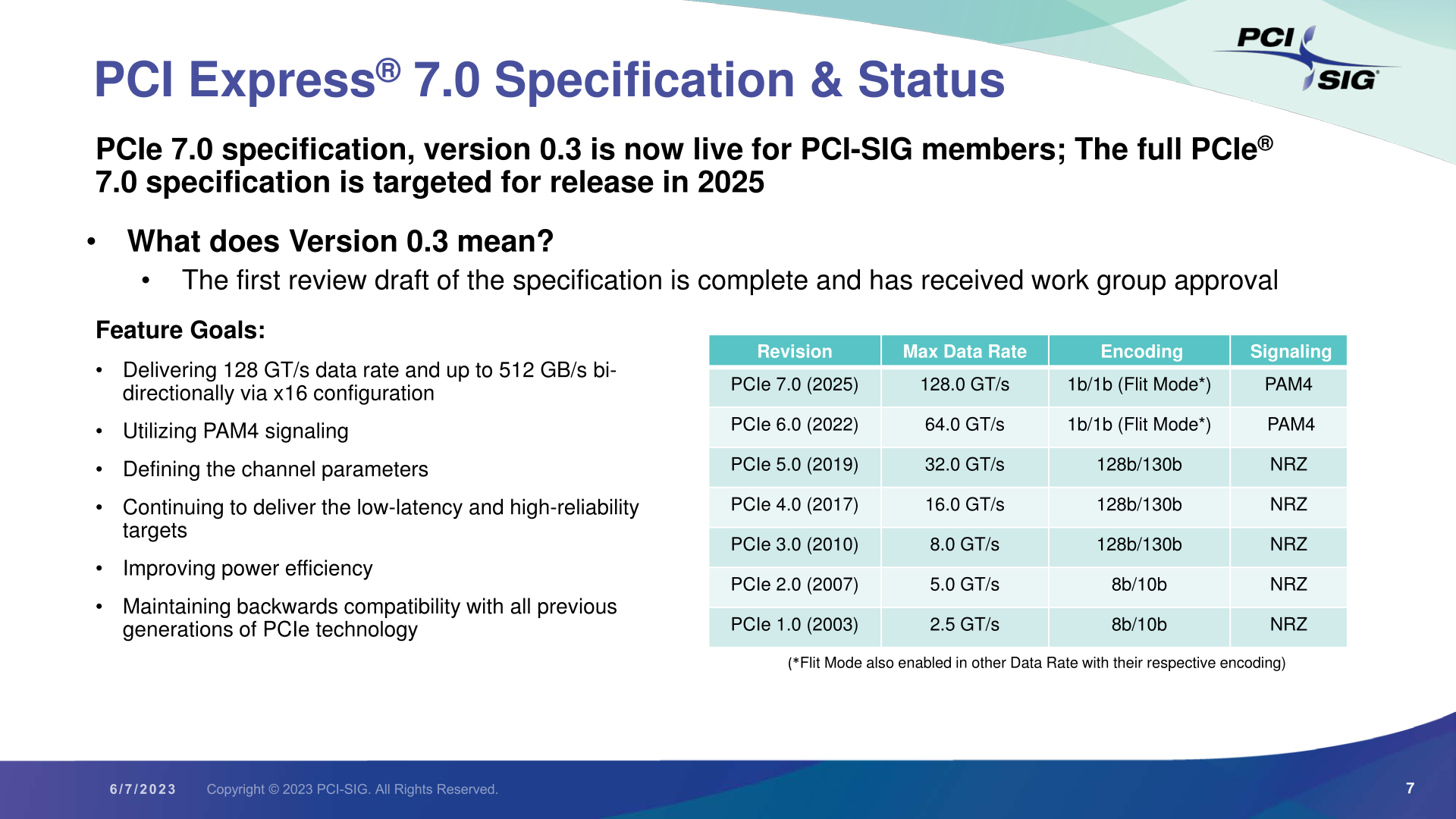

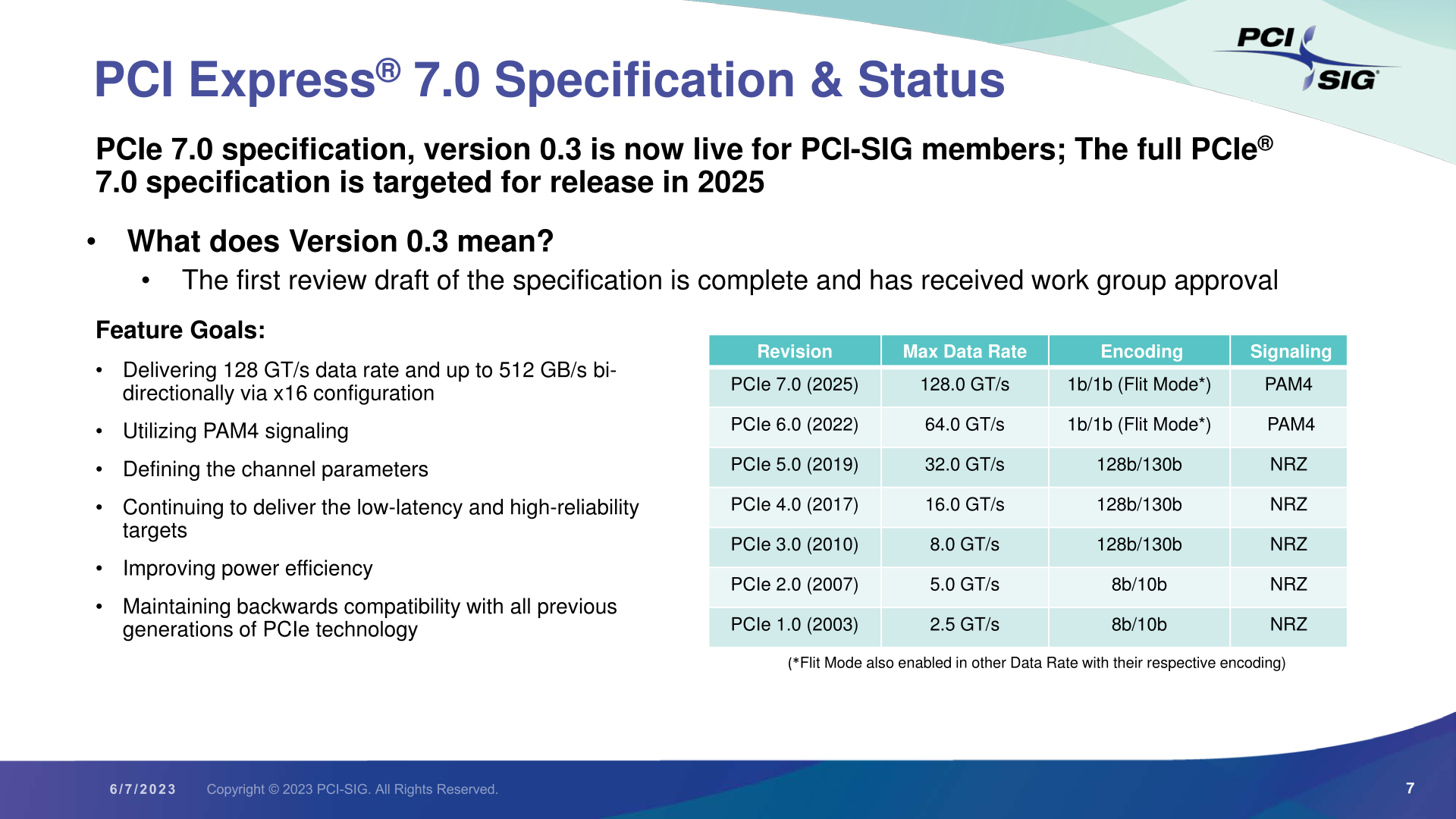

"While we traditionally think of PCIe first and foremost as a bus routed over printed circuit boards, the standard has always allowed cabling as well. And with their newer standards, the PCI-SIG is actually expecting the use of cabling in servers and other high-end devices to grow, owing to the channel reach limitations of PCBs, and how it’s getting worse with higher signaling frequencies. So, cabling is being given a fresh look as an option to maintain/extend channel reach with the latest standards, as new techniques and new materials are creating new options for better cables.

To that end, the PCI-SIG is developing two cabling specifications, which are expected to be ready for release in Q4 of this year. The specs will cover both PCIe 5.0 and PCIe 6.0 (since the signaling frequency is unchanged), with specifications for both internal and external cables. Internal cabling would be to hook up devices to other parts within a system – both devices and motherboards/backplanes – while external cabling would be used for system-to-system connections.

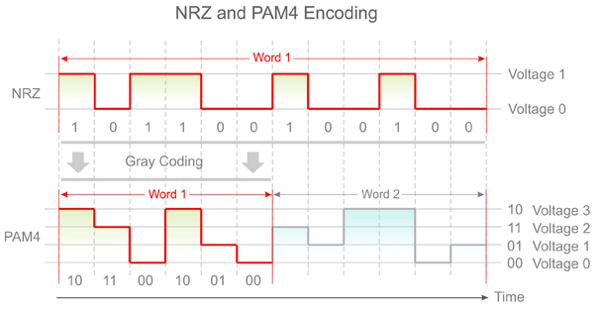

In terms of signaling technologies and absolute signaling rates, PCI Express trails Ethernet by a generation or so. And that means that much of the initial development on high speed copper signaling has already been tackled by the Ethernet workgroups. So, while work still has to be done to adapt these techniques for PCIe, the basic techniques have already been proven, which helps to simplify the development of the PCIe standard and cabling by a bit.

All told, cable development is decidedly a more server use case of the technology than what we see in the consumer space. But a cabling standard is still going to be an important development for those use cases, especially as companies continue to stitch together ever more powerful systems and clusters."

Source: https://www.anandtech.com/show/1890...12gbps-connectivity-on-track-for-2025-release
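For anyone wondering about the "signaling frequency is unchanged" bit in the quote: here's a quick back-of-the-envelope sketch. The transfer rates and encodings below are not from the quoted article; they are the commonly published figures (PCIe 5.0 at 32 GT/s with NRZ, PCIe 6.0 at 64 GT/s with PAM4), and the helper name `nyquist_ghz` is just made up for illustration. PAM4 carries two bits per symbol, so the symbol rate on the wire stays the same even though the data rate doubles.

```python
# Rough sketch: why PCIe 5.0 and 6.0 can share a cabling spec.
# Figures are the commonly published per-lane rates (an assumption here,
# not taken from the quoted article).
GENERATIONS = {
    "PCIe 5.0": {"gt_per_s": 32, "bits_per_symbol": 1},  # NRZ: 1 bit per symbol
    "PCIe 6.0": {"gt_per_s": 64, "bits_per_symbol": 2},  # PAM4: 2 bits per symbol
}


def nyquist_ghz(gt_per_s, bits_per_symbol):
    """Return the Nyquist frequency in GHz for one lane."""
    symbol_rate_gbaud = gt_per_s / bits_per_symbol  # symbols per second on the wire
    return symbol_rate_gbaud / 2  # Nyquist frequency is half the symbol rate


for name, params in GENERATIONS.items():
    print(f"{name}: {nyquist_ghz(**params):.0f} GHz Nyquist")
```

Both generations come out at the same Nyquist frequency, which is what the cable has to carry. PAM4 does squeeze the voltage margins between levels, so that part of the channel budget still gets tighter with 6.0.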
