> If it were a GTX 480 it would certainly have grilled the eggs and bacon for you.

We’ve come a crazy long way from the days of having to install aftermarket coolers to get decent cooling, that’s for sure.

So glad the stock cooling solutions have come so far, especially with such high TDPs with current designs.

Overhauled NVIDIA RTX 50 "Blackwell" GPUs reportedly up to 2.6x faster vs RTX 40 cards courtesy of revised Streaming Multiprocessors and 3 GHz+ clock

- Thread starter erek

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,671

> We’ve come a crazy long way from the days of having to install aftermarket coolers to get decent cooling that’s for sure.

No kidding.... I'm especially happy there are models with stock AIO water too.

> The 4090 wasn't double the cost of the 3090 despite having double the performance.

Where is it double the 3090, with DLSS? RT? In pure raster it is 60% faster.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,671

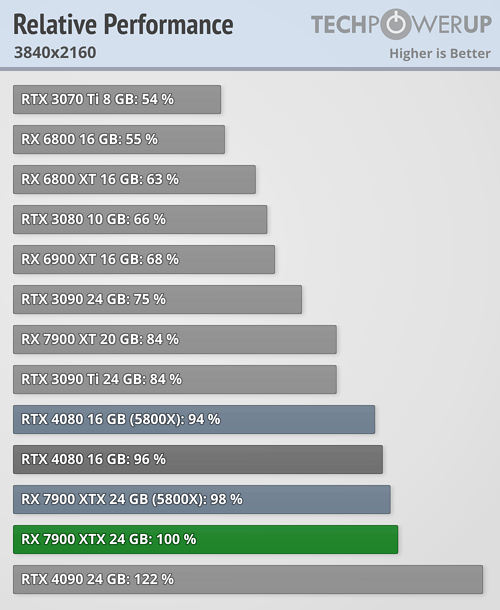

> Where is it double the 3090, with DLSS? RT? In pure raster it is 60% faster.

Eh, no. It's 70% per techpowerup game averages at 4k, pure raster. It only had a 7% price increase. Plus it has frame gen and updated rt cores. Talk about a nice gen on gen gain...! EDIT: Plus it uses less power.

> Eh, no. It's 70% per techpowerup game averages at 4k, pure raster. It only had a 7% price increase. Plus it has frame gen and updated rt cores. Talk about a nice gen on gen gain...!

OK, 70%, that is not double.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,671

> OK, 70%, that is not double.

DLSS3 brings it to 3.4x the base performance of the 3090, or 240% faster. Many more games with it new or patched are coming in hot, now that Nvidia released the software tools. Already owners report it works great in Spider-Man, Witcher 3, Cyberpunk 2077, and more.
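For anyone keeping score on the "70% is not double" back-and-forth, here is a quick sketch of the arithmetic being argued over. The function names are made up for illustration, and the figures are just the ones quoted in the posts above, not measurements. "N% faster" and "Nx the performance" differ by the baseline: 70% faster is 1.7x, and doubling needs 100% faster.

```python
def percent_faster_to_multiplier(percent_faster: float) -> float:
    """'70% faster' means 1.70x the baseline frame rate."""
    return 1.0 + percent_faster / 100.0


def multiplier_to_percent_faster(multiplier: float) -> float:
    """'3.4x the base performance' means 240% faster than the baseline."""
    return (multiplier - 1.0) * 100.0


if __name__ == "__main__":
    # Percent figures quoted in the thread (raster claims and "double").
    for pct in (60, 70, 100):
        print(f"{pct}% faster = {percent_faster_to_multiplier(pct):.2f}x")
    # Multiplier figures quoted in the thread (2x, the rumored 2.6x, DLSS3 3.4x).
    for mult in (2.0, 2.6, 3.4):
        print(f"{mult:.1f}x = {multiplier_to_percent_faster(mult):.0f}% faster")
```

So both sides are consistent with themselves: 1.7x in raster is short of 2x, and the 3.4x figure only shows up once frame generation is counted.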

Johnx64

[H]F Junkie

- Joined

- Apr 22, 2002

- Messages

- 9,257

It will have its own self contained AI that will generate frames based on what it decides you want. In fact, you won't have to purchase games at all, the card will generate the games too.

Hopefully they will be fully playable instead of the Alphas publishers push out these days.

Krenum

Fully [H]

- Joined

- Apr 29, 2005

- Messages

- 19,193

Customer: What's the price?

Nvidia: Fuck you is the price.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,671

> Customer: Whats the Price?
>
> Nvidia: Fuck you is the price.

... and yet, the prices are bearable by those who have the cash, so they buy them. If the card is too much for you, no shame in that, just buy something cheaper or barring that, an older card. No one's forcing you to buy at the price you're stating.

> DLSS3 brings it to 3.4x the base performance of the 3090, or 240% faster. Many more games with it new or patched are coming in hot, now that Nvidia released the software tools. Already owners report it works great in Spider-Man, Witcher 3, Cyberpunk 2077, and more.

That is great, but I only care about raster performance.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,671

> That is great, but I only care about raster performance.

That is raster performance. I didn't include raytracing, as stated.

> Eh, no. It's 70% per techpowerup game averages at 4k, pure raster. It only had a 7% price increase. Plus it has frame gen and updated rt cores. Talk about a nice gen on gen gain...! EDIT: Plus it uses less power.

staknhalo

Supreme [H]ardness

- Joined

- Jun 11, 2007

- Messages

- 6,924

> Customer: Whats the Price?
>
> Nvidia: Fuck you is the price.

Me, a shareholder: You heard the man, fuck you!

Me, a customer: Well, fuck me...

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,671

> Me, a shareholder: You heard the man, fuck you!
>
> Me, a customer: Well, fuck me...

Hehe.

Randall Stephens

[H]ard|Gawd

- Joined

- Mar 3, 2017

- Messages

- 1,820

> I hear it also comes with a voucher good for one *free reacharound.

I always love how positive you are about these things.

I ran 2x GTX 470s in SLI... Talk about heat!

Your poor air conditioner

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,671

> Your poor air conditioner.

Indeed. That was the first time I really thought about heat being vented out of my computer like a space heater!

Krenum

Fully [H]

- Joined

- Apr 29, 2005

- Messages

- 19,193

> ... and yet, the prices are bearable by those who have the cash, so they buy them. If the card is too much for you, no shame in that, just buy something cheaper or barring that, an older card. No one's forcing you to buy at the price you're stating.

Alright mister passive aggressive I'll bite.

I'm finding as I get older and older my priorities are changing. While I can afford any price on the market, I start to ponder how much it's really "worth". Since worth is subjective, I find myself wondering if paying $1200+ for a graphics card in 2023 is really worth it.

I suppose you are right that I should buy something cheaper, since I only play a fraction of the games that I used to in my youth. Diablo 4 & Starfield are the ones I'm planning on for this year. Of course Diablo 4 runs fine on the 2070 @ 1440p anyways.

Krenum

Fully [H]

- Joined

- Apr 29, 2005

- Messages

- 19,193

Your poor air conditioner.

staknhalo

Supreme [H]ardness

- Joined

- Jun 11, 2007

- Messages

- 6,924

I said it before but I'm never paying more than I did for my EVGA 2070 Ultra ($525) - and if that means I end up buying a GT 6030 next then so be it - I've paid for and played on x80 tier cards, x70 tier cards, x60 tier cards, x50 tier cards over the years/decades

Dollar amount I pay for the card matters to me, not the card tier - I think there's also a bit of people tying what tier they're used to/want to their identity too much

But that said it's legit to complain about rising costs - even directed at Nvidia - though keep in mind it's not just Nvidia nor just GPUs - but still fine to direct it at them too

Also keep in mind - Nvidia is setting itself up to be a "oh yeah and we do gaming too still I think" company - so get used to it or move on in whatever way you decide

> it wasn't 2x, more like 75% faster. Thats why I call horse shit in 2.6x lmao. I am sure its ray tracing number.

Agreed, I would call horse shit on 2x 90% of the time. There hasn't been a big enough performance increase on TSMC processes lately to get 2x+ faster without massively increasing the die, and that would eat into Nvidia's margins, and we all know they love their margins. That, and GPU sales have slowed dramatically. We will see if Nvidia will increase the 5090 price, I guess. The 4090 with DLSS is exactly at the limit of what 4K high-refresh monitors/TVs can show. I guess the 5090 can do it without DLSS, but I'm not sure it's worth the money, since DLSS without frame generation is pretty damn good.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,671

> Alright mister passive aggressive I'll bite.
>
> I'm finding as I get older and older my priorities are changing. While I can afford any price on the market, I start to ponder how much it's really "worth". Since worth is subjective, I find myself wondering if paying $1200+ for a graphics card in 2023 is really worth it.
>
> I suppose you are right that I should buy something cheaper, since I only play a fraction of the games that I used to in my youth. Diablo 4 & Starfield are the ones I'm planning on for this year. Of course Diablo 4 runs fine on the 2070 @ 1440p anyways.

I wasn't trying to be passive aggressive, just pragmatic. Same argument I'd make for your situation, which you arrived at anyway.

NamelessPFG

Gawd

- Joined

- Oct 16, 2016

- Messages

- 893

So a top-end Blackwell GPU could actually hit over 90 FPS in MSFS 2020 in VR mode where the RTX 4090 only does 45 FPS? I'll believe it when I see it.

Not that I plan on upgrading so soon, I have a "skip a generation" rule and I'm already on Ada Lovelace right now, so that puts me on whatever's after Blackwell at the soonest.

> We’ve come a crazy long way from the days of having to install aftermarket coolers to get decent cooling that’s for sure.

At the cost of gigantic heatsinks that block off four slots (most AIB 4080/4090 cards), which leaves me wondering if they should really just waterblock 'em all from the factory if this is the direction we're going.

With that said, I have no complaints about my Zotac 4080's thermals or fan noise, just the fact that I can't use all but one of my other PCIe slots due to mobo layout.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,671

> And you can have one too! ... for $5399.95

Pfft, use your imagination! $5080!

> Also keep in mind - Nvidia is setting itself up to be a "oh yeah and we do gaming too still I think" company - so get used to it or move on in whatever way you decide

A lot of people are saying this, but the gaming market is worth billions and Nvidia isn’t going to walk away from it. Just because they’re cranking prices doesn’t mean they don’t want to play, they’re just trying to find out what the market will bear. The fact that gamers (not miners) lined up to buy a $1600 video card proves to them their pricing strategy is fine. Look forward to an $1800-$2000 RTX 5090 next generation because why not, and the same people will buy it.

staknhalo

Supreme [H]ardness

- Joined

- Jun 11, 2007

- Messages

- 6,924

> A lot of people are saying this, but the gaming market is worth billions and Nvidia isn’t going to walk away from it. Just because they’re cranking prices doesn’t mean they don’t want to play, they’re just trying to find out what the market will bear. The fact that gamers (not miners) lined up to buy a $1600 video card proves to them their pricing strategy is fine. Look forward to an $1800-$2000 RTX 5090 next generation because why not, and the same people will buy it.

I never said walk away, I said won't be their primary business anymore

> I never said walk away, I said won't be their primary business anymore

Maybe so, obviously, but the competition outside gaming will be significantly bigger: IBM, Amazon, Facebook, Google, AMD-Xilinx, Intel, Apple.

https://www.ibm.com/blogs/systems/i...en-microprocessor-for-ibm-z-and-ibm-linuxone/

A lot of it is "new": to run one specific thing, you do not need to build relationships with something as diverse as the world gaming industry or support almost every PC config from the last decade, DX9, DX11, DX12. On the gaming side, for games to work and for developers to support you when it is needed, you need to be big, which creates a giant moat against a new entrant.

So unlike gaming GPUs, there is a long list of startups in the game, like: https://groq.com/why-ai-requires-a-new-chip-architecture/

Take AMD right now: just imagine how much more than gaming Zen 4 is involved in, yet it still made quite the gaming-oriented launch. Arguably the 7950X3D was even targeted at gamers. Even though AMD seems to have a hard time delivering all the Epyc, Threadripper, and laptop parts it could sell, it still kept the focus on the gaming part.

Margins are so great that Nvidia will keep a large focus on that market as long as they have them, and it should be much easier to keep them in that market than in most others, until it becomes a commodity (when your 3-year-old regular chip can play at 240 Hz, 8K in each eye, with photorealistic rendering).

I could be talking nonsense, but that's my feeling: as long as you have a duopoly with a competitor that plays the game, it is, for both, a margin dream (I think Nvidia reached 60%; Nvidia in a good year reached Microsoft in a bad year, a software company with nonsense margins).

Everything outside gaming feels quite dangerous, at risk of being overtaken by the competition or by the biggest players building their own in-house solutions.

staknhalo

Supreme [H]ardness

- Joined

- Jun 11, 2007

- Messages

- 6,924

Take a look at datacenter in nvidia's past quarterly reports, it's already happening, along with them pushing omniverse in industry sectors

And look at all the recent interviews with Jensen and how he talks about how they're an accelerated computing company, for all different parts of the economy. And they were just right, in his words, on betting on gaming as the first type of accelerated computing application for them. Now is their time of branching/building/cementing themselves out elsewhere, and gaming is just where they started.

The 2.x-2.6x faster target figure would almost always be a pipe dream they will not actually reach as an average FPS, especially not with the CPUs and games existing at launch.

But was it specific to the 5090 versus the 4090? That would be quite something to achieve going from TSMC 5 to 3, even with a new architecture.

But it could be an option for the 5080 vs 4080 or 5070 vs 4070. If they feel they need to go 2.x faster to keep the price high, why not?

Lovelace pricing-performance was made in a mad world after all; the 5xxx could come from a different mindset.

> Take a look at datacenter in nvidia's past quarterly reports, it's already happening, along with them pushing omniverse in industry sectors
>
> And look at all the recent interviews with Jensen and how he talks about how they're an accelerated computing company, for all different parts of the economy. And they were just right, in his words, on betting on gaming as the first type of accelerated computing application for them. Now is their time of branching/building/cementing themselves out elsewhere, and gaming is just where they started.

I did not know how much bigger it already was (doubling). Since Turing there has already been a significant "here is a GPU computer, find stuff to do with it in your game if you can" element: taking advantage of all the hardware simplifies design and manufacturing versus having a completely separate product line, and it could get more and more like that.

Game engines will probably shift a bit toward this to match (running language models for NPC dialogue, generative construction of trees and buildings so they are all unique, and so on).

Flogger23m

[H]F Junkie

- Joined

- Jun 19, 2009

- Messages

- 14,373

I have zero qualifications to give any insight into this. But let me tell you that is not going to happen.

Even if it is somehow possible, Nvidia will typically stay at around 30% real-world performance boosts between generations. They really have no reason not to slow-roll performance jumps even if they are capable of much more. They have so much market share, and I doubt AMD will beat Nvidia's fastest GPU anytime soon.

The 7900 XTX, keeping more or less the same "price tag" in a context where we had 18% inflation between 2020 and 2023, seems to beat the 6900 XT at launch by almost 50% at 4K (56% with RT on), while having 50% more RAM for those for whom it matters.

A similar jump from AMD's offering would certainly force Nvidia to do more than the 30% jump every 2 years or so, especially with Ampere and Lovelace creating much bigger expectations, and even more so if they want to keep those price tags. The 4080 seems to deliver a 45% performance jump (+53% with RT on) plus DLSS 3 over the 3080, and it was not well received.

They could go lower on price, obviously, but I am not sure they want to. If the 5080 beats the 4080 by only 30%, it could be hard on the marketing and pricing side in a world where the 2-year-old 4090 beats the 4080 by about the same amount.

There is always the wildcard of AMD fixing virtually all their chiplet issues, which could push Nvidia to be ready for that type of performance and, via some industrial espionage, to cut it back closer to launch if it is not needed, keeping the next generational jump they need certain to be achievable.

At 4K raster, according to TechPowerUp's sample of games:

- 2080 -> 3080 jump was 66%
- 3080 -> 4080 jump was 50%

It would not surprise me if they go for something similar again, while having some best-case scenario of near 2x that they can show off and that gets rounded up to 2x.
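To put those two jumps next to the rumored 2.6x, here is a rough sketch of how generational gains compound. It assumes the 66% and 50% figures quoted above are taken at face value; the helper name `compound_multiplier` is just for illustration. Percent gains multiply rather than add, so two generations of +66% and +50% come to about 2.49x, which is still under the 2.6x being claimed for a single generation.

```python
from math import prod


def compound_multiplier(jumps_percent):
    """Combine successive 'N% faster' jumps into one overall multiplier."""
    return prod(1.0 + p / 100.0 for p in jumps_percent)


if __name__ == "__main__":
    # Figures as quoted in the post above (4K raster, TechPowerUp averages).
    jumps = [66, 50]  # 2080 -> 3080, then 3080 -> 4080
    two_gen = compound_multiplier(jumps)
    rumored_single_gen = 2.6  # the headline claim for Blackwell

    print(f"2080 -> 4080 over two generations: {two_gen:.2f}x")
    print(f"Rumored single-generation jump:    {rumored_single_gen:.2f}x")
    print(f"Rumor exceeds the last two gens combined: {rumored_single_gen > two_gen}")
```

That comparison is the reason the "I'll believe it when I see it" reaction is reasonable for an average-FPS figure, though a cherry-picked best case could still land there.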

erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,898

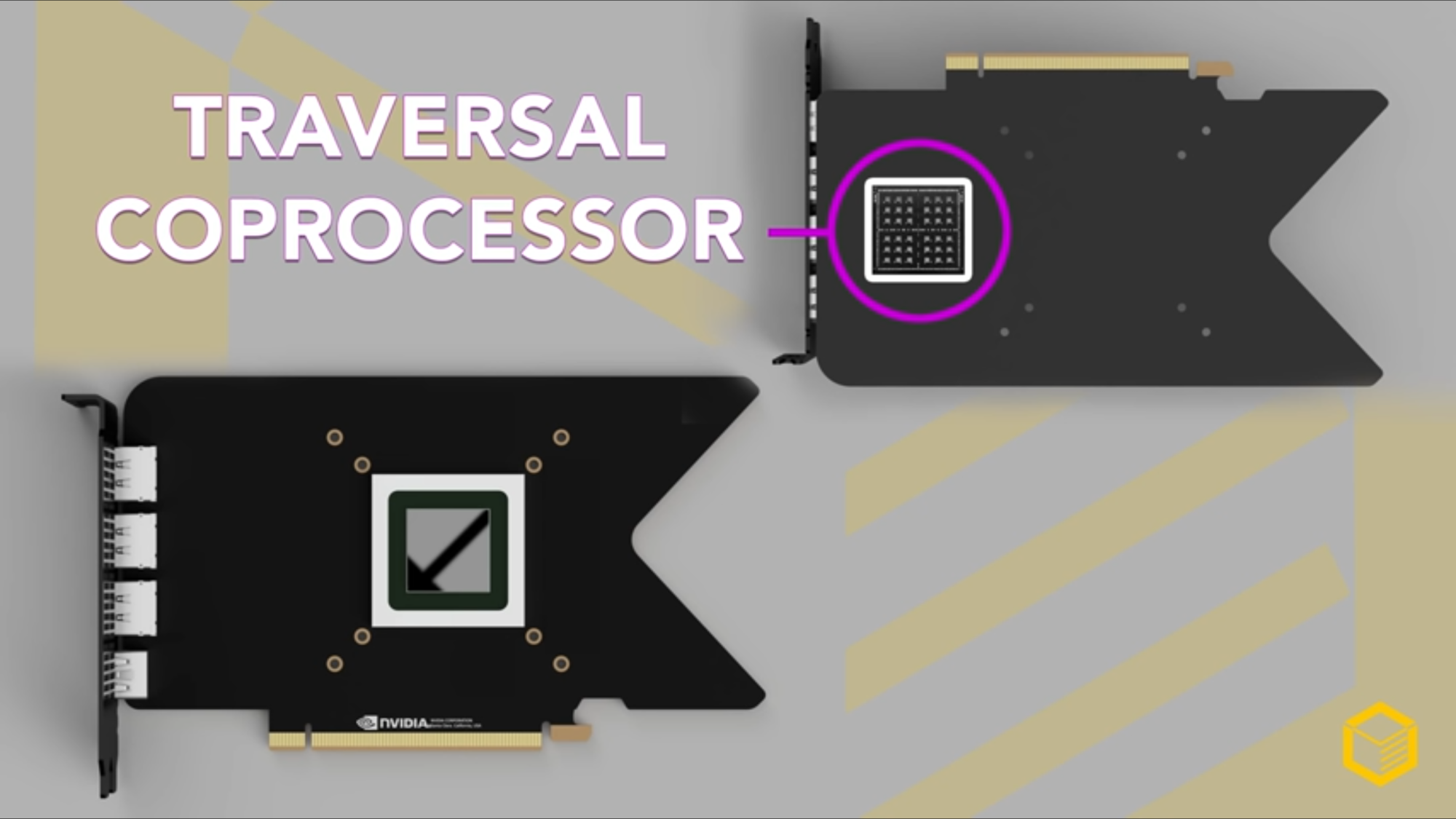

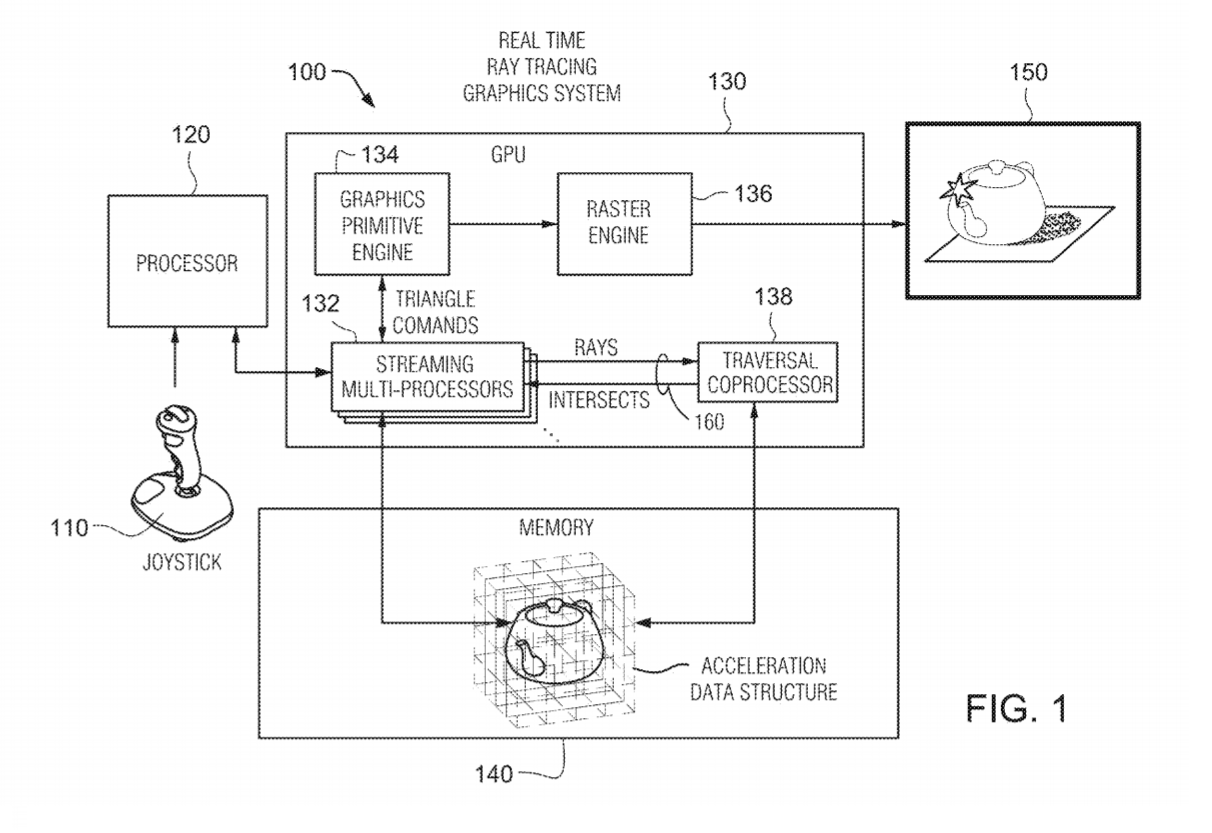

> Temporal Transmission Control Protocol. TTCP it is. Nvidia needs to get on this for more frames.

They’re deploying the Traversal Co-processor this time for real

http://www.freepatentsonline.com/y2020/0050451.html

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,162

> Where is it double the 3090, with DLSS? RT? In pure raster it is 60% faster.

I'm talking about the whole card, which means ray tracing. If you're just looking at rasterization performance, then you're only talking about 70% of the hardware.

sharknice

2[H]4U

- Joined

- Nov 12, 2012

- Messages

- 3,759

If you don't care about ray tracing you don't really care about graphics. It isn't 2018 anymore. Every new AAA game has ray tracing, games without look like garbage by comparison. Ray tracing performance is more relevant than rasterization at this point.

Sir Beregond

Gawd

- Joined

- Oct 12, 2020

- Messages

- 947

> If you don't care about ray tracing you don't really care about graphics. It isn't 2018 anymore. Every new AAA game has ray tracing, games without look like garbage by comparison. Ray tracing performance is more relevant than rasterization at this point.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,558

> If you don't care about ray tracing you don't really care about graphics. It isn't 2018 anymore. Every new AAA game has ray tracing, games without look like garbage by comparison. Ray tracing performance is more relevant than rasterization at this point.

Lol, yeah that's not reality at all. Hogwarts Legacy looks so good without turning on ray tracing, I was hard pressed to notice the difference when I turned on Ray Tracing. Raster performance remains king when it comes to almost all buyers still.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,817

> If you don't care about ray tracing you don't really care about graphics. It isn't 2018 anymore. Every new AAA game has ray tracing, games without look like garbage by comparison. Ray tracing performance is more relevant than rasterization at this point.

I guess if you just stare at curated Digital Foundry screenshots all day. Most games barely make a difference with it.

*Edit* Speaking of "curated screenshots", I love when they clearly gimp the non-RT version to make RT look better. The best example I saw of this was, I think, a Cyberpunk one with a car where the non-RT screenshot had literally no shadowing under the car, which is absolute nonsense. You don't need RT to make a shadow under an object.
