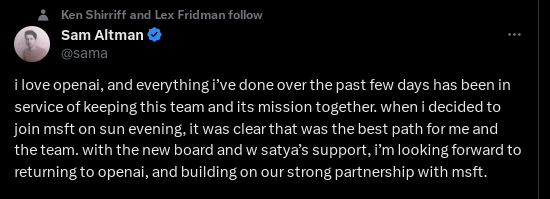

OpenAI CEO Sam Altman was ousted in a sudden boardroom knifing on Friday, arising from a power struggle over the direction of the company behind ChatGPT.

According to a tweet from former OpenAI President Greg Brockman, Altman received a text on Thursday night from OpenAI chief scientist and fellow board member Ilya Sutskever, asking him to meet at noon on Friday. When Altman logged into the meeting, Brockman wrote, the entire OpenAI board was present—except for Brockman. Sutskever informed Altman he was being fired.

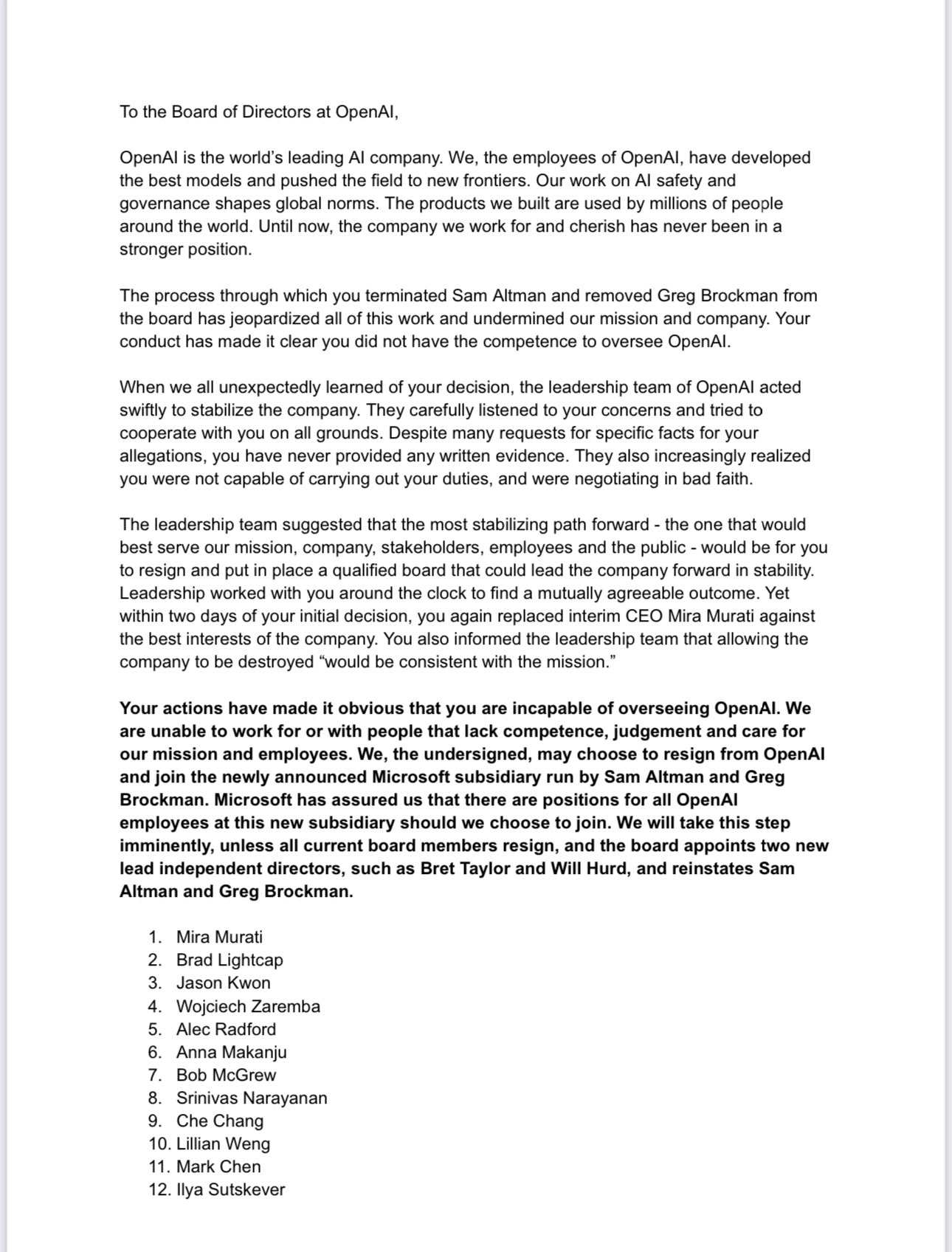

Brockman said that soon after, he had a call with the board, where he was informed that he would be removed from his board position and that Altman had been fired. Then, OpenAI published a blog post sharing the news of Altman’s ouster. Brockman wrote that the only person on the management team who knew ahead of time was Chief Technology Officer Mira Murati, who was named interim CEO.

“Sam and I are shocked and saddened by what the board did today,” Brockman wrote.

A knowledgeable source said the board struggle reflected a cultural clash at the organization, with Altman and Brockman focused on commercialization and Sutskever and his allies focused on the original non-profit mission of OpenAI.

https://arstechnica.com/ai/2023/11/...ltman-citing-less-than-candid-communications/

https://www.reuters.com/technology/openai-ceo-sam-altman-step-down-2023-11-17/

