erek


GeForce NTC

Source: https://videocardz.com/newz/nvidia-...than-standard-compression-with-30-less-memory

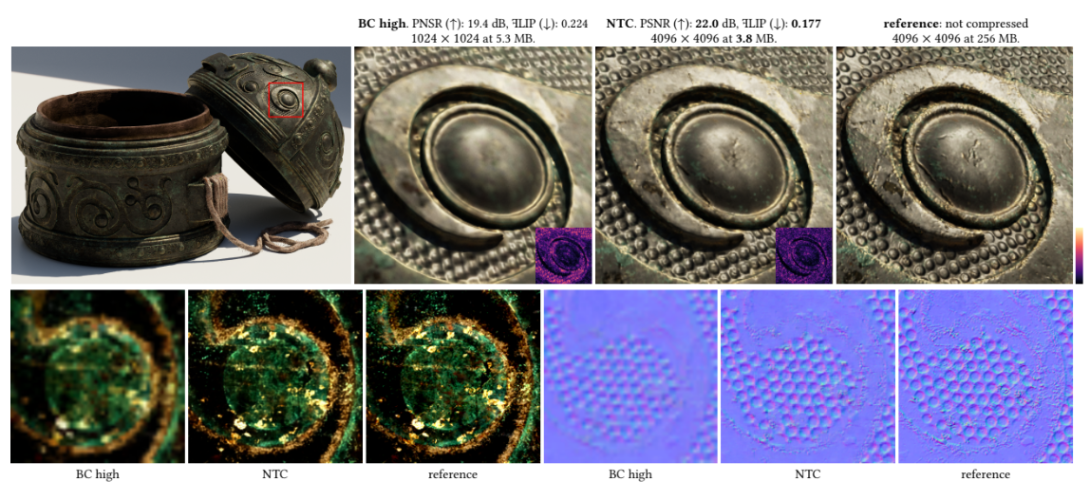

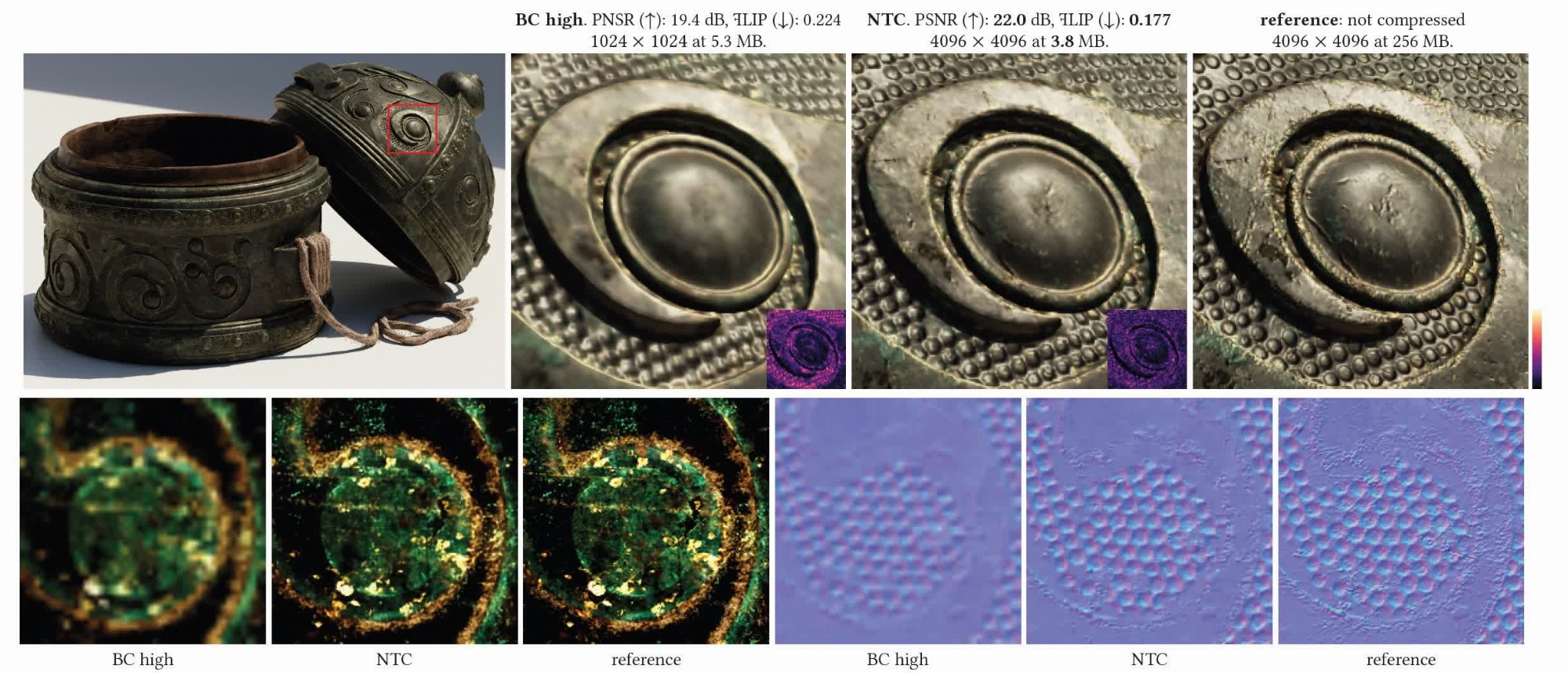

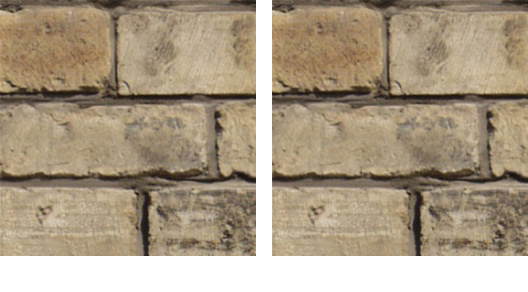

"Unlike common BCx algorithms, which require custom hardware, this algorithm utilizes the matrix multiplication methods, which are now accelerated by modern GPUs. According to the paper, this makes the NTC algorithm more practical and more capable due to lower disk and memory constraints."

"The continuous advancement of photorealism in rendering is accompanied by a growth in texture data and, consequently, increasing storage and memory demands. To address this issue, we propose a novel neural compression technique specifically designed for material textures. We unlock two more levels of detail, i.e., 16× more texels, using low bitrate compression, with image quality that is better than advanced image compression techniques, such as AVIF and JPEG XL. At the same time, our method allows for on-demand, real-time decompression with random access similar to block texture compression on GPUs. This extends our compression benefits all the way from disk storage to memory. The key idea behind our approach is compressing multiple material textures and their mipmap chains together, and using a small neural network, that is optimized for each material, to decompress them. Finally, we use a custom training implementation to achieve practical compression speeds, whose performance surpasses that of general frameworks, like PyTorch, by an order of magnitude."

— Random-Access Neural Compression of Material Textures, NVIDIA
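
For anyone wondering what "random access with a small neural network" could look like in practice, here is a rough Python sketch. It is not the paper's architecture: the latent grid layout, the layer sizes, the 9 output channels, and the names `texel_gain`, `make_mlp`, and `decode_texel` are all made up for illustration, and the weights are random rather than trained. It only shows two things from the abstract: two extra mip levels mean 4 × 4 = 16× the texels, and each texel can be decoded independently with a few small matrix multiplies.

```python
import random


def texel_gain(extra_mip_levels: int) -> int:
    """Each finer mip level doubles width and height, so texels grow 4x per level."""
    return 4 ** extra_mip_levels


def make_mlp(sizes, seed=0):
    """Build a tiny MLP with random weights (a stand-in for per-material training)."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def decode_texel(latents, mlp, x, y):
    """Decode one texel from its latent vector without touching any neighbours."""
    features = latents[y][x]
    for i, (weights, biases) in enumerate(mlp):
        # One matrix-vector product per layer.
        features = [
            sum(w * f for w, f in zip(row, features)) + b
            for row, b in zip(weights, biases)
        ]
        if i < len(mlp) - 1:
            features = [max(0.0, v) for v in features]  # ReLU on hidden layers
    return features


# Hypothetical setup: a 4x4 grid of 8-value latents, decoded to 9 material
# channels (e.g. albedo RGB, normal XYZ, roughness, metalness, AO).
rng = random.Random(1)
latents = [[[rng.random() for _ in range(8)] for _ in range(4)] for _ in range(4)]
mlp = make_mlp([8, 16, 9])

channels = decode_texel(latents, mlp, x=1, y=2)
print(texel_gain(2), len(channels))
```

The point of the sketch is that `decode_texel` only reads the latent vector at the requested coordinate, which is the property that lets a shader fetch single texels on demand, much as it would with a BCx block.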
