M76

[H]F Junkie

- Joined: Jun 12, 2012
- Messages: 14,042

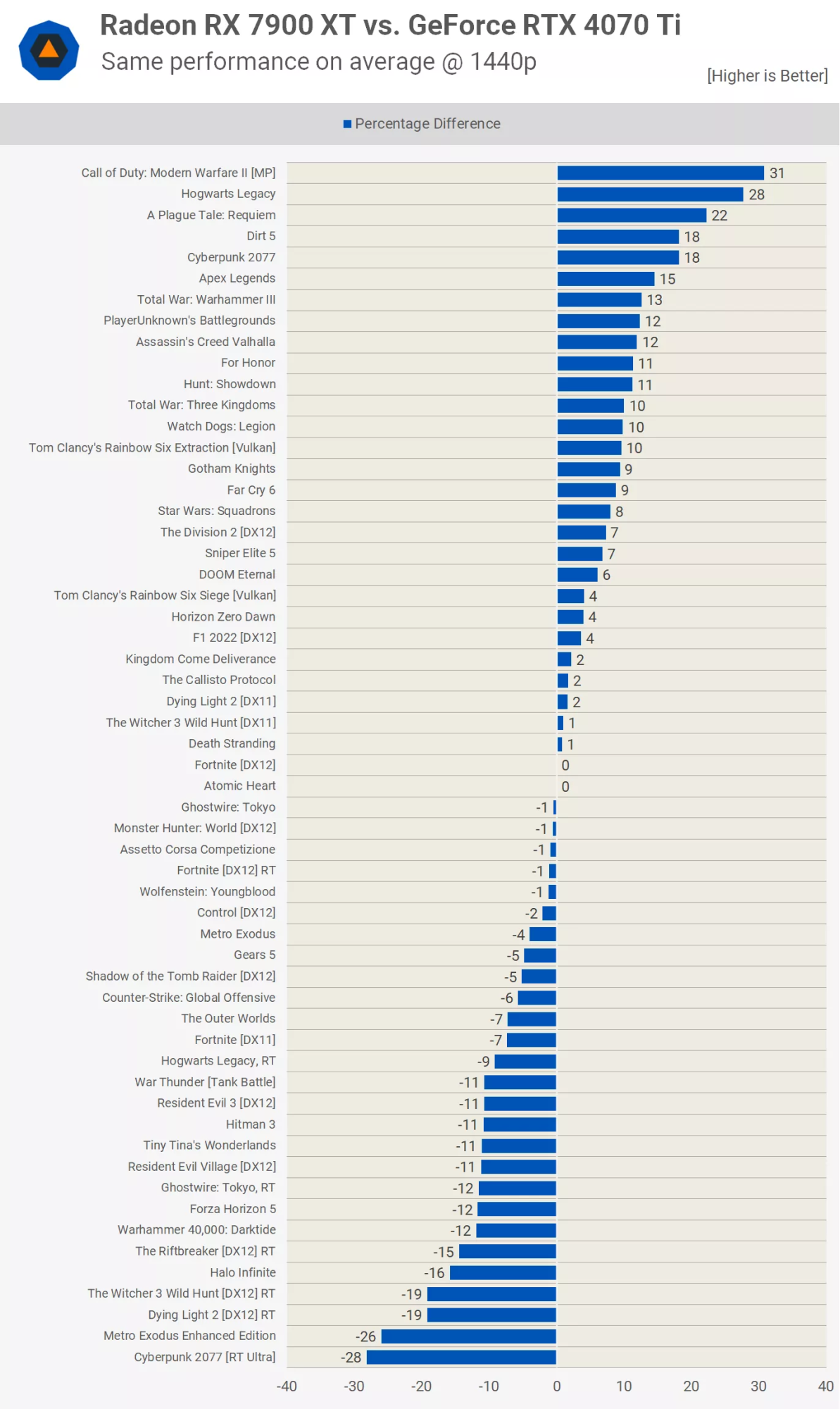

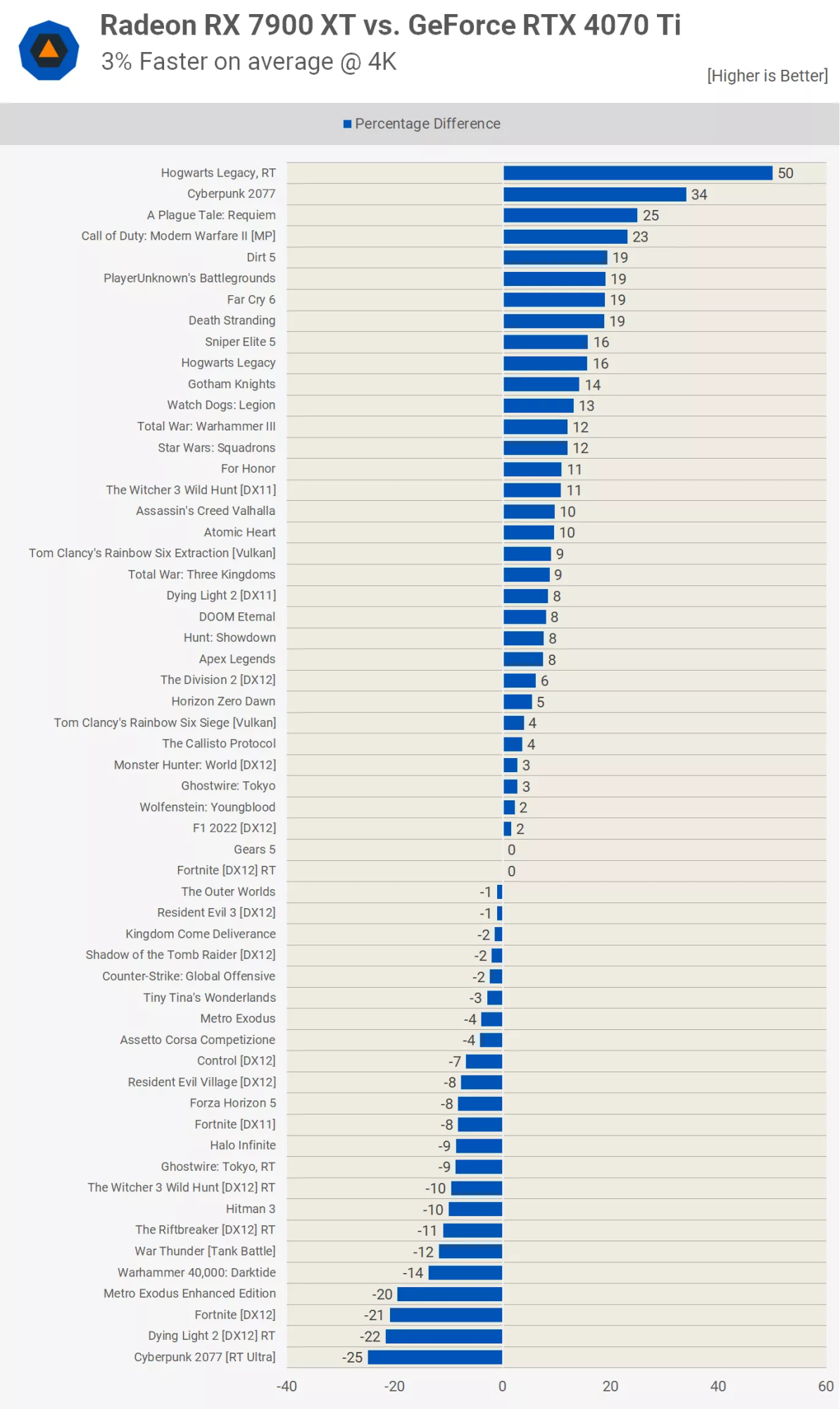

I was recently looking for a decent FHD card and, to my surprise, NVIDIA is nowhere to be found in the ~$300 segment. My choice was between Intel and AMD, because what NVIDIA sells at this price point offers laughable performance by comparison.
