applegrcoug

Gawd

- Joined: Aug 28, 2021
- Messages: 609

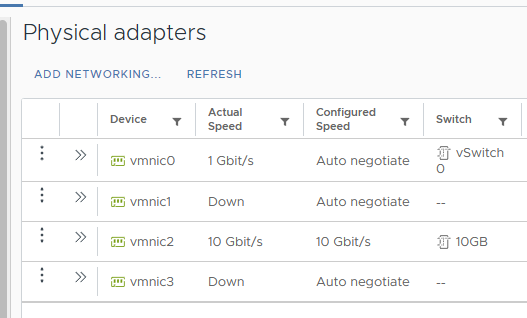

I'm trying to set up a 10 Gb/s network between my main NAS, my Windows Emby/Minecraft server, and a test NAS.

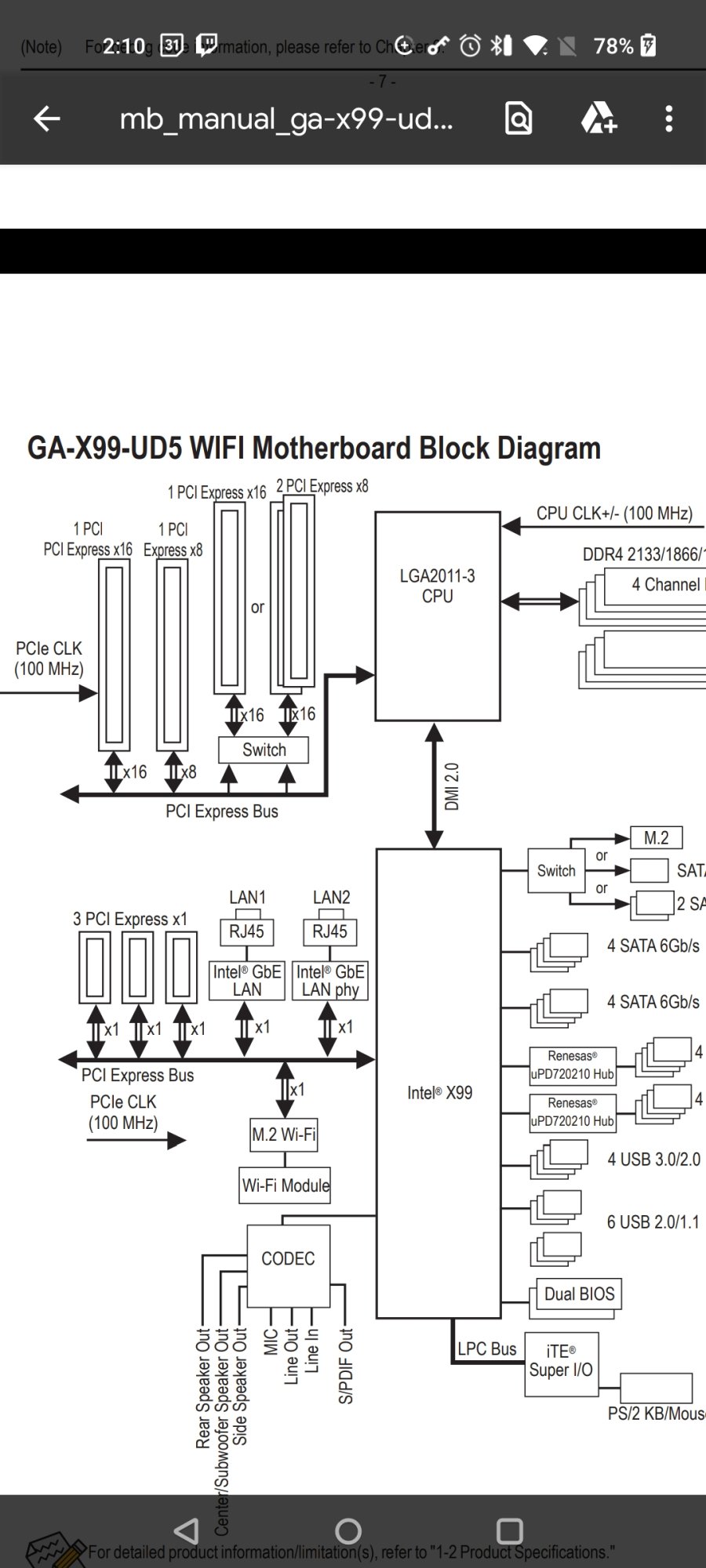

The main NAS is a 4790K with a 10GbE card, and the Windows box is an E5-2699 v3 on a Gigabyte X99-UD5 with a 10GbE card. The test NAS is an old FX-8320... nothing special. These boxes sit side by side and are connected directly to the switch. Most of my network cards are ASUS XG-C100Cs, which are PCIe Gen 3 x4 cards... I also have one PCIe Gen 2 x8 card for the older machines that only run at Gen 2.
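For context on the slot question, here is a back-of-the-envelope check of raw PCIe link bandwidth against the 10 Gb/s a 10GbE port needs. It is a sketch that counts only line encoding (8b/10b for Gen 1/2, 128b/130b for Gen 3) and ignores packet-level protocol overhead, so real-world numbers will be somewhat lower. The Gen 2 x4 row is a hypothetical case of an x4 card sitting in a Gen 2 slot, and the helper name `pcie_gbps` is illustrative.

```python
# Approximate one-direction PCIe bandwidth after line encoding only.
# Assumption: ignores TLP/DLLP protocol overhead, which lowers real throughput further.

# generation -> (transfer rate per lane in GT/s, encoding efficiency)
PCIE_GENS = {
    1: (2.5, 8 / 10),     # 8b/10b encoding
    2: (5.0, 8 / 10),     # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
}

NIC_LINE_RATE_GBPS = 10.0  # one 10GbE port


def pcie_gbps(gen: int, lanes: int) -> float:
    """Return approximate usable link bandwidth in Gb/s for one direction."""
    rate_gt, efficiency = PCIE_GENS[gen]
    return rate_gt * efficiency * lanes


# Gen 3 x4 (XG-C100C as stated), Gen 2 x8 (the older card),
# and Gen 2 x4 (hypothetical: an x4 card in a Gen 2 slot).
for gen, lanes in [(3, 4), (2, 8), (2, 4)]:
    bw = pcie_gbps(gen, lanes)
    print(f"PCIe Gen {gen} x{lanes}: ~{bw:.1f} Gb/s, "
          f"{bw / NIC_LINE_RATE_GBPS:.1f}x a 10GbE port")
```

Every combination listed clears 10 Gb/s, which is consistent with the slot swap making no difference: the PCIe link by itself is unlikely to explain a 3 Gb/s ceiling.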

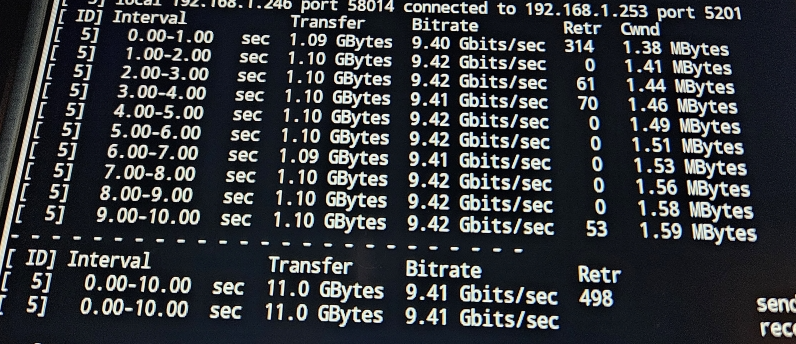

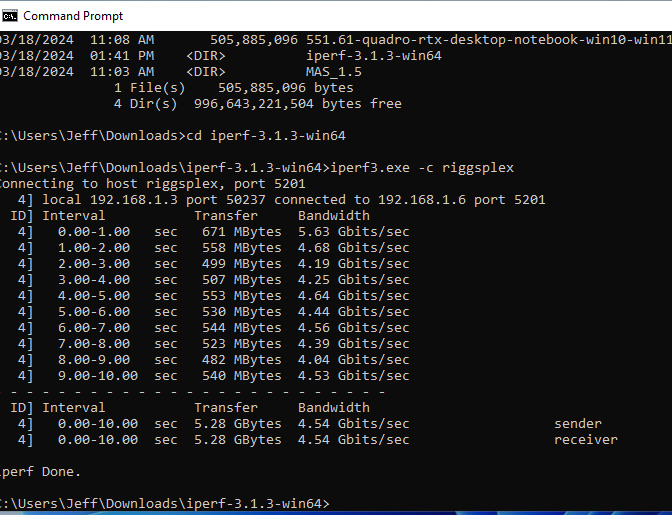

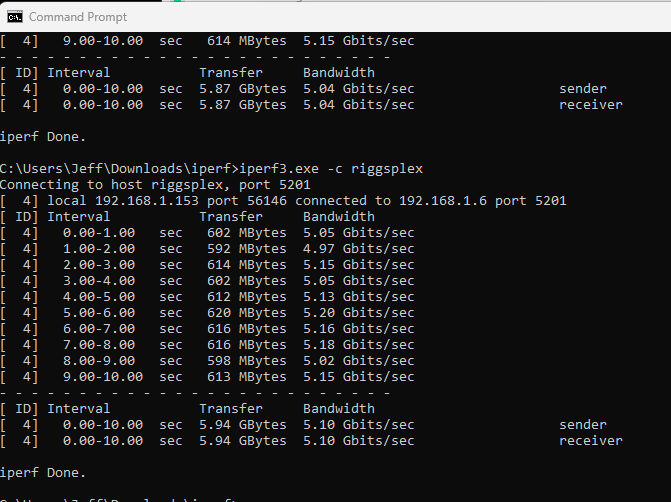

When I run iperf between the two NAS boxes, I get 9 Gb/s, which seems reasonable.
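Why roughly 9 Gb/s counts as reasonable: even an ideal 10GbE link cannot carry 10 Gb/s of TCP payload, because every frame also spends bytes on headers, preamble and the inter-frame gap. The sketch below estimates that ceiling under stated assumptions (IPv4 with no options, TCP timestamps enabled, no VLAN tag); the constants and the `tcp_goodput_gbps` helper are illustrative, not taken from iperf itself.

```python
# Rough ceiling for TCP goodput on a 10GbE link.
# Assumptions: IPv4 without options, TCP with the 12-byte timestamp option,
# no VLAN tag, full-size segments, no retransmissions.

LINE_RATE_GBPS = 10.0
MTU = 1500

# Bytes per frame on the wire outside the IP packet:
# preamble + SFD (8) + Ethernet header (14) + FCS (4) + inter-frame gap (12)
ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12

IP_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMPS = 12


def tcp_goodput_gbps(mtu: int = MTU, line_rate: float = LINE_RATE_GBPS,
                     timestamps: bool = True) -> float:
    """Fraction of the line rate left for TCP payload, in Gb/s."""
    payload = mtu - IP_HEADER - TCP_HEADER - (TCP_TIMESTAMPS if timestamps else 0)
    on_wire = mtu + ETH_WIRE_OVERHEAD
    return line_rate * payload / on_wire


print(f"1500 MTU: {tcp_goodput_gbps():.2f} Gb/s")          # 9.41
print(f"9000 MTU: {tcp_goodput_gbps(mtu=9000):.2f} Gb/s")  # 9.90
```

With a standard 1500-byte MTU that works out to roughly 9.4 Gb/s, so the NAS-to-NAS result sits near the ceiling while 3 Gb/s is roughly a third of it.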

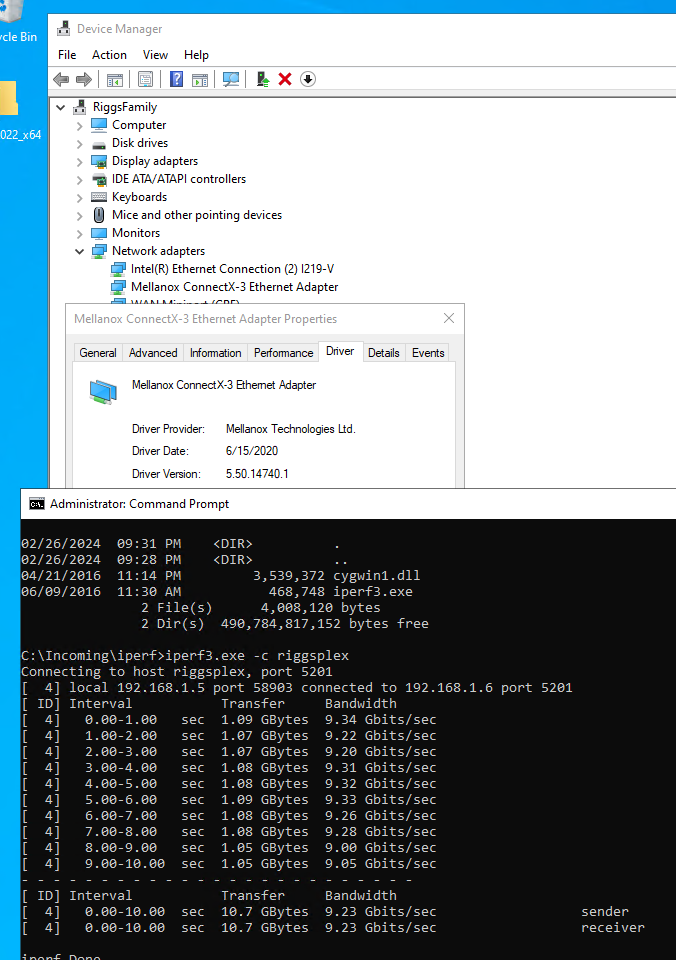

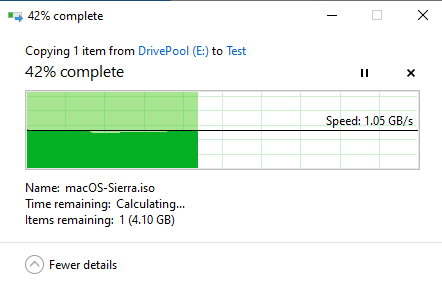

However, when I run iperf to the Windows box, it seems plagued by gremlins... I get more like 3 Gb/s.

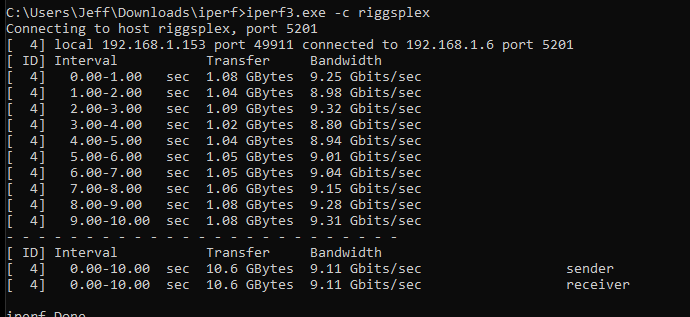

So then I swapped switch ports and cables... still 3 Gb/s. So how about a different PCIe slot, right? The X99 board has two x16 slots and one x8. Nope, 3 Gb/s. Same with a different card. Then I tried Safe Mode with Networking and got up to 5 Gb/s. Then I booted that machine from my test NAS's boot drive and got up to about 9 Gb/s.

So, what gives with Windows and TrueNAS not playing nice???


![[H]ard|Forum](/styles/hardforum/xenforo/logo_dark.png)