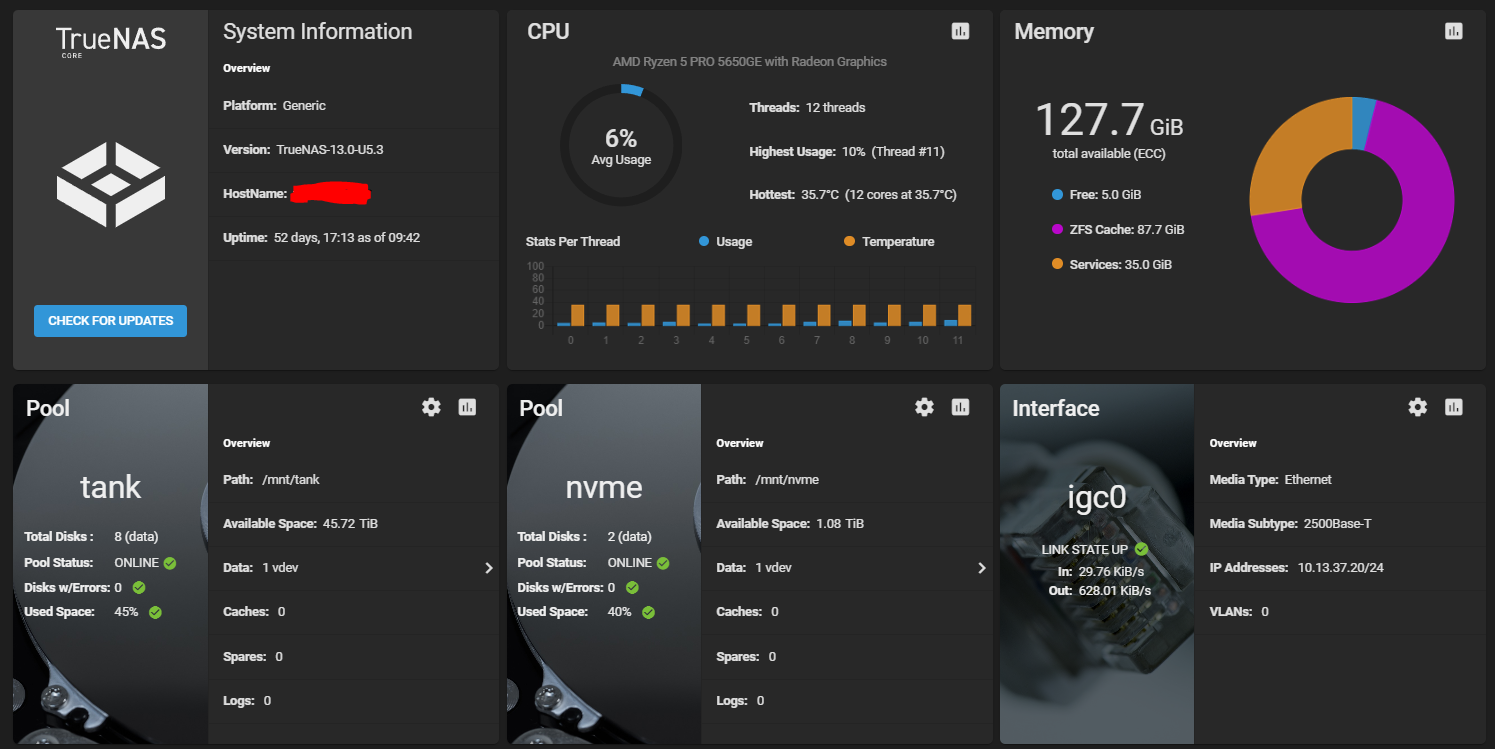

My current file server has been rock solid for the last 10 years, but it's really showing its age when trying to stream 4K rips. Plus, most of the drives are over 7 years old, so I'm feeling the pressing need to build a new one now and migrate while the old one is still functional.

The current server is six 6TB WD Blacks in RAID. I would like to at least double the capacity, and I'm looking for the best bang for my buck while still getting fairly fast storage.

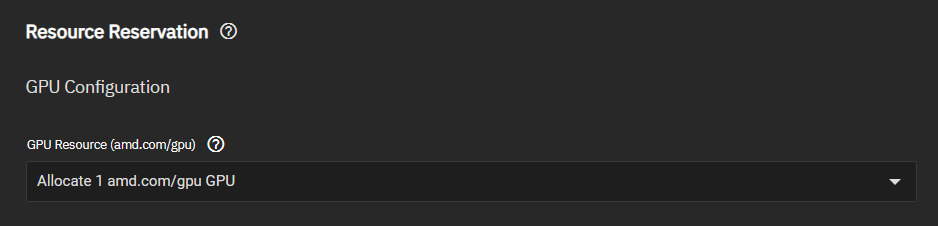

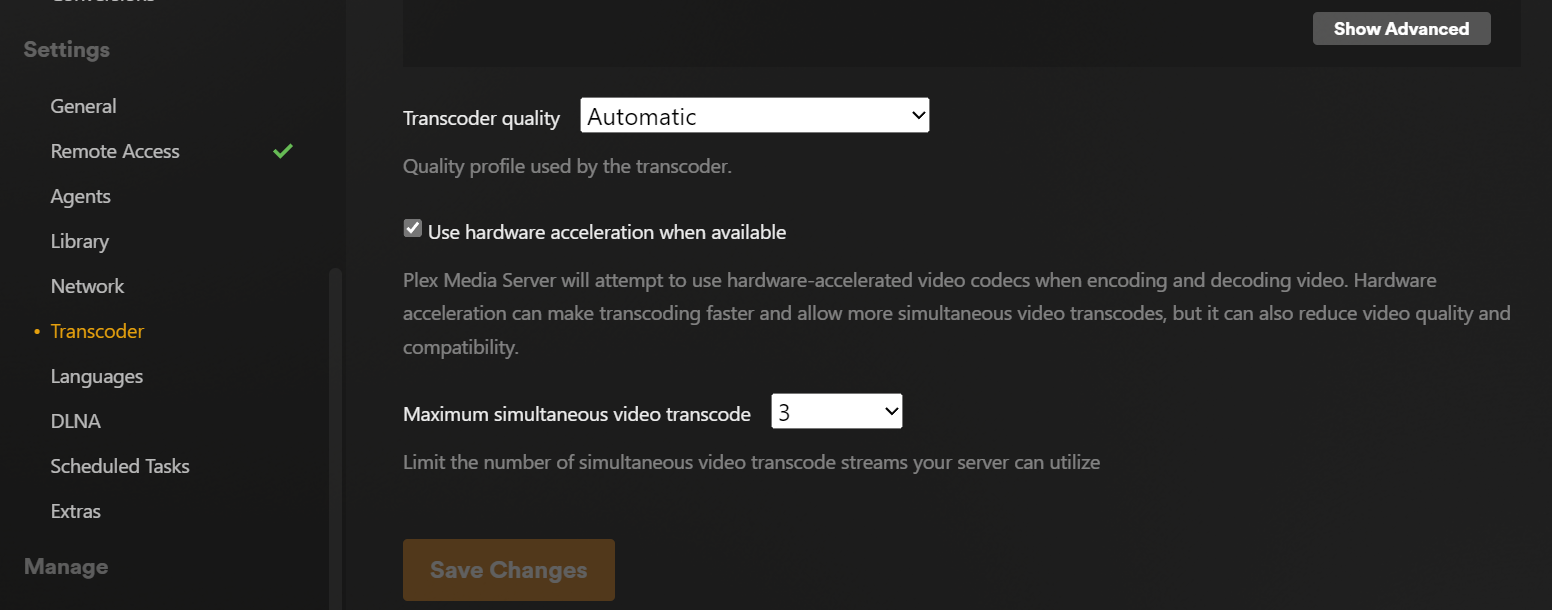

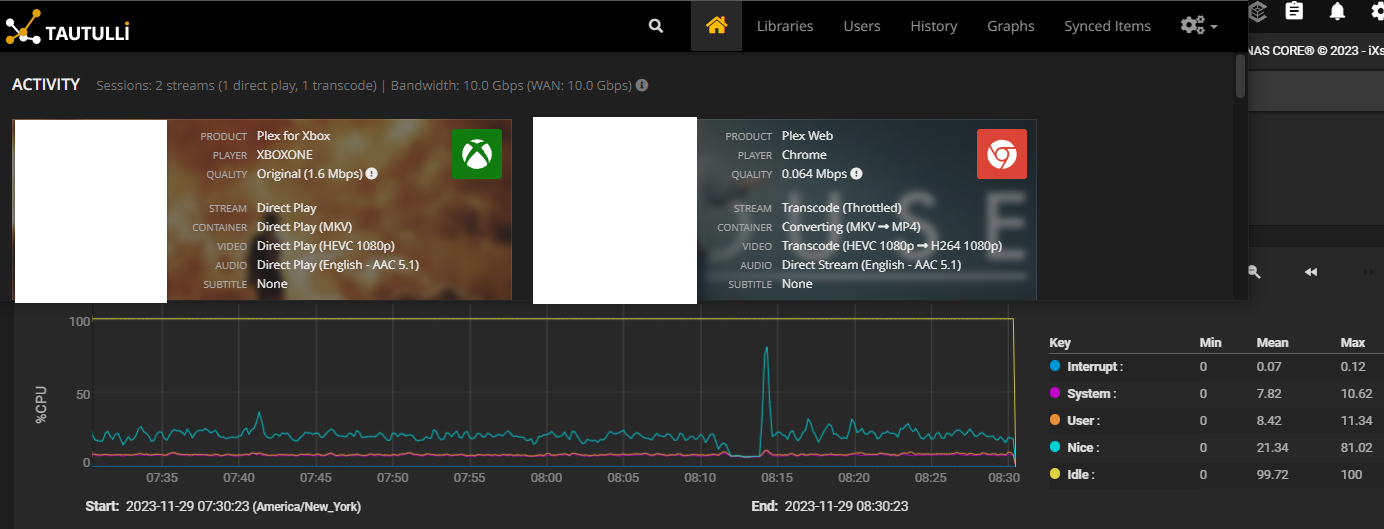

The main use is going to be a Plex server, but I would like to try new things, like maybe local cloud storage for the family. I will also be storing my Steam library for the first time. Next year we are going to be building rural with Starlink internet, so I won't be able to just download a game in 15 minutes like I do now.

On the wishlist: something that can saturate my 2.5GbE switch, with future plans for 10GbE once the new house is built and wired.

I'm thinking about repurposing my current CPU/motherboard, but I am open to all suggestions.
