Hi,

I am building a new 7800X3D-centered rig. This will be my first new computer since 2012, so I am out of the loop on things like ray tracing and whether I need it or not. I have been reading and watching videos, but there is so much contradictory info.

I will be looking to play BG3 and other RPGs (Elden Ring, the upcoming Elder Scrolls VI) as my primary games. Other games will be sims like MechWarrior 5 (and MW5 Clans when it comes out), sports games like Madden, and first-person games (Star Wars titles like Jedi Survivor and the upcoming Outlaws, plus some shooters). My other uses will include 3D printer slicing software (Cura, Lychee), some infrequent light CAD for when I need to create STLs (if they are not available for 3D printing), surfing, productivity work (Office, Photoshop, Acrobat), streaming, etc. I am currently using two 24" 1080p monitors, but will likely upgrade within the year to bigger screens at 1440p.

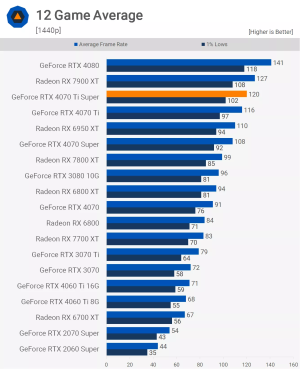

I am stuck between a 7900 XT at $699.99 or a 4070 Ti Super at $799.99-$840.99. With the info above, do I even care about ray tracing? And what recommendation do you have and why?

Thanks!

-Matt

