New multi-threading technique promises to double processing speeds

'SHMT' also sliced power usage by 51% compared to existing techniques

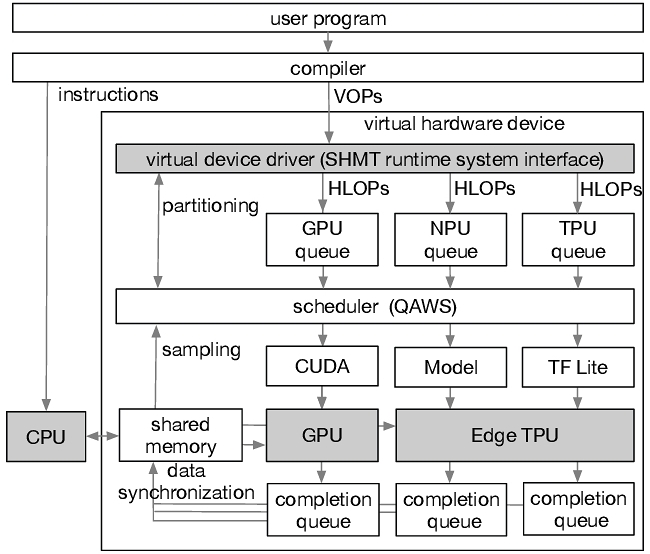

By Zane Khan Today 11:12 AM

Researchers at the University of California, Riverside have developed a technique called Simultaneous and Heterogeneous Multithreading (SHMT), which builds on conventional simultaneous multithreading. Simultaneous multithreading lets a single CPU core run multiple threads at once, but SHMT goes further by also incorporating the graphics and AI processors.
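
To give a rough feel for the idea, here is a minimal sketch in Python of one job being split across three kinds of processor at once. It is only an illustration, not the researchers' implementation: the `run_on_cpu`, `run_on_gpu`, and `run_on_ai_accelerator` functions are hypothetical stand-ins that all run as ordinary Python, and the even three-way split is an assumption made for simplicity.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for the three processor types. Real hardware
# dispatch is not modeled here; each one is a plain Python function.
def run_on_cpu(chunk):
    return [x * x for x in chunk]

def run_on_gpu(chunk):
    return [x * x for x in chunk]

def run_on_ai_accelerator(chunk):
    return [x * x for x in chunk]

def split_evenly(data, parts):
    """Cut data into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks each take one leftover element.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks

def heterogeneous_square(data):
    """Square every element, spreading the work over all three 'devices'."""
    devices = [run_on_cpu, run_on_gpu, run_on_ai_accelerator]
    chunks = split_evenly(data, len(devices))
    with ThreadPoolExecutor(max_workers=len(devices)) as pool:
        # Submit one chunk per device so all three run concurrently.
        futures = [pool.submit(dev, chunk) for dev, chunk in zip(devices, chunks)]
        results = [f.result() for f in futures]
    # Stitch the partial results back together in their original order.
    return [y for part in results for y in part]
```

Calling `heterogeneous_square(list(range(10)))` hands each stand-in roughly a third of the list and merges the partial results in order. A real system would also have to decide how much work each processor gets, which this sketch sidesteps by splitting evenly.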
