Scroll down to "Expansion Slots" and you will find:

You can have one x16 card in slot 1, OR one x8 in slot 1 and one x8 in slot 2.

Yes, I saw those, and? I am not sure I understand them.

Isn't this when you use the M2_1 slot?

Yeah, they really don't make interpreting PCIe lane configurations easy.

Which is why manufacturers now including block diagrams is so incredible. At least with the release of AM5, ASRock and Gigabyte have been great about it. MSI and ASUS, not so much.

criccio Sorry, I have no idea how to make this not embed.

DMed. (or.. "started a conversation", I couldn't find a normal direct message option) criccio

I would like to access that spreadsheet directly from Google. I will probably upgrade to AMD Zen5 maybe 6 months after announcements, when there are enough motherboard choices. In the past I reflexively used ASUS because I have had good results with ASUS boards. But I know market conditions change and I should be open to non-ASUS alternatives. That said, doing all the research myself would drive me batshit. The author of this spreadsheet seems to have done all that research.

One point. Buying Intel is like going over to the dark side and signing a long-term lease for an apartment there.

https://docs.google.com/spreadsheets/d/1NQHkDEcgDPm34Mns3C93K6SJoBnua-x9O-y_6hv8sPs/edit#gid=0

Hopefully soon we'll see PCIe 4.0 x4 NICs come out, since this is the same bandwidth as PCIe 3.0 x8 and that will invalidate your need for the mythical x16/x8. Or... simply eat a 2% - 3% performance loss on your GPU to run x8/x8, which is a perfectly acceptable trade-off to get very high speed networking on a consumer platform.

PCIe 4.0 NICs have been out since at least 2020.
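To put rough numbers on the "PCIe 4.0 x4 is the same bandwidth as PCIe 3.0 x8" point, here is a small Python sketch. It assumes the published per-lane rates (8, 16 and 32 GT/s for Gen3, Gen4 and Gen5) and 128b/130b encoding, and it ignores packet and protocol overhead, so the figures are upper bounds rather than real-world throughput. The name `link_bandwidth_gbps` is made up for this example.

```python
# Per-lane signalling rate in GT/s for each PCIe generation.
GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}

# Gen3 and later use 128b/130b encoding: 128 payload bits per 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gbps(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in Gbit/s, before protocol overhead."""
    return GT_PER_LANE[gen] * ENCODING_EFFICIENCY * lanes


gen3_x8 = link_bandwidth_gbps(3, 8)
gen4_x4 = link_bandwidth_gbps(4, 4)
gen3_x4 = link_bandwidth_gbps(3, 4)

print(f"Gen3 x8: {gen3_x8:.1f} Gbit/s")
print(f"Gen4 x4: {gen4_x4:.1f} Gbit/s")
print(f"Gen3 x4: {gen3_x4:.1f} Gbit/s")
```

Under these assumptions Gen3 x8 and Gen4 x4 come out identical, while Gen3 x4 falls short of a 40 Gbit/s NIC's line rate. The equivalence only helps if the NIC itself is a Gen4 device; a Gen3 x8 card in a Gen4 x4 slot would still link at Gen3 x4.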

My MSI X470 Gaming Plus supports CrossFire and will support the 5800X3D

- 2 x PCIe 3.0 x16 slots (PCIE_1, PCIE_4)

- 1st, 2nd and 3rd Gen AMD® Ryzen™ processors support x16/x0, x8/x8 mode

- Ryzen™ with Radeon™ Vega Graphics and 2nd Gen AMD® Ryzen™ with Radeon™ Graphics processors support x8/x0 mode

- Athlon™ with Radeon™ Vega Graphics processor supports x4/x0 mode

- 1 x PCIe 2.0 x16 slot (PCIE_6, supports x4 mode) [1]

- 3 x PCIe 2.0 x1 slots

[1] PCI_E6 slot will be unavailable when installing M.2 PCIe SSD in M2_2 slot.

That is the same problem as every other board.

You put the GPU in slot #1 and it runs at x16. Then, you skip slot #2 and put the card in slot #3, which is x16 long, so the card will fit, but it only has the pins for x4, so it runs at x4... not the x8 that is being requested.

This dumb PCIe problem has really been vexing me too. I have a similar issue, but at least mine is manageable as I am only dealing with 10GbE, not 40.

Maybe the guys who designed the X670E chipset didn't fully understand use cases.
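The "x16 long but only wired for x4" behaviour described above can be sketched in a few lines of Python. This is a simplified model, not a description of any specific board: the slot names and lane counts in `BOARD` are hypothetical, and the only rule it relies on is that a PCIe link trains to the widest width both ends support.

```python
# Hypothetical slot layout: physical connector length vs. lanes actually wired.
BOARD = {
    "slot1": {"physical": 16, "electrical": 16},
    "slot3": {"physical": 16, "electrical": 4},
}


def fits_physically(slot: dict, card_lanes: int) -> bool:
    """A card fits if its edge connector is no longer than the slot."""
    return card_lanes <= slot["physical"]


def negotiated_width(slot: dict, card_lanes: int) -> int:
    """The link runs at the narrower of the slot wiring and the card."""
    return min(slot["electrical"], card_lanes)


gpu_width = negotiated_width(BOARD["slot1"], 16)   # x16 GPU in slot 1
nic_fits = fits_physically(BOARD["slot3"], 8)      # x8 NIC in slot 3
nic_width = negotiated_width(BOARD["slot3"], 8)

print(f"GPU runs at x{gpu_width}")
print(f"NIC fits: {nic_fits}, runs at x{nic_width}")
```

With this layout the x8 NIC seats fine in slot 3 but links at x4, which is exactly the complaint.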

The only true board I ever owned that would do what you're asking is the X58 EVGA 3-way SLI 758e. I lucked out buying late and got the board that made the Xeon X5660 a drop-in without the mod (the 1.2v board).

Slots 1 and 2 were full x16 and slot 3 was x8. I could CX with 1 and 3 for better airflow, and this is what that looked like: http://www.3dmark.com/fs/19756996

Mystery Machine award; they had never seen that setup before.

Yeah, X58 was technically HEDT though, so more PCIe lanes than regular consumer.

I did something similar with X79 and later TRX40, both of which were HEDT.

Unfortunately HEDT seems to be dead. Current Threadrippers and Xeons are Workstation platforms. HEDT no longer seems to exist.

In lieu of HEDT I'm trying to figure out what I can do with consumer parts, because workstation parts are not going to work for me.

It should be possible, judging by how many higher-end boards have dual dedicated electrical x4 ports (in physical x16 slots), but at least thus far, no board I have found offers that configuration. Maybe this is a limitation of the chipset: offering two groups of x4, but not the ability to combine them into a single x8?

The best of both worlds would be if these 8 lanes were offered across two slots in an 8x/0x or 4x/4x configuration, like they do with the primary x16 slot and secondary slot, but maybe that is asking too much.

It would add a little cost to design it that way, but once you do that, one board would work for everyone, rather than needing multiple versions. The question is which choice would have a lower overall cost.

Just need to find a wizbang on AliExpress that takes two M.2 slots and then aggregates them into a single x8.

Reading this thread, I realized that I should be running an HEDT, even if I wasn't aware of that until now. Just for grins I searched on "ASUS HEDT". Nothing except ROG and gaming machines.

I guess I'm just going to have to wait then.

Until I can find a motherboard that both boasts top-tier consumer CPU performance in low-threaded workloads AND allows me one full 16x (Gen4+) and one full 8x (Gen3+) electrically, at the same time, I'm just not going to upgrade.

I was ready to jump on the new Threadrippers, but unlike the 3xxx series, the performance is just terrible in non-workstation loads when compared to their consumer counterparts.

Fingers crossed Zen5 and its associated new chipset brings more options along this line. They could start by bumping up the chipset links to actually support Gen5 instead of Gen4, which should provide enough bandwidth to offer some more flexibility to motherboard designers.

Same. Driving me nuts. The workstation performance is great, but lord is the non-workstation... weak. Not terrible, mind you, but weak weak weak.

Same struggle I'm having. I'm getting close to "gonna have to live with x4s on the third and fourth slot."

Let me know if you find one.

Zenith II Extreme Alpha was the last real one. The others are all WS boards now (Sage line). They're damned fine boards, but VERY workstation focused.

PCIe switches got real expensive.

They also don't support bifurcation, which defeats the purpose in a lot of cases. The last "consumer" board with one was an X299 board I had, which was neat, but not being able to use the 4x M.2 cards was kind of a bummer. (Supermicro also had a Z490 board with 8888 capability but I didn't own one.)

They knew exactly what they were doing: separating the market between consumer and workstation. This started with LGA2011 when dual-CPU models couldn't be overclocked, unlike their LGA1366 dual-CPU brethren.

X79 could do it. 990FX could do it. When multi-GPU setups fell out of favor and PCI-E controllers moved onto the CPU, Intel and AMD saw no reason to give consumers x16 + x8 configurations. It became a way to differentiate the consumer and workstation markets.

I would be OK with buying a workstation board if there were entry-level models priced like medium-priced consumer boards. Or maybe there needs to be a new category of boards, between today's consumer and workstation markets. I for one don't need a dual CPU setup. (Back in the day I had an AMD A7M-266D with dual CPUs. I used the "pencil trick" to get the CPUs to run in MP mode.)

Eh, that's what X58 and X79 were (AMD didn't really compete back then). Intel and AMD either decided that the market was too small or that they could make more money forcing enthusiasts into true workstation platforms if they wanted the additional capability. The prosumer motherboards that existed with X58 and X79 are gone now and they don't show any sign of coming back soon, which is a shame. Maybe if multi-GPU gaming were able to make a comeback, but we pretty much know that's not going to happen. The only niche scenario I could see for multi-GPU gaming is with 3D where a GPU is tasked to each eye.

My only concern is that current workstation products, while great at workstation stuff, underperform in most typical client workloads and games, probably due to no longer being able to offer both unregistered and registered RAM on the same motherboard with DDR5, like they could with DDR4 and earlier.

The side effect of this has been to kill HEDT once and for all. In the past they could make a high-end CPU product, and since registered and unregistered RAM before DDR5 was pin compatible, the end user could decide if they wanted it to be more of a workstation (go with registered ECC) or more of a HEDT system (go with overclocked, screaming fast unregistered, non-ECC).

Now today, if you are an AMD or Intel designing a workstation-like product and have to choose to make it compatible with either registered or unregistered RAM, you are probably going to choose registered every time, and when you do the higher RAM latency resultant from both the registered buffer and the ECC is going to absolutely kill performance for client/gaming stuff.

It's a real shame.

Anyway, I still hope I am wrong about the forced segmentation and that AMD with the new chipsets for Zen5 finally move to Gen5 PCIe lanes for the four lanes that go to the chipset, which gives motherboard makers more flexibility for secondary PCIe slots off of that chipset.

I hope that at least one of them will offer a single 8x slot (Gen3 or higher) instead of more plentiful 4x slots.

Agreed. How about two 8X slots and two 16x slots.

Off the top of my head, the way AM5 currently works is that you have a grand total of 24 lanes.

It used to be that you put one fancy expensive NVMe drive in your system, and if you needed more storage, you used a couple of cheaper SATA drives. Now NVMe drives (especially Gen3 drives) are just as cheap as, and sometimes cheaper than, SATA drives, meaning lots of people would love more M.2 slots, and the powers that be insist on giving us only 24 lanes in total.

AM4 was 24, AM5 is 28. There's an extra x4 for a second M.2 or a CPU-direct slot. I don't think you can split it out to, say, four x1 slots though.
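A quick tally may help reconcile the 24 and 28 figures in this exchange. This is only a sketch: the split below (16 lanes for graphics, two general-purpose x4 groups, 4 lanes reserved for the chipset link) is an assumption about how AM5 CPU lanes are commonly described, and the dictionary keys are labels invented for this example. Check your board's block diagram for the real routing.

```python
# Assumed AM5 CPU lane groups (illustrative labels, not official names).
AM5_CPU_LANES = {
    "graphics_x16": 16,
    "general_purpose_a": 4,   # typically the first M.2 slot
    "general_purpose_b": 4,   # second M.2 or a CPU-direct slot
    "chipset_link": 4,        # reserved for the link to the chipset
}


def total_lanes(budget: dict) -> int:
    """All lanes the CPU exposes."""
    return sum(budget.values())


def usable_lanes(budget: dict) -> int:
    """Lanes left for slots and M.2 once the chipset link is set aside."""
    return total_lanes(budget) - budget["chipset_link"]


def largest_spare_group(budget: dict) -> int:
    """Widest single group left over when the GPU keeps its full x16."""
    spare = [n for name, n in budget.items()
             if name not in ("graphics_x16", "chipset_link")]
    return max(spare)


print(total_lanes(AM5_CPU_LANES), "lanes total")
print(usable_lanes(AM5_CPU_LANES), "lanes usable for slots and M.2")
print(f"widest spare group with a x16 GPU: x{largest_spare_group(AM5_CPU_LANES)}")
```

Under that assumed split, both numbers are right depending on whether the chipset link is counted, and the widest group left next to a full x16 GPU is x4, which is why an electrical x8 second slot is so hard to find.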

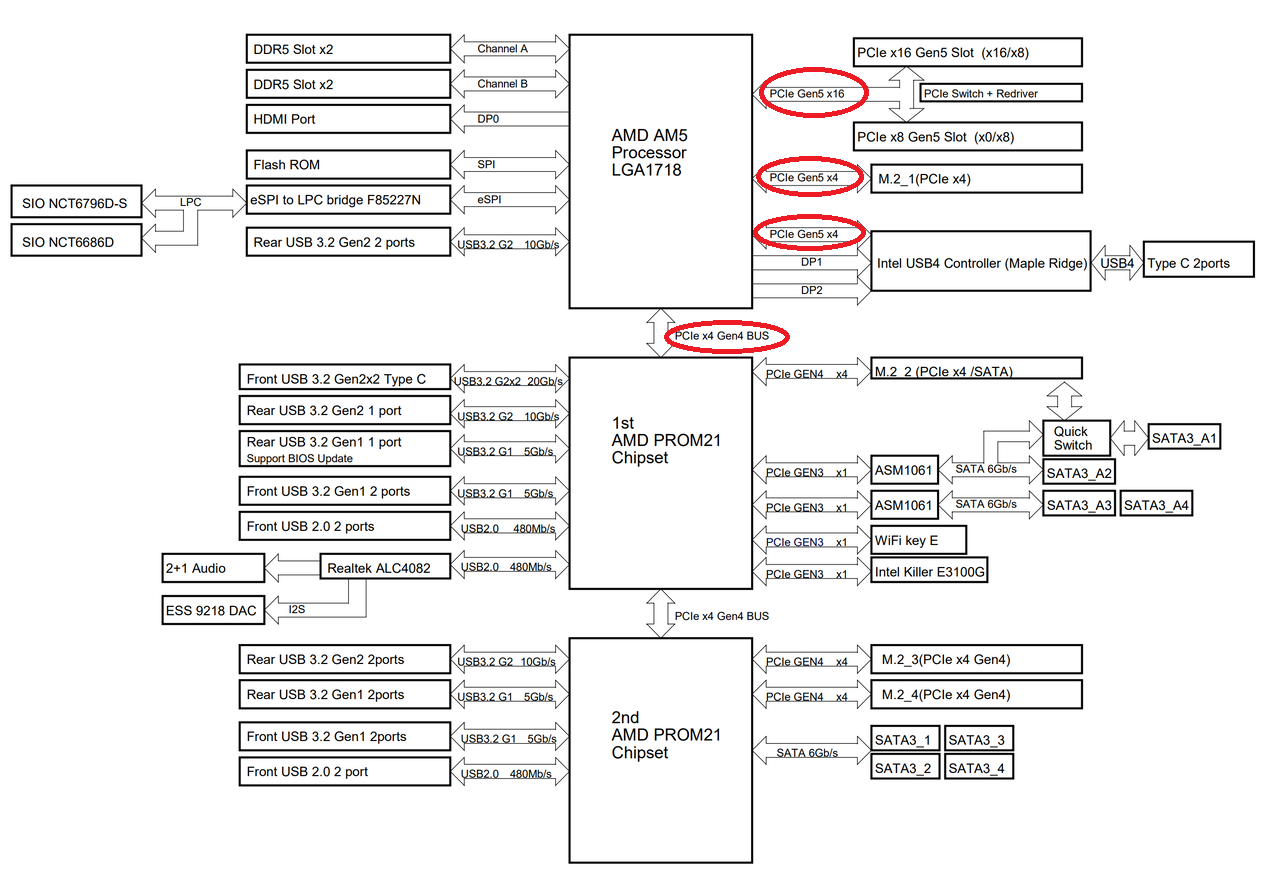

Correct. My board, for example. I find block diagrams the best way to visualize this kind of thing.

A lot of boards just come down to how certain I/O decisions are made. Do I think I'm really going to make use of USB4? So far, I haven't. Though being able to run a display off the iGPU directly from one of the Type-C ports is pretty nice.

View attachment 647094

I wonder if the chipset even allows for combining the likes of M.2_3 and M.2_4 into one 8x slot (without the motherboard vendor adding an additional expensive PCIe switch), or if their 4x nature is hard coded.

I highly doubt it, you'd likely need switches and/or re-drivers to do something like that.

Frankly, I've wanted the ability to, for example, instead of offering x8 lanes of Gen5 on a full-size x16 slot, have it still wired for x16 lanes so that if you simply run it in Gen4 mode, you get all x16 lanes accessible. I just think the hardware to do something like this would price these boards out of reach and into true workstation-class territory. Some of them already are, TBH.

- Ryzen™ 9 7900: 12 cores, 24 threads, boost clock up to 5.4 GHz, base clock 3.7 GHz, AMD Radeon™ Graphics

- AMD Ryzen™ Threadripper™ PRO 7945WX: 12 cores, 24 threads, boost clock up to 5.3 GHz, base clock 4.7 GHz, default TDP 350W, thermal solution (PIB) not included, discrete graphics card required

Threadripper 7945WX nearly matches a Ryzen 7900:

https://www.amd.com/en/products/processors/desktops/ryzen.html#tabs-0eb49394b2-item-446166865a-tab

https://www.amd.com/en/products/processors/workstations/ryzen-threadripper.html#shop

The boost clock is only 100MHz lower, base is actually 1GHz higher. With a good cooler, you could probably make up the 100MHz boost difference.

Edit: Kinda sucks they don't seem to sell'm retail.
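For anyone who wants to check the clock comparison, here is the arithmetic as a tiny Python sketch. The clock values are the ones quoted from AMD's spec listings in this post; the dictionary layout and the function name `clock_delta_mhz` are just for illustration.

```python
# Clock speeds in MHz, as quoted in the post above.
SPECS_MHZ = {
    "Ryzen 9 7900": {"boost": 5400, "base": 3700},
    "Threadripper PRO 7945WX": {"boost": 5300, "base": 4700},
}


def clock_delta_mhz(cpu_a: str, cpu_b: str, key: str) -> int:
    """Return cpu_a's clock minus cpu_b's clock for 'boost' or 'base'."""
    return SPECS_MHZ[cpu_a][key] - SPECS_MHZ[cpu_b][key]


boost_gap = clock_delta_mhz("Threadripper PRO 7945WX", "Ryzen 9 7900", "boost")
base_gap = clock_delta_mhz("Threadripper PRO 7945WX", "Ryzen 9 7900", "base")

print(f"Boost difference: {boost_gap} MHz")
print(f"Base difference: {base_gap} MHz")
```

Clock speed alone says nothing about memory latency or CCD layout, so this only confirms the spec-sheet gap, not real-world gaming performance.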

The Z790 chipset has 28 PCIe lanes (20 lanes PCIe 4.0, 8 lanes PCIe 3.0); isn't that enough?

No. Well, if they were utilized differently, yes. But not the way they are, not for what OP wants to do.

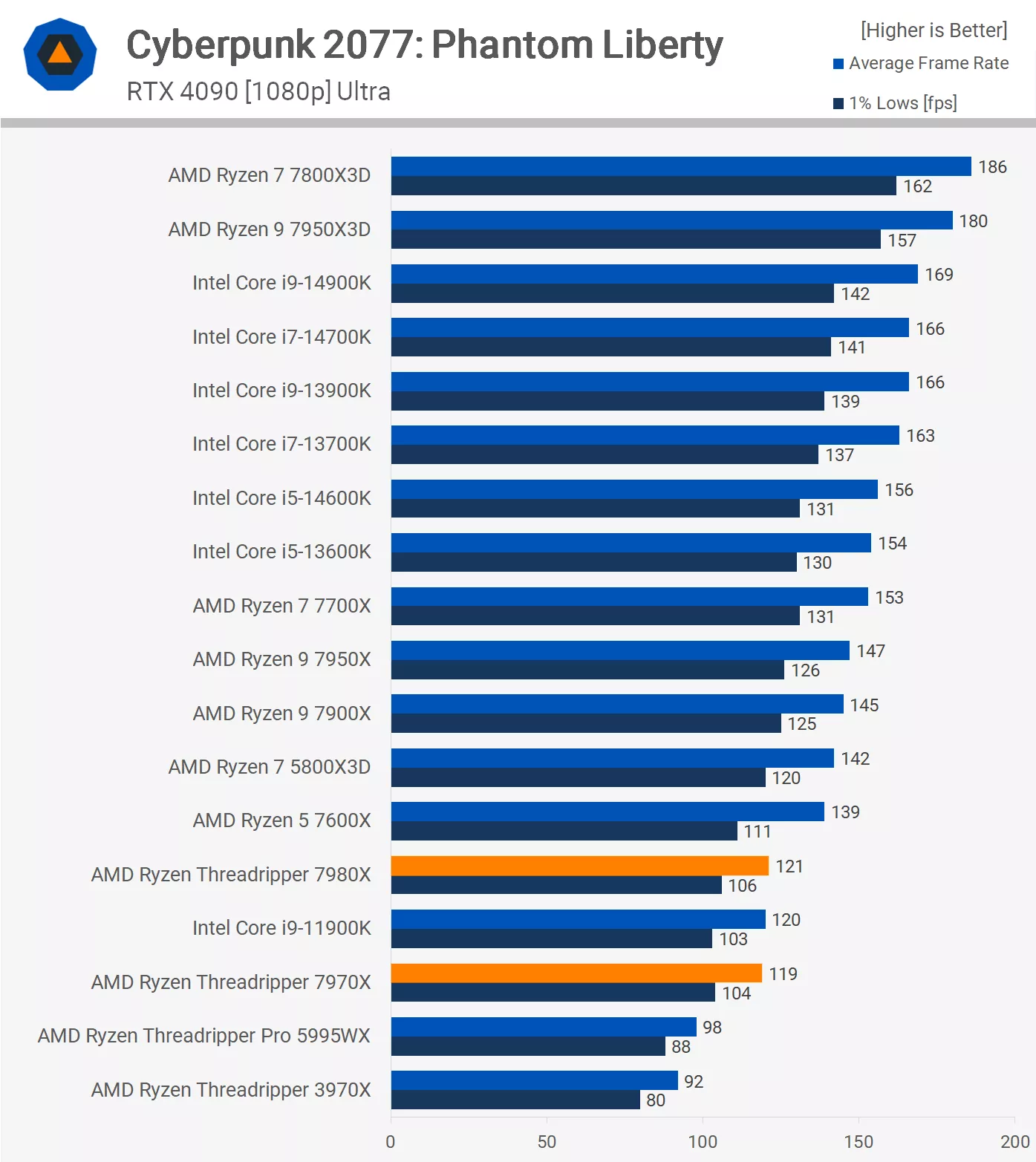

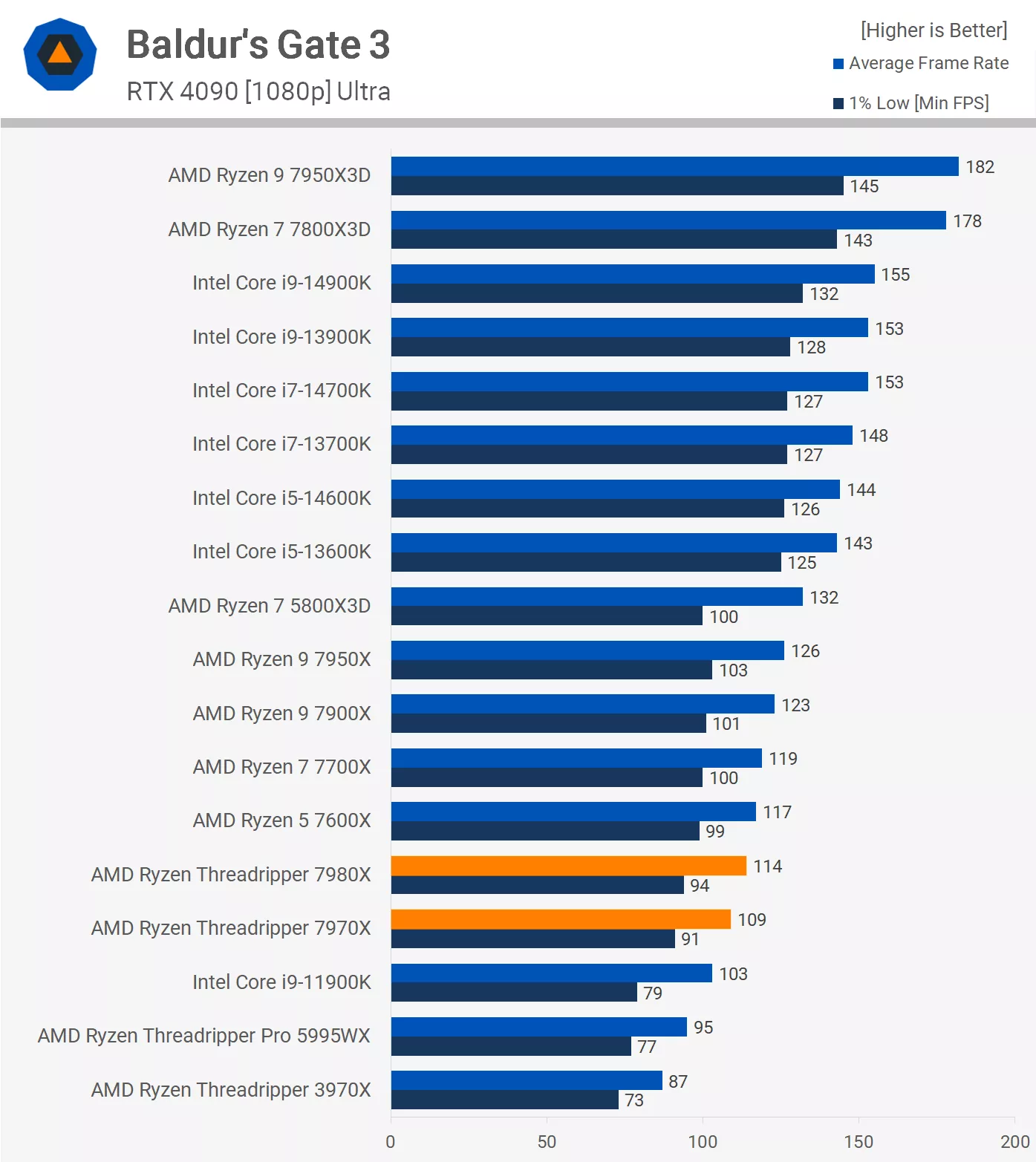

90+ 1% lows seems more than fine to me, but I'm used to <60fps on med/high settings. lol

Yeah, but that's at the time of purchase.

When you buy something like this you are probably going to have it for 5 years. A motherboard and CPU will last through 2-3 GPU upgrades, so you need a little bit of headroom.

I don't want to invest thousands in something that is "enough" now, especially when consumer parts are so much faster. As always happens, when performance is available, next gen titles start taking advantage of that performance, making something that is adequate right now, more quickly inadequate in a few years.

Neither Baldur's Gate nor Cyberpunk is the harshest title on CPUs right now though. (GPU load possibly, but not CPU.) They just happened to be the benchmarks I could find.

It would be interesting to see how these Threadrippers perform in Starfield wandering through New Atlantis or Akila City, or in that upcoming title Dragon's Dogma 2, which reportedly has some insane CPU load due to a novel approach to NPC AI. I bet we aren't talking 90+ 1% minimums.

True. But to be fair, they didn't test the 7945wx. If it's a single CCD processor, or even if it uses two, it would likely be significantly faster than a 4 or 8 CCD threadripper in gaming workloads.

I can't help but think that there are others like me out there as well. The traditional [H] types who used to buy Extreme Edition Intel CPUs and X58, X79, X99 and X299 platforms to have the true HEDT "best of both worlds, just without ECC" experience.

Are we the majority of the market? Hell no.

But in a crowded field of a hundred different motherboards for each generation of CPU from AMD and Intel, there ought to be room for at least one such niche product.

And yes, it would come at a premium, but I'm OK with that. Take a current prosumer-level board (but not a ROG Christmas/disco-light ultra gamerlicious monstrosity) and add this feature, and that is easily worth a $500 premium.

^^Wouldn't even think twice, provided there aren't any other serious drawbacks to the board.

The problem seems to be that ever since Broadcom bought PLX Technology in an attempt to corner the PCIe switching market, the relative lack of competition has driven the pricing of modern PCIe switches (and you'd need the modern ones or they wouldn't support Gen4 or Gen5) through the absolute roof.

These days even the lowest-end PCIe switches with the fewest number of lanes sell for $400-$700 for just the chip, before it winds up on a board. At least to buyers who can't enter into volume purchasing agreements.

I'm with Zarathustra[H] on this one. He articulates the issues better than I can.