jbltecnicspro

[H]F Junkie

- Joined

- Aug 18, 2006

- Messages

- 9,548

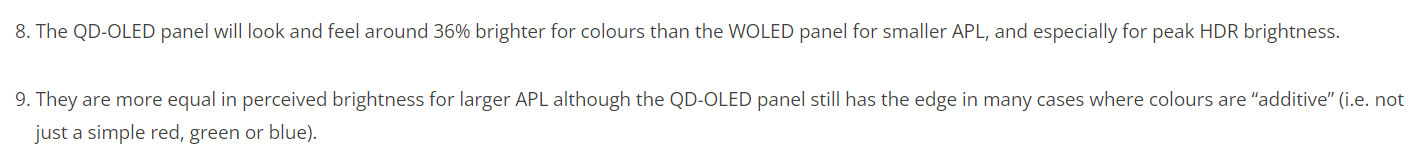

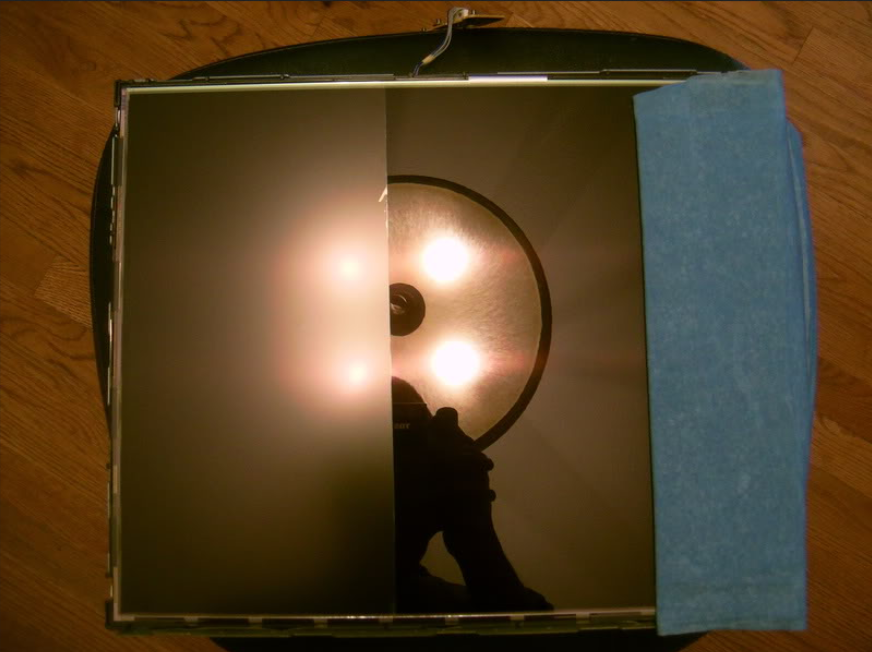

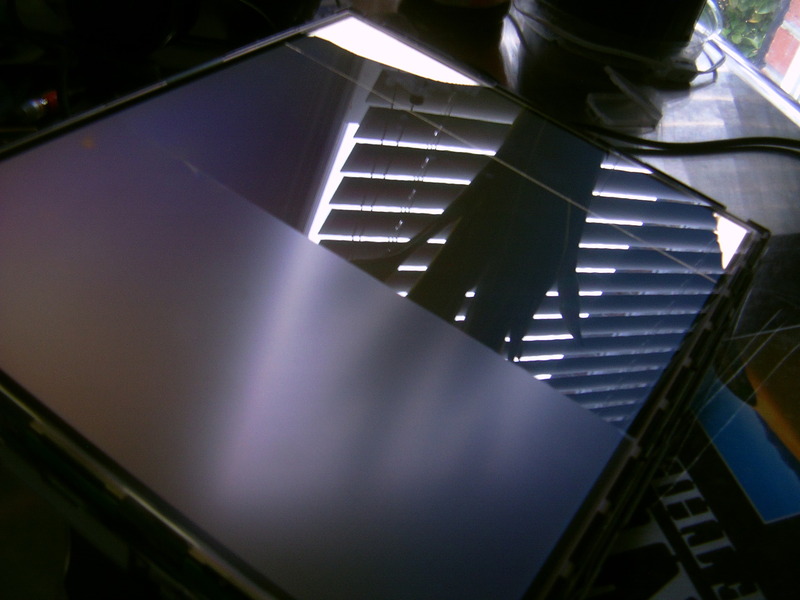

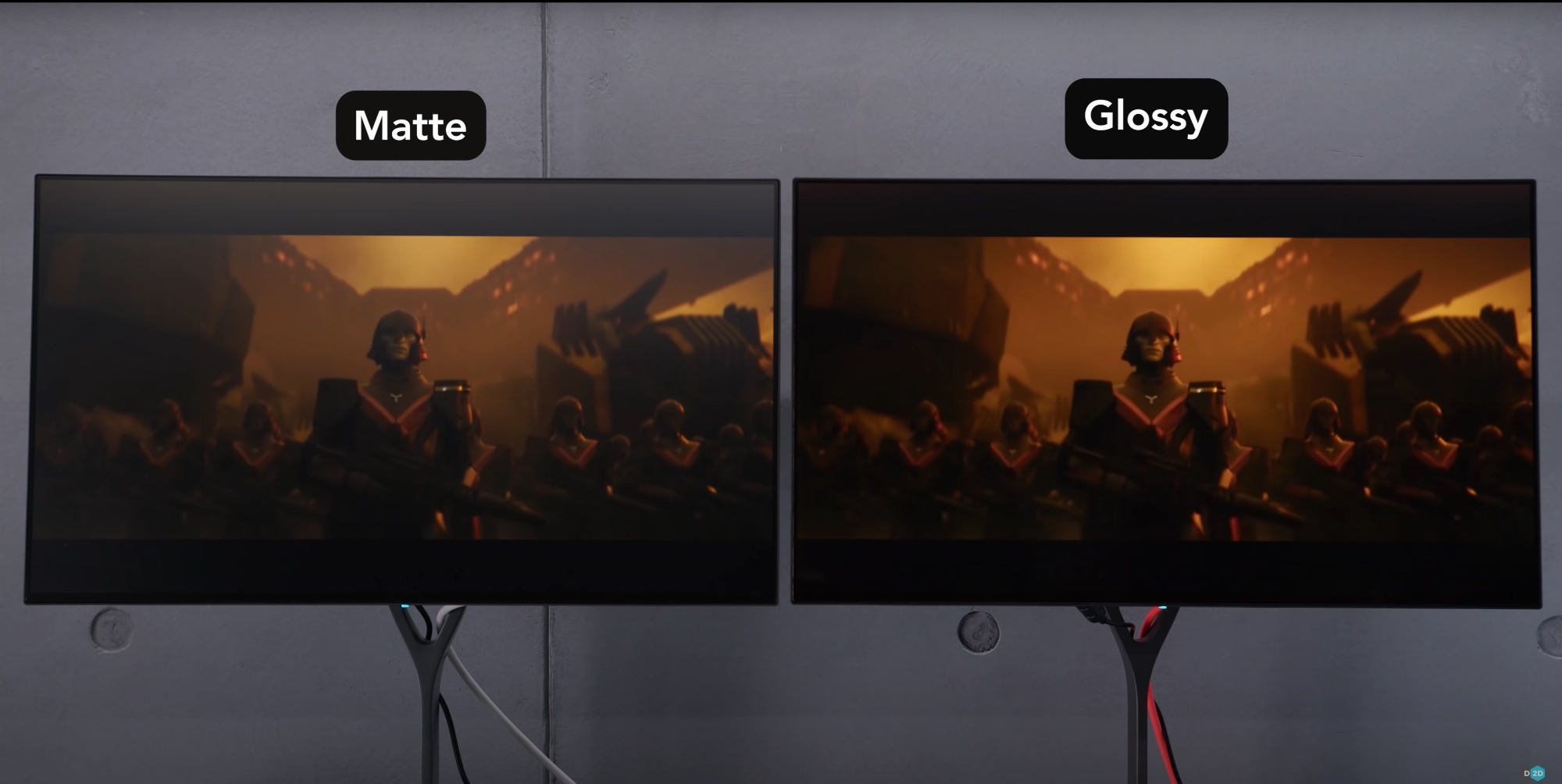

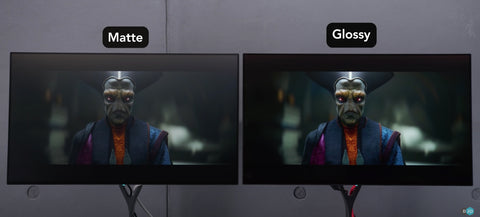

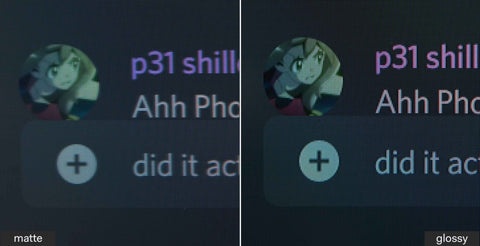

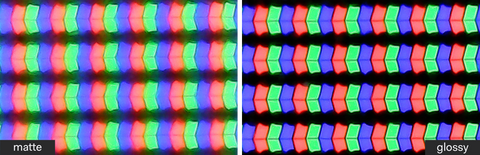

I know you guys will blow me up for this, but when I had the C2 and PG42UQ side by side, the difference between glossy and matte, if I had to quantify it, was something like a 5% improvement in clarity. In my opinion this glossy vs. matte thing gets really blown out of proportion (unless we're talking about the Neo G8's Vaseline coating). I'll take a 1080p/480Hz button, 200+ nits higher highlight brightness, and far fewer firmware issues over a glossy screen.

This guy comes to the same conclusion:

View: https://youtu.be/iNIN9ud9PZU?si=JSK6dHtKUTlAfRxf&t=439

I just want that guy's hair, never mind the monitor.
