Zarathustra[H]

Extremely [H]

- Joined: Oct 29, 2000
- Messages: 38,878

Hi Everyone,

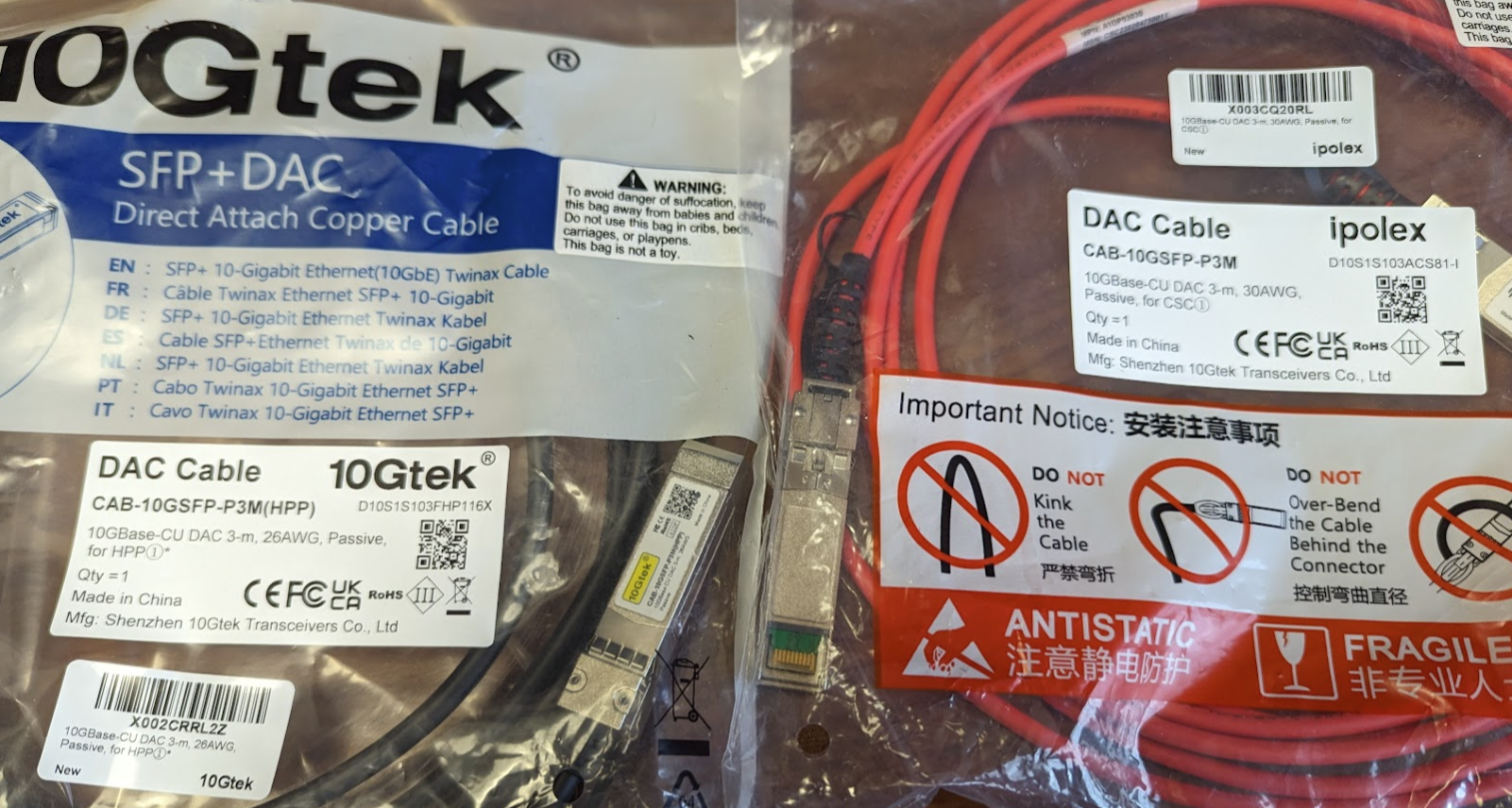

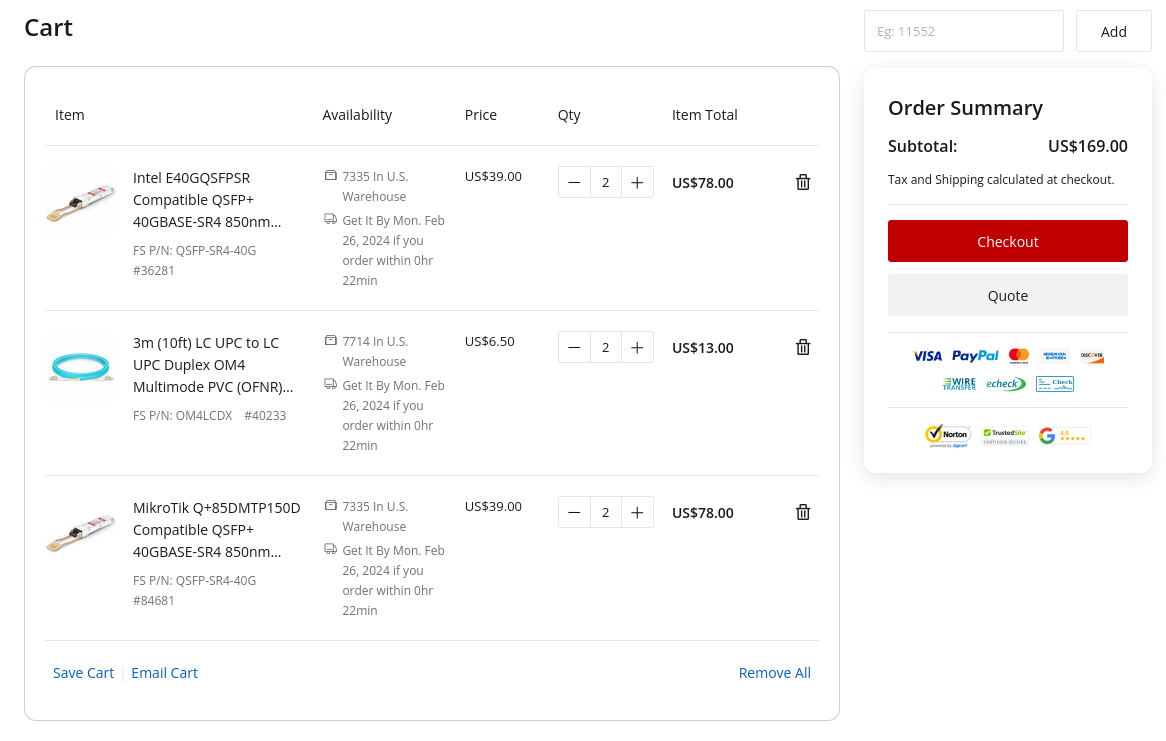

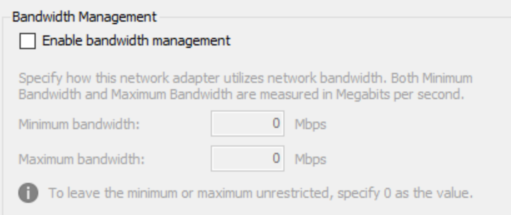

Just picked up a new CRS326-24S+2Q+ and I am struggling a little bit.

I'm using it as a Layer 2 switch with VLANs and no routing, so I opted to boot it into SwOS mode.

I am using 3 m Molex DAC cables to connect the Fortville (Intel XL710-QDA2) NICs in my servers to the switch. I went with Molex because their 10Gbit SFP+ cables have always worked in MikroTik switches for me, I needed cables quickly, and I could not find MikroTik-branded ones locally.

The cables establish a link immediately when connected directly, NIC to NIC, which suggests they are fine, but when I plug them into the switch there is no link light.

Last night, after repeatedly unplugging and re-plugging the cable and waiting, a link light eventually appeared. Once it did, the connection was solid and worked perfectly until the server rebooted, at which point there was, again, no link light and no connection.

I have now been trying for 20-30 minutes and have been unable to get another link light on the QSFP+ port. I have no idea what finally got it to link last night, and I cannot replicate it today.
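For anyone trying to reproduce this, here is a minimal sketch of how the link state could be logged from the server side while re-plugging, so the exact moment a link comes up (and at what speed) gets recorded. It assumes the servers run Linux, which I haven't stated above, and it reads the standard sysfs attributes `carrier` and `speed` under `/sys/class/net/<iface>/`. The function names and the interface name `enp1s0f0` are hypothetical, just for illustration.

```python
import time
from pathlib import Path


def read_link_state(iface, sysfs_root="/sys/class/net"):
    """Return (carrier_up, speed_mbps) for a Linux network interface.

    speed_mbps is None when the kernel cannot report a speed, which is
    the usual case while the link is down.
    """
    base = Path(sysfs_root) / iface
    try:
        carrier_up = (base / "carrier").read_text().strip() == "1"
    except OSError:
        # Reading 'carrier' fails if the interface is missing or
        # administratively down; treat that as "no link".
        carrier_up = False
    try:
        speed = int((base / "speed").read_text().strip())
    except (OSError, ValueError):
        speed = None
    if speed is not None and speed < 0:
        speed = None  # the kernel reports -1 when the speed is unknown
    return carrier_up, speed


def watch_link(iface, interval_s=1.0, max_polls=None, sysfs_root="/sys/class/net"):
    """Print a timestamped line whenever the link state changes.

    Polls forever unless max_polls is given. Returns the last state seen.
    """
    last = None
    polls = 0
    while max_polls is None or polls < max_polls:
        state = read_link_state(iface, sysfs_root)
        if state != last:
            print(time.strftime("%H:%M:%S"), iface,
                  "carrier:", state[0], "speed (Mb/s):", state[1])
            last = state
        polls += 1
        time.sleep(interval_s)
    return last


# Example (hypothetical interface name), stop with Ctrl+C:
# watch_link("enp1s0f0")
```

Leaving this running while re-seating the cable would at least show whether the NIC ever sees a carrier, even briefly, when the switch shows no link light.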

It's notable that the switch detects that the DAC cable is connected, and displays the information on the Link page as follows:

Code:

QSFP+2.1 Molex 1002971301 215021347 22-05-30 3m copper

...it just doesn't result in a link.

Does anyone have any suggestions here?

1.) Do I potentially have a bad switch?

2.) Was I just silly to try a Molex DAC cable instead of the MikroTik-branded one?

3.) I have read that in some cases disabling auto-negotiation helps with the 40Gbit ports on MikroTik hardware. Is this something I should try? If so, how do I do it? I clicked around the settings menu, but if I disable auto-negotiation I don't get a 40Gbit option, only 10M, 100M and 1G.

4.) Anything else I can try?

I appreciate any suggestions!
