> Otherwise known as the brute force approach. Throw the 4090 in that list as well.

The 4090 is significantly more power efficient than the 3090… it uses much less power per frame generated.


Intel Core i9-13900K Raptor Lake CPU Offers Same Performance As Core i9-12900K With “Unlimited Power” at Just 80W

- Thread starter kac77


> The 4090 is significantly more power efficient than the 3090… it uses much less power per frame generated.

I meant in terms of pushing power to the maximum in order to keep the performance crown, but you are correct, it is surprisingly more efficient than the 3090.

DooKey

[H]F Junkie

- Joined: Apr 25, 2001

- Messages: 13,560

> I meant in terms of pushing power to the maximum in order to keep the performance crown, but you are correct, it is surprisingly more efficient than the 3090.

Well, they did jump two nodes from the 3090. It's kind of expected.

cjcox

2[H]4U

- Joined: Jun 7, 2004

- Messages: 2,947

> The opposite happens next cycle, when Intel requires a new mobo but AM5 gets re-used.

Yep, back to normal.

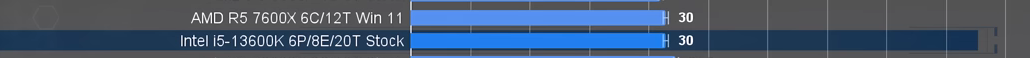

The 13600k destroys the 7600x in productivity and ties it in gaming. Just as R5s were 6 core and i5s were 4 cores, AMD should have priced and named the 8 core 7700x what the 6 core 7600x is now.

Seeing AMD so outmatched in productivity in the midrange is a bit wild.

It better since it's rocking 14 cores...lol.

> It better since it's rocking 14 cores...lol.

Well yes, more cores being better than fewer cores at a similar price point, or I am not sure what point is being made?

What matters is obviously not performance per core, but per dollar.

> Well yes, more cores being better than fewer cores at a similar price point, or I am not sure what point is being made?
>
> What matters is obviously not performance per core, but per dollar.

That per dollar difference doesn't factor in the thermal or power difference which would skew the savings. Just because someone takes a hot part and discounts it doesn't mean there's a savings when you factor in the total system cost.

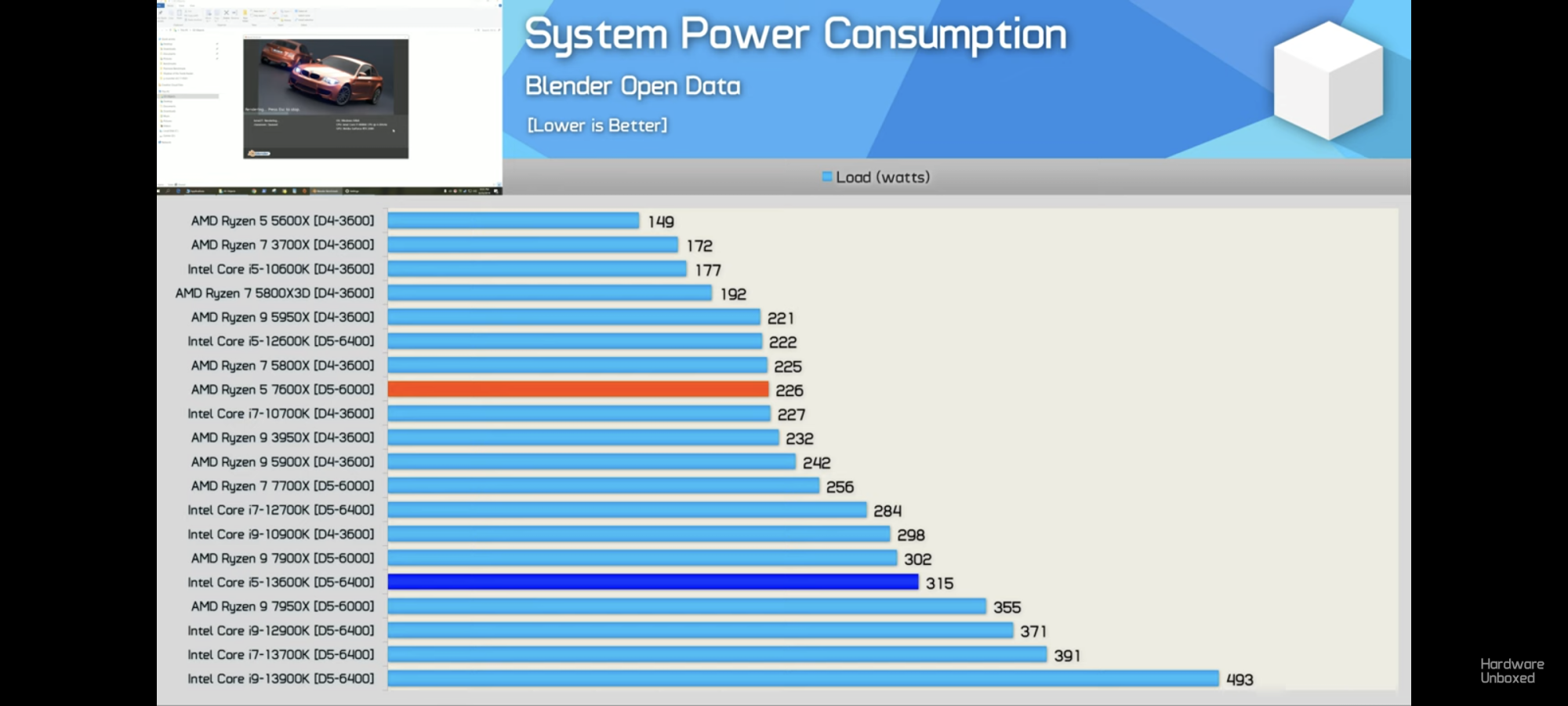

In this case they aren't even close there's a 100w difference at load. That's substantial.

Intel is pulling a fast one here. Loading up E-cores discounting the chips to win productivity benches even though power wise they are in another class.

> That per dollar difference doesn't factor in the thermal or power difference which would skew the savings. Just because someone takes a hot part and discounts it doesn't mean there's a savings when you factor in the total system cost.
>
> In this case they aren't even close there's a 100w difference at load. That's substantial.
>
> Intel is pulling a fast one here. Loading up E-cores discounting the chips to win productivity benches even though power wise they are in another class.

At least Intel is still in the game here, there is no denying that TSMC's 5nm process is way way more efficient than the "Intel 7" process, the fact Intel still manages to stay ahead in any metric here is somewhat a miracle of engineering.

I would not at all be surprised to walk into the Intel engineering department to find a poster of this man:

With some sort of shrine, because they are calling upon some elder god BS to stay in the running here.

Randall Stephens

[H]ard|Gawd

- Joined: Mar 3, 2017

- Messages: 1,820

> At least Intel is still in the game here, there is no denying that TSMC's 5nm process is way way more efficient than the "Intel 7" process, the fact Intel still manages to stay ahead in any metric here is somewhat a miracle of engineering.
>
> I would not at all be surprised to walk into the Intel engineering department to find a poster of this man:
>
> With some sort of shrine, because they are calling upon some elder god BS to stay in the running here.

I think it’s finally letting the engineers do engineering stuff. That’s why AMD is making huge strides.

Randall Stephens

[H]ard|Gawd

- Joined: Mar 3, 2017

- Messages: 1,820

> That per dollar difference doesn't factor in the thermal or power difference which would skew the savings. Just because someone takes a hot part and discounts it doesn't mean there's a savings when you factor in the total system cost.
>
> In this case they aren't even close there's a 100w difference at load. That's substantial.
>
> Intel is pulling a fast one here. Loading up E-cores discounting the chips to win productivity benches even though power wise they are in another class.

QFT. Back in the day the AMD parts were so power hungry even with the discount price you paid more in the end with power. Power is not getting cheaper. Some parts of the world are running out of it. Expensive cooling solutions are just that, expensive. More fans to cool the cpu is just added power and expense. We need to push companies to do more to reduce electricity consumption. A next gen chip with the same power required and better performance is good. A next gen chip with more performance with a lot more power draw, that’s bad.

TaintedSquirrel

[H]F Junkie

- Joined: Aug 5, 2013

- Messages: 12,691

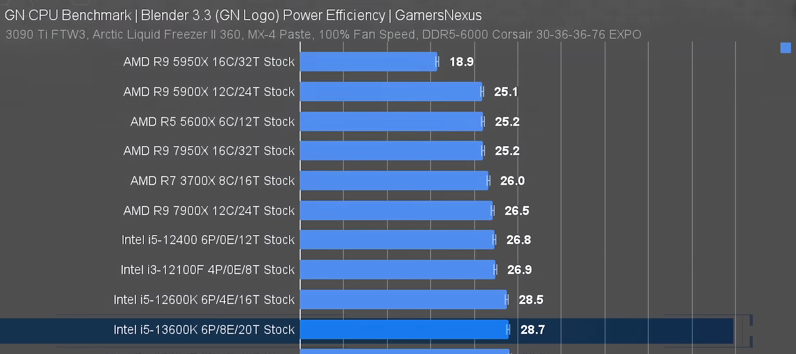

> Intel is pulling a fast one here. Loading up E-cores discounting the chips to win productivity benches even though power wise they are in another class.

The 13600K is about 30% faster than the 7600X in their Blender test, while consuming 40% more power. You could power limit the 13600K until it matched the 7600X's performance and it would probably consume less power.

> QFT. Back in the day the AMD parts were so power hungry even with the discount price you paid more in the end with power. Power is not getting cheaper. Some parts of the world are running out of it. Expensive cooling solutions are just that, expensive. More fans to cool the cpu is just added power and expense. We need to push companies to do more to reduce electricity consumption. A next gen chip with the same power required and better performance is good. A next gen chip with more performance with a lot more power draw, that’s bad.

But I wanna see that graph measured as a function of time: if something uses 50% more power to complete a job but completes it in half the time, then I have actually saved energy over the course of that job.
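To put rough numbers on the power-versus-time point, here is a minimal Python sketch. The 100 W for 1 hour baseline is a made-up reference, not a measurement, and `energy_wh` is just an illustrative helper; the 30% faster / 40% more power pair is the Blender figure mentioned in the post above.

```python
# Energy (Wh) = average power (W) x time (h).
# The baseline numbers are hypothetical, chosen only to make the ratios easy to read.

def energy_wh(power_watts: float, hours: float) -> float:
    """Energy used by a job that draws a constant power for a given time."""
    return power_watts * hours

baseline = energy_wh(100.0, 1.0)    # hypothetical chip A: 100 W for 1 hour
fast_chip = energy_wh(150.0, 0.5)   # 50% more power, half the time
saved_pct = (1 - fast_chip / baseline) * 100

# Blender case from the thread: ~30% faster at ~40% more power.
# Run time scales as 1/1.30, so relative energy per job is 1.40 / 1.30.
blender_energy_ratio = 1.40 / 1.30

print(f"baseline {baseline:.0f} Wh, fast chip {fast_chip:.0f} Wh, saved {saved_pct:.0f}%")
print(f"13600K energy per Blender job relative to 7600X: {blender_energy_ratio:.2f}x")
```

Under these assumptions the chip that uses 50% more power for half the time ends up 25% lower in energy per job, while the Blender figures work out to roughly 8% more energy per job for the 13600K at stock limits.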

Additionally, I am very interested in idle and low usage states, show me what these pull when asleep, locked, or during normal web browsing and light email/excel work.

I get dinged hard for power usage, I have an entire week or two dedicated to generating reports on electrical usage related to our computer infrastructure, and any improvements I can show there are received very favorably.

So far I am eagerly awaiting Dell and Lenovo to start hitting me up in the coming months with their new offerings and how replacing large portions of my existing fleet will actually save me money.

Jensen may be somewhat famous for "The more you buy, the more you save"; but my OEM suppliers have been hitting me up with variations of that phrase for a decade.

TaintedSquirrel

[H]F Junkie

- Joined: Aug 5, 2013

- Messages: 12,691

> But I wanna see that graph measured as a function of time: if something uses 50% more power to complete a job but completes it in half the time, then I have actually saved energy over the course of that job.

@ 7:05 in the GN review.

So they are basically in a dead heat with Intel edging out the 7600x, which actually really surprises me... I figured it would be a close but noticeable loss for Intel here

Now I really want to know what Intel has been feeding its "Intel 7" fabs, this isn't making sense to me

> That per dollar difference doesn't factor in the thermal or power difference which would skew the savings. Just because someone takes a hot part and discounts it doesn't mean there's a savings when you factor in the total system cost.

Yes, performance per watt could matter to some.

> Intel is pulling a fast one here. Loading up E-cores discounting the chips to win productivity benches even though power wise they are in another class.

That's one way to see it. I have a hard time finding 13600K numbers, but a 13900K limited to 45 W still seems to beat a 7600X. I could imagine that, even when limited to a similar wattage, the 13600K will be significantly ahead, as individual cores will be running nearer their ideal performance per watt (a bit like how and why the 5950X is so efficient, as it has extremely low wattage per thread; I think it even often beats M1-type chips in efficiency in some tasks).

A 13600K lets you go to crazy 270 W type numbers if you want (having the option seems a pure plus), but it should offer good performance at a reasonable power level if that is needed for cooling or other reasons.

> Yes, performance per watt could matter to some.
>
> That's one way to see it. I have a hard time finding 13600K numbers, but a 13900K limited to 45 W still seems to beat a 7600X. I could imagine that, even when limited to a similar wattage, the 13600K will be significantly ahead, as individual cores will be running nearer their ideal performance per watt (a bit like how and why the 5950X is so efficient, as it has extremely low wattage per thread; I think it even often beats M1-type chips in efficiency in some tasks).
>
> A 13600K lets you go to crazy 270 W type numbers if you want (having the option seems a pure plus), but it should offer good performance at a reasonable power level if that is needed for cooling or other reasons.

It's numbers like these that make me somewhat excited to see what Intel throws together for their Mobile lineup this time around. The AMD 6000 series when dealing with 45 W and below is awesome; it would be nice to see if Intel can compete there this gen.

> Yes, performance per watt could matter to some.
>
> That's one way to see it. I have a hard time finding 13600K numbers, but a 13900K limited to 45 W still seems to beat a 7600X. I could imagine that, even when limited to a similar wattage, the 13600K will be significantly ahead, as individual cores will be running nearer their ideal performance per watt (a bit like how and why the 5950X is so efficient, as it has extremely low wattage per thread; I think it even often beats M1-type chips in efficiency in some tasks).
>
> A 13600K lets you go to crazy 270 W type numbers if you want (having the option seems a pure plus), but it should offer good performance at a reasonable power level if that is needed for cooling or other reasons.

Based on that previous graph it's sucking down way more power than the other chips there. Someone spoke of a previous AMD chip and that's what came to mind for me as well. I had dual Opterons (after that I went to an Intel rig) and a GTX 780 (it was a KVM concept) a long time ago, and while sure, I could beat most things, the power use was through the roof to get there and the heat was crazy too. It could literally keep my room warm in winter with just that box alone in the room. People think "oh it's no big deal", yeah, until you have one of those halo rigs and realize just how much power and heat they generate.


> So they are basically in a dead heat with Intel edging out the 7600x, which actually really surprises me... I figured it would be a close but noticeable loss for Intel here
>
> Now I really want to know what Intel has been feeding its "Intel 7" fabs, this isn't making sense to me

Only if you ignore the 5950X, the 7900X, and the 7950X, all of which are more efficient. The 5950X in particular is crazy in its efficiency.


> Based on that previous graph it's sucking down way more power than the other chips there

Only if someone wants it to. If heat and/or power are an issue and you have a long multithreaded workload, you can limit it and still have most of the performance, and it idles really low.

Modern Intel CPUs are really nice in that regard and let you set separate limits for short-burst and sustained workloads.

The question for HardForum-type users is much more about performance at the wanted power than about Intel's presets; the idle/auto boost for what you need, limit settings, etc. have evolved quite a bit since back in the day.

If someone feels sustained workloads should be limited to 65 W and peaks to 130 W, they can usually do so directly in the BIOS. A 13600K with a 65 W PL1 will still be well ahead of a 7600X in multithreaded tasks; it is more than just a give-more-power-to-the-chip trick:

https://arstechnica.com/gadgets/202...-13600k-review-beating-amd-at-its-own-game/4/

Cinebench R23: 18,063 for the 65 W 13600K vs. 14,784 for the 7600X; 14.5k vs. 10.4k on the 3DMark Time Spy CPU score; 371 fps vs. 288 in the Tomb Raider CPU render scores (gaming will not move much if you keep the burst power limit high enough).


> I have a hard time finding 13600K numbers, but a 13900K limited to 45 W still seems to beat a 7600X,

Here we can see the 13600K locked at 115 W (very similar to what a 7600X will use) versus 157 W or 168 W.

10 minutes of Cinebench R23, score / power used:

| CPU | Score | Power used |
| --- | --- | --- |
| 7600X | 15,237 | 109 W |
| 7700X | 20,167 | 138 W |
| 5900X | 22,026 | 142 W |
| 13600K | 22,247 | 115 W |
| 13600K | 23,663 | 157 W |
| 13600K | 25,119 | 168 W |
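As a rough cross-check, the scores above can be turned into points per watt. This is only a sketch: the tuple layout and the `points_per_watt` helper are illustrative, and the figures are copied from the post as-is without any independent verification.

```python
# (label, Cinebench R23 score, power used in watts), as listed in the post above.
results = [
    ("7600X", 15237, 109),
    ("7700X", 20167, 138),
    ("5900X", 22026, 142),
    ("13600K @ 115 W", 22247, 115),
    ("13600K @ 157 W", 23663, 157),
    ("13600K @ 168 W", 25119, 168),
]

def points_per_watt(score: int, watts: int) -> float:
    """Benchmark points delivered per watt of power used."""
    return score / watts

# Sort from most to least efficient.
ranked = sorted(results, key=lambda r: points_per_watt(r[1], r[2]), reverse=True)

for label, score, watts in ranked:
    print(f"{label:<15} {points_per_watt(score, watts):6.1f} pts/W")
```

On these numbers the 115 W 13600K comes out on top at about 193 points per watt and the 7600X at the bottom at about 140, with the two higher 13600K limits landing close to the 5900X and 7700X.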


Those are pretty impressive results for the 13600K; might have to build a 13600K system just for funsies.

> Only if someone wants it to. If heat and/or power are an issue and you have a long multithreaded workload, you can limit it and still have most of the performance, and it idles really low.
>
> Modern Intel CPUs are really nice in that regard and let you set separate limits for short-burst and sustained workloads.
>
> The question for HardForum-type users is much more about performance at the wanted power than about Intel's presets; the idle/auto boost for what you need, limit settings, etc. have evolved quite a bit since back in the day.
>
> If someone feels sustained workloads should be limited to 65 W and peaks to 130 W, they can usually do so directly in the BIOS. A 13600K with a 65 W PL1 will still be well ahead of a 7600X in multithreaded tasks; it is more than just a give-more-power-to-the-chip trick:
>
> https://arstechnica.com/gadgets/202...-13600k-review-beating-amd-at-its-own-game/4/
>
> Cinebench R23: 18,063 for the 65 W 13600K vs. 14,784 for the 7600X; 14.5k vs. 10.4k on the 3DMark Time Spy CPU score; 371 fps vs. 288 in the Tomb Raider CPU render scores (gaming will not move much if you keep the burst power limit high enough).

True, but not other Ryzens in the stack. What AMD might need to do is drop the price on the bottom 2 SKUs which would pit the 13600 against more comparable competition.

riev90

Limp Gawd

- Joined: Oct 21, 2022

- Messages: 362

> True, but not other Ryzens in the stack. What AMD might need to do is drop the price on the bottom 2 SKUs which would pit the 13600 against more comparable competition.

And that should have been done earlier when they announced the pricing.

The rumored prices of $199 for the 7600X and $299 for the 7700X seemed too good to be true, yet I think those were fairer prices compared to the ADL chips back then.

Now with B650 (non-E) official price starting from $183 in my country, that's a lot compared to ADL / Raptor overall platform cost.

OnceSetThisCannotChange

Limp Gawd

- Joined: Sep 15, 2017

- Messages: 249

Exactly, AMD decided to go very high in terms of pricing, and well... while it still makes sense at high end, due to the platform, it certainly does not make sense to go AMD around $300 mark. Intel is sufficiently ahead there that you can go Intel, price in the cost of a new motherboard in the future, while still having a faster processor today.

My guess is that AMD will have to correct its pricing soon enough, as it needs lower MB, and lower CPU prices.

Intel finally transformed into a value champion, it was a long time coming.


kirbyrj

Fully [H]

- Joined: Feb 1, 2005

- Messages: 30,693

> QFT. Back in the day the AMD parts were so power hungry even with the discount price you paid more in the end with power. Power is not getting cheaper. Some parts of the world are running out of it. Expensive cooling solutions are just that, expensive. More fans to cool the cpu is just added power and expense. We need to push companies to do more to reduce electricity consumption. A next gen chip with the same power required and better performance is good. A next gen chip with more performance with a lot more power draw, that’s bad.

People say that, but the difference between the 7600x and 13600k is ~90W under load. If you make the assumption that you are running 24/7/365 (which rarely happens if ever), 90 W × 24 h = 2,160 Wh = 2.16 kWh per day; at $0.15 per kWh, which is the average cost of electricity in the US, that is a $0.32 a day difference, or $118.26 over a year in power costs if you're running full load 24/7/365.

Now the reality is if you're buying a 13600k or 7600x CPU you're not in the business of running your CPU full load 24/7/365. The average user isn't going to do that even 5% of the time. So being generous 5% of $118.26 is $5.91.

So the power difference/savings for a pseudo average user is negligible. Less so back when "power hungry AMD" CPUs roamed the land since power was cheaper.
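The arithmetic in that post can be reproduced with a short Python sketch. The `annual_cost_usd` helper and its parameter names are illustrative; the 90 W gap, the $0.15 per kWh rate and the 5% duty cycle are the poster's own assumptions, not measured values.

```python
def annual_cost_usd(extra_watts: float, hours_per_day: float,
                    price_per_kwh: float, days: int = 365) -> float:
    """Yearly cost of drawing `extra_watts` more power for `hours_per_day`."""
    kwh_per_day = extra_watts * hours_per_day / 1000  # W x h -> kWh
    return kwh_per_day * price_per_kwh * days

# Worst case from the post: 90 W extra, full load around the clock, $0.15/kWh.
full_load = annual_cost_usd(extra_watts=90, hours_per_day=24, price_per_kwh=0.15)

# The post's "generous" assumption: full load only 5% of the time.
five_percent = full_load * 0.05

print(f"24/7 full load: ${full_load:.2f} per year")
print(f"5% duty cycle:  ${five_percent:.2f} per year")
```

This gives about $118.26 per year for the round-the-clock case and about $5.91 for the 5% case, matching the figures in the post; plugging in a different local electricity price only needs a change to `price_per_kwh`.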

> It better since it's rocking 14 cores...lol.

So Intel wins another generation. Sometimes it feels like I'll never have another AMD rig again.

> People say that, but the difference between the 7600x and 13600k is ~90W under load. If you make the assumption that you are running 24/7/365 (which rarely happens if ever), 90 W × 24 h = 2,160 Wh = 2.16 kWh per day; at $0.15 per kWh, which is the average cost of electricity in the US, that is a $0.32 a day difference, or $118.26 over a year in power costs if you're running full load 24/7/365.
>
> Now the reality is if you're buying a 13600k or 7600x CPU you're not in the business of running your CPU full load 24/7/365. The average user isn't going to do that even 5% of the time. So being generous 5% of $118.26 is $5.91.
>
> So the power difference/savings for a pseudo average user is negligible. Less so back when "power hungry AMD" CPUs roamed the land since power was cheaper.

And given most PCs are idle for 12-16h a day...

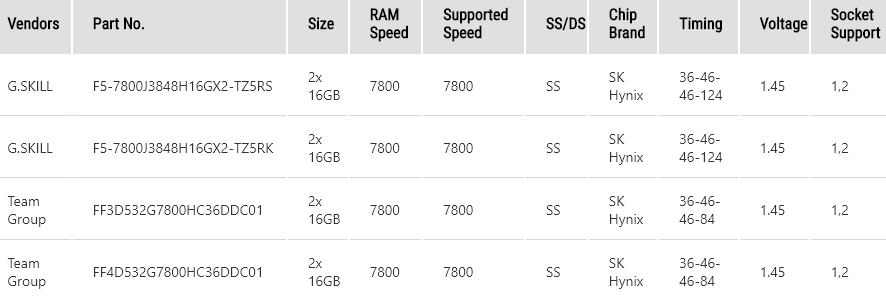

> All the reviews were done with slow DDR5 ram (and it still leads the pack) and not truly taking advantage of RPL's fast memory support. Nothing is gonna touch 13900k + one of these beasts.

Yep, but getting extreme speeds out of DDR5 modules is still a deep rabbit hole and time commitment right now to get 7200-8000+, having looked into it briefly (overclocknet forums).

TeamGroup has a $400 2x16GB DDR5-7200 CL34 kit but it's anything but "pop it in and set XMP and you're done" due to lots of variance in motherboards, silicon lottery on IMC, etc.

Furious_Styles

Supreme [H]ardness

- Joined: Jan 16, 2013

- Messages: 4,538

> Yep, but getting extreme speeds out of DDR5 modules is still a deep rabbit hole and time commitment right now to get 7200-8000+, having looked into it briefly (overclocknet forums).
>
> TeamGroup has a $400 2x16GB DDR5-7200 CL34 kit but it's anything but "pop it in and set XMP and you're done" due to lots of variance in motherboards, silicon lottery on IMC, etc.

Probably take a few bios revisions before they work properly with a simple XMP enable. And even then (just like really highly clocked DDR4) no guarantees.

> And given most PCs are idle for 12-16h a day...

And in that regard Intel tends to be a tiny bit lower at idle or under single-threaded work; a 13900K seems to idle below a 5600, for example, and to draw less than a 5900X at light load. Not by much, but it can be 1,200 hours of 10 fewer watts versus 200 hours of 70 more watts, ending up not that different at the end of the year.

One element that can easily add up over a lot of hours is GPU power, because GPUs tend to max out easily under something as common as playing a regular game, and they use 7950X or even 13900K levels of power (or 450 W for a 3090 Ti or 4090).
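The idle-versus-load trade-off in that post can be tallied with a small Python sketch. The `net_kwh` helper is illustrative; the hours and watt deltas are the poster's example figures, and the $0.15 per kWh rate is borrowed from the cost estimate earlier in the thread as an assumption.

```python
def net_kwh(segments: list[tuple[float, float]]) -> float:
    """Sum energy deltas over (hours, watt_delta) segments, in kWh.

    A negative watt_delta means the chip draws less than the one it is
    compared against during those hours.
    """
    return sum(hours * watts for hours, watts in segments) / 1000

idle_saving = net_kwh([(1200, -10)])            # 1,200 h at 10 W less
load_extra = net_kwh([(200, 70)])               # 200 h at 70 W more
net = net_kwh([(1200, -10), (200, 70)])         # both combined over the year

price_per_kwh = 0.15                            # assumed rate, USD per kWh
print(f"idle: {idle_saving:+.0f} kWh, load: {load_extra:+.0f} kWh, net: {net:+.0f} kWh")
print(f"net yearly cost difference: ${net * price_per_kwh:.2f}")
```

With these example figures the idle savings (12 kWh) almost cancel the extra load consumption (14 kWh), leaving a net difference of about 2 kWh per year.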

OKC Yeakey Trentadue

[H]ard|Gawd

- Joined: Nov 24, 2021

- Messages: 1,210

> QFT. Back in the day the AMD parts were so power hungry even with the discount price you paid more in the end with power. Power is not getting cheaper. Some parts of the world are running out of it. Expensive cooling solutions are just that, expensive. More fans to cool the cpu is just added power and expense. We need to push companies to do more to reduce electricity consumption. A next gen chip with the same power required and better performance is good. A next gen chip with more performance with a lot more power draw, that’s bad.

This generation will have that. This first wave of cpus is just for bragging rights in each class, power usage be damned. The 13700 will likely match the 12900k using far less power. The 13400 will do the same against the 12600k. If you are really concerned about power, you would have no problem waiting a few months for the power efficient models.

> Yep, but getting extreme speeds out of DDR5 modules is still a deep rabbit hole and time commitment right now to get 7200-8000+, having looked into it briefly (overclocknet forums).
>
> TeamGroup has a $400 2x16GB DDR5-7200 CL34 kit but it's anything but "pop it in and set XMP and you're done" due to lots of variance in motherboards, silicon lottery on IMC, etc.

And as always tight subtimings >>>>>>>>>>>>> xmp and high speed. Bandwidth from high MT/s is neat but doesn't help games much.
