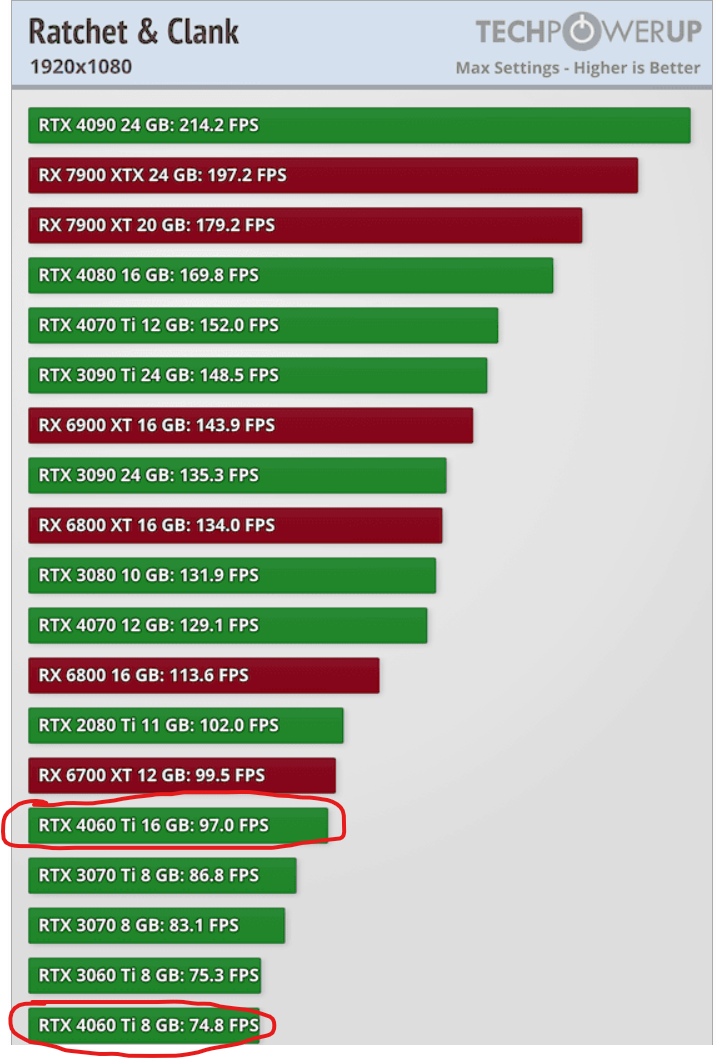

Hi all, I had a GTX 1060 6GB and it died last month. I am hoping to buy a new GPU in the coming weeks and I am a bit confused about which one to go for. Please help me choose: RTX 3060 12GB or RTX 4060 8GB?

Will the 8GB on the 4060 be an issue in the future? If I go with the 3060 12GB, will it be enough for the next 4 years? I play most games at the maximum settings possible at 1080p, so I'd like to choose a card that lets me keep playing like that for at least the next few years.

The price difference between the two cards is less than 20 USD in my country.

My specs

Intel Core i5-10400

32GB DDR4 RAM

FSP AURUM S 600W
